“Out Of Memory” Does Not Refer to Physical Memory

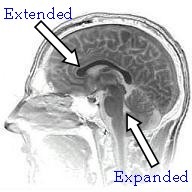

I started programming on x86 machines during a period of large and rapid change in the memory management strategies enabled by the Intel processors. The pain of having to know the difference between “extended memory” and “expanded memory” has faded with time, fortunately, along with my memory of the exact difference.

As a result of that early experience, I am occasionally surprised by the fact that many professional programmers seem to have ideas about memory management that haven’t been true since before the “80286 protected mode” days.

For example, I occasionally get the question “I got an ‘out of memory’ error but I checked and the machine has plenty of RAM, what’s up with that?”

Imagine, thinking that the amount of memory you have in your machine is relevant when you run out of it! How charming! :-)

The problem, I think, with most approaches to describing modern virtual memory management is that they start by assuming the DOS world – that “memory” equals RAM, aka “physical memory”, and that “virtual memory” is just a clever trick to make the physical memory seem bigger. Though historically that is how virtual memory evolved on Windows, and is a reasonable approach, that’s not how I personally conceptualize virtual memory management.

So, a quick sketch of my somewhat backwards conceptualization of virtual memory. But first a caveat. The modern Windows memory management system is far more complex and interesting than this brief sketch, which is intended to give the flavour of virtual memory management systems in general and some mental tools for thinking clearly about what the relationship between storage and addressing is. It is not by any means a tutorial on the real memory manager. (For more details on how it actually works, try this MSDN article.)

I’m going to start by assuming that you understand two concepts that need no additional explanation: the operating system manages processes, and the operating system manages files on disk.

Each process can have as much data storage as it wants. It asks the operating system to create for it a certain amount of data storage, and the operating system does so.

Now, already I am sure that myths and preconceptions are starting to crowd in. Surely the process cannot ask for “as much as it wants”. Surely the 32 bit process can only ask for 2 GB, tops. Or surely the 32 bit process can only ask for as much data storage as there is RAM. Neither of those assumptions is true. The amount of data storage reserved for a process is only limited by the amount of space that the operating system can get on the disk. (*)

This is the key point: the data storage that we call “process memory” is in my opinion best visualized as a massive file on disk.

So, suppose the 32 bit process requires huge amounts of storage, and it asks for storage many times. Perhaps it requires a total of 5 GB of storage. The operating system finds enough disk space for 5GB in files and tells the process that sure, the storage is available. How does the process then write to that storage? The process only has 32 bit pointers, but uniquely identifying every byte in 5GB worth of storage would require at least 33 bits.
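The “at least 33 bits” figure is simple arithmetic, sketched below in Python. The only assumption is that “GB” means 2^30 bytes; the function name is made up for illustration.

```python
def bits_to_address(num_bytes: int) -> int:
    """Smallest pointer width, in bits, that gives each of num_bytes bytes a unique address."""
    # Addresses run from 0 to num_bytes - 1, so the largest address decides the width.
    return max(1, (num_bytes - 1).bit_length())


GB = 2**30  # assumption: "GB" here means 2**30 bytes

print(bits_to_address(4 * GB))  # 32: exactly fills a 32 bit pointer
print(bits_to_address(5 * GB))  # 33: one bit more than a 32 bit pointer has
```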

Solving that problem is where things start to get a bit tricky.

The 5GB of storage is split up into chunks, typically 4KB each, called “pages”. The operating system gives the process a 4GB “virtual address space” – over a million pages - which can be addressed by a 32 bit pointer. The process then tells the operating system which pages from the 5GB of on-disk storage should be “mapped” into the 32 bit address space. (How? Here’s a page where Raymond Chen gives an example of how to allocate a 4GB chunk and map a portion of it.)
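Here is a rough sketch of the page arithmetic, assuming 4KB pages and a 32 bit address space as in the text. With 4096-byte pages, the low 12 bits of a pointer select a byte within a page, and the remaining 20 bits select one of 2^20 pages.

```python
PAGE_SIZE = 4096                           # 4KB pages, as in the text
ADDRESS_BITS = 32                          # a 32 bit virtual address space
OFFSET_BITS = PAGE_SIZE.bit_length() - 1   # 12 bits pick a byte within a page


def split_address(addr: int) -> tuple[int, int]:
    """Split a 32 bit virtual address into (virtual page number, offset within page)."""
    if not 0 <= addr < 2**ADDRESS_BITS:
        raise ValueError("not a 32 bit address")
    return addr >> OFFSET_BITS, addr & (PAGE_SIZE - 1)


pages_in_address_space = 2**ADDRESS_BITS // PAGE_SIZE
pages_in_5gb_of_storage = 5 * 2**30 // PAGE_SIZE

print(pages_in_address_space)     # 1048576, i.e. "over a million pages"
print(pages_in_5gb_of_storage)    # 1310720: more pages than the address space can hold
print(split_address(0x12340000))  # (74560, 0)
```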

Once the mapping is done, the operating system knows that when process #98 attempts to use pointer 0x12340000 in its address space, this corresponds to, say, the byte at the beginning of page #2477, and the operating system knows where that page is stored on disk. When that pointer is read from or written to, the operating system can figure out what byte of the disk storage is referred to, and do the appropriate read or write operation.
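The bookkeeping described above can be modelled, very loosely, as a per-process table from virtual page numbers to storage page numbers. This is a hypothetical toy in Python, not how the Windows memory manager stores its mappings (real page tables are hardware-defined structures). Process #98, pointer 0x12340000 and page #2477 are the numbers from the example in the text.

```python
PAGE_SIZE = 4096


class AccessViolation(Exception):
    """Raised when a pointer falls in a page that has no mapping."""


class ProcessMappings:
    """Hypothetical toy model of one process's mappings from virtual pages
    to pages of its on-disk storage."""

    def __init__(self) -> None:
        self.page_table: dict[int, int] = {}   # virtual page -> storage page

    def map(self, virtual_page: int, storage_page: int) -> None:
        self.page_table[virtual_page] = storage_page

    def unmap(self, virtual_page: int) -> None:
        del self.page_table[virtual_page]

    def translate(self, pointer: int) -> tuple[int, int]:
        """Return (storage page, offset within that page) for a pointer."""
        virtual_page, offset = divmod(pointer, PAGE_SIZE)
        if virtual_page not in self.page_table:
            raise AccessViolation(hex(pointer))
        return self.page_table[virtual_page], offset

    def storage_byte(self, pointer: int) -> int:
        """Index of the byte in the process's storage that the pointer refers to."""
        page, offset = self.translate(pointer)
        return page * PAGE_SIZE + offset


process_98 = ProcessMappings()
process_98.map(0x12340000 // PAGE_SIZE, 2477)   # the mapping from the example in the text
print(process_98.translate(0x12340000))         # (2477, 0)
print(process_98.storage_byte(0x12340010))      # 10145808
```

Note that using an unmapped pointer is an access violation in this model, not an “out of memory” condition. Running out of room only comes up when you try to create a new mapping.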

An “out of memory” error almost never happens because there’s not enough storage available; as we’ve seen, storage is disk space, and disks are huge these days. Rather, an “out of memory” error happens because the process is unable to find a large enough section of contiguous unused pages in its virtual address space to do the requested mapping.

Half (or, in some cases, a quarter) of the 4GB address space is reserved for the operating system to store its process-specific data. Of the remaining “user” half of the address space, significant amounts are taken up by the EXE and DLL files that make up the application’s code. Even if there is enough space in total, there might not be an unmapped “hole” in the address space large enough to meet the process’s needs.
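A toy simulation makes the “enough space in total, but no big enough hole” situation concrete. The sketch below assumes a tiny 16-page address space in which every fourth page is already occupied, standing in for scattered EXE and DLL images. It uses a simple first-fit search, which is an illustrative choice and not the real allocator's policy.

```python
def largest_hole(used: list[bool]) -> int:
    """Length of the longest run of unused pages in the address space."""
    best = run = 0
    for in_use in used:
        run = 0 if in_use else run + 1
        best = max(best, run)
    return best


def reserve_contiguous(used: list[bool], pages: int) -> int:
    """First-fit search for `pages` (>= 1) consecutive unused pages.

    Marks them used and returns the first page index. Raises MemoryError when
    no single hole is big enough, even if the total free space would suffice.
    """
    run_start = run = 0
    for i, in_use in enumerate(used):
        if in_use:
            run = 0
            continue
        if run == 0:
            run_start = i
        run += 1
        if run == pages:
            used[run_start:run_start + pages] = [True] * pages
            return run_start
    raise MemoryError(f"no hole of {pages} pages; largest is {largest_hole(used)}")


# A 16-page toy address space with every fourth page taken by "DLLs".
used = [i % 4 == 0 for i in range(16)]
print(used.count(False), largest_hole(used))  # 12 free pages, but the largest hole is 3
print(reserve_contiguous(used, 3))            # 1: a 3-page request fits
try:
    reserve_contiguous(used, 4)               # a 4-page request does not
except MemoryError as e:
    print("out of memory:", e)
```

Nine pages are still free after the 3-page reservation, yet the 4-page request fails. That is exactly the sort of “out of memory” the article describes.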

The process can deal with this situation by attempting to identify portions of the virtual address space that no longer need to be mapped, “unmap” them, and then map them to some other pages in the storage file. If the 32 bit process is designed to handle massive multi-GB data storages, obviously that’s what it’s got to do. Typically such programs are doing video processing or some such thing, and can safely and easily re-map big chunks of the address space to some other part of the “memory file”.
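The map / use / unmap / remap pattern can be sketched with Python's standard `mmap` module. In this sketch an ordinary file stands in for the “memory file”, which is an assumption made purely for illustration. The function maps one fixed-size window at a time, so only that window ever occupies address space, no matter how large the file is.

```python
import mmap
import os
import tempfile


def checksum_in_windows(path: str, window_size: int) -> int:
    """Sum every byte of a file while mapping at most one window of it at a time."""
    gran = mmap.ALLOCATIONGRANULARITY
    # View offsets must be multiples of the allocation granularity, so round
    # the window size up to one.
    window_size = max(gran, -(-window_size // gran) * gran)
    file_size = os.path.getsize(path)
    total = 0
    with open(path, "rb") as f:
        offset = 0
        while offset < file_size:
            length = min(window_size, file_size - offset)
            # Map this window into the address space...
            with mmap.mmap(f.fileno(), length, access=mmap.ACCESS_READ, offset=offset) as view:
                total += sum(view[:])   # slicing yields bytes; summing bytes yields ints
            # ...and leaving the with-block unmaps it before the next window is mapped.
            offset += length
    return total


# Usage: a 256,000-byte scratch file standing in for the "memory file".
with tempfile.TemporaryDirectory() as tmp:
    path = os.path.join(tmp, "storage.bin")
    data = bytes(range(256)) * 1000
    with open(path, "wb") as f:
        f.write(data)
    print(checksum_in_windows(path, 65536) == sum(data))  # True
```

The window size is a trade-off. Larger windows mean fewer map/unmap calls but a bigger contiguous chunk of address space that has to be found each time.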

But what if it isn’t? What if the process is a much more normal, well-behaved process that just wants a few hundred million bytes of storage? If such a process is just ticking along normally, and it then tries to allocate some massive string, the operating system will almost certainly be able to provide the disk space. But how will the process map the massive string’s pages into address space?

If by chance there isn’t enough contiguous address space then the process will be unable to obtain a pointer to that data, and it is effectively useless. In that case the process issues an “out of memory” error. Which is a misnomer, these days. It really should be an “unable to find enough contiguous address space” error; there’s plenty of memory because memory equals disk space.

I haven’t yet mentioned RAM. RAM can be seen as merely a performance optimization. Accessing data in RAM, where the information is stored in electric fields that propagate at close to the speed of light, is much faster than accessing data on disk, where information is stored in enormous, heavy ferrous metal molecules that move at close to the speed of my Miata. (**)

The operating system keeps track of which pages of storage from which processes are being accessed most frequently, and makes a copy of them in RAM to get the speed increase. When a process accesses a pointer corresponding to a page that is not currently cached in RAM, the processor raises a “page fault”; the operating system then goes out to the disk and makes a copy of the page from disk to RAM, making the reasonable assumption that it’s about to be accessed again some time soon.

The operating system is also very smart about sharing read-only resources. If two processes both load the same page of code from the same DLL, then the operating system can share the RAM cache between the two processes. Since the code is presumably not going to be changed by either process, it's perfectly sensible to save the duplicate page of RAM by sharing it.
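A minimal sketch of that sharing, assuming each RAM frame is identified by the (file, page) it caches. The file name and process ids below are made up, and the real mechanism in Windows is considerably more involved. The point is only that a second process loading the same read-only page gets the existing frame rather than a new copy.

```python
class PhysicalMemory:
    """Hypothetical toy model: each RAM frame is keyed by the (file, page) it
    caches, so a read-only page loaded by several processes occupies one frame."""

    def __init__(self) -> None:
        self.frames: dict[tuple[str, int], int] = {}   # (file, page) -> frame number
        self.users: dict[int, set[int]] = {}           # frame number -> process ids

    def load_readonly(self, pid: int, file: str, page: int) -> int:
        key = (file, page)
        if key not in self.frames:
            self.frames[key] = len(self.frames)        # first use: allocate a frame
        frame = self.frames[key]
        self.users.setdefault(frame, set()).add(pid)   # later uses just share it
        return frame


ram = PhysicalMemory()
a = ram.load_readonly(pid=98, file="shared.dll", page=0)
b = ram.load_readonly(pid=99, file="shared.dll", page=0)
print(a == b, len(ram.frames), sorted(ram.users[a]))  # True 1 [98, 99]
```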

But even with clever sharing, eventually this caching system is going to run out of RAM. When that happens, the operating system makes a guess about which pages are least likely to be accessed again soon, writes them out to disk if they’ve changed, and frees up that RAM to read in something that is more likely to be accessed again soon.
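The caching behaviour from the last few paragraphs can be sketched as an LRU cache of pages. The assumptions are loudly simplifying: there is a fixed number of RAM frames, the guess about what is “least likely to be accessed again soon” is exact least-recently-used, and a page is written back only if it has changed. The real memory manager approximates LRU and trims working sets rather than keeping one exact list.

```python
from collections import OrderedDict


class RamCache:
    """Toy model of RAM as an exact-LRU cache over pages that live on disk.

    Assumption for illustration: the real memory manager uses approximations
    of LRU and working-set trimming, not a single exact LRU list.
    """

    def __init__(self, frames: int) -> None:
        self.frames = frames
        self.resident = OrderedDict()   # page -> dirty flag, least recently used first
        self.page_faults = 0
        self.disk_writes = 0

    def access(self, page: int, write: bool = False) -> None:
        if page in self.resident:
            self.resident.move_to_end(page)      # now the most recently used
        else:
            self.page_faults += 1                # not cached: copy it in from disk
            if len(self.resident) == self.frames:
                _, dirty = self.resident.popitem(last=False)  # evict the LRU page
                if dirty:
                    self.disk_writes += 1        # only changed pages are written out
            self.resident[page] = False
        if write:
            self.resident[page] = True


cache = RamCache(frames=2)
for page, write in [(1, True), (2, False), (1, False), (3, False), (2, False)]:
    cache.access(page, write)
print(cache.page_faults, cache.disk_writes)  # 4 1
```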

When the operating system guesses incorrectly, or, more likely, when there simply is not enough RAM to store all the frequently-accessed pages in all the running processes, then the machine starts “thrashing”. The operating system spends all of its time writing and reading the expensive disk storage, the disk runs constantly, and you don’t get any work done.
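Thrashing is easy to reproduce in a toy model. The sketch below again assumes exact LRU replacement. A program loops over a working set of five pages. With five frames of RAM, only the first pass faults. With four frames, every single access faults, because LRU always evicts exactly the page that will be needed next.

```python
from collections import OrderedDict


def lru_fault_rate(accesses: list[int], frames: int) -> float:
    """Fraction of accesses that page-fault under exact LRU replacement."""
    resident = OrderedDict()
    faults = 0
    for page in accesses:
        if page in resident:
            resident.move_to_end(page)
        else:
            faults += 1
            if len(resident) == frames:
                resident.popitem(last=False)   # evict the least recently used page
            resident[page] = None
    return faults / len(accesses)


# A program that loops over a working set of 5 pages, 100 times.
loop = list(range(5)) * 100
print(lru_fault_rate(loop, frames=5))  # 0.01: only the first pass faults
print(lru_fault_rate(loop, frames=4))  # 1.0: every single access faults
```

Losing one frame takes this program from 1% faults to 100%, which is why thrashing tends to appear suddenly rather than degrading gracefully.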

This also means that "running out of RAM" seldom (***) results in an “out of memory” error. Instead of an error, it results in bad performance because the full cost of the fact that storage is actually on disk suddenly becomes relevant.

Another way of looking at this is that the total amount of virtual memory your program consumes is really not hugely relevant to its performance. What is relevant is not the total amount of virtual memory consumed, but rather, (1) how much of that memory is not shared with other processes, (2) how big the "working set" of commonly-used pages is, and (3) whether the working sets of all active processes are larger than available RAM.
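Points (2) and (3) can be made concrete with the classic working-set definition: the distinct pages a process touched in its most recent window of references. The sketch below is a simplification that assumes all traces run on the same clock and counts shared pages separately for each process, so it ignores point (1). The process names and traces are hypothetical.

```python
def working_set(trace: list[int], t: int, window: int) -> set[int]:
    """Distinct pages referenced in the `window` references ending at time t
    (Denning's working set W(t, window))."""
    return set(trace[max(0, t - window + 1):t + 1])


def working_sets_fit(traces: dict[str, list[int]], t: int, window: int, ram_pages: int) -> bool:
    """True if the combined working sets of all processes fit in RAM at time t.
    Simplification: pages shared between processes are counted once per process."""
    total = sum(len(working_set(trace, t, window)) for trace in traces.values())
    return total <= ram_pages


traces = {
    "big_but_idle": [7] * 20,                  # whatever its total size, it keeps touching one page
    "small_but_busy": [0, 1, 2, 3, 4, 5] * 4,  # cycles through six pages
}
print(working_sets_fit(traces, t=19, window=10, ram_pages=8))  # True  (1 + 6 pages)
print(working_sets_fit(traces, t=19, window=10, ram_pages=6))  # False
```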

By now it should be clear why “out of memory” errors usually have nothing to do with how much physical memory you have, or even how much storage is available. It’s almost always about the address space, which on 32 bit Windows is relatively small and easily fragmented.

And of course, many of these problems effectively go away on 64 bit Windows, where the address space is billions of times larger and therefore much harder to fragment. (The problem of thrashing of course still occurs if physical memory is smaller than total working set, no matter how big the address space gets.)

This way of conceptualizing virtual memory is completely backwards from how it is usually conceived. Usually it’s conceived as storage being a chunk of physical memory, and that the contents of physical memory are swapped out to disk when physical memory gets too full. But I much prefer to think of storage as being a chunk of disk storage, and physical memory being a smart caching mechanism that makes the disk look faster. Maybe I’m crazy, but that helps me understand it better.

*************

(*) OK, I lied. 32 bit Windows limits the total amount of process storage on disk to 16 TB, and 64 bit Windows limits it to 256 TB. But there is no reason why a single process could not allocate multiple GB of that if there’s enough disk space.

(**) Numerous electrical engineers pointed out to me that of course the individual electrons do not move fast at all; it's the field that moves so fast. I've updated the text; I hope you're all happy with it now.

(***) It is possible in some virtual memory systems to mark a page as “the performance of this page is so crucial that it must always remain in RAM”. If there are more such pages than there are pages of RAM available, then you could get an “out of memory” error from not having enough RAM. But this is a much rarer occurrence than running out of address space.

Comments

Anonymous

June 08, 2009

I'm sure this is a stupid question related to my not having a clear understanding of the differences between address space, virtual memory, etc., but then where does the 2GB memory allocation limit come into play?

There is no 2GB memory allocation limit. You can allocate as much memory as you want; you have 2GB of address space to map it into. If you want to have 5GB allocated, great, you do that, but you only can have up to 2GB of it mapped at any one time. -- Eric

For example, when many applications I use attempt to cross the ~2GB limit, they error or crash.

If your app author is unwilling to take on the pain of writing its own memory mapper/unmapper, then clearly it is stuck with not allocating more memory than it can map. -- Eric

There's also the Windows limit of 2GB for user-mode applications (the /3GB switch stuff). What you've said here makes sense, so should I assume that the only way to get access to more than 2GB of address space is to use alternative memory allocation techniques (LARGEADDRESSAWARE for example)?

Indeed. All the 3GB switch does is give you an extra GB of user address space by stealing it from the operating system's address space. That's the only way to get more address space in 32 bit windows. Again, you can allocate as much memory as you want, but address space is strictly limited to 2GB or 3GB in 32 bit windows. -- Eric

It seems like your article ends right before a giant "BUT this is how it usually ends up working in real world applications" caveat.

In most apps, you can only allocate as much memory as you have room to map. -- Eric

Anonymous

June 08, 2009

Great article, thanks! :) I would like to see a 'back-in-the-day' type article regarding Extended & Expanded memory, just for a history lesson - I never could get the hang of it back then...

Anonymous

June 08, 2009

Excellent article, and I think your view of memory as a large file on disk with the RAM being an optimization makes a lot more sense!

Anonymous

June 08, 2009

I agree that thinking of RAM as a performance optimization makes the whole concept more straightforward. The CPU cache(s), the physical memory, and the virtual memory swap file are really just different levels of a multi-level cache, each level being larger but slower than the level before.

Anonymous

June 08, 2009

We don't think about the system cache as being a device that swaps out to RAM when it gets too full, so it does make a lot of sense to think about memory as being a disk file and RAM just an optimization detail. Great article.

Anonymous

June 08, 2009

Physics nitpick: The electrons in a circuit only travel a few miles per hour; it is their displacement wave that travels near the speed of light.

Anonymous

June 08, 2009

The comment has been removed

Anonymous

June 08, 2009

And about .NET development... If I need to access many GBs of data... is the CLR intelligent enough to do it for me? Or must I write my own memory mapper to deal with the issue? And how?

On 64 bit CLR, you're all set. On 32 bit CLR, if you need to do custom mapping and unmapping of huge blocks of memory, then I personally would not want to handle that in managed code. There are ways to do it, of course, but it would not be pretty. -- Eric

Anonymous

June 08, 2009

This comment is by no means a tutorial on tutorials. I find that posts that begin with such wording are usually the best tutorials available. This blog post is a great example of that. Previously, while I knew about these differences, I had to think to keep them in mind. Now, Eric has transformed that thought process into a state of mind. Yeah, memory is a file; it's cached in the RAM for performance. It truly makes perfect sense.

Anonymous

June 08, 2009

The comment has been removed

Anonymous

June 08, 2009

A nitpick: considering RAM as a cache for the disk is not quite correct, since the manager can choose to never write a page in RAM to disk (although I believe it ensures there is enough storage in the page file just in case). I just think of the combination of RAM and disk as the "physical memory" (or just "backing" to reduce confusion with RAM), where the manager tries to keep the often used stuff in the fast part of the memory.

Anonymous

June 08, 2009

@Blake Coverett - Thanks a bunch for the intro =) You're probably right about there not being enough meat for a full-blown article - but still, very interesting information nonetheless.

Anonymous

June 08, 2009

Simon Buchan: but that's just an optimisation applied to many caches. If the cached range never needs to touch the slow medium during its lifetime, there's no point doing so. Just as when you "allocate" a variable for a calculation, it might get optimised away.

Anonymous

June 08, 2009

@Mark: Perhaps we have different definitions of "cache" then... I've always thought of it as being a copy of the authoritative value made for performance reasons. It's just the word though, this is getting super-nitpicky. (That is too a word, Safari!)

Anonymous

June 08, 2009

"There is no 2GB memory allocation limit. You can allocate as much memory as you want; you have 2GB of address space to map it into." What's the use? I can't have more than 2GB allocated AT THE SAME TIME.

The use case of memory is typically that you want to remember something. Perhaps you have four billion bytes of video that you are processing, and you want to keep the whole video in memory. You could map the bits you're currently editing into virtual memory while leaving the rest unmapped until you're done with what you've got. -- Eric

Out of memory can be raised because there's not enough virtual space free. The limit is the memory you can address, not the memory you can map. If I allocate three 1 GB byte arrays it will probably fail with an out of memory error because I simply do not have enough memory to address the virtual address of the last one.

Anonymous

June 08, 2009

I started programming in the old times of 16-bit MS-DOS, where you had only 640 KB of memory, and you

Anonymous

June 08, 2009

I agree with your conceptualisation. It's the way I've preferred to look at it ever since coding on VAX/VMS systems, from which NT inherited these concepts via David Cutler.

Anonymous

June 08, 2009

Actually, why is the hard disk size the limit of how much you could allocate? Why doesn't Windows go to the cloud for more space? And then the limit is the total amount of free space available on the internet ..... which is a lot. But of course if you go to the cloud with the allocation scheme, you need some URI scheme to reference your allocated space: pointer -> Uri translation. And then you could think your PC is just a cache for data allocated on the cloud/internet ;-). P.S. This was meant as a joke :), obviously

Anonymous

June 09, 2009

The comment has been removed

Anonymous

June 09, 2009

Bob, I remember RSX-11/M ODL files well (along with RT-11 means of dealing with the same memory management issues). In fact I still have a couple of working PDP-11s (primarily Q-BUS), and a working PDP-8. Now that was some real fun memory management. MAX 4KW of Code and 4KW of Data. And the mapping unit was also 4KW (which meant that when you changed mapping you immediately lost your current context <eek>). [People interested in the "classics" can contact me via: david dot corbin at dynconcepts dot com]

Anonymous

June 09, 2009

Interesting Finds: June 9, 2009

Anonymous

June 09, 2009

Mapping data files into your address space is another way of using your process space. It's more efficient than buffered access, which has to copy data into your process space buffers. Can you map files in .NET?

Anonymous

June 09, 2009

@halpierson There is a new namespace in .NET 4.0 that allows for memory mapped files: http://msdn.microsoft.com/en-us/library/system.io.memorymappedfiles(VS.100).aspx

Anonymous

June 09, 2009

Lovely post, thanks! Of course, electrons flowing through conductors in the form of electricity actually move at significantly slower speeds than even your Miata. The conductor just happens to always be full of electrons, making the signal propagate at that speed. Think of a hose full of water. Turn on the faucet and water comes out immediately. That doesn't mean the water is moving at the speed of light, just that the hose was full of water.

Anonymous

June 09, 2009

The actual flow rate of electrons can be VERY VERY slow.... Even the wave propagation is significantly slower in a non-vacuum environment. I remember well laying out circuit boards and measuring trace lengths to determine propagation delay (and the fact that a signal would arrive at two different points in the circuit at different times). IIRC: the normal number we used for copper PCBs was 1.5 ns per FOOT.....

Indeed, Grace Hopper used to hand out a foot of phone wire when she'd give talks. She'd use the foot of wire to illustrate the concept of a nanosecond. -- Eric

Anonymous

June 09, 2009

Welcome to the 49th edition of Community Convergence. The big excitement of late has been the recent release

Anonymous

June 09, 2009

"Accessing data in RAM, where the information is stored in tiny, lightweight electrons that move at close to the speed of light is much faster than accessing data on disk, where information is stored in enormous, heavy iron oxide molecules that move at close to the speed of my Miata." -- made my day :) Great article. I have to admit that I was one of those “I got an ‘out of memory’ error but I checked and the machine has plenty of RAM, what’s up with that?”. Shame on me. But now I know better.

Anonymous

June 09, 2009

I can't seem to fully understand your view of the memory management regarding the storage file as being the primary level of memory and RAM as an optimization. Let's assume a process is started and requires memory. What's the primary memory source? The storage file, and then the OS copies the memory to RAM?

Please carefully re-read the sixth paragraph. -- Eric

Let's assume I have 6GB of RAM and only 2GB of storage file (in contradiction to MS recommendations). What happens there?

Probably nothing pleasant. -- Eric

Anonymous

June 09, 2009

I started programming on x86 machines during a period of significant and rapid changes in the strategies

Anonymous

June 10, 2009

Simon Buchan said: A nitpick: considering RAM as a cache for the disk is not quite correct, since the manager can choose to never write a page in RAM to disk (although I believe it ensures there is enough storage in the page file just in case).

The file system's in-memory cache can make the same decision, especially if you tell CreateFile that a file is "temporary" and then you delete it quickly. It may never get written to the disk at all, but only ever exist in memory. And yet we still call it the "disk cache"! The reality (in both VM and disk caching) is that we have some stuff in memory, and some stuff on disk, and there's some overlap between the two, and that's about all we can say in general.

Anonymous

June 10, 2009

>>For example, when many applications I use attempt to cross the ~2GB limit, they error or crash.

>If your app author is unwilling to take on the pain of writing its own memory mapper/unmapper, then clearly it is stuck with not allocating more memory than it can map. -- Eric

Which is it? Your main article appeared to say 'It Just Works' but this response seems to contradict that earlier statement. It's always been my experience that if you're going to work with very very large amounts of data, you have to be able to process them without needing it all in memory at the same time. When you hit that stage, you're working with files on disk from the start. It's the same idea as iterating over an enumerator. You don't always want to assume you can Count() or ToList() it, because what happens if it's streaming data?

Anonymous

June 10, 2009

"Accessing data in RAM, where the information is stored in tiny, lightweight electrons that move at close to the speed of light is much faster than accessing data on disk, where information is stored in enormous, heavy iron oxide molecules that move at close to the speed of my Miata." Ha, this was great. But speaking as an old hard-disk guy, iron oxide is almost as outdated as expanded/extended memory. Your new shiny computer probably uses cobalt thin film media rather than rust on its disk platters. So maybe more like your Ferrari (come on, we know you have one)?

Anonymous

June 10, 2009

Well, in my case, I sometimes prefer to disable the paging file, thus removing

- the cost of unnecessary disk I/O

- the chance of allocating more memory than I have as RAM.

All in all, if I don't need too much memory on my laptop (I have 4GB of RAM and it's almost always enough), this method reduces the I/O and also helps to keep my always-moving laptop's hard drive physically safer (less I/O, less chance of bad sectors). In this case, there is no use for page faults, and it's a happy system as long as you don't need big chunks of memory. And trust me, it boosts the performance, since Windows DOES paging even if everything fits in RAM, but is unable to do so with this method.

Anonymous

June 11, 2009

Thank you for submitting this cool story - Trackback from DotNetShoutout

Anonymous

June 11, 2009

The comment has been removed

Anonymous

June 11, 2009

Cool. Reminded me of my operating systems classes in college. A brief explanation of the dining philosophers problem would have been excellent.

Anonymous

June 11, 2009

Started programming on 32-bit systems (x86). I think it makes a lot of sense, although I work mostly with managed code. It explains why an app running on a system with almost 4GB of RAM and 20GB of free disk space throws an out of memory exception when its RAM usage is barely 1.5GB (after running for long hours). And it is the only major app running on the system. What do you suggest to solve the problem? (Leaving mapping/unmapping memory aside, as it is managed code.)

My suggestion: use the CLR memory profiler to figure out why you are using so much memory. If you have a memory leak, fix it. If you don't, figure out what the largest consumer of memory is, and optimize it so that it doesn't consume so much memory. -- Eric

Anonymous

June 19, 2009

In all of this, I'm curious. What does this set of memory gurus recommend as a swap file setting? Is it Swap File = RAM or Swap File = RAM * 1.5 (or some other setting)? Or, do you really recommend letting Windows manage the swap file size? I'd heard (long ago) that letting Windows manage it just eats up system resources, and I've always opted to just set it to 1.5 times the RAM. Thanks!

Anonymous

June 19, 2009

The comment has been removed

Anonymous

June 24, 2009

Excellent article. One question. I have a website that relies heavily on caching to reduce database access. About once a day I start to receive OutOfMemory exceptions and the worker process recycles itself. Would you recommend upgrading RAM (currently 4GB) or the OS to 64 bit?

Better than either of those strategies would be to fix the actual bug, which is that the caching logic leaks memory. If the cache is growing without bound and eventually filling up all of memory, giving it more room to grow is just making the problem worse, not better. -- Eric

Anonymous

July 03, 2009

I'm sure this won't be a popular question: I have a number of boxes running XP, and have been for years. I used to be able to run dozens of applications at the same time. Now, after the past few service packs, I've noticed that GIVEN THE SAME INSTALLATIONS, AND THE SAME HARDWARE I cannot run nearly as many applications at the same time. A number of colleagues have noticed similar issues. It's almost as though something has been pushed out in a service pack to peg the number of concurrent apps, or limit memory in some way. Of course this might encourage some to "upgrade" to x64, but given the number of everyday apps that don't install / run on x64 that is not an option. Just to re-iterate - this is not the gradual slowing down of performance we have all come to expect; this has been a distinct change over the past few packs, on several machines. Any thoughts?

Anonymous

July 22, 2009

Reminded me of my operating systems classes in Uni

Anonymous

August 15, 2009

I do like this article quite a bit, and the concept of treating RAM as a disk cache is refreshing. Of course, you did neglect to acknowledge memory allocations that neither (a) mirror data on disk nor (b) are ever intended to reach the disk. I'm thinking mainly of the application stack, but also of temporary allocations used for decompressing data, and other such transitive operations. Perhaps it's best to ignore these exceptions for sanity's sake!

Anonymous

August 26, 2009

Loddie, the OS already uses the 1.5 x RAM rule for the minimum when there is less than 2GB fitted or 1 x RAM for more than 2GB. The maximum is 3 x RAM in both cases. At least, that's my recollection; I don't have my copy of Windows Internals 5th Edition to hand right now. There really is no right setting for the swap file.

In Task Manager, before Windows Vista, Commit Charge Peak shows the maximum amount of the swap file that has been used (actually physical + swap file) since the system was booted. Limit is the current maximum possible commit. ('Commit Charge' - you 'commit' virtual memory to make it usable, 'reserve' just stops anything else from using that address range, but you get access violation exceptions if you try to reference reserved, but not committed, memory. The process is 'charged' for memory it commits, so 'commit charge' is the total memory committed by all processes.) Windows Vista rather more sensibly shows the actual page file usage. Commit Charge Peak isn't shown.

Anonymous

November 10, 2009

While horribly late to the party (way beyond fashionable), I'd still like to ask a question. What do I do if this "out of memory" error happens in a managed app running under the CLR? I don't get direct access to any memory/pages. So while the problem is clear to me after reading this article, I am in complete darkness as to what I should do about it.

Rewrite the program to not use so much memory per process. Or, less good, tell the user to stop throwing problems at the program that require so much memory. -- Eric

Anonymous

December 15, 2009

Very nice article, explains the concept of a win32's process memory/address space in a very simple way. Thanks

Anonymous

January 12, 2010

Great topic. I want to know one thing. And about .NET development... If I need to access many GBs of data... the CLR is intelligent enough to do it for me? when there is less than 2GB fitted or 1 x RAM for more than 2GB. The maximum is 3 x RAM in both cases.

Anonymous

January 12, 2010

and one thing more A nitpick: considering RAM as a cache for the disk is not quite correct, since the manager can choose to never write a page in RAM to disk

Anonymous

July 15, 2010

This was great, it was simple enough to understand but elegant

Anonymous

August 01, 2010

Could anyone possibly provide an updated link to the MSDN article regarding the Memory Manager? The one in the article appears to be dead!

Anonymous

March 23, 2011

The link to the MSDN article didn't include the full URL. The correct link is: msdn.microsoft.com/.../system.io.memorymappedfiles.aspx

Anonymous

March 26, 2011

I guess that the "...amount of data storage reserved for a process is only limited by the amount of space that the operating system can get on the disk." depends on the size of pagefile.sys? Or is the data written normally to maybe the TEMP folder?

Anonymous

July 17, 2011

"Usually it’s conceived as storage being a chunk of physical memory, and that the contents of physical memory are swapped out to disk when physical memory gets too full." And yet this seems like the correct way of viewing it, when you look at a system without a swapfile*. Stuff gets put in RAM, and when there is no more, it dies without a swap file to put things in. There is no chunk of disk storage that's being sped up by being cached in RAM. The swap, if it existed, would serve as overflow for RAM.

*anything using Enhanced Write Filter, for example

Anonymous

October 28, 2011

Correct me if I get this wrong. So the key thing here seems to be the mapping of virtual memory to virtual address space. If you use default mapping (system pagefile), you will encounter those limits (2GB on 32 bit). If you manually do the mapping, you can use as much as the available space on the disk? Also, on a 64 bit system, there seems to be a size limit on the system pagefile (8TB). So that will be the limit of how much memory you can allocate?

Anonymous

January 30, 2012

I'm a little late to the game, but I wanted to thank you for providing a definitive article on the subject. Sadly, many developers believe they know all there is to know about this - like the guys I used to work with that insisted Windows was horribly, unusably slow unless you disable virtual memory. Yes, I know - everything about that statement is wrong.

Anonymous

March 23, 2012

Blake Coverett: I believe you meant AWE is the modern-day Expanded Memory, not PAE. I agree, it is as hackish as the original and only slightly better than nothing.

Anonymous

June 28, 2012

Hi, thanks for the great article. You mention that a 64 bit machine shouldn't have that problem, but my machine is 64 bit and I have 16GB of physical memory. I cache some DB tables into memory and P. Memory goes up to 8GB, only half, but I am getting that exception "system out of memory". Why is that happening? Do you have any idea? Thanks a lot.

Anonymous

November 17, 2012

I have an application which is showing a similar error... I want to access the data of that application. How do I do that?

Anonymous

November 25, 2012

The first link (to the explanation of the Windows Memory Manager) has been removed. If you still read comments this far back, do you happen to know where it went?