Seeding a DAG Database Copy Across Sites Fails Due to DAG Network Configuration

I want to clear up some confusion around Database Availability Group ("DAG") Network configuration that seems to be fairly widespread. When you create a new DAG with New-DatabaseAvailabilityGroup, Exchange will automatically create DAG networks based on the subnets that exist on the network cards of the DAG members. This works fine as long as you don't want to specify one network specifically for MAPI and one for replication, and as long as all your servers are in the same subnet. To read more about how to specify networks for replication, see this link: https://technet.microsoft.com/en-us/library/dd297927.aspx.

In this article I want to talk about what happens when you let Exchange auto-create the DAG networks and you have DAG members in different subnets. For example, suppose we have a two-node DAG stretched across two AD sites in different subnets. After we create the DAG and add the two member servers, the network configuration will look something like this:

As you can see, we have one DAG network for each subnet. Now let's assume you want to specify the 10.0.x.x networks for replication. You would use the Set-DatabaseAvailabilityGroupNetwork cmdlet with the -ReplicationEnabled:$false parameter to disable replication on the "DAGNetwork01" and "DAGNetwork02" networks, which are on the 192.168.x.x subnets.
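As a rough sketch of that step, assuming the DAG is named DAG1 (the name used in the lab commands later in this article) and that your auto-created network names match the ones mentioned above, the commands might look like this:

```powershell
# Assumption: the DAG is named DAG1, and DAGNetwork01/DAGNetwork02 are the
# auto-created networks on the 192.168.x.x subnets. Verify the names in your
# own environment with Get-DatabaseAvailabilityGroupNetwork before running.
Set-DatabaseAvailabilityGroupNetwork -Identity DAG1\DAGNetwork01 -ReplicationEnabled:$false
Set-DatabaseAvailabilityGroupNetwork -Identity DAG1\DAGNetwork02 -ReplicationEnabled:$false
```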

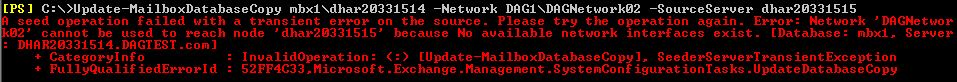

All done, right? We now have replication set to use the 10.0.x.x networks, and MAPI should be using 192.168.x.x. We confirm that all routing is set up properly, so that there is no "crosstalk" between the networks. (For example, the 10.0.2.0 network can't route traffic to the 192.168.1.0 network, only to 10.0.1.0.) However, now a new problem crops up. When we try to seed a Mailbox Database Copy specifying either replication network, it fails with an error:

Notice the error message - "No available network interfaces exist." What's happening here is that both nodes are trying to use network "DAGNetwork02" and that network only exists on one of the nodes. DAGNetwork02 refers to the 10.0.1.0/24 subnet, which only exists on one node. When the other node tries to use that network, it fails because it has no network interfaces on that subnet. This is why the cmdlet fails with the same error no matter which network you specify. As you might suspect, this means that seeding this way between DAG members in the same subnet will succeed, because they will each have a NIC on the specified subnet.
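One way to see this for yourself is to list each DAG network together with its subnets and the interfaces that belong to it. This is only a minimal sketch, assuming the DAG is named DAG1. In a configuration like the one described here, each auto-created network should show an interface from only one node:

```powershell
# Assumption: the DAG is named DAG1.
# Interfaces shows which node (and IP address) has a NIC in each DAG network.
Get-DatabaseAvailabilityGroupNetwork -Identity DAG1 |
    Format-List Name, Subnets, Interfaces, ReplicationEnabled
```

A network whose Interfaces list contains only one DAG member can't carry seeding traffic between two members.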

Now, the fix for this is really simple. We just need to "collapse" the networks so they are grouped by their purpose. We will create one new network for MAPI access and one for Replication use, specifying the networks for each purpose. Here are the cmdlets I ran in my test lab:

New-DatabaseAvailabilityGroupNetwork -DatabaseAvailabilityGroup DAG1 -Name MAPI -Subnets 192.168.1.0/24,192.168.2.0/24 -ReplicationEnabled:$false

New-DatabaseAvailabilityGroupNetwork -DatabaseAvailabilityGroup DAG1 -Name Replication -Subnets 10.0.1.0/24,10.0.2.0/24

Now if we run Get-DatabaseAvailabilityGroupNetwork for the DAG, we see the new networks have been added and the subnets have moved to them:

We still need to remove the auto-generated DAG networks, so we can do that with this cmdlet:

Get-DatabaseAvailabilityGroupNetwork DAG1\DAGNetwork* | Remove-DatabaseAvailabilityGroupNetwork
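If you would like to check what the wildcard matches before deleting anything, one option is to run the same pipeline with the standard -WhatIf switch first. This is a cautious variation on the command above, not something that is required:

```powershell
# -WhatIf only reports which DAG networks would be removed; nothing is deleted.
Get-DatabaseAvailabilityGroupNetwork DAG1\DAGNetwork* |
    Remove-DatabaseAvailabilityGroupNetwork -WhatIf
```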

After confirming each deletion, the output of Get-DatabaseAvailabilityGroupNetwork looks like this:

If we retry seeding, specifying the "DAG1\Replication" network now, it succeeds:
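For reference, a reseed over the new network might look something like the sketch below. The database name DB1 and the server name MBX2 are hypothetical placeholders, not names from my lab, so substitute your own:

```powershell
# Hypothetical names: DB1 is the database, MBX2 is the server hosting the copy.
# A database copy must be suspended before it can be reseeded.
Suspend-MailboxDatabaseCopy -Identity DB1\MBX2 -Confirm:$false

# Seed the copy over the collapsed replication network.
Update-MailboxDatabaseCopy -Identity DB1\MBX2 -Network DAG1\Replication
```

If database or log files from an earlier failed seed are still present on the target, you may need to clear them first (Update-MailboxDatabaseCopy has a -DeleteExistingFiles switch for that purpose).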

The purpose of this article is to make you aware that you must perform some manual configuration of your networks when you have a DAG with members in different subnets. This should be a fairly common scenario as more enterprises deploy Exchange 2010, but it is easy to miss or misunderstand. I hope this is helpful to you in your deployment.

Comments

Anonymous

January 01, 2003

Great blog Dale! I can just imagine the hits that this is generating as there isn't any formal documentation out there on this issue.

Anonymous

August 15, 2013

Awesome information!! Thanks for sharing !!