Hello @Abhishek Gaikwad,

Yes, you can access both ADLS Gen1 and Gen2 accounts from the same Databricks cluster.

Note: Once you mount a storage account, the mount point can be accessed from all the clusters provisioned in the Databricks workspace.
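
To check which mounts are visible from a given cluster, you can list them. This is a minimal sketch that assumes it runs in a Databricks notebook where `dbutils` is available; `list_mounts` is a hypothetical helper name, not part of Databricks.

```python
def list_mounts(dbutils):
    """Return a {mount_point: source} dict for every mount visible from this cluster."""
    return {m.mountPoint: m.source for m in dbutils.fs.mounts()}


# Hypothetical usage in a notebook cell:
# dbutils.fs.refreshMounts()  # pick up mounts created from another cluster
# for mount_point, source in list_mounts(dbutils).items():
#     print(mount_point, "->", source)
```

If a cluster was already running when the mount was created, calling `dbutils.fs.refreshMounts()` on it first should make the new mount visible there.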

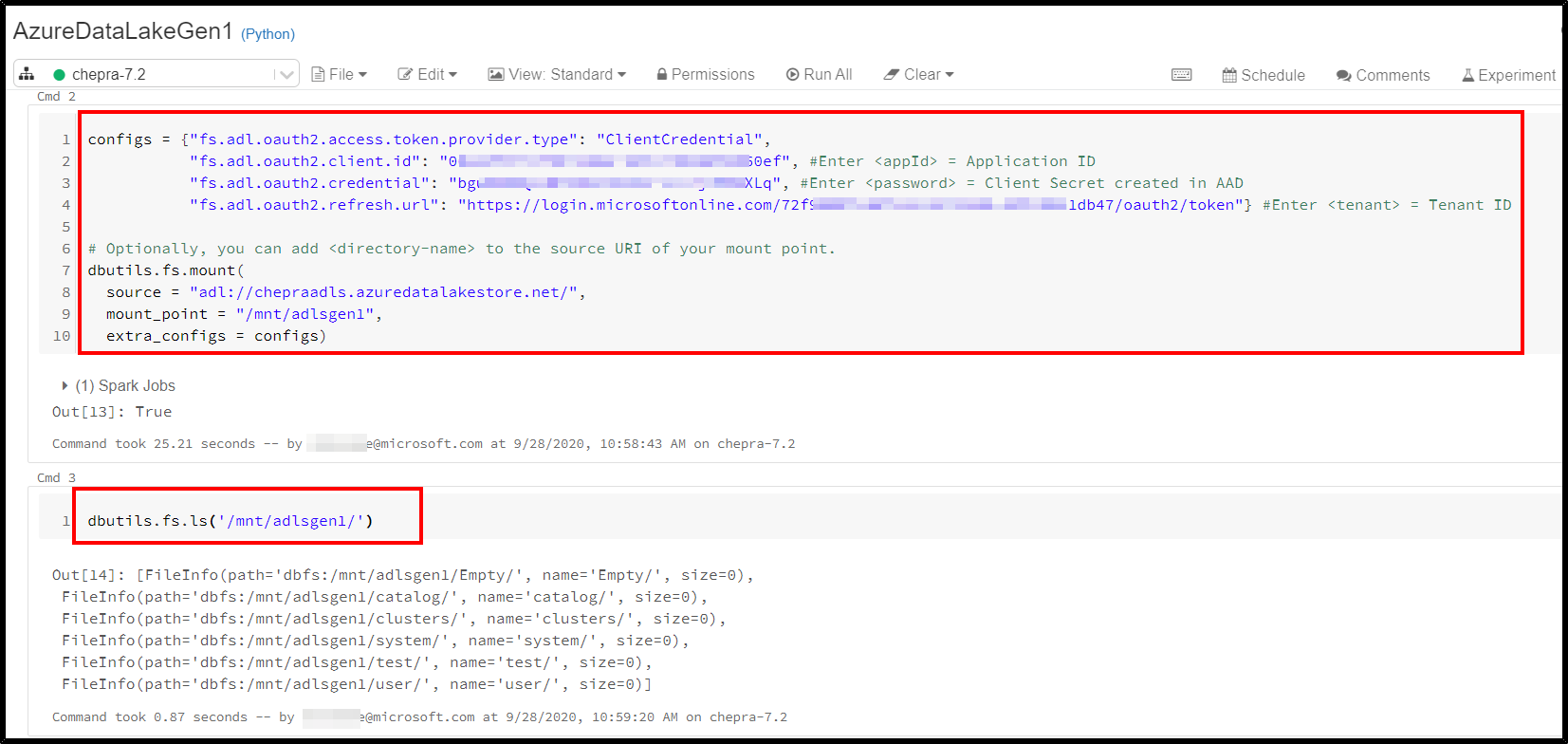

To mount an Azure Data Lake Storage Gen1 resource or a folder inside it, use the following command:

    configs = {
        "<prefix>.oauth2.access.token.provider.type": "ClientCredential",
        "<prefix>.oauth2.client.id": "<application-id>",
        "<prefix>.oauth2.credential": dbutils.secrets.get(scope="<scope-name>", key="<key-name-for-service-credential>"),
        "<prefix>.oauth2.refresh.url": "https://login.microsoftonline.com/<directory-id>/oauth2/token",
    }

    # Optionally, you can add <directory-name> to the source URI of your mount point.
    dbutils.fs.mount(
        source="adl://<storage-resource>.azuredatalakestore.net/<directory-name>",
        mount_point="/mnt/<mount-name>",
        extra_configs=configs,
    )
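
The `<prefix>` placeholder in the Gen1 keys has to be replaced before the command will work. To my understanding of the Databricks documentation, it is `fs.adl` on Databricks Runtime 6.0 and above and `dfs.adls` on Databricks Runtime 5.5 LTS, so please verify this against your runtime. The sketch below builds the same dictionary from a prefix; `gen1_mount_configs` and its parameter names are hypothetical.

```python
def gen1_mount_configs(prefix, application_id, directory_id, credential):
    """Build the ADLS Gen1 OAuth settings for dbutils.fs.mount(extra_configs=...).

    prefix is assumed to be "fs.adl" or "dfs.adls" depending on the runtime.
    """
    return {
        f"{prefix}.oauth2.access.token.provider.type": "ClientCredential",
        f"{prefix}.oauth2.client.id": application_id,
        f"{prefix}.oauth2.credential": credential,
        f"{prefix}.oauth2.refresh.url": (
            f"https://login.microsoftonline.com/{directory_id}/oauth2/token"
        ),
    }


# Hypothetical usage in a notebook cell:
# configs = gen1_mount_configs(
#     prefix="fs.adl",
#     application_id="<application-id>",
#     directory_id="<directory-id>",
#     credential=dbutils.secrets.get(scope="<scope-name>", key="<key-name-for-service-credential>"),
# )
```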

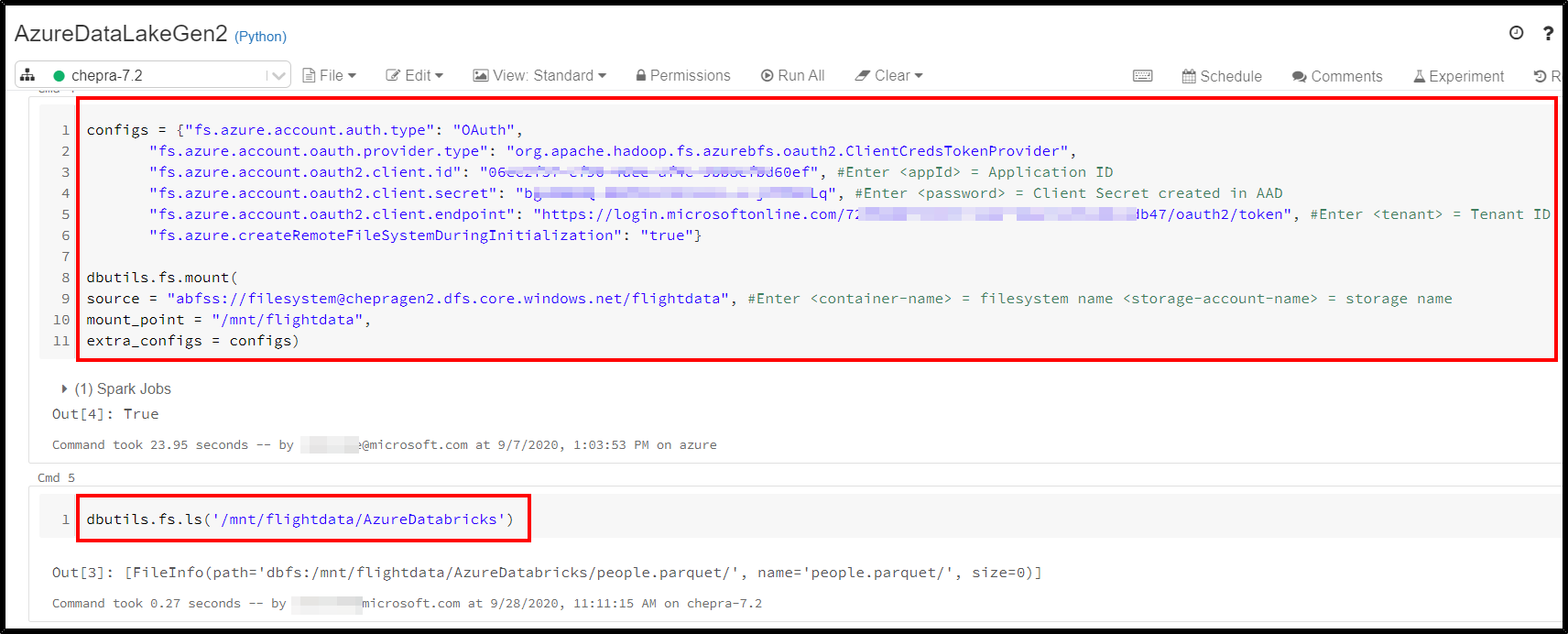

To mount an Azure Data Lake Storage Gen2 container or a folder inside a container, use the following command:

    configs = {
        "fs.azure.account.auth.type": "OAuth",
        "fs.azure.account.oauth.provider.type": "org.apache.hadoop.fs.azurebfs.oauth2.ClientCredsTokenProvider",
        "fs.azure.account.oauth2.client.id": "<application-id>",
        "fs.azure.account.oauth2.client.secret": dbutils.secrets.get(scope="<scope-name>", key="<service-credential-key-name>"),
        "fs.azure.account.oauth2.client.endpoint": "https://login.microsoftonline.com/<directory-id>/oauth2/token",
    }

    # Optionally, you can add <directory-name> to the source URI of your mount point.
    dbutils.fs.mount(
        source="abfss://<file-system-name>@<storage-account-name>.dfs.core.windows.net/",
        mount_point="/mnt/<mount-name>",
        extra_configs=configs,
    )
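
Since both accounts are mounted from the same workspace, each one needs its own mount point, and re-running a mount command for an existing mount point fails. A minimal sketch of a guard around `dbutils.fs.mount` is shown here; `mount_if_absent`, `gen1_configs`, and `gen2_configs` are hypothetical names, and the code assumes a Databricks notebook where `dbutils` exists.

```python
def mount_if_absent(dbutils, source, mount_point, extra_configs):
    """Mount `source` at `mount_point` unless that mount point already exists.

    Returns True if a new mount was created, False if it was already present.
    """
    existing = {m.mountPoint for m in dbutils.fs.mounts()}
    if mount_point in existing:
        return False
    dbutils.fs.mount(source=source, mount_point=mount_point, extra_configs=extra_configs)
    return True


# Hypothetical usage, with gen1_configs and gen2_configs holding the two
# `configs` dictionaries from the commands above:
# mount_if_absent(dbutils,
#                 "adl://<storage-resource>.azuredatalakestore.net/<directory-name>",
#                 "/mnt/<gen1-mount-name>", gen1_configs)
# mount_if_absent(dbutils,
#                 "abfss://<file-system-name>@<storage-account-name>.dfs.core.windows.net/",
#                 "/mnt/<gen2-mount-name>", gen2_configs)
```

After both calls, notebooks on the cluster can read from either account through its `/mnt/...` path.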

Hope this helps. Do let us know if you have any further queries.
