Hello,

What I want to do is detect reflective targets in the infrared frame and create a sphere at each target's real position in the world.

What I do for now, which kind of works, is the following. I get the infrared frame and detect the target positions. I get the depth frame and read the value of the pixel at the center of each target to get its depth in millimeters (the infrared and depth frames come from the same camera, so it is the same pixel). Finally, I change the coordinate system to get the positions in world coordinates.
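Reading a single pixel at the target center can be fragile: the detected center is sub-pixel, and the depth measured on a reflective target may be missing or unreliable at exactly that pixel. One option is to take the median of the valid depth values in a small window around the center. This is a minimal sketch with a hypothetical helper, not part of the Research Mode API. It assumes a row-major 16-bit depth buffer, that 0 marks an invalid pixel, and a caller-chosen `maxValid` cut-off; the actual invalid-value conventions of the long throw sensor should be checked in the Research Mode documentation.

```cpp
#include <algorithm>
#include <cassert>
#include <cmath>
#include <cstdint>
#include <vector>

// Hypothetical helper: median of the valid depth samples in a
// (2 * radius + 1) x (2 * radius + 1) window centered on the pixel nearest
// to the sub-pixel target center (x, y).
// Assumptions: row-major buffer, 0 = invalid, values above maxValid = invalid.
// Returns 0 when no valid sample is found in the window.
uint16_t SampleDepthMedian(const std::vector<uint16_t>& depth,
                           int width, int height,
                           float x, float y,
                           int radius, uint16_t maxValid)
{
    assert(static_cast<std::size_t>(width) * height == depth.size());

    // Nearest pixel to the detected center.
    const int cx = static_cast<int>(std::lround(x));
    const int cy = static_cast<int>(std::lround(y));

    std::vector<uint16_t> samples;
    for (int v = cy - radius; v <= cy + radius; ++v) {
        for (int u = cx - radius; u <= cx + radius; ++u) {
            if (u < 0 || v < 0 || u >= width || v >= height) {
                continue; // window partly outside the image
            }
            const uint16_t d = depth[static_cast<std::size_t>(v) * width + u];
            if (d != 0 && d <= maxValid) {
                samples.push_back(d);
            }
        }
    }
    if (samples.empty()) {
        return 0;
    }

    // Upper median for an even number of samples.
    auto mid = samples.begin() + samples.size() / 2;
    std::nth_element(samples.begin(), mid, samples.end());
    return *mid;
}
```

A call such as `SampleDepthMedian(buffer, width, height, x, y, 2, maxValid)` would replace the single-pixel read, with a return value of 0 treated as "no reliable depth for this target".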

To do all that, I use the Research Mode API as illustrated in the HoloLens2ForCV samples, https://github.com/microsoft/HoloLens2ForCV (mostly the SensorVisualization sample).

But the positions of the created spheres are not accurate: some are close to the real targets, but others are in the wrong place. So I tried to display each step to see where things go wrong: the targets in the depth frame, the targets in the left front VLC camera (which is the origin of the device according to the Research Mode API documentation), and then the targets in the RGB main camera.
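The chain described above (depth camera to device/rig frame to left front VLC camera) can be sketched with plain 4x4 math. This is a minimal, self-contained sketch, not the Research Mode API: the `Mat4`/`Vec3` types and the function names are hypothetical. It assumes the DirectXMath row-vector convention (point times matrix, translation in the last row), and it assumes, as the posted code does when it inverts it, that `GetCameraExtrinsicsMatrix` maps rig coordinates into the sensor's camera frame. The posted code stops at the rig frame and projects with the left front camera directly; applying that camera's own extrinsics explicitly removes the assumption that the two frames coincide exactly.

```cpp
#include <array>
#include <cassert>

// Row-vector 4x4 matrix mirroring the DirectXMath convention:
// p' = p * M, rotation in the upper-left 3x3, translation in row 3.
using Mat4 = std::array<std::array<float, 4>, 4>;

struct Vec3 {
    float x, y, z;
};

// Applies an affine transform (last column assumed to be 0, 0, 0, 1).
Vec3 TransformPoint(const Vec3& p, const Mat4& m)
{
    return {
        p.x * m[0][0] + p.y * m[1][0] + p.z * m[2][0] + m[3][0],
        p.x * m[0][1] + p.y * m[1][1] + p.z * m[2][1] + m[3][1],
        p.x * m[0][2] + p.y * m[1][2] + p.z * m[2][2] + m[3][2],
    };
}

// Inverse of a rigid transform: rotation R^T, translation -t * R^T.
Mat4 InvertRigid(const Mat4& m)
{
    Mat4 inv{};
    for (int r = 0; r < 3; ++r) {
        for (int c = 0; c < 3; ++c) {
            inv[r][c] = m[c][r]; // transpose of the rotation block
        }
    }
    for (int c = 0; c < 3; ++c) {
        inv[3][c] = -(m[3][0] * inv[0][c] + m[3][1] * inv[1][c] + m[3][2] * inv[2][c]);
    }
    inv[3][3] = 1.0f;
    return inv;
}

// Depth camera frame -> rig frame -> left front camera frame.
// Assumption: each extrinsics matrix maps rig coordinates into that camera.
Vec3 DepthCameraToLeftFront(const Vec3& pDepth,
                            const Mat4& depthExtrinsics,
                            const Mat4& lfExtrinsics)
{
    const Vec3 pRig = TransformPoint(pDepth, InvertRigid(depthExtrinsics));
    return TransformPoint(pRig, lfExtrinsics);
}
```

The same pattern extends to world coordinates by applying a rig-to-world matrix to `pRig`. For rigid extrinsics, `InvertRigid` gives the same result as a general inverse such as `XMMatrixInverse`, just without the general-purpose computation.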

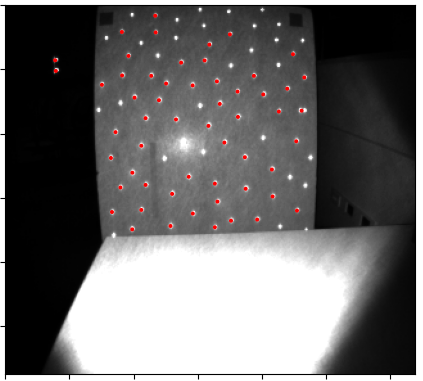

Here is the infrared camera and in red, targets detected :

As you can see, the image is distorted.

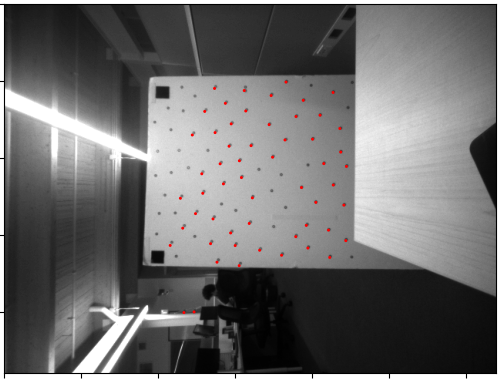

And now the same targets but in left front VLC after changing their coordinate system :

We can see that there is already a shift in some targets. I suspect this is because of the distortion in the depth frame.

Here is the code I use to do that:

```cpp
// Uses DirectXMath and winrt::Windows::Foundation::Numerics (float3, float4x4, transform).

// x and y are the pixel coordinates of the target in the infrared frame
float xy[2] = { x, y };
float uv[2] = { 0 };

// m_pCameraSensor is the depth long throw sensor
winrt::check_hresult(m_pCameraSensor->MapImagePointToCameraUnitPlane(xy, uv));

float3 pixelCoordInDepth = { uv[0], uv[1], 1.0f };
pixelCoordInDepth *= depth; // depth is the value of the depth frame pixel at (x, y)
m_tmpDepthPos3D.push_back(pixelCoordInDepth);

// Get the position of the point in the device frame (== left front VLC camera)
DirectX::XMFLOAT4X4 DXExtrinsicMatrixTmp;
winrt::check_hresult(m_pCameraSensor->GetCameraExtrinsicsMatrix(&DXExtrinsicMatrixTmp));

DirectX::XMMATRIX DXExtrinsicMatrix = DirectX::XMLoadFloat4x4(&DXExtrinsicMatrixTmp);
DirectX::XMMATRIX DXInvertExtrinsicMatrix = DirectX::XMMatrixInverse(nullptr, DXExtrinsicMatrix);

float4x4 invertExtrinsicMatrix;
DirectX::XMStoreFloat4x4(&invertExtrinsicMatrix, DXInvertExtrinsicMatrix);

auto pixelCoordInDevice = transform(pixelCoordInDepth, invertExtrinsicMatrix);

// Normalize onto the z = 1 plane, treating the device frame as the left front camera frame
pixelCoordInDevice /= pixelCoordInDevice.z;
uv[0] = pixelCoordInDevice.x;
uv[1] = pixelCoordInDevice.y;

float ab[2] = { 0 };
// m_pLFCameraSensor is the left front VLC camera sensor
winrt::check_hresult(m_pLFCameraSensor->MapCameraSpaceToImagePoint(uv, ab));

// ab is the pixel position of the target in the left front VLC camera
```
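The code above scales the unit-plane vector (u, v, 1) directly by the raw depth value. Two details may be worth checking at that step, since both change the 3D position that ends up in world space. First, units: the depth is in millimeters, so it needs converting if the rest of the pipeline works in meters. Second, whether the value is the Z coordinate or a distance along the ray: the StreamRecorder sample in the same repository appears to normalize (u, v, 1) in its per-pixel lookup table before multiplying by depth, which would suggest a distance along the ray. If so, treating it as Z would push off-center targets too far away, with an error that grows towards the image edges. This is a minimal sketch with a hypothetical `BackProject` helper that supports both interpretations, so they can be compared against a target at a known distance.

```cpp
#include <cassert>
#include <cmath>

struct Point3 {
    float x, y, z;
};

// Hypothetical helper: back-projects one depth sample into the depth camera
// frame, in meters.
// (u, v): unit-plane coordinates from MapImagePointToCameraUnitPlane,
//         so the viewing ray direction is (u, v, 1).
// depthMm: raw depth value, assumed to be in millimeters.
// depthIsRadial: true  -> value is the distance along the ray (ray is normalized),
//                false -> value is the Z coordinate (ray is scaled as-is).
Point3 BackProject(float u, float v, float depthMm, bool depthIsRadial)
{
    const float meters = depthMm / 1000.0f;
    float scale = meters;
    if (depthIsRadial) {
        // Divide by the length of (u, v, 1) so the point lies `meters` from the camera center.
        scale = meters / std::sqrt(u * u + v * v + 1.0f);
    }
    return { u * scale, v * scale, scale };
}
```

At the image center (u = v = 0) both interpretations agree; they diverge more and more as |(u, v)| grows, which is why a target near the edge of the frame is the most useful one for telling them apart.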

I get the frames the same way as in the HoloLens2ForCV repository. Maybe the problem is elsewhere, but I suspect it is caused by the distortion.

According to the "Distortion error" section of this page https://video2.skills-academy.com/en-us/windows/mixed-reality/develop/advanced-concepts/locatable-camera-overview, there is a difference between the preview stream, which contains the original distorted frames, and the "normal" video and still image streams, which are undistorted by the system before reaching the application. I believe Research Mode gives us frames like the preview stream, but I would like to get undistorted frames like the normal streams. Is that possible?

Thanks in advance. I wanted to ask this on the GitHub repository, but it seems quite inactive.