@jigsm You can try the approach below; hopefully it helps:

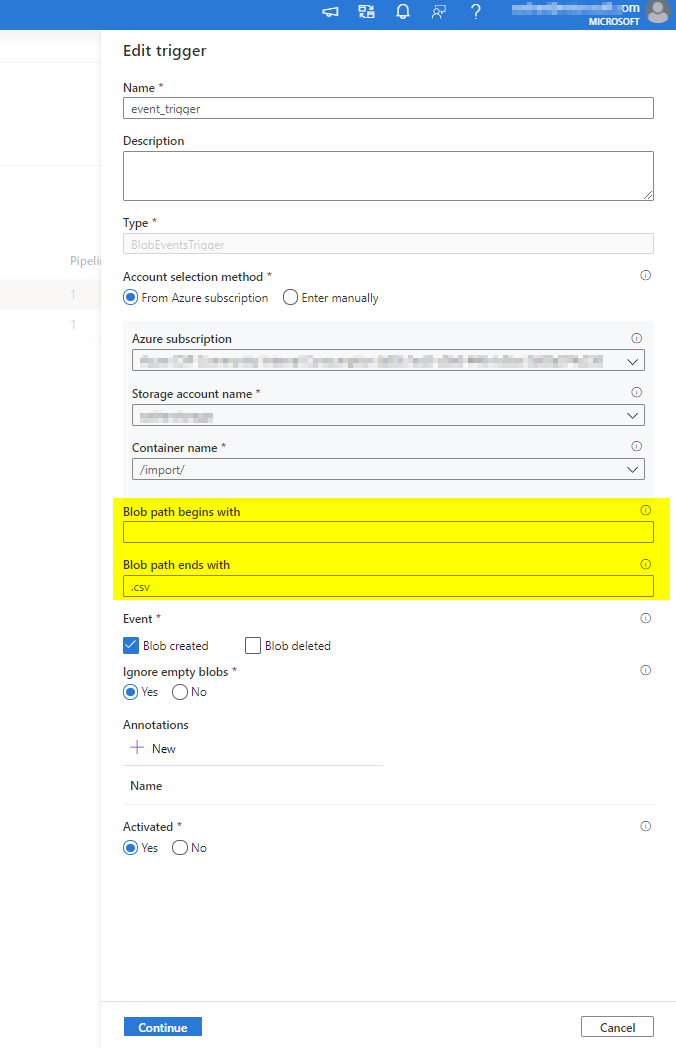

While creating the event trigger, leave the "Blob path begins with" property blank and set "Blob path ends with" to ".csv". The trigger then considers every incoming .csv blob in the container, whichever underlying folder (tenant1, tenant2, and so on) it lands in.
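To make the effect of these two filters concrete, here is a minimal Python sketch that approximates them locally. It assumes that an empty filter matches every blob and that both filters behave as plain prefix/suffix comparisons on a path relative to the container; the function name and the sample blob paths are hypothetical, and this is not how Data Factory evaluates the filters internally.

```python
def trigger_would_fire(blob_path: str, begins_with: str = "", ends_with: str = "") -> bool:
    """Approximate the trigger's "begins with" / "ends with" path filters.

    Assumption: an empty filter matches everything, and both filters are
    simple prefix/suffix checks on the blob path.
    """
    if begins_with and not blob_path.startswith(begins_with):
        return False
    if ends_with and not blob_path.endswith(ends_with):
        return False
    return True


# Hypothetical blob paths inside one container.
blobs = [
    "tenant1/orders.csv",
    "tenant2/2024/invoices.csv",
    "tenant1/readme.txt",
    "stray.csv",
]

for path in blobs:
    # "Begins with" left blank, "ends with" set to ".csv".
    print(path, trigger_would_fire(path, begins_with="", ends_with=".csv"))
```

Every .csv path matches, regardless of folder depth, including `stray.csv` at the container root; `readme.txt` is filtered out by the suffix.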

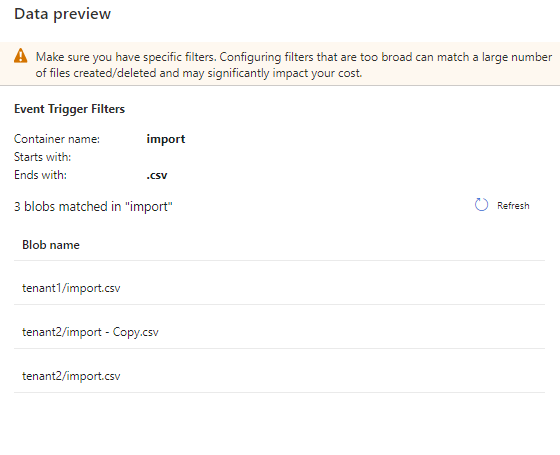

When you click "Continue", the preview shows the blobs that were detected under each of these folders.

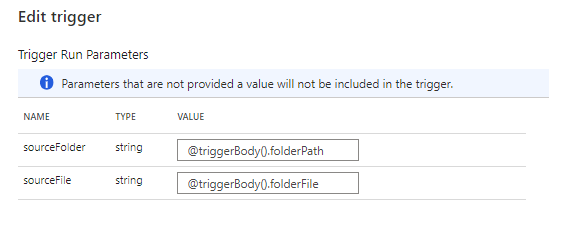

The event trigger captures the folder path and file name of the blob in the properties @triggerBody().folderPath and @triggerBody().fileName. To use these values in your pipeline, map the properties to pipeline parameters in the trigger. You can then read the captured values in your pipeline through @pipeline().parameters.parameterName.
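The following Python sketch only simulates which values the mapped parameters would receive; it does not call Data Factory. The parameter names `sourceFolder` and `sourceFile` are hypothetical, and it assumes that `folderPath` is everything before the last `/` of the blob path (including the container name) while `fileName` is the last segment. Inside the pipeline, such parameters would be read as @pipeline().parameters.sourceFolder and @pipeline().parameters.sourceFile.

```python
import posixpath

# Hypothetical pipeline parameter names mapped to the trigger properties,
# mirroring what you would enter when attaching the trigger to the pipeline.
PARAMETER_MAPPING = {
    "sourceFolder": "@triggerBody().folderPath",
    "sourceFile": "@triggerBody().fileName",
}


def simulate_trigger_body(blob_path: str) -> dict:
    """Split 'container/folder/.../file' into folderPath and fileName.

    Assumption: folderPath keeps the container name and all folders,
    fileName is the final path segment.
    """
    folder_path, file_name = posixpath.split(blob_path)
    return {"folderPath": folder_path, "fileName": file_name}


def resolve_parameters(blob_path: str) -> dict:
    """Resolve each mapped expression to the value the pipeline would receive."""
    body = simulate_trigger_body(blob_path)
    resolved = {}
    for param, expression in PARAMETER_MAPPING.items():
        # '@triggerBody().folderPath' -> 'folderPath'
        prop = expression.rsplit(".", 1)[1]
        resolved[param] = body[prop]
    return resolved


print(resolve_parameters("mycontainer/tenant1/orders.csv"))
```

For the sample path this prints `{'sourceFolder': 'mycontainer/tenant1', 'sourceFile': 'orders.csv'}`.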

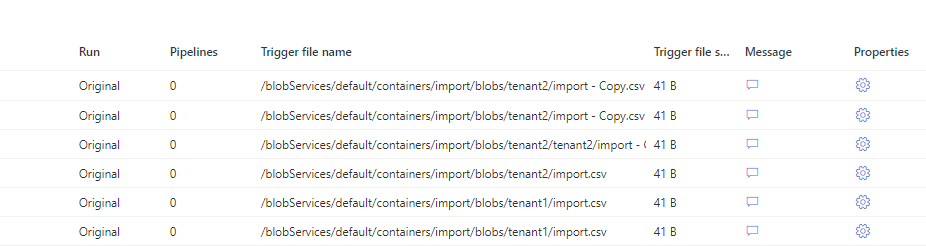

With this setup, your pipeline is triggered whenever a new .csv file is uploaded to your blob container under either the tenant1 or the tenant2 folder (see the trigger runs screenshot below).

The only drawback of this approach is that the pipeline is also triggered when a blob is uploaded to the container outside the tenant folder structure (tenant1, tenant2, etc.). If your CSV files always arrive under these folders, this won't cause any issues; otherwise, you may need to add logic to your pipeline so that its activities run only when the file lands in one of your tenant-specific folder paths.
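In the pipeline itself, this check would typically live in an If Condition activity whose expression inspects the folder path parameter (the Data Factory expression language offers functions such as `split`, `startswith` and `contains` for this). The Python sketch below only illustrates the decision logic under stated assumptions: the tenant list is hypothetical, and it assumes the folder path has the form `container/tenantX[/subfolders]`, so the second segment identifies the tenant.

```python
# Hypothetical set of tenant folders the pipeline should act on.
TENANT_FOLDERS = frozenset({"tenant1", "tenant2"})


def is_tenant_file(folder_path: str, tenant_folders=TENANT_FOLDERS) -> bool:
    """Return True when the blob sits under one of the tenant folders.

    Assumption: folder_path looks like 'container/tenantX[/subfolders...]',
    i.e. the first segment is the container and the second is the tenant.
    """
    segments = [s for s in folder_path.strip("/").split("/") if s]
    return len(segments) >= 2 and segments[1] in tenant_folders


for folder in ["mycontainer/tenant1", "mycontainer/tenant2/2024",
               "mycontainer", "mycontainer/archive"]:
    # Files at the container root or in non-tenant folders are skipped.
    print(folder, is_tenant_file(folder))
```

Only the first two folder paths qualify; a file at the container root or in an unrelated folder such as `archive` would be ignored by the pipeline's activities.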
