Data Factory copy activity writes a huge file from a tiny file: throttling error?

Hello,

A copy activity has written very large files (over 1 TB) when the files should have been tiny (less than 1 MB). Here are the details:

- The copy activity has a binary SFTP source and a binary ADLS Gen2 sink.

- The files have a ".csv.zip" extension, though it shouldn't matter with binary datasets.

- The same files were copied successfully in previous pipelines.

- Among the 5,221 files copied in this activity, 3 were written as extremely large files (8.6 KB to 1.3 TB; 296 B to 1.7 TB; 828 B to 1.3 TB).

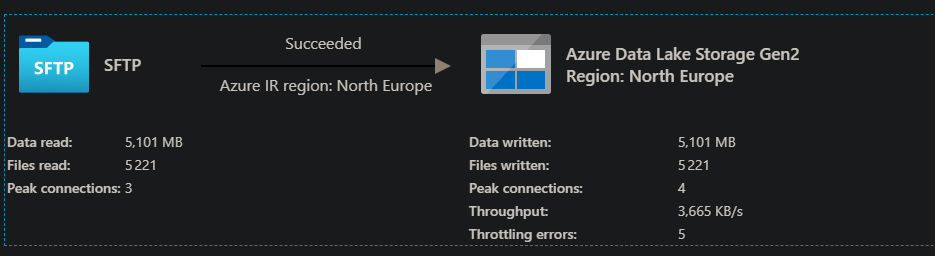

- See the copy activity monitor below: note that it shows only a little more than 5 GB copied, along with 5 throttling errors (which I have never seen before and which do not appear in our pipeline's other copy activities).

- The copy activity has a normal output except for the throttling errors, with this message:

"Tip": "5 write operations were throttled by the sink data store. To achieve better performance, you are suggested to check and increase the allowed request rate for Azure Data Lake Storage Gen2, or reduce the number of concurrent copy runs and other data access, or reduce the DIU or parallel copy.",

"ReferUrl": "https://go.microsoft.com/fwlink/?linkid=2102534",

"RuleName": "ReduceThrottlingErrorPerfRecommendationRule"

}

The information about throttling errors here only mentions long-running steps in the copy activity.

Since the activity's output shows nothing alarming apart from the throttling errors, it seems that it really did copy only about 5 GB of files, but wrote the metadata of some of these files incorrectly, hence the sizes over 1 TB displayed in Storage Explorer.

This also means that we're getting errors in our subsequent copy activities, as they think the file is over 1 TB:

Operation on target Lan to Arc failed: Failure happened on 'Sink' side. ErrorCode=AdlsGen2OperationFailed,'Type=Microsoft.DataTransfer.Common.Shared.HybridDeliveryException,Message=ADLS Gen2 operation failed for: Operation returned an invalid status code 'RequestedRangeNotSatisfiable'. Account: 'XXX'. FileSystem: 'XXX'. Path: 'XXX.csv.zip'. ErrorCode: 'InvalidRange'. Message: 'The range specified is invalid for the current size of the resource.'.
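To find which files are affected, one option is to compare the size each file has on the source with the size reported on the sink. Below is a minimal sketch of that comparison in plain Python. The file names are hypothetical, the byte counts are rounded from the sizes quoted above, and `find_size_mismatches` is an illustrative helper rather than anything provided by Data Factory. It assumes you have already obtained the two size listings by whatever means you prefer.

```python
def find_size_mismatches(source_sizes, sink_sizes):
    """Return {path: (source_bytes, sink_bytes)} for every file whose
    size on the sink differs from the source (or is missing on the sink)."""
    mismatches = {}
    for path, src_bytes in source_sizes.items():
        sink_bytes = sink_sizes.get(path)  # None if the file is absent on the sink
        if sink_bytes != src_bytes:
            mismatches[path] = (src_bytes, sink_bytes)
    return mismatches


TB = 1000 ** 4  # decimal terabyte, used only to build the sample data

# Hypothetical listings: names are made up, sizes are approximations
# of the three bad files described in the post plus one healthy file.
source_sizes = {
    "file_a.csv.zip": 8600,
    "file_b.csv.zip": 296,
    "file_c.csv.zip": 828,
    "file_d.csv.zip": 512,
}
sink_sizes = {
    "file_a.csv.zip": 13 * TB // 10,
    "file_b.csv.zip": 17 * TB // 10,
    "file_c.csv.zip": 13 * TB // 10,
    "file_d.csv.zip": 512,
}

mismatches = find_size_mismatches(source_sizes, sink_sizes)
for path, (src_bytes, sink_bytes) in sorted(mismatches.items()):
    print(f"{path}: source={src_bytes} B, sink={sink_bytes} B")
```

Since a binary copy should preserve the byte count, any difference is treated as suspicious here; a looser threshold could be used if the sink is expected to transform the data.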

Any idea where this could come from?

Edit with additional info:

- We are using an Azure Auto-resolve Integration Runtime