@Daniel Pearson - Thanks for the question and using MS Q&A platform.

It sounds like you are having trouble creating tables in your serverless Lake Database in Synapse Analytics workspace using Azure Synapse Link. Let me see if I can help you with that.

Firstly, it is correct that when you use Azure Synapse Link to export your Dynamics tables to Azure Data Lake Storage, it should automatically create a serverless Lake Database in your Synapse Analytics workspace. However, the tables from the CSV files are not automatically created in this database. You will need to create external tables in the serverless SQL pool to access the data in the CSV files.

To create external tables, you can use T-SQL CREATE EXTERNAL TABLE command. Here is an example:

CREATE EXTERNAL TABLE [dbo].[MyTable]

(

[Column1] [nvarchar](50) NOT NULL,

[Column2] [nvarchar](50) NOT NULL,

[Column3] [nvarchar](50) NOT NULL

)

WITH

(

LOCATION='/MyFolder/MyFile.csv',

DATA_SOURCE = MyDataSource,

FILE_FORMAT = MyFileFormat,

REJECT_TYPE = VALUE,

REJECT_VALUE = 0

);

In this example, MyTable is the name of the external table you want to create, and Column1, Column2, and Column3 are the columns in the CSV file. You will need to replace MyFolder/MyFile.csv with the location of your CSV file in your Azure Data Lake Storage, and MyDataSource and MyFileFormat with the names of your data source and file format.

Follow-up question: What are the appropriate permissions for the storage account and the Synapse Analytics workspace? I'm not hugely familiar with the role assignments. Can you give me some suggestions and how to add?

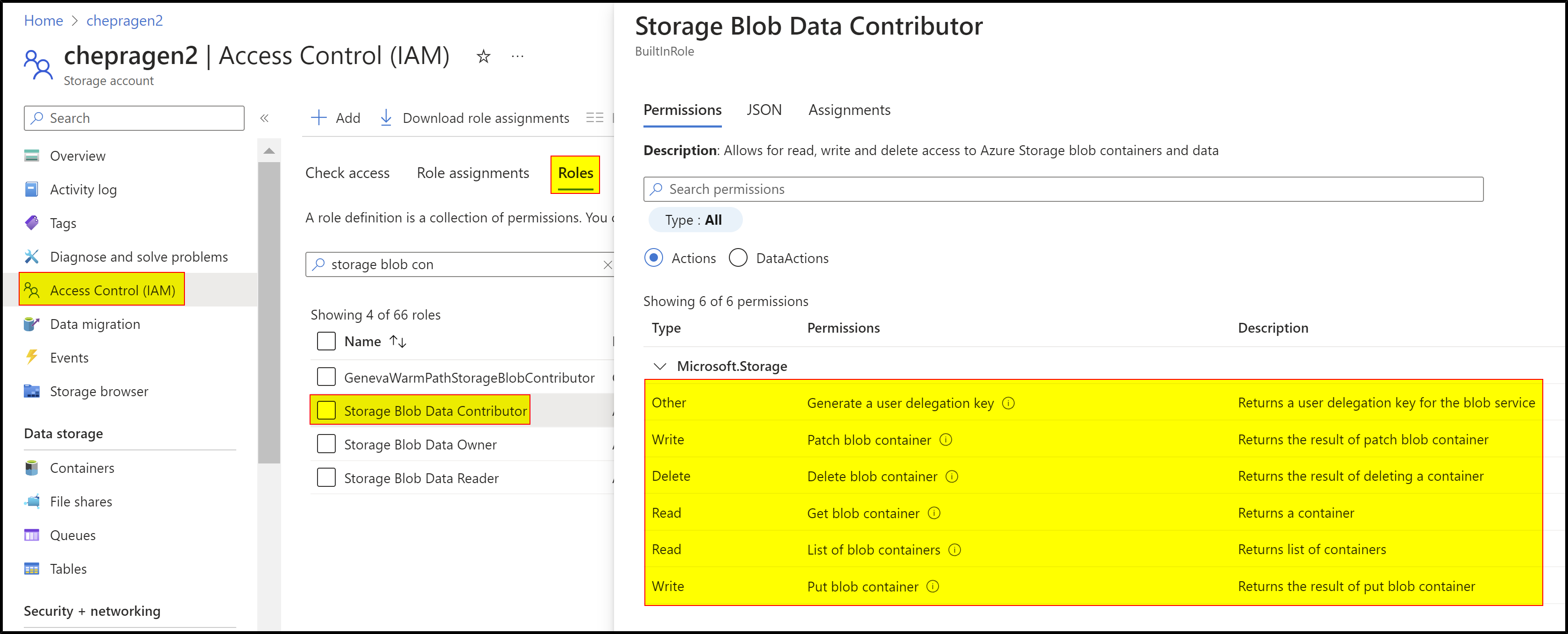

The Storage Blob Data Contributor role is a built-in role in Azure that provides read, write, and delete access to blob containers and data. This role is necessary for Synapse Analytics workspace to access data in Azure Data Lake Storage Gen2 (ADLS Gen2).

When you assign the Storage Blob Data Contributor role to a user or service principal at the level of a container or storage account, they are granted read, write, and delete access to all of the blobs in that container or storage account. This role is required for Synapse Analytics workspace to access data in ADLS Gen2.

In summary, the Storage Blob Data Contributor role is important for Synapse Analytics workspace to access data in ADLS Gen2, and it grants the minimum permission required to read, write, and delete containers and blobs.

Why do we need a Storage Blob Data Contributor role for Azure Synapse users?

Note: Storage Blob Data Contributor: Use to grant read/write/delete permissions to Blob storage resources.

If you are not assigning "Storage Blob Data Contributor" to other synapse users, they will be not able to access the data from ADLS gen2 due to the lack of permission on the storage account.

If they try to access data from ADLS gen2 without the "Storage Blob Data Contributor" role on the storage account, they will receive the following error message:

Error: org.apache.spark.sql.AnalysisException: java.lang.RuntimeException: Operation failed: \"This request is not authorized to perform this operation using this permission", 403, HEAD, https://storage_account.dfs.core.windows.net/synapse/tmp/hive?upn=false&action=getStatus&timeout=90;";

For more details, refer to How to set up access control for your Azure Synapse workspace

Hope this helps. Do let us know if you any further queries.

If this answers your query, do click Accept Answer and Yes for was this answer helpful. And, if you have any further query do let us know.