I have some pipelines that ingest data from Oracle and write directly to Delta in a lakehouse on Fabric.

I have other pipelines that ingest data from Oracle and write to a .parquet file in a lakehouse on Fabric.

The pipelines that write directly to Delta are stuck in the Azure Data Factory queue indefinitely and never reach the "In Progress" status.

On the source side, I run a query, and when I click "View Data" I see the full data. On the sink side, the connection test is successful.

The queuing problem started after the Fabric capacity was exceeded, yet the pipelines that write to .parquet files are still running normally.

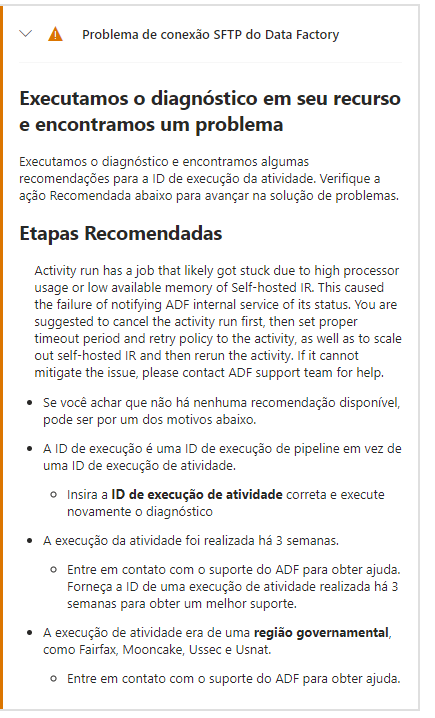

When I copy the pipeline ID and run diagnostics, it returns the message shown in the attached picture.

Nothing else is running in Azure Data Factory, so the entire integration runtime is available to this pipeline, which is very light: fewer than 100 rows, no transformations, only a Copy activity.