Hello @Nallaperumal, Natarajan (Cognizant),

Welcome to the Microsoft Q&A platform.

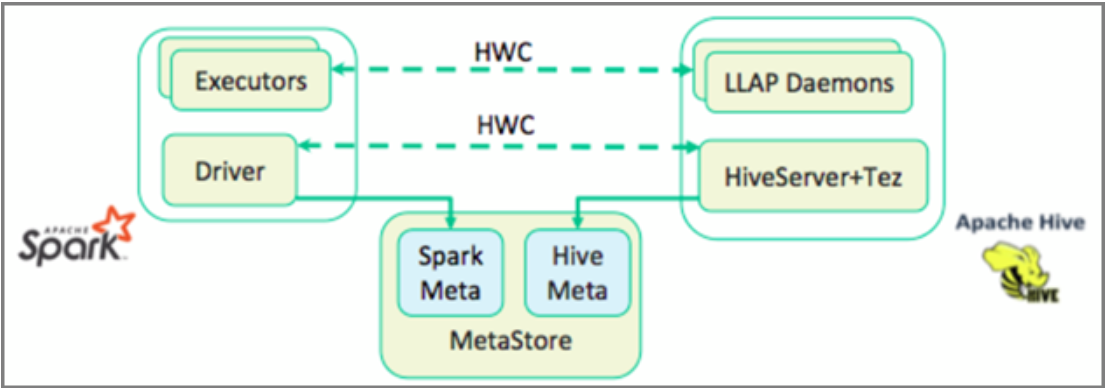

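Yes, the Apache Hive Warehouse Connector (HWC) is required to integrate Apache Spark and Apache Hive clusters in Azure HDInsight. In HDInsight 4.0, Spark and Hive use independent catalogs, and HWC is the component that connects them.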

The Apache Hive Warehouse Connector (HWC) is a library that makes it easier to work with Apache Spark and Apache Hive together. It supports tasks such as moving data between Spark DataFrames and Hive tables, and directing Spark streaming data into Hive tables. HWC acts as a bridge between Spark and Hive, and it supports Scala, Java, and Python for development.
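As an illustration, here is a minimal PySpark sketch of that DataFrame/Hive round trip. It assumes an HDInsight Spark cluster that can reach an Interactive Query (LLAP) cluster. The five Spark property names are the ones the HDInsight HWC article asks you to set. The helper names `hwc_spark_conf`, `spark_submit_args` and `copy_hive_table` are hypothetical. All values (URLs, hosts, paths, table names) are placeholders that you should take from your own clusters (for example, from Ambari). Treat this as a starting point, not a verified implementation.

```python
"""Minimal sketch of using the Hive Warehouse Connector (HWC) from PySpark.

Assumptions (verify against your clusters and the HDInsight HWC article):
- the five property names below are the ones the article lists;
- helper names are hypothetical and all values are placeholders.
"""

# Fully qualified HWC data source name used with DataFrame.write.format(...).
HWC_FORMAT = "com.hortonworks.spark.sql.hive.llap.HiveWarehouseConnector"


def hwc_spark_conf(jdbc_url, metastore_uri, zookeeper_quorum,
                   staging_dir, llap_daemon_hosts):
    """Return the Spark properties HWC needs to reach the Interactive Query cluster."""
    return {
        # HiveServer2 Interactive JDBC URL of the Interactive Query (LLAP) cluster.
        "spark.sql.hive.hiveserver2.jdbc.url": jdbc_url,
        # Thrift URI(s) of the Hive metastore.
        "spark.datasource.hive.warehouse.metastoreUri": metastore_uri,
        # Directory HWC uses to stage data when writing to Hive.
        "spark.datasource.hive.warehouse.load.staging.dir": staging_dir,
        # LLAP daemon service hosts and ZooKeeper quorum of the Hive cluster.
        "spark.hadoop.hive.llap.daemon.service.hosts": llap_daemon_hosts,
        "spark.hadoop.hive.zookeeper.quorum": zookeeper_quorum,
    }


def spark_submit_args(conf, hwc_jar, hwc_py_zip, script):
    """Build a spark-submit argument list that ships the HWC jar and Python zip."""
    args = ["spark-submit", "--jars", hwc_jar, "--py-files", hwc_py_zip]
    # Sorted so the generated command is deterministic.
    for key in sorted(conf):
        args += ["--conf", f"{key}={conf[key]}"]
    args.append(script)
    return args


def copy_hive_table(spark, source_table, target_table):
    """Read a Hive table into a DataFrame and append it to another Hive table.

    Only runs on a cluster where the HWC Python zip is on the path;
    target_table is assumed to exist already.
    """
    # Imported here so the helpers above work without pyspark_llap installed.
    from pyspark_llap import HiveWarehouseSession

    hive = HiveWarehouseSession.session(spark).build()
    # Hive -> Spark: the query result comes back as a Spark DataFrame.
    df = hive.executeQuery(f"SELECT * FROM {source_table}")
    # Spark -> Hive: write the DataFrame through the HWC data source.
    df.write.format(HWC_FORMAT).mode("append").option("table", target_table).save()
    return df
```

To submit a job, pass the list from `spark_submit_args` to `subprocess.run` on a cluster head node, or type the equivalent command yourself. Inside the submitted script, `copy_hive_table(spark, ...)` performs the Hive-to-DataFrame-to-Hive move. Keeping the configuration in a plain dictionary makes it easy to inspect before anything touches the cluster.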

Reference: [Integrate Apache Spark and Apache Hive with Hive Warehouse Connector in Azure HDInsight][2].

Hope this helps. Do let us know if you have any further queries.

- Please accept an answer if correct. Original posters help the community find answers faster by identifying the correct answer. Here is [how][3].

- Want a reminder to come back and check responses? Here is how to subscribe to a notification.

[2]: https://video2.skills-academy.com/en-us/azure/hdinsight/interactive-query/apache-hive-warehouse-connector
[3]: https://video2.skills-academy.com/en-us/answers/articles/25904/accepted-answers.html