Hello @Abdul Sattar,

Thanks for the question and for using the Microsoft Q&A platform.

I think you forgot to mount the container.

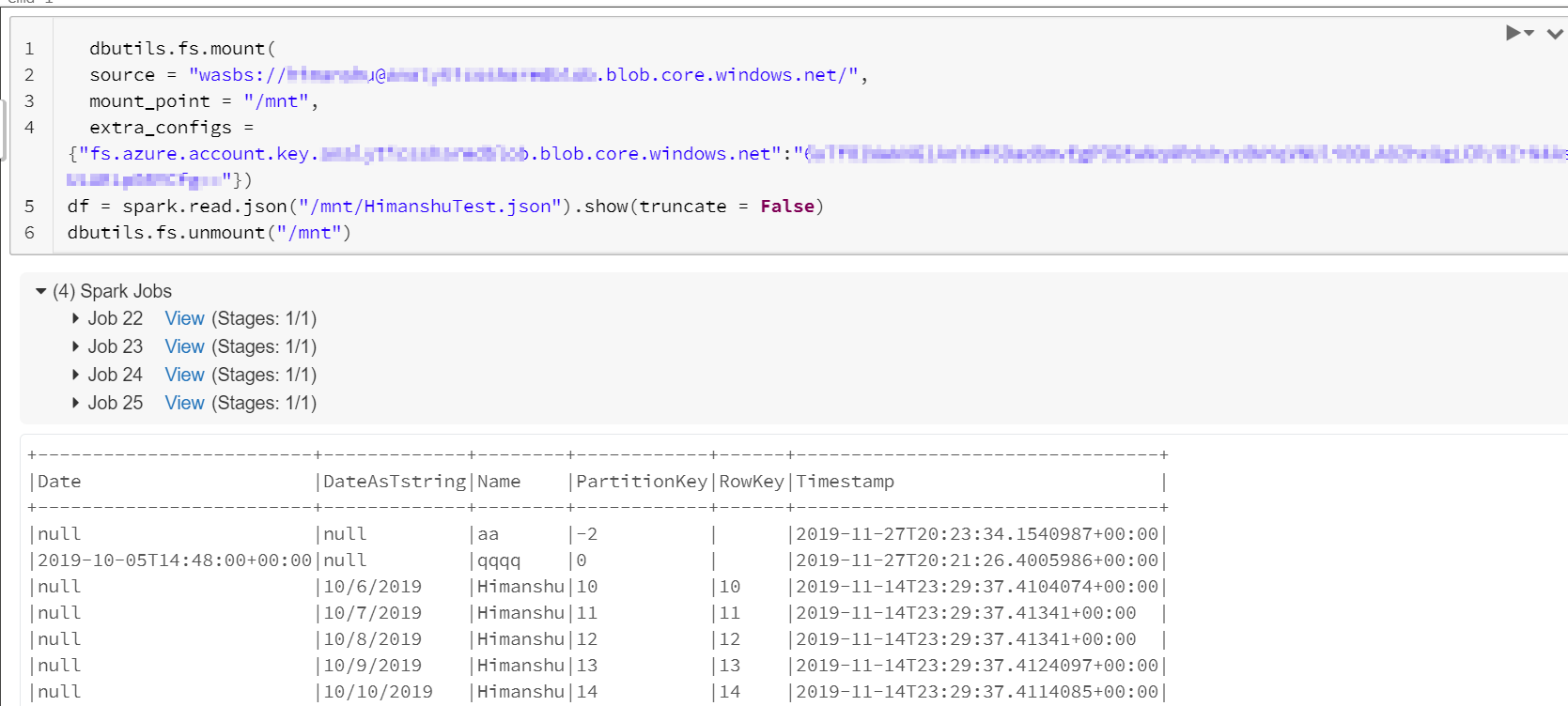
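
One way to confirm this before reading the file is to look at what `dbutils.fs.mounts()` returns. Below is a minimal sketch, assuming each entry exposes a `mountPoint` attribute as it does in a Databricks notebook. The helper name `is_mounted` and the stand-in `MountInfo` tuple are hypothetical and are only there so the snippet also runs outside Databricks.

```python
from collections import namedtuple

# Stand-in for the entries returned by dbutils.fs.mounts() (hypothetical, for illustration).
MountInfo = namedtuple("MountInfo", ["mountPoint", "source"])

def is_mounted(mount_point, mounts):
    """Return True if mount_point appears among the given mount entries."""
    # Ignore a trailing slash so "/mnt" and "/mnt/" compare equal.
    target = mount_point.rstrip("/") or "/"
    return any((m.mountPoint.rstrip("/") or "/") == target for m in mounts)

# Sample data; in a notebook you would pass dbutils.fs.mounts() instead.
sample_mounts = [
    MountInfo("/databricks-datasets", "databricks-datasets"),
    MountInfo("/mnt", "wasbs://yourcontainer@yourstorageaccount.blob.core.windows.net/"),
]
print(is_mounted("/mnt", sample_mounts))
print(is_mounted("/mnt/other", sample_mounts))
```

In a notebook, `is_mounted("/mnt", dbutils.fs.mounts())` returning `False` would point to the missing mount.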

I tested the code below and it works. I am using the storage account access key from the portal.

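    # Mount the blob container using the storage account access key.
    dbutils.fs.mount(
        source = "wasbs://yourcontainer@yourstorageaccount.blob.core.windows.net/",
        mount_point = "/mnt",
        extra_configs = {"fs.azure.account.key.yourstorageaccount.blob.core.windows.net": "access key from the portal"})

    # Read the JSON file through the mount. show() returns None, so call it on its own line.
    df = spark.read.json("/mnt/HimanshuTest.json")
    df.show(truncate = False)

    # Unmount when finished.
    dbutils.fs.unmount("/mnt")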
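
A stray space or typo in the URL or the config key is easy to miss, so the mount arguments can also be assembled from their parts. This is a minimal sketch: `build_mount_args` is a hypothetical helper, not part of `dbutils`, and the values are the same placeholders used in the mount call.

```python
def build_mount_args(container, account, access_key, mount_point):
    """Build keyword arguments for dbutils.fs.mount from individual parts."""
    # Strip accidental whitespace so the host name is well-formed.
    host = f"{account.strip()}.blob.core.windows.net"
    return {
        "source": f"wasbs://{container.strip()}@{host}/",
        "mount_point": mount_point,
        "extra_configs": {f"fs.azure.account.key.{host}": access_key},
    }

args = build_mount_args("yourcontainer", " yourstorageaccount",
                        "access key from the portal", "/mnt")
print(args["source"])
```

In a notebook, the result would be passed as `dbutils.fs.mount(**args)`.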

Output

Thanks

Himanshu

Please consider clicking "Accept Answer" and "Up-vote" on the post that helps you, as it can be beneficial to other community members.