@AntonioAli-4907 Could you share the details of your experiment and the issue from the ml.azure.com portal so that a service engineer can look into it? To do so, click the smiley face in the top right-hand corner of the portal, select the option "Microsoft can email you about the feedback", and attach a screenshot so that our service team can investigate and advise by email.

Real-time endpoint response is empty

Hi, I've created and deployed an NLP pipeline as a real-time endpoint, but the response I get is an empty result ({"Results":{}}) with status 200 OK.

I have the Owner role, so I don't think permissions are the problem. I've also tried deploying the preset experiment "Sample 1: Regression - Automobile Price Prediction (Basic)" using the same resources, and it returned results without a problem.

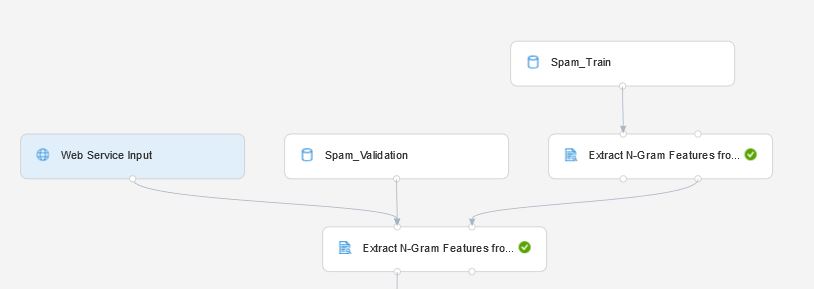

In the training pipeline, I used the train and validation datasets. When I created the real-time inference pipeline, it automatically added two Web Service inputs. The one linked to the train dataset input is irrelevant in the real-time inference pipeline, because the train dataset is used only to create the vocabulary for the Extract N-Gram Features from Text module linked to the validation dataset.

After that, I deleted the Web Service input connected to the training dataset and tried to deploy the real-time endpoint.

I also tried a second approach: after submitting the training pipeline, I saved the Result Vocabulary from the Extract N-Gram Features from Text module connected to the training dataset, used it as the input vocabulary for the N-Gram module (ReadOnly vocabulary mode) in the real-time inference pipeline, and deployed that as a real-time endpoint.

In both cases above, I didn't get any errors, and the scoring and evaluation of the model are correct, but the response from the endpoint is empty.
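For anyone trying to reproduce the symptom, here is a minimal Python sketch of how the request body can be assembled and the empty response detected. It is only an illustration: the helper names `build_payload` and `is_empty_result` are hypothetical, the `Inputs` / `GlobalParameters` request shape and the input name `WebServiceInput0` are assumptions about what the endpoint's Consume tab shows, and the `text` column is a placeholder for the real input schema.

```python
import json


def build_payload(input_name, rows):
    """Wrap input rows in the request shape assumed for the endpoint.

    input_name must match the name of the Web Service input that remains
    in the real-time inference pipeline (assumed, check the Consume tab).
    """
    return json.dumps({"Inputs": {input_name: rows}, "GlobalParameters": {}})


def is_empty_result(response_body):
    """Return True when the JSON body has nothing under "Results"."""
    parsed = json.loads(response_body)
    # A missing key, null, {} and [] are all treated as "empty".
    return not parsed.get("Results")


# Hypothetical input name and column; replace with the real schema.
payload = build_payload("WebServiceInput0", [{"text": "example sentence"}])
print(payload)

# The body returned by the endpoint in this question.
print(is_empty_result('{"Results":{}}'))
```

Comparing the input name in the payload with the Web Service input left in the inference pipeline, and checking that the pipeline still has a Web Service output connected, may help narrow down where the results are lost.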

It's worth mentioning that if I leave the real-time pipeline as is (with two inputs), it doesn't work because of the parameter settings in the Extract N-Gram Features from Text module to which the training dataset is connected.