Hello @Manoj Kumar,

Welcome to the Microsoft Q&A platform.

I was able to list all the files under the mount point using the same code shown in the document.

Could you please share the code you are running, along with the complete stack trace of the error you are seeing?

How does the InMemoryFileIndex.bulkListLeafFiles() function work?

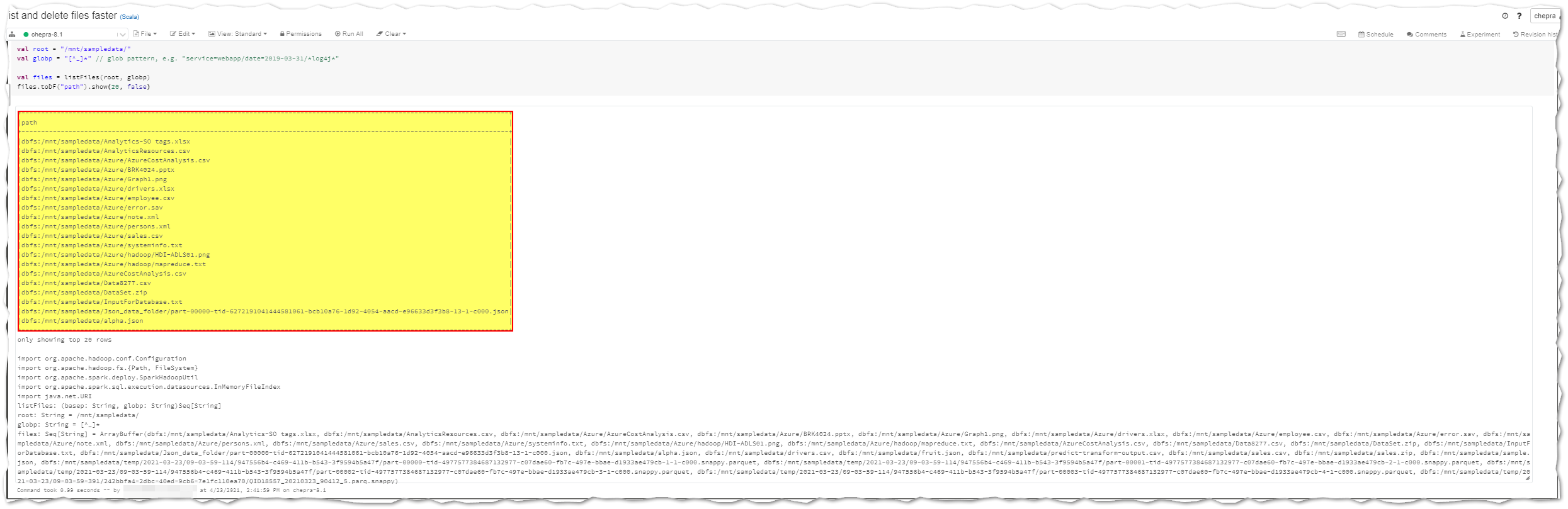

The listFiles function takes a base path and a glob path as arguments, scans the files, matches them against the glob pattern, and returns all the matching leaf files as a sequence of strings.

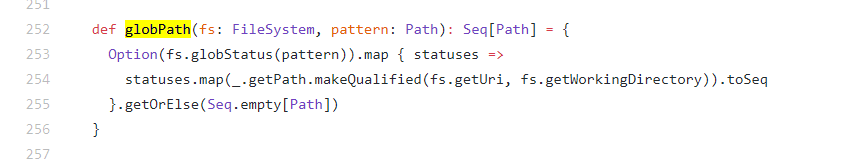

The function also uses the utility function globPath from SparkHadoopUtil. This function lists all the paths in a directory that match the specified prefix, and does not go on to list their leaf children (files). The list of paths is then passed to the InMemoryFileIndex.bulkListLeafFiles method, which is a Spark internal API for distributed file listing.

Neither of these listing utility functions works well on its own. By combining them, you can use globPath, which runs on the driver, to get the top-level directories you want to list, and then use bulkListLeafFiles to distribute the listing of all child leaves of those directories across the Spark workers.
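To make the two-phase idea concrete, here is a minimal sketch in plain Python. It is not the Spark implementation: it uses the local file system and a thread pool as stand-ins for the driver and the workers, and the names `list_files`, `list_leaf_files`, and `parallelism` are hypothetical, chosen only for this illustration.

```python
import glob
import os
from concurrent.futures import ThreadPoolExecutor


def list_leaf_files(directory):
    """Recursively collect every file under a single directory."""
    leaves = []
    for root, _dirs, files in os.walk(directory):
        for name in files:
            leaves.append(os.path.join(root, name))
    return leaves


def list_files(base_path, glob_pattern, parallelism=4):
    """Two-phase listing: glob the top-level directories, then list leaves in parallel."""
    # Phase 1 (stand-in for globPath on the driver): a cheap glob that only
    # resolves the top-level directories and does not descend into them.
    top_level = sorted(
        p for p in glob.glob(os.path.join(base_path, glob_pattern))
        if os.path.isdir(p)
    )
    # Phase 2 (stand-in for bulkListLeafFiles on the workers): fan the
    # expensive recursive listing out, one task per top-level directory.
    with ThreadPoolExecutor(max_workers=parallelism) as pool:
        per_directory = pool.map(list_leaf_files, top_level)
        return sorted(leaf for leaves in per_directory for leaf in leaves)
```

For example, `list_files("/data/events", "year=*")` would resolve only the `year=...` directories first and then walk each of them concurrently; the path here is made up for illustration.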

The speed-up can be around 20-50x, in line with Amdahl’s law. This works because you can tailor the glob path to the physical layout of the files and control the listing parallelism for InMemoryFileIndex through spark.sql.sources.parallelPartitionDiscovery.parallelism.
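As a rough illustration of how Amdahl’s law bounds such a speed-up, the sketch below computes the theoretical gain for a given parallel fraction and worker count. The function name `amdahl_speedup` and the sample values (98% of the listing work parallelizable, 64 parallel tasks) are assumptions picked for the example, not measurements.

```python
def amdahl_speedup(parallel_fraction, workers):
    """Theoretical speed-up when `parallel_fraction` of the work is spread over `workers`."""
    if not 0.0 <= parallel_fraction <= 1.0:
        raise ValueError("parallel_fraction must be between 0 and 1")
    if workers < 1:
        raise ValueError("workers must be at least 1")
    # The serial part (1 - p) is unchanged; the parallel part p is divided by the worker count.
    return 1.0 / ((1.0 - parallel_fraction) + parallel_fraction / workers)


# Hypothetical inputs: 98% of the listing is parallelizable, 64 parallel tasks.
print(round(amdahl_speedup(0.98, 64), 1))  # prints 28.3
```

Under these assumed inputs the result falls inside the 20-50x range; the serial part, here the glob on the driver, is what caps the gain.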

Hope this helps. Do let us know if you have any further queries.

------------

Please don’t forget to Accept Answer and Up-Vote wherever the information provided helps you; this can be beneficial to other community members.