@azureuser586-9670, welcome to the Microsoft Q&A platform.

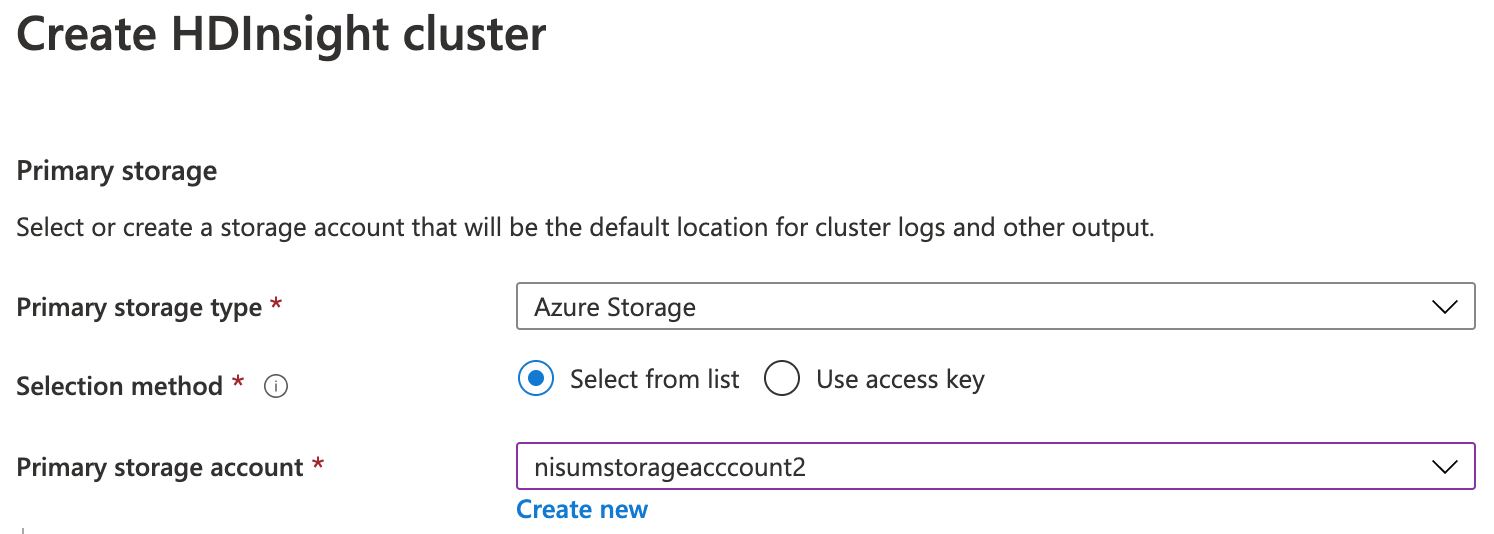

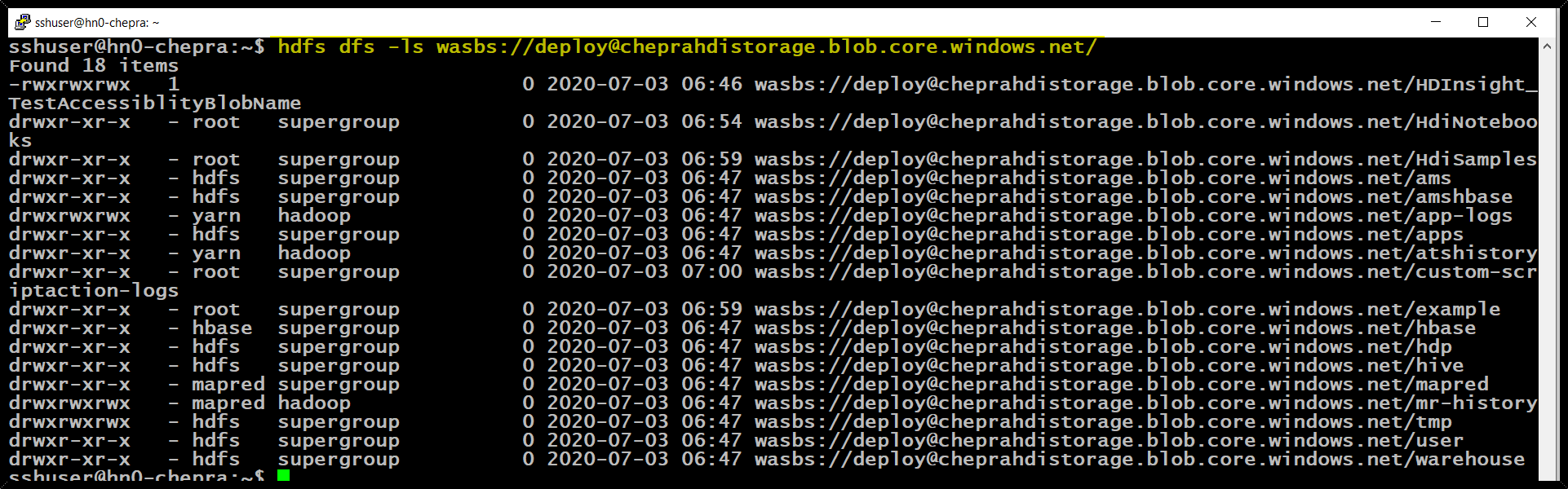

Have you attached the storage account to the HDInsight cluster?

Note: Accessing data in an external storage account that is not configured on the HDInsight cluster is not allowed.

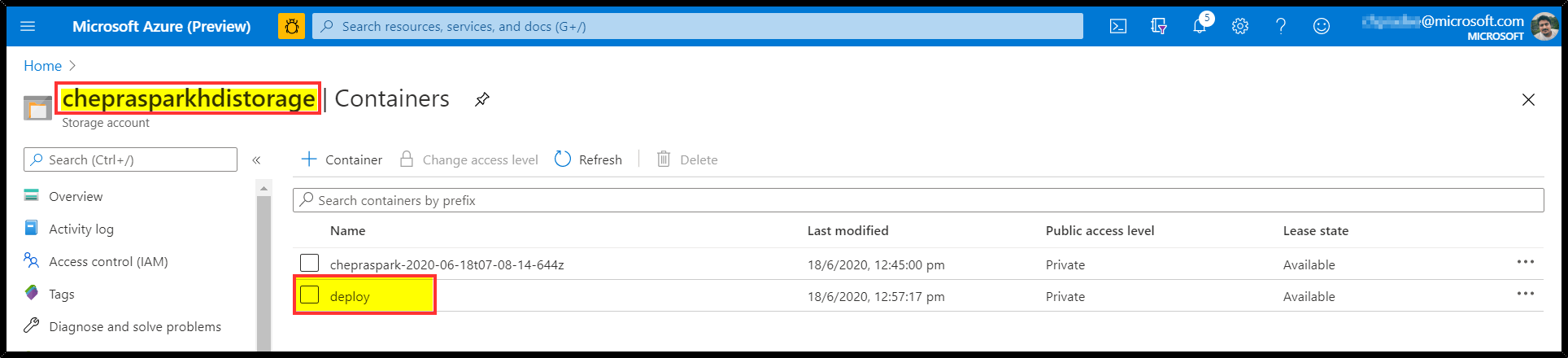

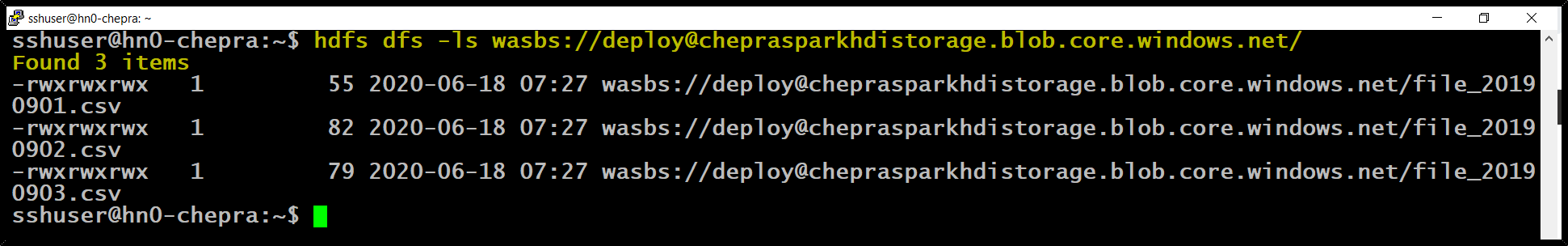

If you want to access data residing in the external storage account, you have to add that account as additional storage on the HDInsight cluster.
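For reference, once the account is attached, its data is typically addressed with a `wasbs://` URI of the form `wasbs://<container>@<account>.blob.core.windows.net/<path>`. The minimal Python sketch below only builds such a URI as a string; the helper `wasbs_uri` and the container, account, and path names are hypothetical placeholders.

```python
def wasbs_uri(container: str, account: str, path: str = "") -> str:
    """Build a wasbs:// URI for a blob path in an Azure Storage account."""
    # wasbs is the TLS-secured WASB scheme; the host is the account's blob endpoint.
    base = f"wasbs://{container}@{account}.blob.core.windows.net/"
    # Drop any leading slash so the result never contains a double slash.
    return base + path.lstrip("/")


# Hypothetical container, account, and path, for illustration only.
print(wasbs_uri("mycontainer", "mystorageaccount", "/data/input.csv"))
# wasbs://mycontainer@mystorageaccount.blob.core.windows.net/data/input.csv
```

Reading such a URI from the cluster only works after the account has been added as described in the steps that follow.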

Steps to add a storage account to an existing cluster via the Ambari UI:

Step 1: From a web browser, navigate to https://CLUSTERNAME.azurehdinsight.net, where CLUSTERNAME is the name of your cluster.

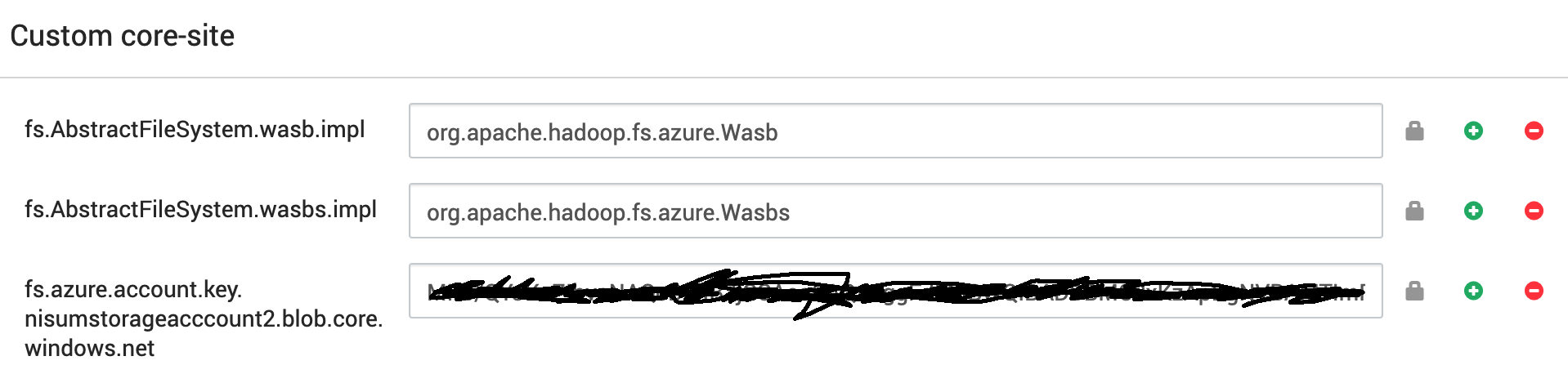

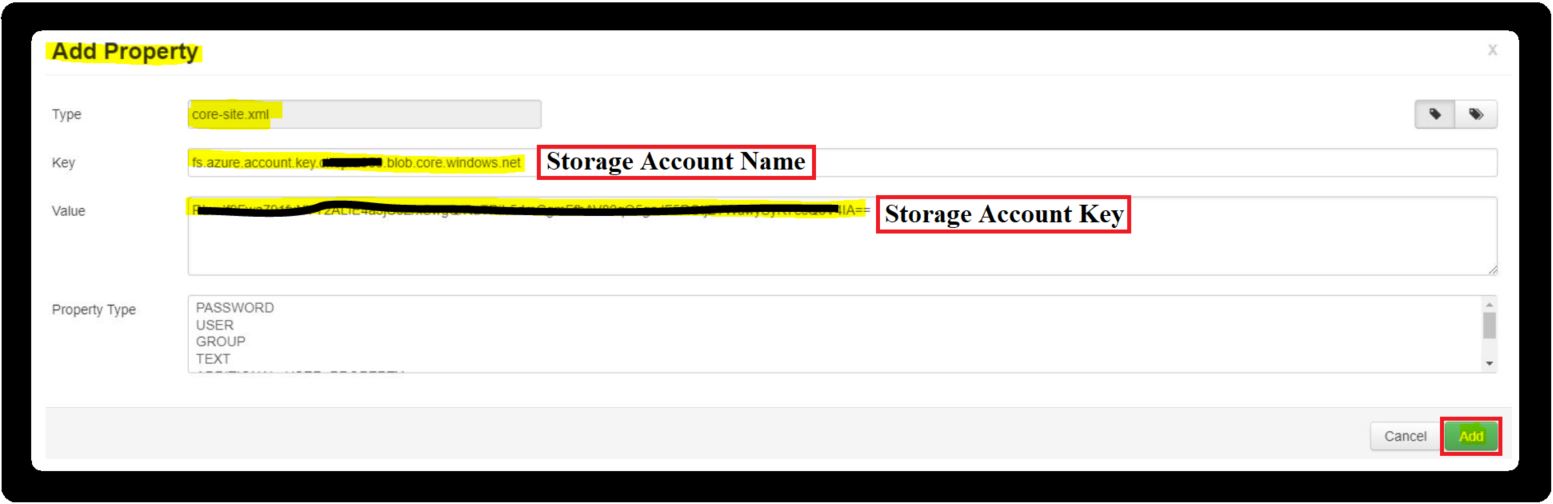
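If you script this step, the Ambari address from Step 1 is simply the cluster name substituted into a fixed pattern. A minimal Python sketch, where the helper `ambari_url` and the cluster name `mycluster` are hypothetical:

```python
def ambari_url(cluster_name: str) -> str:
    """Return the Ambari web UI address for an HDInsight cluster."""
    name = cluster_name.strip()
    if not name:
        raise ValueError("cluster name must not be empty")
    # Pattern from Step 1: https://CLUSTERNAME.azurehdinsight.net
    return f"https://{name}.azurehdinsight.net"


print(ambari_url("mycluster"))  # "mycluster" is a hypothetical cluster name
```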

Step 2: Navigate to HDFS --> Configs --> Advanced, and scroll down to Custom core-site.

Step 3: Select Add Property and enter your storage account name and key as follows:

Key => `fs.azure.account.key.<storage_account>.blob.core.windows.net`, where `<storage_account>` is the name of your storage account

Value => the storage account's access key

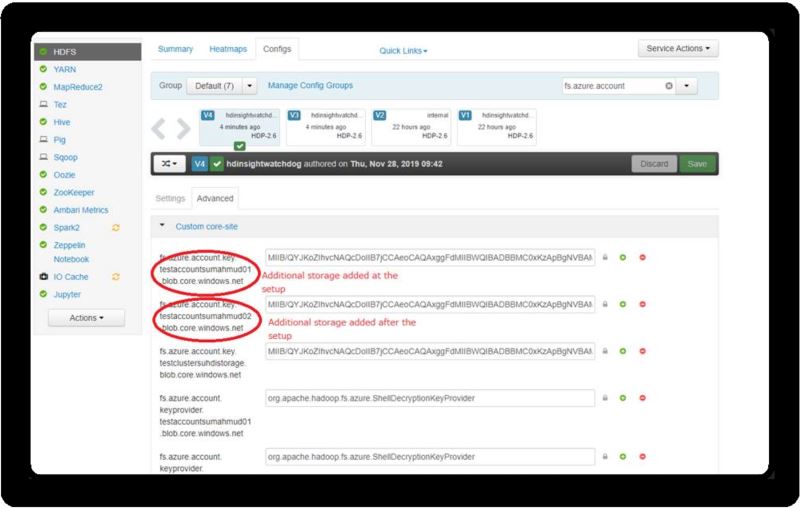
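Ambari stores Custom core-site entries in the cluster's `core-site.xml`, so the property from Step 3 corresponds to a Hadoop `<property>` element. The Python sketch below only illustrates how the key name is composed and what that element looks like; you do not need to write this XML yourself when using the Ambari UI. The helper names, the account name `mystorageaccount`, and the `STORAGE_ACCESS_KEY` placeholder are hypothetical, and a real access key should never be hard-coded.

```python
import xml.etree.ElementTree as ET

KEY_PREFIX = "fs.azure.account.key."
BLOB_SUFFIX = ".blob.core.windows.net"


def account_key_property_name(storage_account: str) -> str:
    """Compose the property name used in Custom core-site for an account key."""
    return f"{KEY_PREFIX}{storage_account}{BLOB_SUFFIX}"


def core_site_property(storage_account: str, access_key: str) -> str:
    """Render the <property> element that represents the entry in core-site.xml."""
    prop = ET.Element("property")
    ET.SubElement(prop, "name").text = account_key_property_name(storage_account)
    ET.SubElement(prop, "value").text = access_key
    return ET.tostring(prop, encoding="unicode")


# Hypothetical account name and a placeholder instead of a real key.
print(core_site_property("mystorageaccount", "STORAGE_ACCESS_KEY"))
```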

Step 4: Observe the keys that begin with `fs.azure.account.key.`. The storage account name is part of each key, as seen in the sample image above.
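The same check can be done outside the UI if you have the text of a `core-site.xml` file: list the properties whose names begin with `fs.azure.account.key.` and take the account name from each. A minimal Python sketch; the helper `configured_accounts` and the sample XML (account names and placeholder key values) are hypothetical.

```python
import xml.etree.ElementTree as ET

KEY_PREFIX = "fs.azure.account.key."


def configured_accounts(core_site_xml: str) -> list[str]:
    """Return storage account names from properties named fs.azure.account.key.*"""
    root = ET.fromstring(core_site_xml)
    accounts = []
    for prop in root.iter("property"):
        name = prop.findtext("name", default="").strip()
        if name.startswith(KEY_PREFIX):
            # The remainder is the account host, e.g. mystorageaccount.blob.core.windows.net;
            # the first label of that host is the account name.
            host = name[len(KEY_PREFIX):]
            accounts.append(host.split(".", 1)[0])
    return accounts


# Hypothetical core-site.xml excerpt; the key values are placeholders.
SAMPLE = """<configuration>
  <property>
    <name>fs.azure.account.key.defaultaccount.blob.core.windows.net</name>
    <value>KEY1</value>
  </property>
  <property>
    <name>fs.azure.account.key.mystorageaccount.blob.core.windows.net</name>
    <value>KEY2</value>
  </property>
  <property>
    <name>fs.defaultFS</name>
    <value>wasbs://container@defaultaccount.blob.core.windows.net</value>
  </property>
</configuration>"""

print(configured_accounts(SAMPLE))  # ['defaultaccount', 'mystorageaccount']
```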

Reference: Add additional storage accounts to HDInsight

Hope this helps. Do let us know if you have any further queries.

Do click "Accept Answer" and upvote the post that helps you; this can be beneficial to other community members.