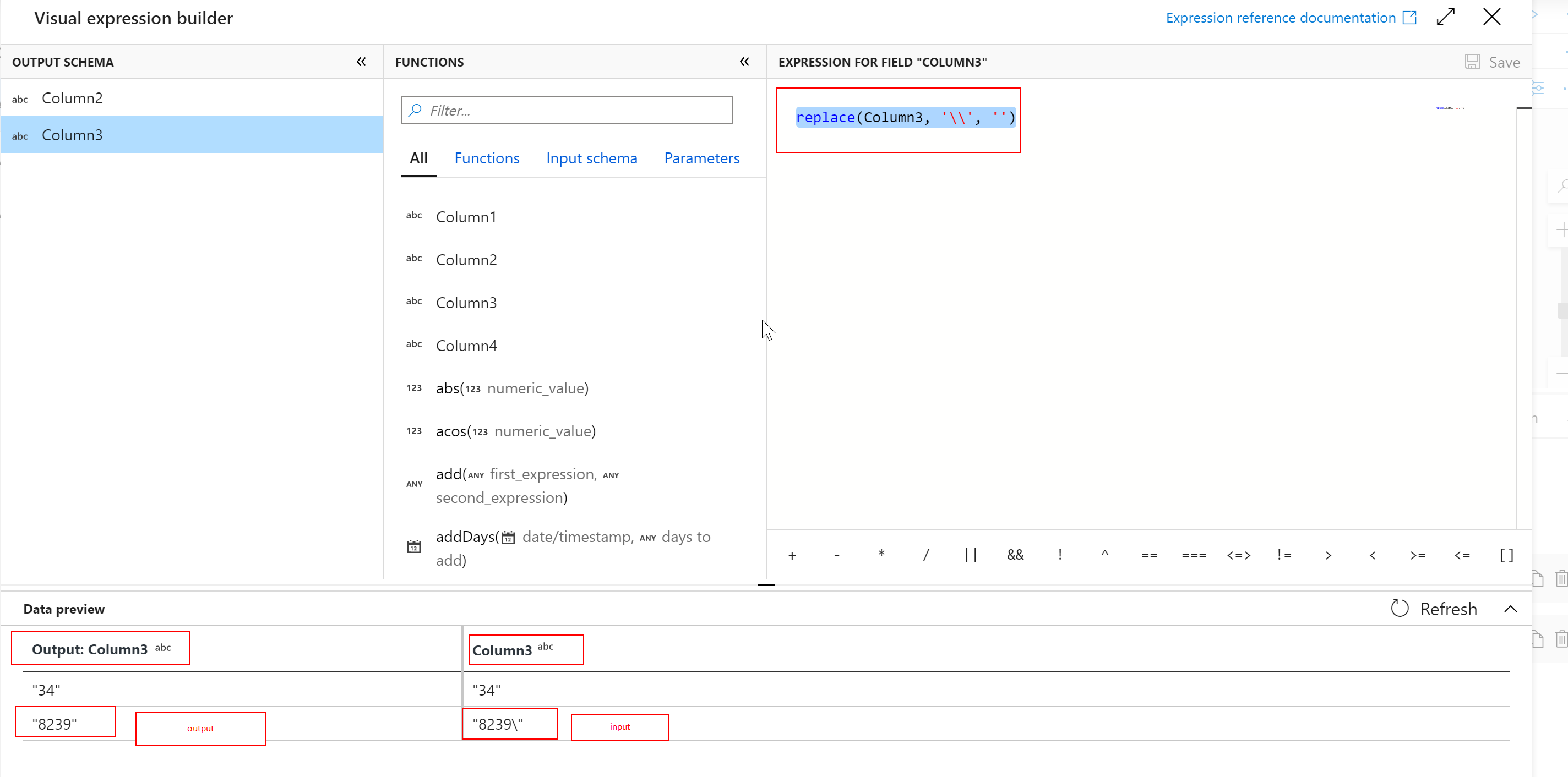

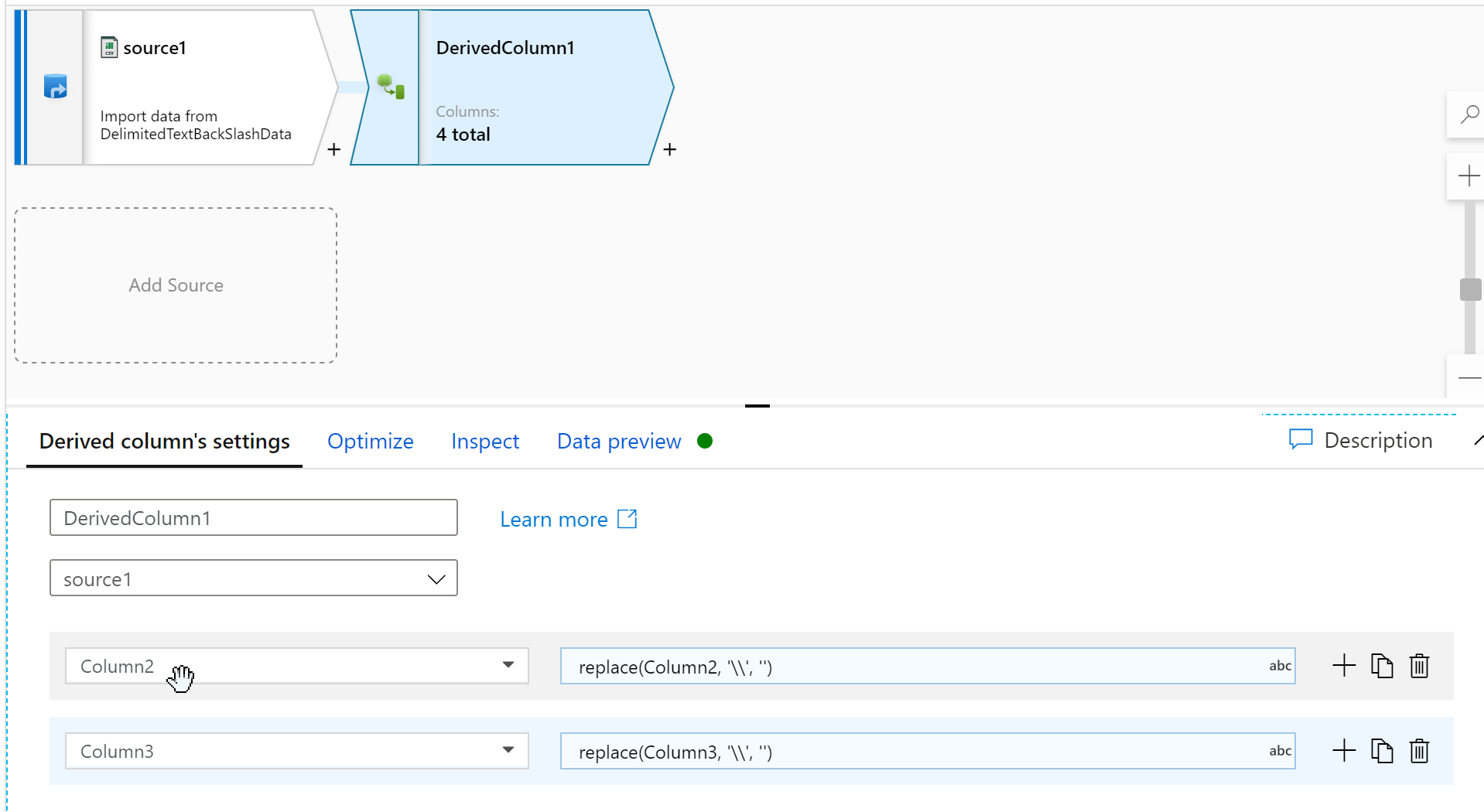

We have bad data from a source that is unlikely to fix it on their end. Multiple columns contain values such as `01F\` or `8239\`, and their spec says the backslash is part of the value. It is not meant to be an escape character, which is how Azure Data Factory's delimited text format treats a backslash by default.

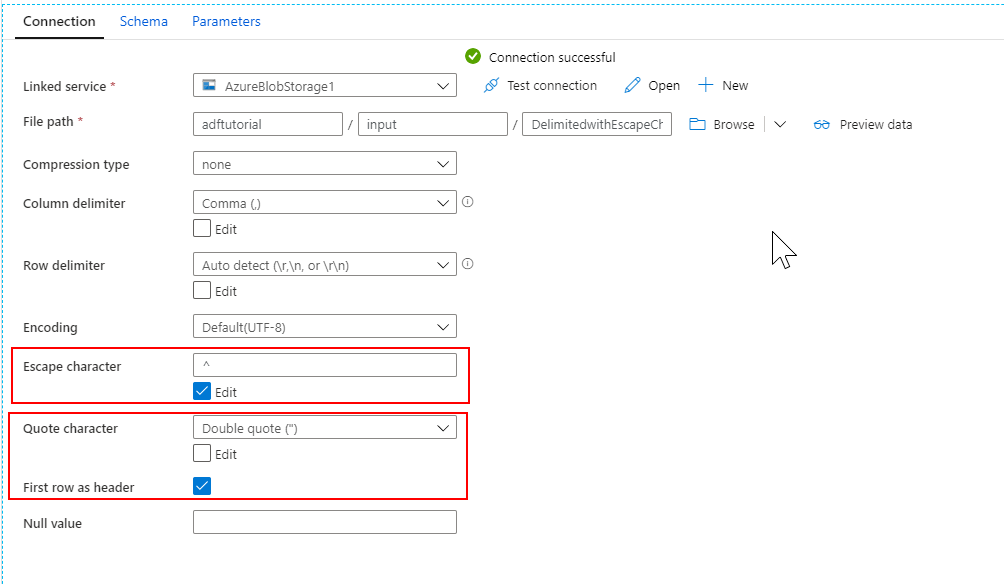

Otherwise the files are well-formed: they are comma-delimited, every value is enclosed in double quotes, and rows end with normal newline characters. Only the backslash breaks from the usual conventions. For example:

```
"Column 1","Column 2","Column 3","Column 4"
"John","01F\","34","NY"
"Jane","3K","8239\","CA"
```

| Column 1 | Column 2 | Column 3 | Column 4 |
|----------|----------|----------|----------|
| `"John"` | `"01F\"` | `"34"` | `"NY"` |
| `"Jane"` | `"3K"` | `"8239\"` | `"CA"` |
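For reference, the sample splits into exactly the four columns in the table when the backslash is treated as an ordinary character. Here is a minimal sketch using Python's standard `csv` module. It is only a stand-in to show the file itself is parseable, not what ADF runs internally. Its default dialect uses `"` as the quote character and has no escape character:

```python
import csv
import io

# Sample rows copied from the file above (raw string, so each \ stays a single backslash).
SAMPLE = r'''"Column 1","Column 2","Column 3","Column 4"
"John","01F\","34","NY"
"Jane","3K","8239\","CA"
'''

# Default dialect: quotechar '"', no escapechar, so the backslash is kept in the value.
rows = list(csv.reader(io.StringIO(SAMPLE)))

for row in rows:
    print(" | ".join(row))
# Column 1 | Column 2 | Column 3 | Column 4
# John | 01F\ | 34 | NY
# Jane | 3K | 8239\ | CA
```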

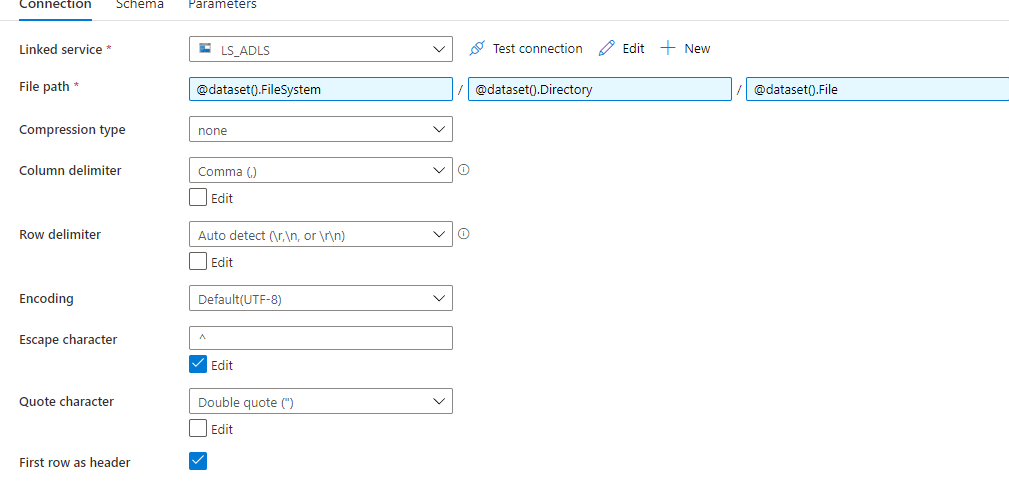

In Azure Data Factory we are trying to make it stop treating `\` as an escape character. (When it is treated as an escape character, `\"` is read as an escaped quote instead of the end of the value, so the column right after the one with the backslash gets pulled into the same column.) In the dataset settings we can see where to set the escape character to "No escape character".
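The column merge described above can be reproduced outside ADF. This is a hedged sketch with Python's standard `csv` module, not ADF's parser, so the exact merged text may differ from what ADF shows. Setting `escapechar="\\"` makes the backslash escape the closing quote, and each data row comes back with three fields instead of four:

```python
import csv
import io

SAMPLE = r'''"Column 1","Column 2","Column 3","Column 4"
"John","01F\","34","NY"
"Jane","3K","8239\","CA"
'''

# Treat backslash as an escape character (ADF's default for delimited text).
# \" becomes a literal quote, so the field runs past where the source intends it to end.
rows = list(csv.reader(io.StringIO(SAMPLE), escapechar="\\"))

for row in rows:
    print(len(row), row)
# The header still has 4 fields; each data row collapses to 3.
```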

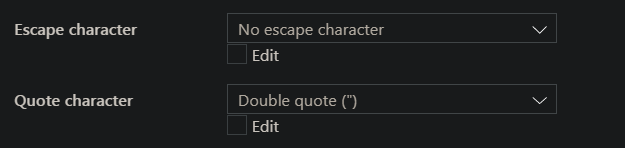

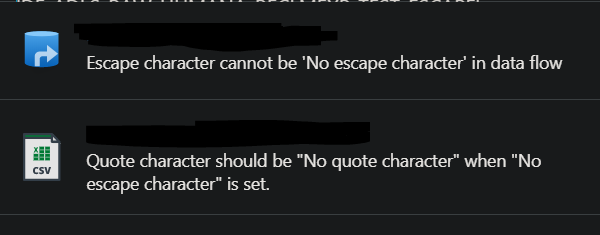

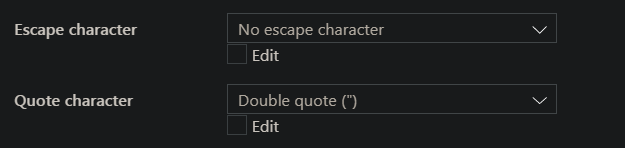

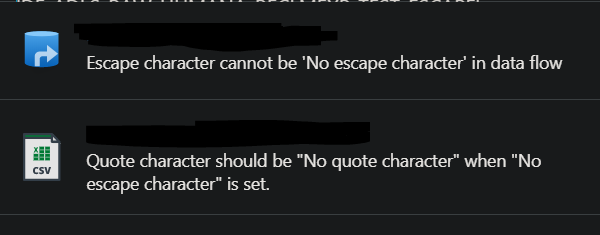

However, when we add that dataset to our data flow and try to preview the data there, we get an error saying that the data flow does not allow "no escape character", and that the quote character must be set to "no quote character" when there is no escape character.

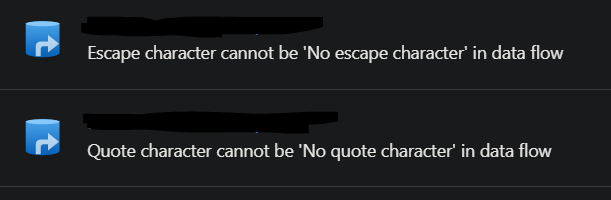

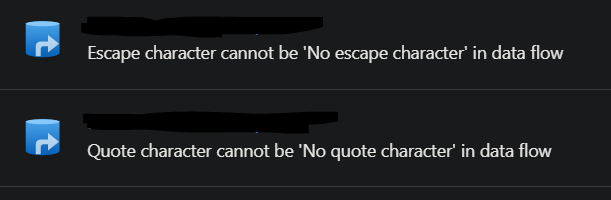

If we go back and also set "no quote character" (which we don't actually want; we only tried it to see whether it would work), we get a different error saying that the data flow cannot have both no escape character and no quote character.
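Since the data flow appears to insist on an escape character, one workaround we could test is pre-processing the file so that every literal backslash is doubled before the data flow reads it. This is untested against ADF. It assumes ADF's parser reads `\\` as one literal backslash when the escape character is `\`, and `escape_backslashes` is a hypothetical helper name. A minimal Python sketch, again using the `csv` module as a stand-in reader:

```python
import csv
import io

SAMPLE = r'''"Column 1","Column 2","Column 3","Column 4"
"John","01F\","34","NY"
"Jane","3K","8239\","CA"
'''


def escape_backslashes(text: str) -> str:
    # Hypothetical pre-processing step. Per the source's spec a backslash is
    # always part of the value, so every backslash can safely be doubled; a
    # parser that uses backslash as its escape character then reads each
    # pair back as a single literal backslash.
    return text.replace("\\", "\\\\")


fixed = escape_backslashes(SAMPLE)

# Read the rewritten text with backslash as the escape character (stand-in for ADF).
rows = list(csv.reader(io.StringIO(fixed), escapechar="\\"))
for row in rows:
    print(" | ".join(row))
# Column 1 | Column 2 | Column 3 | Column 4
# John | 01F\ | 34 | NY
# Jane | 3K | 8239\ | CA
```

In practice this would have to run as a separate step that rewrites the file before the data flow reads it.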

Are these two options supposed to work in Azure Data Factory data flows? Or are there additional settings somewhere else that we need to update to make this work?