Hello @Birajdar, Sujata,

Thanks for the question and for using the Microsoft Q&A platform.

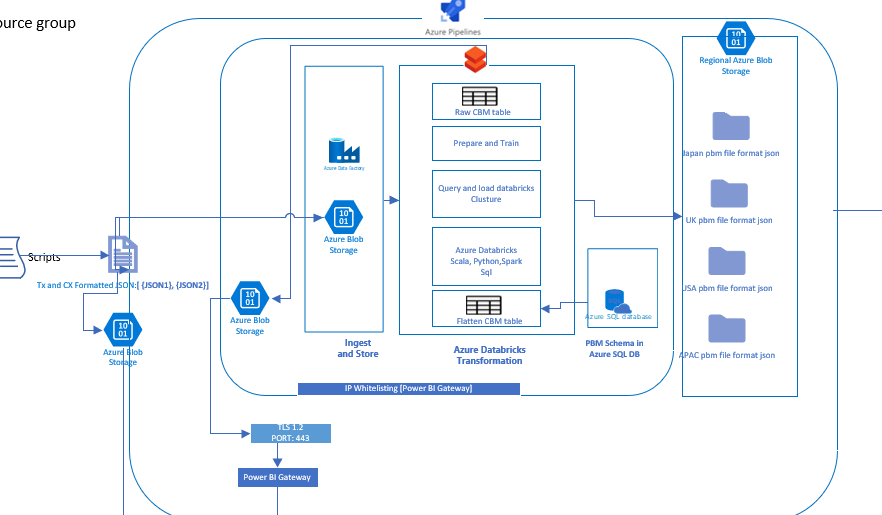

A DataFrame not being persistent is by design, and I think that is one of the key reasons why operations on ADB (Azure Databricks) are faster than on Hadoop. If you want, though, you can always write some audit data to blob storage to keep an eye on the status. If you are concerned about a compute issue occurring while the processing is happening, the head/worker design should take care of that. I do understand that, since you are transferring 50 million records, you definitely do not want to hit an error late in the processing that makes you redo the whole thing.
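To make the audit idea a bit more concrete, here is a minimal sketch in plain Python. It assumes the audit directory is a path backed by your blob storage (for example a mounted container); the function names, the one-file-per-batch layout, and the status values are all hypothetical and only meant as an illustration, not a definitive implementation.

```python
import json
import time
from pathlib import Path


def write_audit_record(audit_dir, batch_id, status, record_count):
    """Write one small JSON file per batch so progress can be inspected later.

    audit_dir is assumed to be a path backed by blob storage (hypothetical).
    The file is overwritten on each call, so it always holds the latest status.
    """
    audit_path = Path(audit_dir)
    audit_path.mkdir(parents=True, exist_ok=True)
    entry = {
        "batch_id": batch_id,
        "status": status,  # e.g. "started", "succeeded", "failed"
        "record_count": record_count,
        "timestamp": time.time(),
    }
    with open(audit_path / f"{batch_id}.json", "w", encoding="utf-8") as f:
        json.dump(entry, f)
    return entry


def completed_batches(audit_dir):
    """Return the ids of batches whose latest audit record says "succeeded"."""
    done = set()
    for audit_file in Path(audit_dir).glob("*.json"):
        with open(audit_file, encoding="utf-8") as f:
            entry = json.load(f)
        if entry["status"] == "succeeded":
            done.add(entry["batch_id"])
    return done
```

On a rerun you could call `completed_batches(...)` first and skip anything already marked as succeeded, so a late failure does not force you to redo the whole transfer.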

If I were you, I would invest in a validation script to validate/transform the data and find the odd data records (if any). You can also take advantage of the many workers running on ADB when you partition the data. Please look at the input blobs and see whether you can partition on container, date, etc.
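As a rough illustration of what such a validation script might look like, here is a small plain-Python sketch. The field names (`id`, `region`, `amount`) and the rules are assumptions, since I do not know your schema; the same checks could be expressed as DataFrame filters on ADB.

```python
def validate_record(record, required_fields=("id", "region", "amount")):
    """Return a list of problems found in one record (empty list means valid).

    The required fields and the numeric check on "amount" are hypothetical.
    """
    problems = []
    for field in required_fields:
        if record.get(field) in (None, ""):
            problems.append(f"missing {field}")
    amount = record.get("amount")
    if amount not in (None, ""):
        try:
            float(amount)
        except (TypeError, ValueError):
            problems.append("amount is not numeric")
    return problems


def split_records(records):
    """Separate records into valid ones and odd ones (with their problems)."""
    valid, odd = [], []
    for record in records:
        problems = validate_record(record)
        if problems:
            odd.append((record, problems))
        else:
            valid.append(record)
    return valid, odd
```

Running this on a sample of the input before the full transfer gives you a list of odd records to inspect, instead of discovering them halfway through the 50 million rows.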

I also see that you are writing the data to different regions (the end goal). I suggest you start with the region with the least data: it can work as a trial for your validation scripts/runbook, and you will also establish a baseline for how long it will take.

Please do let me know how it goes.
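The "smallest region first" idea could be sketched like this. It assumes you already know an approximate record count per region; `process_region` is a hypothetical placeholder for whatever actually copies one region's data.

```python
import time


def run_smallest_first(region_counts, process_region):
    """Process regions in ascending order of record count and time each one.

    region_counts maps region name -> approximate record count.
    process_region is a caller-supplied function (hypothetical here).
    Returns a dict of region -> elapsed seconds, in processing order.
    """
    timings = {}
    for region in sorted(region_counts, key=region_counts.get):
        start = time.perf_counter()
        process_region(region)
        timings[region] = time.perf_counter() - start
    return timings
```

The timing for the first (smallest) region, divided by its record count, gives a rough per-record baseline you can use to estimate the larger regions.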

Thanks

Himanshu

-------------------------------------------------------------------------------------------------------------------------

- Please don't forget to accept or upvote whenever the information provided helps you. Original posters help the community find answers faster by identifying the correct answer.

- Want a reminder to come back and check responses? Here is how to subscribe to a notification

- If you are interested in joining the VM program and help shape the future of Q&A: Here is how you can be part of Q&A Volunteer Moderators