Hi @Brad,

There are many possible causes here, and it is difficult to narrow them down without knowing the full setup of the environment. However, I can give you some information that should help you troubleshoot the issue.

First, I would make sure all nodes are fully up to date with the latest patches. This helps rule out any issue that has already been resolved.

Next, I would confirm that your networking is properly set up between sites. We frequently see stretched configurations that are not designed according to our best practices. These configurations have caused numerous issues with stretched clusters, so it is worth taking the time to verify. The most common misconfigurations we see are multiple paths between sites, Storage Replica (SR) traffic using unintended NICs, attempting to use a stretched L2 network for the cluster hosts (this is OK for the VM traffic, though), and bandwidth bottlenecks between sites. The requirements can be found here - https://video2.skills-academy.com/en-us/azure-stack/hci/concepts/host-network-requirements#stretched-clusters

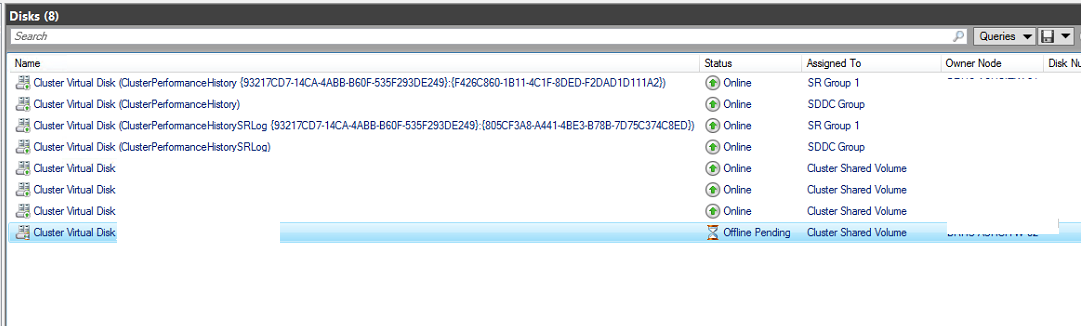

Another thing to consider: does this issue happen without SR configured? If so, that is a simpler configuration to troubleshoot. If not, it could point to an issue with the stretch configuration. If you are using synchronous replication, you could also try asynchronous replication to see if it changes the behavior.
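Before changing anything, it may help to confirm how SR is currently configured. The following is a minimal, read-only sketch, assuming it is run from an elevated PowerShell session on one of the cluster nodes with the Storage Replica cmdlets available; the property names selected are the ones I would expect in the output, so adjust them if yours differ.

```powershell
# List each replication group with its current mode (synchronous or asynchronous) and status.
Get-SRGroup | Select-Object Name, ReplicationMode, ReplicationStatus

# Show which groups are partnered and in which direction replication flows.
Get-SRPartnership
```

Neither command changes the configuration; switching the replication mode is a separate step that you would plan for a maintenance window.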

As for data to collect, the best option is the SDDC diagnostics - https://github.com/PowerShell/PrivateCloud.DiagnosticInfo. This gives you the best overall data, as you can review the cluster and SR logs for clues to what is happening. Start with the logs on the node you are attempting to move the resource from. Hopefully this leads you to an answer, but if you need additional help, I would suggest opening a case so we can dig deeper into the logs with you.
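If it helps, here is a minimal sketch of collecting the SDDC diagnostics with that module. The cluster name and output path are placeholders I made up for illustration, and it assumes the node you run it from can reach the PowerShell Gallery and that you are in an elevated session.

```powershell
# Install the diagnostics module from the PowerShell Gallery (one-time step).
Install-Module -Name PrivateCloud.DiagnosticInfo

# Placeholder values: substitute your own cluster name and a folder with enough free space.
$clusterName = 'MyStretchCluster'
$outputPath  = 'C:\SDDCDiag'

# Gather cluster, storage, and event log data from the cluster nodes into the output folder.
Get-SddcDiagnosticInfo -ClusterName $clusterName -WriteToPath $outputPath
```

Running the collection shortly after reproducing the failed move should make the relevant events easier to find.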

Hope this helps!

Trent