Understanding (the Lack of) Distributed File Locking in DFSR

Ned here again. Today’s post is probably going to generate some interesting comments. I’m going to discuss the absence of a multi-host distributed file locking mechanism within Windows, and specifically within folders replicated by DFSR.

Some Background

- Distributed File Locking – this refers to the concept of having multiple copies of a file on several computers and when one file is opened for writing, all other copies are locked. This prevents a file from being modified on multiple servers at the same time by several users.

- Distributed File System Replication – DFSR operates in a multi-master, state-based design. In state-based replication, each server in the multi-master system applies updates to its replica as they arrive, without exchanging log files (it instead uses version vectors to maintain “up-to-dateness” information). No one server is ever arbitrarily authoritative after initial sync, so it is highly available and very flexible on various network topologies.

- Server Message Block - SMB is the common protocol used in Windows for accessing files over the network. In simplified terms, it’s a client-server protocol that makes use of a redirector to have remote file systems appear to be local file systems. It is not specific to Windows and is quite common – a well known non-Microsoft example is Samba, which allows Linux, Mac, and other operating systems to act as SMB clients/servers and participate in Windows networks.
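The "version vectors" mentioned in the DFSR bullet above can be made a little more concrete with a small Python sketch. This is the generic textbook idea only, not DFSR's actual data structure or wire format; the type alias and the function names (`dominates`, `compare`, `merge`) are invented for illustration.

```python
from typing import Dict

# Hypothetical representation: server name -> highest update counter
# this replica has seen from that server.
VersionVector = Dict[str, int]


def dominates(a: VersionVector, b: VersionVector) -> bool:
    """True if replica 'a' has seen every update that replica 'b' has seen."""
    return all(a.get(server, 0) >= count for server, count in b.items())


def compare(a: VersionVector, b: VersionVector) -> str:
    """Classify two replicas as equal, one newer, or concurrently updated."""
    a_covers_b = dominates(a, b)
    b_covers_a = dominates(b, a)
    if a_covers_b and b_covers_a:
        return "equal"
    if a_covers_b:
        return "a_newer"
    if b_covers_a:
        return "b_newer"
    # Each side has updates the other has not seen yet.
    return "concurrent"


def merge(a: VersionVector, b: VersionVector) -> VersionVector:
    """Combine knowledge from both replicas after they have synced."""
    return {s: max(a.get(s, 0), b.get(s, 0)) for s in a.keys() | b.keys()}
```

The "concurrent" result is the interesting one for this post: it is the case where two servers each accepted a change the other did not know about, and nothing in the replication layer was able to prevent it.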

It’s important to make a clear delineation of where DFSR and SMB live in your replicated data environment. SMB allows users to access their files, and it has no awareness of DFSR. Likewise, DFSR (using the RPC protocol) keeps files in sync between servers and has no awareness of SMB. Don’t confuse distributed locking, as defined in this post, with Opportunistic Locking.

So here’s where things can go pear-shaped, as the Brits say.

Since users can modify data on multiple servers, and since each Windows server only knows about a file lock on itself, and since DFSR doesn’t know anything about those locks on other servers, it becomes possible for users to overwrite each other’s changes. DFSR uses a “last writer wins” conflict algorithm, so someone has to lose and the person to save last gets to keep their changes. The losing file copy is chucked into the ConflictAndDeleted folder.

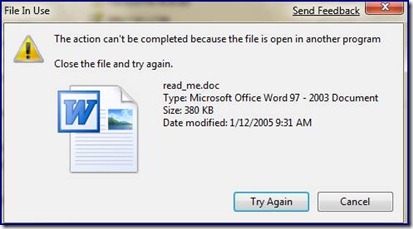
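As a rough illustration of the "last writer wins" behavior described above, here is a minimal Python sketch. It is a toy model under stated assumptions, not DFSR's implementation: the `FileVersion` class, the `resolve_last_writer_wins` function, and the tie-break on server name are all hypothetical, and the plain list stands in for the ConflictAndDeleted folder.

```python
from dataclasses import dataclass
from typing import List, Tuple


@dataclass(frozen=True)
class FileVersion:
    server: str      # server where the save happened
    user: str        # who saved it
    saved_at: float  # save time, in seconds since an arbitrary epoch
    content: str


def resolve_last_writer_wins(
    a: FileVersion, b: FileVersion
) -> Tuple[FileVersion, FileVersion]:
    """Return (winner, loser); the most recent save wins.

    Assumption: ties on time are broken by server name so that every
    server would pick the same winner independently.
    """
    winner = max(a, b, key=lambda v: (v.saved_at, v.server))
    loser = b if winner is a else a
    return winner, loser


# Two users edit the same document on different servers.
alice = FileVersion("SRV-A", "alice", saved_at=100.0, content="Alice's edits")
bob = FileVersion("SRV-B", "bob", saved_at=160.0, content="Bob's edits")

conflict_and_deleted: List[FileVersion] = []  # stand-in for the folder
winner, loser = resolve_last_writer_wins(alice, bob)
conflict_and_deleted.append(loser)  # Alice's copy is preserved, but not live
```

Note that neither user did anything wrong here. Each held a perfectly valid lock on their own server; the conflict only becomes visible when the two versions meet during replication.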

Now, this is far less common than people like to believe. Typically, true shared files are modified in a local environment; in the branch office or in the same row of cubicles. They are usually worked on by people on the same team, so people are generally aware of colleagues modifying data. And since they are usually in the same site, the odds are much higher that all the users working on a shared doc will be using the same server. Windows SMB handles the situation here. When a user has a file locked for modification and his coworker tries to edit it, the other user will get an error like:

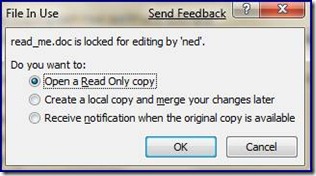

And if the application opening the file is really clever, like Word 2007, it might give you:

DFSR does have a mechanism for locked files, but it is only within the server’s own context. As I’ve discussed in a previous post, DFSR will not replicate a file in or out if its local copy has an exclusive lock. But this doesn’t prevent anyone on another server from modifying the file.

Back on topic, the issue of shared data being modified geographically does exist, and for some folks it’s pretty gnarly. We’re occasionally asked why DFSR doesn’t handle this locking and take care of everything with a wave of the magic wand. It turns out this is an interesting and difficult scenario to solve for a multi-master replication system. Let’s explore.

Third-Party Solutions

There are some vendor solutions that take on this problem, which they typically tackle through one or more of the following methods*:

- Use of a broker mechanism

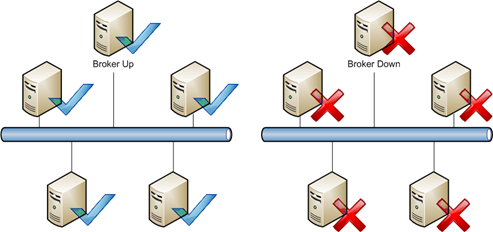

Having a central ‘traffic cop’ allows one server to be aware of all the other servers and which files they have locked by users. Unfortunately this also means that there is often a single point of failure in the distributed locking system.

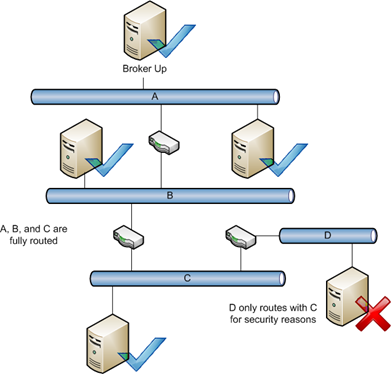

- Requirement for a fully routed network

Since a central broker must be able to talk to all servers participating in file replication, this removes the ability to handle complex network topologies. Ring topologies and multi hub-and-spoke topologies are not usually possible. In a non-fully routed network, some servers may not be able to directly contact each other or a broker, and can only talk to a partner who himself can talk to another server – and so on. This is fine in a multi-master environment, but not with a brokering mechanism.

- Are limited to a pair of servers

Some solutions limit the topology to a pair of servers in order to simplify their distributed locking mechanism. For larger environments this may not be feasible.

- Make use of agents on clients and servers

- Do not use multi-master replication

- Do not make use of MS clustering

- Make use of specialty appliances

* Note that I say typically! Please do not post death threats because you have a solution that does/does not implement one or more of those methods!
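To show why the broker approach in the list above tends to become a single point of failure, here is a deliberately tiny Python sketch. It does not model any particular vendor's product; `LockBroker`, `BrokerUnavailable`, and the `online` flag are all hypothetical names used only to make the trade-off visible.

```python
from typing import Dict, Optional, Tuple


class BrokerUnavailable(Exception):
    """Raised when a file server cannot reach the central broker."""


class LockBroker:
    """Toy central 'traffic cop' that tracks which server/user holds each file."""

    def __init__(self) -> None:
        self._locks: Dict[str, Tuple[str, str]] = {}  # path -> (server, user)
        self.online = True  # flip to False to simulate a broker or WAN outage

    def _require_online(self) -> None:
        if not self.online:
            raise BrokerUnavailable("central lock broker is unreachable")

    def acquire(self, path: str, server: str, user: str) -> bool:
        """Try to lock 'path'; False means someone else already holds it."""
        self._require_online()
        holder = self._locks.get(path)
        if holder is not None and holder != (server, user):
            return False
        self._locks[path] = (server, user)
        return True

    def release(self, path: str, server: str, user: str) -> None:
        """Release the lock if this server/user is the current holder."""
        self._require_online()
        if self._locks.get(path) == (server, user):
            del self._locks[path]

    def holder(self, path: str) -> Optional[Tuple[str, str]]:
        """Who holds the lock right now, if anyone."""
        self._require_online()
        return self._locks.get(path)
```

While the broker is up, a second server asking for the same file gets a clean "no". Once `online` is False, every server fails to lock anything at all, which is exactly the availability cost described above. It also shows the routing requirement: every call goes straight from a file server to the broker, so every server needs a path to it.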

Deeper Thoughts

As you think further about this issue, some fundamental issues start to crop up. For example, if we have four servers with data that can be modified by users in four sites, and the WAN connection to one of them goes offline, what do we do? The users can still access their individual servers – but should we let them? We don’t want them to make changes that conflict, but we definitely want them to keep working and making our company money. If we arbitrarily block changes at that point, no users can work even though there may not actually be any conflicts happening! There’s no way to tell the other servers that the file is in use and you’re back at square one.
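The four-site dilemma above can be reduced to a few lines of Python. This is only a thought experiment under simple assumptions: a lock is considered safe only if the requesting server can reach every other server, and the `strict` flag (a hypothetical policy switch, like the function name itself) decides what happens when it cannot.

```python
from typing import Dict, Set


def can_open_for_write(
    server: str,
    all_servers: Set[str],
    reachable: Dict[str, Set[str]],
    strict: bool,
) -> bool:
    """Decide whether a user on 'server' may open a file for writing.

    strict=True  -> refuse whenever any peer cannot be told about the lock.
    strict=False -> allow anyway and accept the risk of a later conflict.
    """
    peers = all_servers - {server}
    unreachable = peers - reachable.get(server, set())
    if not unreachable:
        return True  # everyone can be told about the lock
    return not strict


servers = {"A", "B", "C", "D"}

# Site D's WAN link is down: A, B and C still see each other, D sees nobody.
reachable = {
    "A": {"B", "C"},
    "B": {"A", "C"},
    "C": {"A", "B"},
    "D": set(),
}

blocked_everywhere = not any(
    can_open_for_write(s, servers, reachable, strict=True) for s in servers
)
allowed_everywhere = all(
    can_open_for_write(s, servers, reachable, strict=False) for s in servers
)
```

With the strict policy, one dead link stops writes on all four servers, including the three that can still talk to each other. With the lenient policy, everyone keeps working, but the user at site D may be editing the same file as someone else, and we are back to last writer wins.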

Then there’s SMB itself and the error handling of reporting locks. We can’t really change how SMB reports sharing violations as we’d break a ton of applications and clients wouldn’t understand new extended error messages anyways. Applications like Word 2007 do some undercover trickery to figure out who is locking files, but the vast majority of applications don’t know who has a file in use (or even that SMB exists. Really.). So when a user gets the message ‘This file is in use’ it’s not particularly actionable – should they all call the help desk? Does the help desk have access to all the file servers to see which users are accessing files? Messy.

Since we want multi-master for high availability, a broker system is less desirable; we might need to have something running on all servers that allows them all to communicate even through non-fully routed networks. This will require very complex synchronization techniques. It will add some overhead on the network (although probably not much) and it will need to be lightning fast to make sure that we are not holding up the user in their work; it needs to outrun file replication itself - in fact, it might need to actually be tied to replication somehow. It will also have to account for server outages that are network related and not server crashes, somehow.

And then we’re back to special client software for this scenario that better understands the locks and can give the user some useful info (“Go call Susie in accounting and tell her to release that doc”, “Sorry, the file locking topology is broken and your administrator is preventing you from opening this file until it’s fixed”, etc). Getting this to play nicely with the millions of applications running in Windows will definitely be interesting. There are plenty of OS’s that would not be supported or get the software – Windows 2000 is out of mainstream support and XP soon will be. Linux and Mac clients wouldn’t have this software until they felt it was important, so the customer would have to hope their vendors made something analogous.

The Big Finish

Right now the easiest way to control this situation in DFSR is to use DFS Namespaces to guide users to predictable locations, with a consistent namespace. By correctly configuring your DFSN site topology and server links, you force users to all share the same local server and only allow them to access remote computers when their ‘main’ server is down. For most environments, this works quite well. As an alternative to DFSR, SharePoint is an option because of its check-out/check-in system. BranchCache (coming in Windows Server 2008 R2 and Windows 7) may be an option for you as it is designed for easing the reading of files in a branch scenario, but in the end the authoritative data will still live on one server only – more on this here. And again, those vendors have their solutions.

We’ve heard you loud and clear on the distributed locking mechanism though, and just because it’s a difficult task does not mean we’re not going to try to tackle it. You can feel free to discuss third party solutions in our comments section, but keep in mind that I cannot recommend any for legal reasons. Plus I’d love to hear your brainstorms – it’s a fun geeky topic to discuss, if you’re into this kind of stuff.

- Ned ‘Be Gentle!’ Pyle

Comments

Anonymous

February 21, 2009

PingBack from http://www.ditii.com/2009/02/21/understanding-distributed-file-locking-in-dfsr/

Anonymous

February 23, 2009

The comment has been removed

Anonymous

April 08, 2009

We are about to go live with a DFS setup spread across multiple sites, and have turned to Peerlock to solve our file locking issues. While most of our data won't be worked on collaboratively, quite a bit of our AutoCAD drawings will be. However, I would really look forward to a solution built into DFS, although it's unfortunate that it will probably require an OS purchase and upgrade to implement.

Anonymous

April 09, 2009

Thanks jmiles. If you get a chance, we'd love to hear your feedback here in a month or so with how that implementation worked out for you.

Anonymous

May 05, 2009

Well, we've been running with Peerlock for about 3 weeks now, and everything appears to be working great. We had to ensure that the temporary locking files weren't being replicated, and we had to turn off the saving of deleted files in the Conflict&Deleted folders, but once that's done, it appears to work excellent. CPU usage is up to ~35% consistently, where it used to be 5%, on a file server serving 800 GB to about 100 users. I'd be more than willing to answer any questions anyone has about it.

Anonymous

May 05, 2009

@jmiles: Could you share some more information - especially how many servers you do the locking on, how these servers are interconnected, and how many files you replicate? There seem to be a lot of links to this product when Googling for a solution of this sort, but unfortunately almost no user experiences, so your experience would be very interesting :)

Anonymous

May 06, 2009

The comment has been removed

Anonymous

May 06, 2009

Whoops, hit Tab and then space, and it entered the comment. Downsides:

- Peerlock can run as a GUI, or a service, but not both. This means to run on a server that is logged off, you need to use the service, but to make configuration changes, you have to stop the service, start the GUI, make changes, close the GUI, start the service. A hassle, but apparently changing in a future version.

- Logging isn't terrible, but isn't great either. You have options to change log file size, but not type of logging, or verbosity.

- CPU usage. As mentioned before, the program is using about 35% CPU constantly, on brand new PowerEdge 2900 servers with a single Quad Core CPU.

- Price - it was somewhere around $990 CAD per license, and you need one for each server.

As far as sizing goes, we are replicating about 250 GB in one folder group, and 500 GB in another. These are mostly AutoCAD/Office documents, and are accessed by around 120 users daily. There's about 5 GB of changes on a busy day according to our incrementals. As always, feel free to ask for more info.

Anonymous

May 08, 2009

Thank you for your information! :)

Anonymous

May 15, 2009

For me, file locking as an issue has been replaced by replication and sharing violations when using Autodesk files. Has anyone found a fix for this?

Anonymous

May 15, 2009

The comment has been removed

Anonymous

August 18, 2009

I've got an issue with the file locking wrt namespace configuration, etc. I've tried to configure the namespace so that clients go to the main server in the office, as it is the first server and should have the lowest cost. The remote office has a higher cost as it is off-site. This works most of the time, but the following scenario has been giving me problems: whenever an MS Office file is opened on the primary server, subsequent attempts at opening this file are redirected to the off-site server. This was documented in the DFS-R help file Win2k3R2. Can this behavior be changed or did I forget something? I don't mind if the local copy is read-only, but this behavior of opening the file on the remote site is causing a lot of update conflicts.

Anonymous

August 18, 2009

Can you paste in the help file text that says this? As an interim fix, you can always explore using Target Priority. That will make your preferred server always at the top of the referral list and the other server would only be used if the prioritized server was completely unavailable (down, dead network, etc).

Anonymous

November 19, 2009

The comment has been removed

Anonymous

November 19, 2009

Yes, after the first user on A locks a file for WRITE, any subsequent users connecting to A will not be able to modify the file. At this point you are not dealing with DFSR in any way, but instead SMB behavior. And thanks, I too hope you are well. :)

Anonymous

November 20, 2009

The comment has been removed

Anonymous

November 20, 2009

It's possible that they weren't actually locking the file though - it depends on the user application to decide about locks. For example, two people can edit the same TXT file simultaneously if they are both using NOTEPAD.EXE, because that application does not lock files. It's not always a hard and fast rule.

Anonymous

November 24, 2009

Hi Jmiles, we have also been using Peerlock with DFS-R, but recently it has begun doing strange things like not releasing locks and causing DFS-R to think an older file on the other server is newer, etc. Can you please let me know your contact details? I would like to talk to you. Also, has anyone used Peer Sync? It purports to add a lot of features in a replication engine, such as versioning, etc.

Anonymous

December 02, 2009

AndrewN, you can reach me by emailing to jadus01 at hotmail.com (obfuscated to avoid spam bots). We had quite a few problems after initially setting up Peerlock, with backlogs of 1000's of files caused by Peerlock, but have that mostly figured out though. We're still looking at alternatives, and I think we're going to begin testing GlobalScape's WAFS product, in place of DFSR. It will do the replication, as well as file locking, and hopefully do so without all the sharing violations. One of the biggest things is apparently it will replicate files after a save, even if they're still open.

Anonymous

December 02, 2009

The comment has been removed

Anonymous

February 23, 2010

Just as an update regarding the Globalscape product, we will most likely not be purchasing it. After some research, the following negatives were determined:

- An agent and server is required, so for our hub site, we would need to store 900GB of data for the server, and 900GB of data for the agent

- Only 1 file transfer occurs at a time, as opposed to DFSR's 16 concurrent transfers

- The software cannot be run as a service on Windows Server 2008. It will only run as an application with a logged on user.

I'm a little disappointed, as there really aren't many other options for branch office replication. It must not be as popular as I had assumed when we chose this infrastructure. I just find it hard to believe that many companies would sacrifice LAN speed access for centralised data, when collaboration is necessary. DFSR for the most part is a great solution, it's just that I don't think it was designed for our use case, with 100's of files open at once causing sharing violations and backlogs. If only we could tweak how often opened files are retried (and extend that period), and have native file locking.

Anonymous

September 09, 2010

JMiles, hi, it's been a few months now. Would you still recommend Globalscape as a product? How resource hungry is it, please? I have a client currently using Peersync (80% CPU usage!!) but no file locking - they are having a nightmare. They are a small company, 12 users in one site and 6 in another, with a server at each site. Many thanks in advance.

Anonymous

October 15, 2010

The comment has been removed