Assess an Enterprise With Data Migration Assistant–Part 1: Prerequisites

In my previous posts, How to Consolidate JSON Assessment Reports and Report on Your Consolidated Assessments With PowerBI, I showed how to run scaled DMA assessments across your estate and report on the results using PowerBI.

In the next series of posts I’ll take you through the enhancements to the process, scripts and reports to help you really get the value of those scaled assessments and see the efforts involved in upgrading or migrating to a modern data platform.

This series is split into four posts, of which this is the first.

- Part 1 - Prerequisites

- Part 2 - Running an assessment

- Part 3 - Loading the assessment data

- Part 4 - Reporting on the assessment data with PowerBI

Prerequisites

The following is a list of prereqs which are required in order to perform a successful scaled assessment.

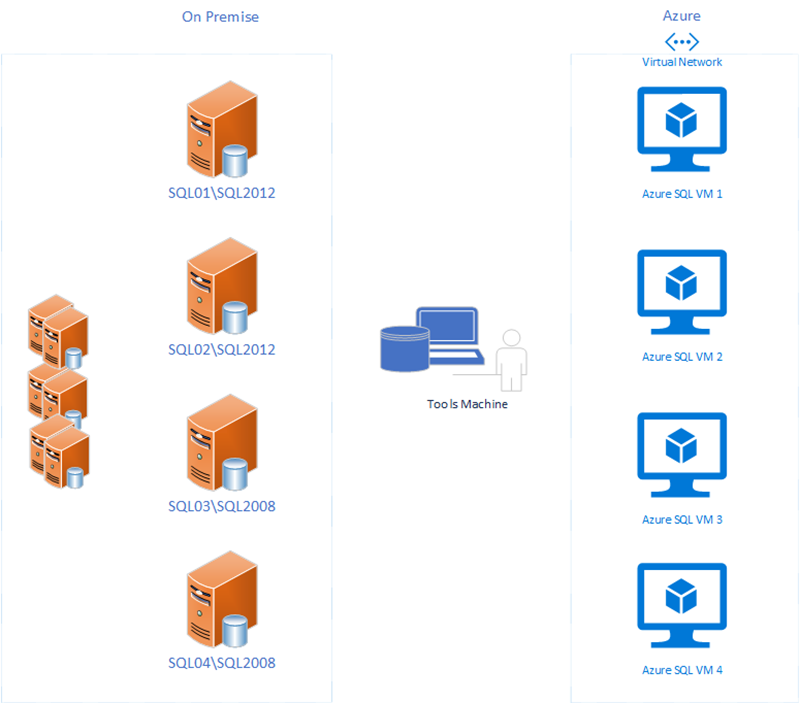

- Designate a tools machine on your network from which DMA will be run. Ensure this machine has connectivity to your SQL Server targets.

- Data Migration Assistant

- PowerShell v5

- .NET Framework v4.5 or above

- SSMS 17.0 or above

- PowerBI desktop
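As a quick sanity check, the PowerShell and .NET Framework requirements can be verified from a PowerShell prompt on the tools machine. This is a minimal sketch rather than part of the DMA scripts; it relies on the documented registry `Release` value, which is 378389 or higher when .NET Framework 4.5 or later is installed.

```powershell
# PowerShell v5 or later is required.
$psOk = $PSVersionTable.PSVersion.Major -ge 5

# .NET Framework 4.5 or later reports a Release value of 378389 or higher.
$ndpKey  = 'HKLM:\SOFTWARE\Microsoft\NET Framework Setup\NDP\v4\Full'
$release = (Get-ItemProperty -Path $ndpKey -Name Release -ErrorAction SilentlyContinue).Release
$netOk   = [bool]($release -ge 378389)

# Summarise the result as a single object.
$prereqCheck = [pscustomobject]@{
    PowerShellVersion = $PSVersionTable.PSVersion.ToString()
    PowerShellOk      = $psOk
    DotNetRelease     = $release
    DotNetOk          = $netOk
}
$prereqCheck
```

DMA, SSMS and PowerBI desktop are not covered by this check and still need to be confirmed separately.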

The following image illustrates that assessments can be run on-premises or against Azure VMs, as long as the tools machine has network connectivity to the target on the TCP port that SQL Server is listening on.
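One way to confirm that connectivity before starting an assessment is a simple TCP test from the tools machine. The sketch below uses the built-in `Test-NetConnection` cmdlet; the function name `Test-SqlPort` and the host names in the usage note are hypothetical, and port 1433 is only the default for a default instance, so adjust it for named instances or custom ports.

```powershell
function Test-SqlPort {
    param(
        [Parameter(Mandatory)] [string[]] $ComputerName,
        [int] $Port = 1433   # assumption: default SQL Server port
    )

    foreach ($name in $ComputerName) {
        # TcpTestSucceeded is True when the TCP handshake on the port completes.
        $result = Test-NetConnection -ComputerName $name -Port $Port -WarningAction SilentlyContinue
        [pscustomobject]@{
            Target    = $name
            Port      = $Port
            Reachable = $result.TcpTestSucceeded
        }
    }
}
```

For example, `Test-SqlPort -ComputerName 'SQLHOST01', 'SQLHOST02'` returns one row per target, and any row with `Reachable` set to `False` points to a firewall or routing issue to resolve first.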

Loading the PowerShell modules

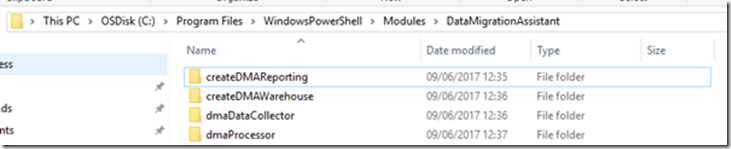

Saving the PowerShell modules into the PowerShell modules directory enables you to call the modules without the need to explicitly load them before use.

To load the modules follow these steps:

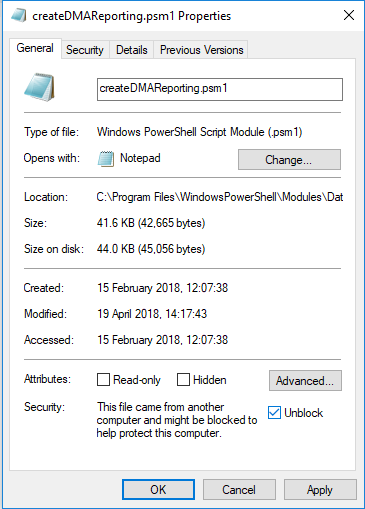

Navigate to C:\Program Files\WindowsPowerShell\Modules and create a folder called DataMigrationAssistant. Place the PowerShell modules into this directory.

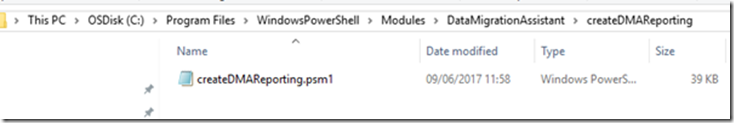

Each folder contains the respective psm1 file.

e.g.

Note: The folder and file must have the same name. Also note that once the files have been saved in your WindowsPowerShell directory, you may be required to "unblock" the file so that PowerShell can successfully load it.

PowerShell should now automatically load these modules when a new PowerShell session starts.
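The unblock step and the naming rule from the note above can be scripted. The following is a sketch under the assumption that the modules sit under the path given earlier; the helper name `Test-ModuleFolderName` is hypothetical. `Unblock-File` is a built-in cmdlet, and writing under Program Files normally needs an elevated session.

```powershell
# Assumed location, taken from the path given in the steps above.
$moduleRoot = 'C:\Program Files\WindowsPowerShell\Modules\DataMigrationAssistant'

function Test-ModuleFolderName {
    param([Parameter(Mandatory)] [string] $Root)

    # Report whether each .psm1 file shares its name with the folder that holds it.
    Get-ChildItem -Path $Root -Filter '*.psm1' -Recurse -File | ForEach-Object {
        [pscustomobject]@{
            ModuleFile = $_.FullName
            NamesMatch = ($_.BaseName -eq $_.Directory.Name)
        }
    }
}

if (Test-Path -Path $moduleRoot) {
    # Remove the "downloaded from the internet" mark from every file.
    Get-ChildItem -Path $moduleRoot -Recurse -File | Unblock-File

    # List each module file and whether its folder name matches.
    Test-ModuleFolderName -Root $moduleRoot
}
```

Any row where `NamesMatch` is `False` indicates a folder or file that needs renaming.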

Create an inventory of SQL Servers

Before running the PowerShell script to assess your SQL Servers, you first need to build an inventory of the SQL Servers that you want to assess.

This inventory can be in one of 2 forms.

- Excel CSV file

- SQL Server table

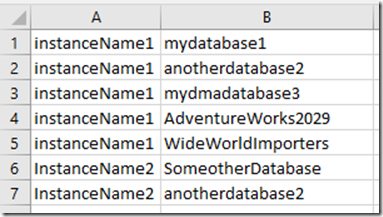

If using a CSV file

When using a CSV file to import the data, ensure that it contains only two columns of data, Instance Name and Database Name, and that it has no header row.
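To check that a header-less file parses into exactly those two columns, you can read it back with `Import-Csv` and supply the column names yourself. This is only a sketch for validating the file; the sample path, instance names and database names are made up, and the assessment scripts may read the file differently.

```powershell
# Hypothetical sample inventory written to the temp folder for illustration.
$csvPath = Join-Path ([System.IO.Path]::GetTempPath()) 'DatabaseInventory.csv'
Set-Content -Path $csvPath -Value @(
    'SQLHOST01\INST1,SalesDb'
    'SQLHOST02,HRDb'
)

# The file has no header row, so the column names are supplied with -Header.
$inventory = @(Import-Csv -Path $csvPath -Header 'InstanceName', 'DatabaseName')

# Flag any row where either column is empty.
$badRows = @($inventory | Where-Object { -not $_.InstanceName -or -not $_.DatabaseName })

$inventory | Format-Table -AutoSize
"Rows: $($inventory.Count), rows with missing values: $($badRows.Count)"
```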

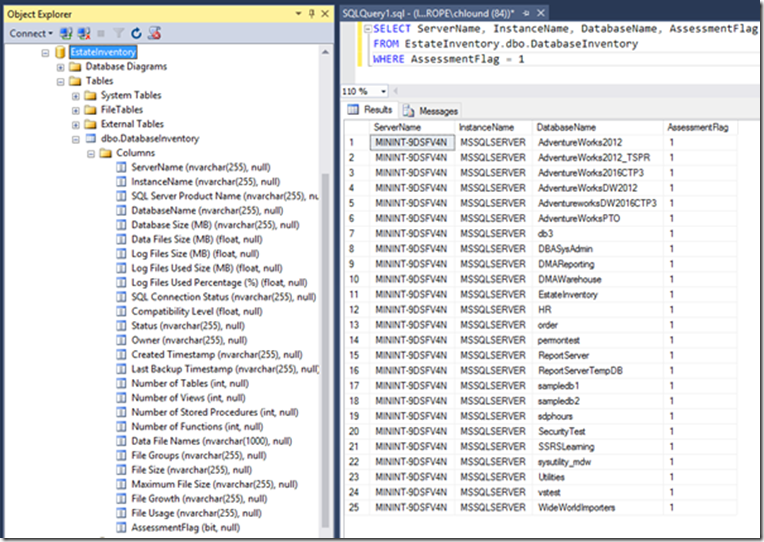

If using a SQL Server table

Create a database called EstateInventory and a table called DatabaseInventory. The table containing this inventory data can have any number of columns, as long as the following 4 columns exist:

- ServerName

- InstanceName

- DatabaseName

- AssessmentFlag

If this database is not on the tools machine, ensure that the tools machine has network connectivity to this SQL Server instance.
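The database and table can be created from PowerShell without any extra modules by using the .NET `System.Data.SqlClient` classes. The sketch below makes several assumptions that are not stated in this post: the column data types, the `NOT NULL` constraints, Windows authentication, and the helper names `Invoke-SqlNonQuery` and `Initialize-EstateInventory` are all illustrative. Only the database, table and column names come from the text above.

```powershell
# Column types are assumptions; the post only names the four required columns.
$createTableSql = @'
IF OBJECT_ID(N'dbo.DatabaseInventory', N'U') IS NULL
CREATE TABLE dbo.DatabaseInventory (
    ServerName     nvarchar(255) NOT NULL,
    InstanceName   nvarchar(255) NOT NULL,
    DatabaseName   nvarchar(255) NOT NULL,
    AssessmentFlag bit           NOT NULL
);
'@

function Invoke-SqlNonQuery {
    param(
        [Parameter(Mandatory)] [string] $ConnectionString,
        [Parameter(Mandatory)] [string] $Sql
    )
    $connection = New-Object System.Data.SqlClient.SqlConnection($ConnectionString)
    try {
        $connection.Open()
        $command = $connection.CreateCommand()
        $command.CommandText = $Sql
        [void] $command.ExecuteNonQuery()
    }
    finally {
        # Always release the connection, even if the statement fails.
        $connection.Dispose()
    }
}

function Initialize-EstateInventory {
    param([Parameter(Mandatory)] [string] $ServerInstance)

    # Assumption: Windows authentication with rights to create a database.
    $base = "Server=$ServerInstance;Integrated Security=True"
    $createDbSql = "IF DB_ID(N'EstateInventory') IS NULL CREATE DATABASE EstateInventory;"

    Invoke-SqlNonQuery -ConnectionString "$base;Database=master" -Sql $createDbSql
    Invoke-SqlNonQuery -ConnectionString "$base;Database=EstateInventory" -Sql $createTableSql
}
```

Calling `Initialize-EstateInventory -ServerInstance 'localhost'` (a placeholder instance name) creates the database and table only if they do not already exist, so it is safe to run more than once.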

The benefit of using a SQL Server table over the CSV file is that you can use the AssessmentFlag column to control which instances and databases get picked up for assessment, which makes it easier to separate assessments into smaller chunks. You can then run multiple assessments (see the next post on running an assessment), which is easier than maintaining multiple CSV files.

Keep in mind that depending on the number of objects and their complexity, an assessment can take a very long time (hours+), so separating into manageable chunks is a good idea.
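If you want to plan those chunks up front, a small helper can split an inventory list into fixed-size batches. This is an illustrative sketch only: the function name `Split-Inventory` and the batch size are hypothetical, and how each batch is then fed to an assessment depends on the scripts covered in the next post.

```powershell
function Split-Inventory {
    param(
        [Parameter(Mandatory)] [object[]] $Items,
        [ValidateRange(1, 2147483647)] [int] $BatchSize = 10
    )

    for ($i = 0; $i -lt $Items.Count; $i += $BatchSize) {
        $end = [Math]::Min($i + $BatchSize, $Items.Count) - 1
        # The leading comma emits the batch as one array instead of unrolling it.
        , @($Items[$i..$end])
    }
}

# Example: 25 hypothetical databases split into batches of at most 10.
$databases = 1..25 | ForEach-Object { "Database$_" }
$batches   = @(Split-Inventory -Items $databases -BatchSize 10)

foreach ($batch in $batches) {
    "Batch of $($batch.Count): $($batch[0]) .. $($batch[-1])"
}
```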

In the next post we will look at how to run an assessment against your SQL Server enterprise in preparation for loading and reporting.

Get the PowerShell scripts here

Script Disclaimer

The sample scripts provided here are not supported under any Microsoft standard support program or service. All scripts are provided AS IS without warranty of any kind. Microsoft further disclaims all implied warranties including, without limitation, any implied warranties of merchantability or of fitness for a particular purpose. The entire risk arising out of the use or performance of the sample scripts and documentation remains with you. In no event shall Microsoft, its authors, or anyone else involved in the creation, production, or delivery of the scripts be liable for any damages whatsoever (including, without limitation, damages for loss of business profits, business interruption, loss of business information, or other pecuniary loss) arising out of the use of or inability to use the sample scripts or documentation, even if Microsoft has been advised of the possibility of such damages. Please seek permission before reposting these scripts on other sites/repositories/blogs.