Achieving balance in testing software

Like nearly everything in life, testing software is a balancing act. A software package has many different aspects that need to be validated, and there are many different tools and approaches for doing that validation. Spend too much time on any one part of the product, or with any single tool or approach, and you may end up with major gaps in your results.

An interview question I occasionally use with college students presents a scenario: it is their first morning on the job, and they have two hours to test the Windows calculator. They can have any information they want, but at the end of the two hours they must give their new manager a recommendation.

Most candidates do a good job of taking a structured approach to functionally testing the calculator, moving from basic functions to advanced functions to error conditions. Very few recognize the need to also think about performance, usability, localization, help, being a good citizen within the operating system, and so on. Even a fairly simple application requires several different approaches to validate it thoroughly.

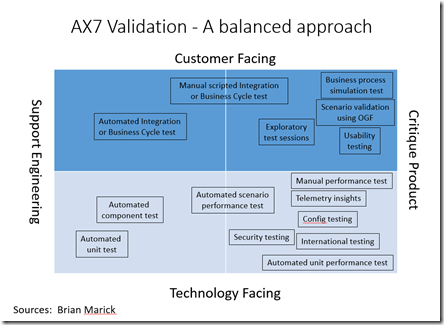

A tool that I've found helpful to explain this to others internally throughout the development life of AX'7' is a variation of Brian Marick's testing matrix. Please see https://www.exampler.com/old-blog/2003/08/21/#agile-testing-project-1 for the original post from Brian. My version with some different types of tests layered into the matrix is below.

An effective testing program has activities in all four quadrants. You can visualize emphasizing a particular aspect by moving the vertical center line to the left or right. Move it to the left to make the Critique Product quadrants larger, and you take on regression risk as the engineering team makes changes. Move it to the right, and even with very good engineering support you can't be confident that the product actually meets the customer's needs. I've been on projects that made each of these mistakes. Moving the horizontal center line up or down produces different project risks, but risks nonetheless.

The matrix can also help you realize that no single tool or approach can be all things for the project. For example, many software professionals tend to believe that the same scenario-oriented tests we use to manually Critique Product are the best tests to automate to Support Engineering. While these tests can give you confidence in the product, they are expensive to automate and maintain, slow to run, and imprecise (when one fails, it's difficult to pinpoint the cause). A regression suite is far more effective when it is made up primarily of component and unit tests. In future posts, I will talk more about the qualities of good test automation and the composition of a regression suite.

If an interview candidate were to draw Marick's testing matrix and describe how they would use it to approach testing the Windows calculator, that candidate's chances of getting hired would be very good!