Lessons Learned From Benchmarking Fast Machine Learning Algorithms

This post is authored by Miguel Fierro, Data Scientist, Mathew Salvaris, Data Scientist, Guolin Ke, Associate Researcher, and Tao Wu, Principal Data Science Manager, all at Microsoft.

Boosted decision trees are responsible for more than half of the winning solutions in machine learning challenges hosted at Kaggle, according to KDNuggets. In addition to superior performance, these algorithms have practical appeal as they require minimal tuning. In this post, we evaluate two popular tree boosting software packages: XGBoost and LightGBM, including their GPU implementations. Our results, based on tests on six datasets, are summarized as follows:

- XGBoost and LightGBM achieve similar accuracy metrics.

- LightGBM has lower training time than XGBoost and its histogram-based variant, XGBoost hist, for all test datasets, in both CPU and GPU implementations. The training time difference between the two libraries depends on the dataset, and can be as large as a factor of 25.

- The XGBoost GPU implementation does not scale well to large datasets and ran out of memory in half of the tests.

- XGBoost hist may be significantly slower than the original XGBoost when feature dimensionality is high.

All our code is open-source and can be found in this repo. We will explain the algorithms behind these libraries and evaluate them across different datasets. Do you like your machine learning to be quick? Then keep reading.

The Basics of Boosted Decision Trees

Gradient boosting is a machine learning technique that produces a prediction model in the form of an ensemble of weak learners, optimizing a differentiable loss function. One of the most popular types of gradient boosting is boosted decision trees, in which the ensemble is made up of weak decision trees. There are two different strategies to grow the trees: level-wise and leaf-wise. The level-wise strategy grows the tree level by level, so the nodes closer to the root are split first. The leaf-wise strategy grows the tree by splitting the leaf with the highest loss change. Level-wise growth is usually better for smaller datasets, where leaf-wise growth tends to overfit. Leaf-wise growth tends to excel on larger datasets, where it is considerably faster than level-wise growth.

Level-wise growth strategy vs leaf-wise growth strategy. The level-wise strategy adds complexity by extending the depth of the tree level by level. In contrast, the leaf-wise strategy generates branches by optimizing a loss.
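To make the difference between the two growth orders concrete, here is a minimal sketch in Python. The toy tree, the node names and the gain values are all made up for illustration, and neither XGBoost nor LightGBM is implemented this way internally; the sketch only shows the order in which nodes would be split.

```python
import heapq
from collections import deque

# Hypothetical toy data: node -> (gain if split, left child, right child).
# Nodes that do not appear as keys cannot be split any further.
CANDIDATE_SPLITS = {
    "root": (10.0, "A", "B"),
    "A": (1.0, "A1", "A2"),
    "B": (8.0, "B1", "B2"),
    "B1": (6.0, "B1a", "B1b"),
    "B2": (0.5, "B2a", "B2b"),
}


def level_wise_order(splits, root, max_splits):
    """Split breadth-first: all nodes at one depth before any deeper node."""
    order, queue = [], deque([root])
    while queue and len(order) < max_splits:
        node = queue.popleft()
        if node not in splits:
            continue  # this node is a final leaf
        _, left, right = splits[node]
        order.append(node)
        queue.extend([left, right])
    return order


def leaf_wise_order(splits, root, max_splits):
    """Split best-first: always pick the current leaf with the largest gain."""
    order = []
    # heapq is a min-heap, so gains are stored negated.
    heap = [(-splits[root][0], root)] if root in splits else []
    while heap and len(order) < max_splits:
        _, node = heapq.heappop(heap)
        _, left, right = splits[node]
        order.append(node)
        for child in (left, right):
            if child in splits:
                heapq.heappush(heap, (-splits[child][0], child))
    return order


print(level_wise_order(CANDIDATE_SPLITS, "root", 3))
print(leaf_wise_order(CANDIDATE_SPLITS, "root", 3))
```

With a budget of three splits, the level-wise order finishes the first level (root, A, B) even though splitting A gains little, while the leaf-wise order goes deeper along the high-gain branch (root, B, B1).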

A key challenge in training boosted decision trees is the computational cost of finding the best split for each leaf. Conventional techniques find the exact split for each leaf, and require scanning through all the data in each iteration. A different approach approximates the split by building histograms of the features. That way, the algorithm doesn’t need to evaluate every single value of the features to compute the split, but only the histogram bins, whose number is bounded. This approach turns out to be much more efficient for large datasets, without adversely affecting accuracy.
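The histogram idea can be sketched in a few lines of Python. This is a simplified illustration, not the code used by either library: it assumes a single feature, a squared-error objective (so per-bin target sums stand in for gradient statistics) and equal-width bins, whereas real implementations choose bin boundaries more carefully. The function names are hypothetical.

```python
def build_histogram(xs, ys, n_bins):
    """One pass over the rows: accumulate a count and a target sum per bin."""
    lo, hi = min(xs), max(xs)
    width = (hi - lo) / n_bins or 1.0  # avoid a zero width for constant features
    counts = [0] * n_bins
    sums = [0.0] * n_bins
    for x, y in zip(xs, ys):
        b = min(int((x - lo) / width), n_bins - 1)
        counts[b] += 1
        sums[b] += y
    # Upper edge of each bin, used as the candidate split thresholds.
    edges = [lo + width * (i + 1) for i in range(n_bins)]
    return counts, sums, edges


def best_histogram_split(counts, sums, edges):
    """Scan bin boundaries only; the cost depends on n_bins, not on the row count."""
    total_n, total_s = sum(counts), sum(sums)
    best_gain, best_edge = 0.0, None
    left_n, left_s = 0, 0.0
    for i in range(len(counts) - 1):
        left_n += counts[i]
        left_s += sums[i]
        right_n, right_s = total_n - left_n, total_s - left_s
        if left_n == 0 or right_n == 0:
            continue
        # Reduction in squared error obtained by splitting at this boundary.
        gain = left_s**2 / left_n + right_s**2 / right_n - total_s**2 / total_n
        if gain > best_gain:
            best_gain, best_edge = gain, edges[i]
    return best_edge, best_gain


xs = [1, 2, 3, 4, 5, 6, 7, 8]
ys = [0, 0, 0, 0, 10, 10, 10, 10]
counts, sums, edges = build_histogram(xs, ys, n_bins=4)
print(best_histogram_split(counts, sums, edges))
```

On this toy data the scan evaluates three bin boundaries instead of seven distinct feature values and still separates the two groups, choosing the boundary at 4.5. With millions of rows and a few hundred bins, the gap between the two candidate counts becomes much larger.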

XGBoost started in 2014, and it has become popular due to its use in many winning Kaggle competition entries. Originally XGBoost was based on a level-wise growth algorithm, but it has recently added an option for leaf-wise growth that implements split approximation using histograms. We refer to this version as XGBoost hist. LightGBM is a more recent arrival, started in March 2016 and open-sourced in August 2016. It is based on a leaf-wise algorithm and histogram approximation, and has attracted a lot of attention due to its speed (Disclaimer: Guolin Ke, a co-author of this blog post, is a key contributor to LightGBM). Apart from multithreaded CPU implementations, GPU acceleration is now available in both XGBoost and LightGBM.
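For readers who want to try these variants, the sketch below shows how they are typically selected through each library's parameter dictionary. The parameter names come from the XGBoost and LightGBM documentation of roughly this period, and newer releases may rename or deprecate some of them (for example `gpu_hist`), so check the version you have installed. The numeric values are placeholders chosen for illustration, not the settings used in this benchmark.

```python
# XGBoost, original algorithm: exact splits, level-wise growth bounded by depth.
xgb_exact_params = {
    "tree_method": "exact",
    "max_depth": 8,  # placeholder value
}

# XGBoost hist: histogram-based splits with leaf-wise ("lossguide") growth.
xgb_hist_params = {
    "tree_method": "hist",
    "grow_policy": "lossguide",
    "max_depth": 0,     # 0 removes the depth limit
    "max_leaves": 255,  # placeholder value
}

# XGBoost hist on GPU: same settings, different tree method.
xgb_gpu_hist_params = dict(xgb_hist_params, tree_method="gpu_hist")

# LightGBM: leaf-wise growth and histograms are the default behavior.
lgbm_params = {
    "num_leaves": 255,  # placeholder value
    "max_depth": -1,    # -1 means no depth limit
}

# LightGBM on GPU (requires a GPU-enabled build of the library).
lgbm_gpu_params = dict(lgbm_params, device="gpu")
```

These dictionaries would be passed as the `params` argument of `xgboost.train` and `lightgbm.train` respectively.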

Evaluating XGBoost and LightGBM

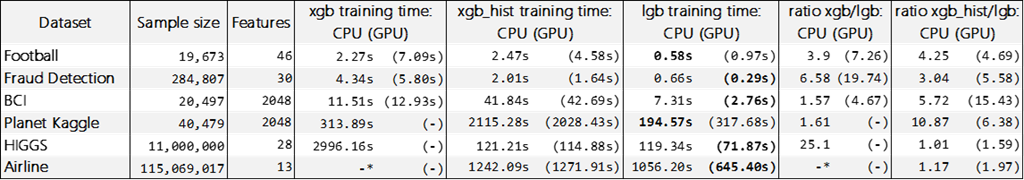

We performed machine learning experiments across six different datasets. These experiments are in the Python notebooks in our GitHub repo. The repo also lists the specific builds of XGBoost and LightGBM that we used, along with the steps to install them and set up the experiments. We tried classification and regression problems with both CPU and GPU. All experiments were run on an Azure NV24 VM with 24 cores, 224 GB of memory and NVIDIA M60 GPUs. The operating system was Ubuntu 16.04. In all experiments, we found XGBoost and LightGBM had similar accuracy metrics (F1-scores are shown here), so we focused on training times in this blog post. The table below shows training times and the training time ratios between the two libraries in both CPU and GPU implementations.

Benchmark of XGBoost vs LightGBM training times and training time ratios between the libraries on CPU and GPU. Where the XGBoost GPU training time does not appear (-), we got an out-of-memory error. On the Airline dataset with XGBoost on CPU (-*), we stopped the computation after 5 hours. The best training time for each dataset is in boldface text.
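Training times and ratios like the ones in the table can be collected with a small timing helper. The sketch below is a generic illustration with hypothetical function names, not the exact harness from our notebooks; it measures wall-clock time around a single training call.

```python
import time


def time_training(train_fn, *args, **kwargs):
    """Run train_fn once and return (result, elapsed wall-clock seconds)."""
    start = time.perf_counter()
    result = train_fn(*args, **kwargs)
    return result, time.perf_counter() - start


def time_ratio(baseline_seconds, candidate_seconds):
    """How many times longer the baseline took than the candidate."""
    return baseline_seconds / candidate_seconds


# Stand-in workload; in practice train_fn would be a library training call.
_, elapsed = time_training(sum, range(1_000_000))
print(f"elapsed: {elapsed:.4f} s")
```

In a real comparison, `train_fn` would be something like `xgboost.train` or `lightgbm.train` with its parameters and dataset, and `time_ratio` would be applied to the two measured times. A single run is noisy, so repeating each measurement and reporting the spread is a sensible refinement.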

Learnings from the Benchmark

As the saying goes, no benchmark is true, but some of them are useful. From our experiments, we found the leaf-wise implementation faster than the level-wise one in general. However, the CPU results for the BCI and Planet Kaggle datasets, as well as the GPU result for BCI, show that XGBoost hist takes considerably longer than standard XGBoost. This is due to the large size of the datasets, as well as the large number of features, which causes considerable memory overhead for XGBoost hist.

We also found that the XGBoost GPU implementation did not scale well, as it gave out-of-memory errors on three of the larger datasets. In addition, we had to terminate XGBoost CPU training on the Airline dataset after 5 hours.

Finally, comparing LightGBM and XGBoost, we found that LightGBM was faster in all tests where XGBoost and XGBoost hist finished, by as much as 25 times against XGBoost and 15 times against XGBoost hist.

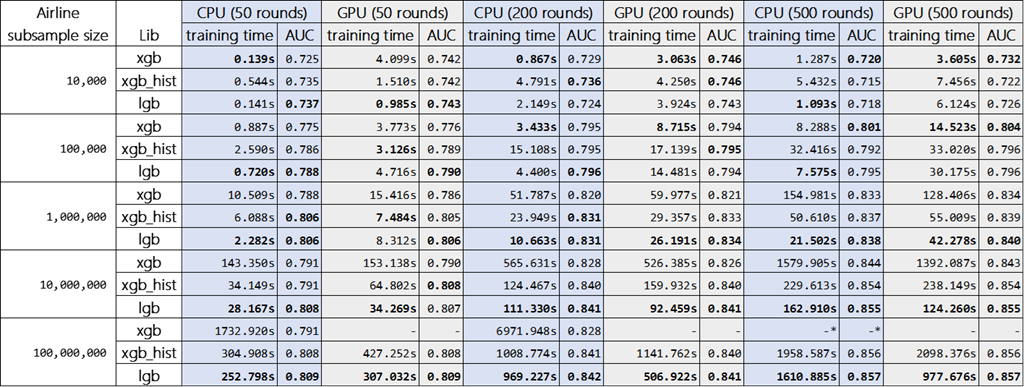

We wanted to investigate the effect of different data sizes and numbers of rounds on the performance of CPU vs GPU. In the next table, we show the results of using subsamples of the Airline dataset. When comparing the CPU and GPU training times, we see that the GPU version of LightGBM outperforms the CPU one when the dataset is large and the number of rounds is high. As expected, with small datasets, the additional I/O overhead of copying the data between RAM and GPU memory overshadows the speed benefits of running the computation on GPU. Here, we did not observe any performance gains from using XGBoost hist on GPU. As a side note, the standard implementation of XGBoost (exact split instead of histogram-based) does not benefit from GPU either, as compared to multi-core CPU, per this recent paper.

Benchmark of XGBoost, XGBoost hist and LightGBM training time and AUC for different data sizes and rounds. As before, XGBoost on GPU for 100 million rows is not shown due to an out-of-memory error (-). For XGBoost with 100 million rows and 500 rounds, we stopped the computation after 5 hours (-*). The best training time and the highest AUC for each sample size are in boldface text.

Overall, we find that LightGBM is faster than XGBoost, in both CPU and GPU implementations. Furthermore, if XGBoost is used, we would recommend keeping a close eye on feature dimensionality and memory consumption. The significant speed advantage of LightGBM translates into the ability to do more iterations and/or quicker hyperparameter search, which can be very useful if you have a limited time budget for optimizing your model or want to experiment with different feature engineering ideas.

Happy coding!

Miguel, Mathew, Guolin & Tao.

Acknowledgment: We would like to thank Steve Deng, David Smith and Huseyin Yildiz from Microsoft for their assistance and feedback on this post.

Comments

- Anonymous

July 25, 2017

Awesome post. Thank you for sharing.

- Anonymous

July 31, 2017

Thank you, I hope this was useful information

- Anonymous

July 26, 2017

Great benchmark! It would've been nice to recognize previous related benchmarks though. e.g. https://github.com/szilard/GBM-perf, https://github.com/szilard/GBM-multicore, https://github.com/szilard/benchm-ml

- Anonymous

July 31, 2017

There are many benchmarks out there, we haven't tested the ones you posted

- Anonymous

July 29, 2017

Parameter tuning can be key to achieving the best results. Both libraries offer parameter tuning guidelines: XGBoost (http://xgboost.readthedocs.io/en/latest/how_to/param_tuning.html) and LightGBM (https://github.com/Microsoft/LightGBM/blob/master/docs/Parameters-tuning.md).