Cloud Datacenter Network Architecture in the Windows Server “8” era

In the previous blog, we talked about what makes Windows Server “8” a great cloud platform and how to actually get your hands dirty building your own mini-cloud. I hope that by now you’ve had a chance to explore the Windows Server “8” cloud infrastructure technologies and are ready to dive a bit deeper into what the new features imply for how a cloud architecture might look in the Windows Server “8” era. We can also start talking more about the “why” and less about the “how”. We’ll start with the networking architecture of the datacenter.

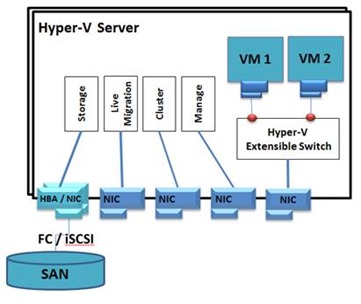

Until today, if you wanted to build a cloud, the best practice recommendation was that each of your Hyper-V hosts should have at least 4-5 different networks. This is because of the need to have security and performance guarantees for the different traffic flows coming out of a Hyper-V server. Specifically, you would have a separate NIC and network for cluster traffic, for live migration, for storage access (that could be either FibreChannel or Ethernet), for out of band management to access the servers, and of course, for your actual VM tenant workloads.

The common practice with Hyper-V today is usually to have physical separation and 4-5 NICs in each server, usually 1GbE. If you wanted resiliency, you’d even double that, and indeed, many customers have added NICs for redundant access to storage and sometimes even more. Eventually, each server ends up looking something like this:
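To put rough numbers on that growth, here is a small sketch comparing physical NIC counts, aggregate bandwidth and switch ports for the one-network-per-flow design and for the two converged approaches discussed below. It assumes five traffic flows, two-member teams for redundancy and a 16-host rack; the `HostDesign` class and all of its values are hypothetical and only illustrate the arithmetic.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class HostDesign:
    """Per-host network layout (hypothetical, illustrative numbers only)."""
    name: str
    logical_networks: int  # networks/traffic flows kept separate on the host
    teams: int             # standalone NICs or NIC teams on the host
    nics_per_team: int     # 1 = no redundancy, 2 = redundant pair
    nic_gbps: int          # link speed of each physical NIC

    def physical_nics(self) -> int:
        return self.teams * self.nics_per_team

    def aggregate_gbps(self) -> int:
        return self.physical_nics() * self.nic_gbps

    def switch_ports(self, hosts: int) -> int:
        # Each physical NIC occupies one port on the top-of-rack switch.
        return self.physical_nics() * hosts


# Assumed: five traffic flows, two-member teams, and the link speeds from the text.
DESIGNS = [
    HostDesign("one network per flow", logical_networks=5, teams=5, nics_per_team=2, nic_gbps=1),
    HostDesign("Approach #1", logical_networks=2, teams=2, nics_per_team=2, nic_gbps=10),
    HostDesign("Approach #2", logical_networks=5, teams=1, nics_per_team=2, nic_gbps=10),
]

if __name__ == "__main__":
    for d in DESIGNS:
        print(f"{d.name}: {d.logical_networks} networks on {d.physical_nics()} NICs, "
              f"{d.aggregate_gbps()} Gbps aggregate, "
              f"{d.switch_ports(hosts=16)} switch ports for 16 hosts")
```

Under these assumptions, the per-flow design needs 10 NICs per host (160 switch ports for 16 hosts), Approach #1 needs 4 (64 ports) and Approach #2 needs 2 (32 ports), while both converged designs also offer more aggregate bandwidth.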

Simplifying the network architecture

Windows Server “8” presents new opportunities to simplify the datacenter network architecture. With 10GbE and Windows Server “8” support for features such as host QoS, Data Center Bridging (DCB), Hyper-V Extensible Switch QoS and isolation policies, and Hyper-V over SMB, you can now rethink the need for separate networks, as well as how many physical NICs you actually need on the server. Let’s take a look at two approaches to simplification.

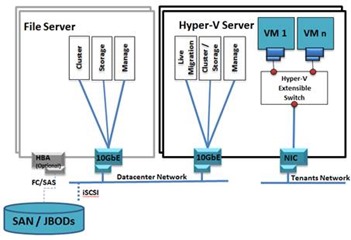

Approach #1: Two networks, Two NICs (or NIC teams) per server

Given enough bandwidth on the NIC (which 10GbE provides), you can now converge your entire datacenter networks into basically two networks, physically isolated: A datacenter network can carry all the storage, live migration, clustering and management traffic flows, and a second network can carry all of the VM tenant generated traffic. You can still apply QoS policies to guarantee minimum traffic for each flow (both at the host OS level for the datacenter native traffic, as well as at the Hyper-V switch level, to guarantee bandwidth for virtual machines. Also, thanks to the Hyper-V support for remote SMB server storage (did you read Jeffrey Snover’s blog?), you can use your Ethernet fabric to access the file servers and can minimize your non-Ethernet fabric. You end up with a much simpler network, which will look something like this:
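As a simplified model of how minimum-bandwidth guarantees behave on the converged datacenter network, the sketch below assumes the guarantees are expressed as relative weights and that spare capacity is shared among busy flows in proportion to those weights (weighted max-min fairness, computed by water-filling). This is not the actual Windows QoS scheduler; the flow names, weights and demands are hypothetical.

```python
def guaranteed_minimums(link_gbps: float, weights: dict[str, int]) -> dict[str, float]:
    """Bandwidth each flow is guaranteed when every flow is busy at once."""
    total = sum(weights.values())
    return {flow: link_gbps * w / total for flow, w in weights.items()}


def allocate(link_gbps: float, weights: dict[str, int],
             demands: dict[str, float]) -> dict[str, float]:
    """Weighted max-min (water-filling) allocation of one link among flows."""
    alloc = {flow: 0.0 for flow in weights}
    active = {flow for flow in weights if demands.get(flow, 0.0) > 0}
    remaining = float(link_gbps)
    while active and remaining > 1e-9:
        total_w = sum(weights[f] for f in active)
        share = {f: remaining * weights[f] / total_w for f in active}
        # Flows that need no more than their current fair share are fully satisfied.
        satisfied = {f for f in active if demands[f] - alloc[f] <= share[f]}
        if not satisfied:
            # Every remaining flow wants more than its share: split what is left by weight.
            for f in active:
                alloc[f] += share[f]
            break
        for f in satisfied:
            need = demands[f] - alloc[f]
            alloc[f] += need
            remaining -= need
        active -= satisfied
    return alloc


# Hypothetical weights for the datacenter-network flows on a 10GbE link.
WEIGHTS = {"storage": 40, "live_migration": 30, "cluster": 20, "management": 10}

if __name__ == "__main__":
    print(guaranteed_minimums(10, WEIGHTS))
    demand = {"storage": 8.0, "live_migration": 6.0, "cluster": 0.5, "management": 0.2}
    for flow, gbps in allocate(10, WEIGHTS, demand).items():
        print(f"{flow}: {gbps:.2f} Gbps")
```

With weights of 40/30/20/10 on a 10 Gbps link, the flows are guaranteed 4, 3, 2 and 1 Gbps under full contention. In the sample demand, the lightly used cluster and management flows take only what they need, and storage and live migration split the remaining 9.3 Gbps in a 40:30 ratio (about 5.31 and 3.99 Gbps).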

Notice that now you have just two networks. There are no hidden VLANs, although you could have them if you wanted to. Also note that the file server obviously still needs to be connected to the actual storage, and there are various options there as well (FC, iSCSI or SAS), but we’ll cover that in another blog.

Once you adopt this approach, you can add more NICs and create teams to make the design resilient to network failures, so you end up with two NIC teams on each server. The new Windows Server “8” feature called Load Balancing and Failover (LBFO) makes NIC teaming easy.
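The resiliency gained from teaming can be illustrated with a toy model: flows are spread across the active team members by hashing their addresses and ports, and when a member fails, its flows are rehashed onto the surviving members. This is a simplified illustration, not LBFO’s actual load-balancing algorithm; the `NicTeam` class, NIC names and addresses are hypothetical.

```python
import zlib
from dataclasses import dataclass, field


@dataclass
class NicTeam:
    """Toy NIC team model (hypothetical; not how LBFO actually distributes traffic)."""
    members: list[str]
    failed: set[str] = field(default_factory=set)

    def active_members(self) -> list[str]:
        return [m for m in self.members if m not in self.failed]

    def member_for(self, flow: tuple[str, str, int, int]) -> str:
        """Pick the team member that carries a (src IP, dst IP, src port, dst port) flow."""
        active = self.active_members()
        if not active:
            raise RuntimeError("team is down: no active members")
        # crc32 gives a hash that is stable across runs, unlike hash() on strings.
        key = "|".join(str(part) for part in flow).encode()
        return active[zlib.crc32(key) % len(active)]

    def fail(self, member: str) -> None:
        self.failed.add(member)


if __name__ == "__main__":
    team = NicTeam(["NIC1", "NIC2"])
    # Hypothetical SMB (TCP 445) flows from one host to a file server.
    flows = [("10.0.0.5", "10.0.0.20", 50000 + i, 445) for i in range(6)]
    print({f[2]: team.member_for(f) for f in flows})
    team.fail("NIC1")
    print({f[2]: team.member_for(f) for f in flows})  # every flow is now on NIC2
```

Modulo hashing is used here only for brevity: it can also move flows between healthy members whenever the number of active members changes.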

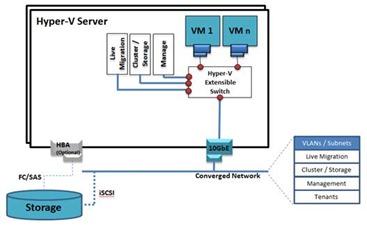

Approach #2: Converging to a single NIC (or NIC team)

Now, if we take this even further down the network convergence road, we could still reduce the number of NICs in the server and use VLANs for running different traffic flows on different networks, with a single NIC or a NIC team. This is again made possible by the fact that you can create additional switch ports on the Hyper-V extensible switch and route host traffic through them, while still applying isolation policies and QoS policies at the switch level. Such a configuration would look like this (and we promise a how-to guide very soon. It’s in the works, and you will need some PowerShell to make it work):
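The converged design can be captured as a small plan: one host-side virtual NIC per traffic flow, each with its own VLAN ID and minimum-bandwidth weight. The sketch below checks such a plan against two assumed design rules: every host vNIC gets a distinct, usable 802.1Q VLAN ID (1-4094), and the host weights stay within a total budget of 100, leaving the rest for VM traffic. The `HostVnic` type, names, VLAN IDs and weights are hypothetical. On a real host, the plan would be applied with Hyper-V PowerShell cmdlets such as `Add-VMNetworkAdapter -ManagementOS`, `Set-VMNetworkAdapterVlan` and `Set-VMNetworkAdapter -MinimumBandwidthWeight`, which this sketch does not call.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class HostVnic:
    """Hypothetical host-side vNIC on the Hyper-V switch for one traffic flow."""
    name: str
    vlan_id: int
    min_bandwidth_weight: int


def validate_plan(vnics: list[HostVnic], weight_budget: int = 100) -> list[str]:
    """Return the problems found in a converged-network plan (empty list means none)."""
    problems: list[str] = []
    owner_of_vlan: dict[int, str] = {}
    for v in vnics:
        # 802.1Q reserves VLAN IDs 0 and 4095.
        if not 1 <= v.vlan_id <= 4094:
            problems.append(f"{v.name}: VLAN ID {v.vlan_id} is not usable")
        elif v.vlan_id in owner_of_vlan:
            problems.append(f"{v.name}: VLAN {v.vlan_id} already used by {owner_of_vlan[v.vlan_id]}")
        else:
            owner_of_vlan[v.vlan_id] = v.name
        if v.min_bandwidth_weight <= 0:
            problems.append(f"{v.name}: weight must be positive")
    # Assumed rule: the host vNICs must fit within the weight budget.
    total = sum(v.min_bandwidth_weight for v in vnics)
    if total > weight_budget:
        problems.append(f"total weight {total} exceeds budget of {weight_budget}")
    return problems


# Hypothetical plan: total weight 80 leaves 20 for VM traffic.
PLAN = [
    HostVnic("Management", vlan_id=10, min_bandwidth_weight=10),
    HostVnic("Cluster", vlan_id=20, min_bandwidth_weight=10),
    HostVnic("LiveMigration", vlan_id=30, min_bandwidth_weight=30),
    HostVnic("Storage", vlan_id=40, min_bandwidth_weight=30),
]

if __name__ == "__main__":
    print(validate_plan(PLAN) or "plan OK")
```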

In summary, Windows Server “8” truly increases the flexibility of datacenter network design. You can still have different networks for different traffic flows, but you don’t have to: you can use QoS and isolation policies, combined with the increased bandwidth of 10GbE, to converge them. In addition, the number of NICs on the server doesn’t have to equal the number of networks you have. You can use VLANs for separation, and you can also use the built-in NIC teaming feature and the Hyper-V extensible switch to create virtual NICs on top of a single NIC or team, separating the traffic that way while keeping your isolation and bandwidth guarantees. It’s up to you to decide which approach works and integrates best with your datacenter.
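Separating networks on one NIC or team relies on IEEE 802.1Q VLAN tagging. Each frame carries a 4-byte tag, inserted after the source MAC address, whose 12-bit VLAN ID identifies the network the frame belongs to and whose 3-bit priority field is what DCB uses to classify traffic. This minimal sketch builds and reads such a tag on raw frame bytes. The frame contents and VLAN numbers are made up for illustration; in practice the tagging is done by the Hyper-V switch or the NIC, not by application code.

```python
import struct
from typing import Optional, Tuple

TPID_8021Q = 0x8100  # EtherType value that marks a frame as 802.1Q-tagged


def tag_frame(frame: bytes, vlan_id: int, priority: int = 0) -> bytes:
    """Insert an 802.1Q tag after the destination and source MAC addresses."""
    if not 1 <= vlan_id <= 4094:
        raise ValueError("usable VLAN IDs are 1-4094")
    if not 0 <= priority <= 7:
        raise ValueError("priority (PCP) is a 3-bit field")
    # Tag Control Information: PCP (3 bits) | DEI (1 bit, left 0) | VLAN ID (12 bits)
    tci = (priority << 13) | vlan_id
    return frame[:12] + struct.pack("!HH", TPID_8021Q, tci) + frame[12:]


def read_tag(frame: bytes) -> Optional[Tuple[int, int]]:
    """Return (vlan_id, priority) for a tagged frame, or None for an untagged one."""
    (tpid,) = struct.unpack_from("!H", frame, 12)
    if tpid != TPID_8021Q:
        return None
    (tci,) = struct.unpack_from("!H", frame, 14)
    return tci & 0x0FFF, tci >> 13


def untag_frame(frame: bytes) -> bytes:
    """Remove the 4-byte 802.1Q tag, restoring the original frame."""
    if read_tag(frame) is None:
        return frame
    return frame[:12] + frame[16:]


if __name__ == "__main__":
    dst = bytes.fromhex("ffffffffffff")
    src = bytes.fromhex("020000000001")  # made-up, locally administered MAC
    frame = dst + src + struct.pack("!H", 0x0800) + b"payload"  # 0x0800 = IPv4
    tagged = tag_frame(frame, vlan_id=30, priority=4)
    print(read_tag(frame), read_tag(tagged), len(tagged) - len(frame))  # None (30, 4) 4
```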

So, what do you think? We would really like to hear from you! Would you converge your networks? If yes, which approach would you take? If not, why? Please comment back on this blog and let’s start a dialog!

Yigal Edery

On behalf of the Windows Server 8 Cloud Infrastructure team

Comments

Anonymous

January 01, 2003

Thomas, isn't it the case that each VLAN counts as one team interface? Would that not limit it? Tom

Anonymous

January 01, 2003

The team is presented to the OS as a single interface. When you use 802.1Q VLAN tagging, there are no limits imposed by the team.

Anonymous

January 01, 2003

@William: I am assuming you're asking if you can move to regular 10GbE. The answer is that it depends... If you move to file servers and SMB, then sure! If you continue to use iSCSI and FC, then the same benefits of a CNA (hardware offloading of the processing) still apply, so it's really a cost/performance choice. If you had other reasons to use CNAs, then you can submit a follow-up question and I'll be happy to answer.

Anonymous

January 01, 2003

Could it be that there is a limitation of 32 VLANs for each NIC team? We are actually building a showcase where our architect planned to have 125 VLANs for the environment, and now it looks like they are limited.

Anonymous

January 01, 2003

Thomas, FWIU, there is a limit of 32 NICs in a single NIC team. I am not aware of any limit on the number of VLANs you can create per team. Thanks! Tom

Anonymous

January 01, 2003

Hi Thomas - There is information at technet.microsoft.com/.../hh831441.aspx and also Josh Adams shows you how to put it all together in PowerShell over at blogs.technet.com/.../building-a-windows-server-2012-cloud-infrastructure-with-powershell-by-josh-adams.aspx HTH, Tom

Anonymous

May 24, 2012

We currently use 10GbE CNA adapters to run IP, iSCSI, and FCoE over a single wire to a converged network switch. So, are you saying that Windows 8 could accomplish the same thing while using a standard Ethernet switch? If so, we'd be interested, as that should translate into quite a bit of cost savings.

Anonymous

April 11, 2013

@Yigal Edery Thanks a lot for your great articles; they are very useful, and because of your help we kicked out vm**** :-) You made a promise: "Such a configuration would look like this (and we promise a how-to guide very soon. It’s in the works, and you will need some PowerShell to make it work)". Unfortunately, I was not able to find this guide... Does it exist? Thanks a lot in advance, kind regards, Thomas