Feeling TiPsy…Testing in Production Success and Horror Stories

One topic I hope to return to many times in this blog is that of TiP, or Testing in Production. For a good introduction to this check out Ken Johnston’s blog entry TIP-ING SERVICES TESTING BLOG #1: THE EXECUTIVE SUMMARY which is an updated version of the executive summary of the ThinkWeek paper that I co-authored with Ken and Ravi Vedula on TiP-ing Service Testing. If you do not want to take the time to check out that link let me sum it up in one sentence:

Let’s test our services in the real world, in the data center and possibly with real users, because our system is too complex to test in the lab (think layering, dependencies, and integration) and the environment it runs in is impossible to model in the lab (think scale, or diversity of users and scenarios).

Yes there is more to it than that, so go read Ken’s excellent blog entry, but in case you’re in a rush I didn’t want to leave you lost and without context.

When an irresistible force meets an immovable object

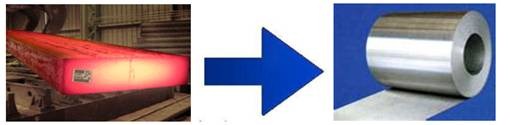

I used to write software to automate hot rolling mills for the steel industry. One type of mill has several (say 4) sequential “stands”, each consisting of a set of steel rolls weighing several tons apiece, like laundry wringers. A 10 ton slab of steel, red hot at 2400 F, barrels down the table towards the first set of rolls, which is set to a gap smaller than the thickness of the slab, then on to the next set of rolls at a narrower gap, and onward until the steel is shooting out the other end (remember conservation of mass) a lot thinner and a bit wider, to be coiled up to make cans or car doors or pipes.

I wrote the code that determined what gaps to set so that we obtained the thickness and properties that the product was supposed to be (known as the level 2 system), but the actual movement of machinery was done by more basic logic programming that we called the level 1 system. Everyone - level 1, level 2, UI or whatever - developed their software the same way. We got the specs, we wrote the code, we tested it out using simulators in-house, we got together and tested out the integrated product using simulators, then we went to the field and tried it out.

Already you may note we were not a software company. We tested our own code, with most engineers never having heard of professional software QA, much less waterfall, agile, RUP, or any other software process. Now when I say we tried it out in the field, it started out innocently enough with dry runs and simply actuating the equipment and making adjustments, but a majority of the software changes were made after the system had been “turn-keyed” and was producing actual product. I saw (and did…yes I did) changes made to software between the time a red hot slab dropped out of the furnace and when it hit the first mill, and that brings me to the exciting part of this story. Gus (name changed), a level 1 engineer, innocently made an off-by-one error so that stand 1 got stand 2’s gap setting, stand 2 got stand 3’s, etc. This meant that the first “bite” was a harsh one, but the worst part was stand 4, which, looking for stand 5’s setting and not finding a stand 5, decided to set its gap to zero. The 10 ton slab, propelled by the multi-ton rolls in stand 3 firmly gripping it, unavoidably hurtled towards stand 4, which was firmly shut, and WHAM. From my seat in the pulpit it was quite a scene, with pieces of mill and steel flying everywhere…U.S. Steel was not happy.
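To make the failure concrete, here is a minimal sketch in Python of that kind of off-by-one, next to the sort of sanity check that would have refused the setup before any steel moved. This is not the mill’s actual level 1 code; the function names, the gap values, and the 50% maximum reduction per stand are all assumptions made up for illustration.

```python
def assign_gaps_buggy(gaps):
    """Reproduce the off-by-one: stand i is handed the setting meant for stand i+1."""
    n = len(gaps)
    assigned = []
    for i in range(n):
        j = i + 1  # the off-by-one
        # A missing setting silently becomes zero, i.e. a fully closed stand.
        assigned.append(gaps[j] if j < n else 0.0)
    return assigned


def assign_gaps_checked(gaps, entry_thickness, max_reduction=0.5):
    """Return the gaps unchanged, or raise if any stand looks physically implausible.

    max_reduction is a hypothetical limit on how much one stand may thin the slab.
    """
    previous = entry_thickness
    for stand, gap in enumerate(gaps, start=1):
        if gap <= 0:
            raise ValueError(f"stand {stand}: gap must be positive, got {gap}")
        if gap < previous * (1 - max_reduction):
            raise ValueError(f"stand {stand}: reduction from {previous} to {gap} is too large")
        previous = gap
    return list(gaps)


intended = [40.0, 25.0, 15.0, 10.0]   # made-up gaps for a made-up 60-unit slab
shifted = assign_gaps_buggy(intended)  # last stand ends up with a gap of zero
```

Passing `shifted` through `assign_gaps_checked` raises on the very first stand, because the “harsh first bite” already exceeds the assumed reduction limit. The point is only that a cheap plausibility check between level 2 and level 1 turns a wrecked mill into a rejected setup.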

The lesson: This was NOT TiP, this was chaos. TiP is not about abdicating the need to test or the need for process (a perhaps common mistake, as you may hear a dev manager or someone else ask, “If we test in production, why do we need QA?”). TiP is about applying the same careful quality sensibilities we have always applied but with some new (even daring) strategies. We still need to be professional and we still need to be QA.

VIP Access Only: Exposure Control

Due to the complexity of a system under test, even if you have a standard pre-release test cycle you still want the assurance of running it in production before exposing it to your user base. Or sometimes it is so complex that production is the only place it will run (I know I will catch flak for that, but even if this can technically always be overcome, it is not always feasible), and again you (the QA and Dev teams) need to see it deployed to production, but you want no one else to see it.

If you control your front-end, then you can control this. For instance, one of the most iron-clad implementations of this was at Amazon.com, where it was baked into the website presentation framework. With a single if-statement, developers could say “only show this, or only do this, if the user is within the Amazon corporate network”….neat! This enabled development of new features, for example a pre-order system for Amazon Video on Demand (formerly Unbox), without any way for outside users to see or access it. Now of course the downside of this system was that when the feature was ready to go publicly live, it required a code change to make it so. I see two problems with this: 1. The overhead of making and deploying the code change itself. 2. The risk associated both with the code change itself, and with the fact that testing was done on the pre-change code paths (Yes it should be just a simple if-statement, but QA trusts no one, right?).
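As a rough sketch of the idea (not Amazon’s framework, which I am not reproducing here), the check can be written so that going public means adding a name to a configuration set rather than editing the if-statement. The network range, the feature name, and the function names below are assumptions for illustration only.

```python
import ipaddress

# Hypothetical corporate address range; a real deployment would load this from configuration.
INTERNAL_NETWORKS = [ipaddress.ip_network("10.0.0.0/8")]


def is_internal(client_ip: str) -> bool:
    """True if the request comes from inside the (assumed) corporate network."""
    addr = ipaddress.ip_address(client_ip)
    return any(addr in net for net in INTERNAL_NETWORKS)


def feature_visible(feature: str, client_ip: str, public_features: set) -> bool:
    """Internal users always see the feature; outside users only once it is listed as public."""
    return feature in public_features or is_internal(client_ip)


# Before launch: only internal addresses see the hypothetical "preorder" feature.
feature_visible("preorder", "10.1.2.3", set())            # internal user
feature_visible("preorder", "203.0.113.7", set())         # external user
# Launch is a data change: add the name to the public set, no new code path.
feature_visible("preorder", "203.0.113.7", {"preorder"})
```

Because the same function is exercised before and after launch, the code path QA tested is the one that ships, which speaks to the second problem above. It does nothing, of course, for the “friendlies” problem.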

Amazon also has the ability to enable/disable public access to “secret” websites that does not involve code changes, but the one problem with this approach is its distinct lack of iron-cladding as evidenced by the ignominious leak before the launch of Amazon’s (then) new Unbox service. Note that the leaker used to work for Amazon….don’t assume your friendlies will always be friendly.

The lesson: Exposure Control with a “break line” at the internal/external boundary can be a very useful tool, just don’t count on it to protect high business impact secret materials unless you designed it to do so.

The Day the Phones Went Out In Kansas

[If you don’t “get” the section heading see this (after you finish reading the blog of course)]

My company made software that “interrogated” expensive telecom equipment and did cool stuff with the data. One protocol used was TL1 which was intended to be person-input/readable, but was regular enough that a program could interact with it. The telecom equipment was very expensive gear so we would get some access to lab gear and occasionally live gear, collect responses to our queries, and create simulators for us to develop code against. This meant the final testing had to be testing in production because that was generally where the real telecom switches were.

I’m in Kansas City at Sprint and I manage to get the IP addresses of a few of the switch models I am interested in (the ones I am developing code for). To my pleasure I can actually reach them from the conference room of the boring meeting I am in, so I can get some testing and development done while big-bureaucracy grinds its gears around me. It’s going well, my code is sending queries and pretty much getting the responses it expects, and other than a few tweaks my code looks good. It’s actually not until hours later that we find out that these multi-million dollar switches that are designed to handle TB/s traffic switching have an interesting design quirk… since they do not expect anyone to be sending them TL1 commands faster than your average human, if you send them, say, as fast as a computer can issue them sequentially, the switch will seem to respond fine, but then some time after that go into a desultory sulking coma, and in this case knock out phone service to the better part of Kansas. (Yes I was in Kansas and the switch served Kansas; I guess the one that they gave me to “play” with was a local one). The upshot was apologies all around, new processes around what we were allowed to test and when, and a shiny new throttling system added to our product.

The lesson: TiP made sense here. It makes no sense to pay for these expensive switches just to put them in a test lab. But what also makes sense is a process around what to test and when. My conference room antics were not the right process (to be fair to me, it had always worked before). Instead, clear messaging about what the test was and what it entailed would have alerted the customer in this case to possible effects. In this case we almost needed to test the testing before we proceeded.
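For the curious, the throttling idea can be sketched in a few lines of Python. This is not the throttling system we shipped; the class name, the two-second interval, and the placeholder command strings are assumptions, and the `send` callable stands in for whatever actually talks to the switch.

```python
import time


class CommandThrottle:
    """Enforce a minimum interval between commands sent to a device."""

    def __init__(self, min_interval_s, clock=time.monotonic, sleep=time.sleep):
        self.min_interval_s = min_interval_s
        self._clock = clock      # injectable so the throttle can be tested without waiting
        self._sleep = sleep
        self._last_sent = None

    def wait_turn(self):
        """Block until at least min_interval_s has passed since the previous command."""
        now = self._clock()
        if self._last_sent is not None:
            remaining = self.min_interval_s - (now - self._last_sent)
            if remaining > 0:
                self._sleep(remaining)
                now = self._clock()
        self._last_sent = now


def send_all(commands, send, throttle):
    """Send commands one at a time, never faster than the throttle allows."""
    responses = []
    for command in commands:
        throttle.wait_turn()
        responses.append(send(command))
    return responses


if __name__ == "__main__":
    # Placeholder commands and a fake sender; a human-ish pace of one command every 2 seconds.
    throttle = CommandThrottle(min_interval_s=2.0)
    print(send_all(["QUERY-1", "QUERY-2"], lambda c: f"ok {c}", throttle))
```

The right interval is something to agree with the equipment owner rather than guess at, which is really the process point of this story.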

Containment: User Exposure Control

Previously I said Exposure Control could be used to keep users completely out of your new service. But what if you want a few users in there? If you are deploying something new and dangerous to production (for testing) then you want users to see it, otherwise you’re not really exercising it, but you want few users to be affected if things go bad. Ideally you want to start with a few users and monitor things, then add a few more users and monitor, lather-rinse-repeat until you have all your users online with the new system and high confidence.
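One common way to get this kind of ramp-up, sketched here in Python, is to hash each user into a stable bucket from 0 to 99 and expose the new system to every bucket below the current percentage. This is a generic illustration, not how ExP or any particular product does it; the function names and the feature name are hypothetical.

```python
import hashlib


def bucket(user_id: str, feature: str) -> int:
    """Map a user to a stable bucket in 0..99, independently for each feature."""
    digest = hashlib.sha256(f"{feature}:{user_id}".encode("utf-8")).digest()
    return int.from_bytes(digest[:8], "big") % 100


def in_rollout(user_id: str, feature: str, percent: int) -> bool:
    """True if this user should see the feature at the current exposure percentage."""
    return bucket(user_id, feature) < percent


# Ramp: 1% -> 5% -> 25% -> 100%, monitoring between steps.
users = [f"user-{n}" for n in range(1000)]
for percent in (1, 5, 25, 100):
    exposed = sum(in_rollout(u, "new-checkout", percent) for u in users)
    print(f"{percent:3d}% target -> {exposed} of {len(users)} users exposed")
```

Because a user’s bucket never changes, anyone exposed at 5% is still exposed at 25%, so raising the percentage only ever adds users, and dropping it back to 0 is the containment lever if things go bad.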

At Microsoft we have the Experimentation Platform (ExP), which is generally used for A/B testing but can be used for just this type of user ramp-up. Although they didn’t use ExP, Microsoft’s new search engine Bing made use of this type of Exposure Control during their pre-launch testing. Outside Microsoft I think one of the slickest instances of this type of testing I’ve heard of is at the chat engine IMVU where they incorporate it into every deployment. From Timothy Fitz, Continuous Deployment at IMVU: Doing the impossible fifty times a day:

Back to the deploy process, nine minutes have elapsed and a commit has been greenlit for the website. The programmer runs the imvu_push script. The code is rsync’d out to the hundreds of machines in our cluster. Load average, cpu usage, php errors and dies and more are sampled by the push script, as a basis line. A symlink is switched on a small subset of the machines throwing the code live to its first few customers. A minute later the push script again samples data across the cluster and if there has been a statistically significant regression then the revision is automatically rolled back. If not, then it gets pushed to 100% of the cluster and monitored in the same way for another five minutes. The code is now live and fully pushed. This whole process is simple enough that it’s implemented by a handful of shell scripts.
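The shape of that push can be sketched in Python as follows. This is my own simplification, not IMVU’s script: it looks at a single metric (error rate), uses a one-sided two-proportion z-test as a stand-in for whatever “statistically significant regression” means in their tooling, and every name, callback, and threshold is an assumption.

```python
import math


def error_rate_regressed(base_errors, base_requests,
                         canary_errors, canary_requests, z_threshold=2.33):
    """One-sided two-proportion z-test: is the canary error rate significantly higher?

    z_threshold=2.33 corresponds to roughly a 1% one-sided significance level.
    """
    p_base = base_errors / base_requests
    p_canary = canary_errors / canary_requests
    pooled = (base_errors + canary_errors) / (base_requests + canary_requests)
    se = math.sqrt(pooled * (1 - pooled) * (1 / base_requests + 1 / canary_requests))
    if se == 0:
        return False  # both samples have identical all-or-nothing rates: no evidence either way
    return (p_canary - p_base) / se > z_threshold


def staged_push(sample, go_live, rollback, canary_fraction=0.05):
    """Sample a baseline, go live on a small subset, re-sample, then roll back or finish.

    sample() returns (errors, requests); go_live(fraction) and rollback() are
    hypothetical hooks into the deployment tooling. A real script would wait
    (a minute, in the quote above) between going live and re-sampling.
    """
    baseline = sample()
    go_live(canary_fraction)
    canary = sample()
    if error_rate_regressed(*baseline, *canary):
        rollback()
        return "rolled back"
    go_live(1.0)
    return "fully pushed"
```

A real push script would watch several signals (load average, CPU usage, PHP errors, as in the quote) and keep monitoring after the full push; the sketch only shows the decision point that makes the small first exposure worth having.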

To be continued

OK, I have lots more examples, but this is getting long…. let me know what you think and if I get some positive response I will tell you some more…..

Comments

Anonymous

December 15, 2009

Good post. Sometimes you just have to TIP. This topic came up last week in a workshop I was conducting at an aviation systems (including engines) manufacturer. We were discussing Windows 7 remediation testing processes with a group of non-development types. After discussing the concept of TIP, they all agreed that it's not a really good concept when you're dealing with airplane jet engines.

Anonymous

December 16, 2009

@Tom, Aerospace, whether it be the engines or the flight control software seems like a poor application for TiP. So does the medical industry for that matter. The fact is most of us in software don’t work on these, but instead work on shiny baubles. On these the worst thing we can generally do here is lose or corrupt the users' data which does not cost lives, but does cost money and reputations. I will address this in a future post.

Anonymous

July 09, 2012

Hi Seth, thanks for the posts, very informative indeed. I am in the banking sector, which has lots of compliance and regulatory requirements which need to be met. What's your opinion on using TiP for applications which are deployed in the banking/financial industry?