Problems Crawling the non-Default zone *Explained

In a nutshell, there is an undocumented assumption baked into SharePoint Search that the Default Public URL of a Web Application will be crawled. If you want everything to work auto-magically, crawl the Web Application's Public URL for the Default zone (*Note: the crawler requires Windows Authentication [NTLM or Kerberos] in whatever zone you crawl, meaning your Default zone should include Windows Authentication). Otherwise, when crawling a non-Default zone, expect things to break, such as contextual queries like "this site" and "this list", and expect inconsistent URLs in query results, as demonstrated in my first "Beware crawling the non-Default zone…" post.

For reference, please see the following where I have written extensively on this topic:

- Alternate Access Mappings (AAMs) *Explained

- Beware crawling the non-Default zone for a SharePoint 2013 Web Application

- And discussed at SPC14 [#SPC375] (at the 58 minute mark)

Explaining the Assumption of the Default zone

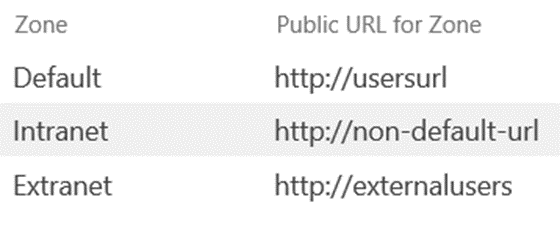

If configured correctly (e.g. crawling the Default zone), we would expect all search results from the same Web Application to be relative to the URL from which they were queried. For example, assume the following Web Application and Alternate Access Mappings (AAMs):

In this case, a query from the Default zone would render results relative to the Public URL of this zone https://usersUrl, such as:

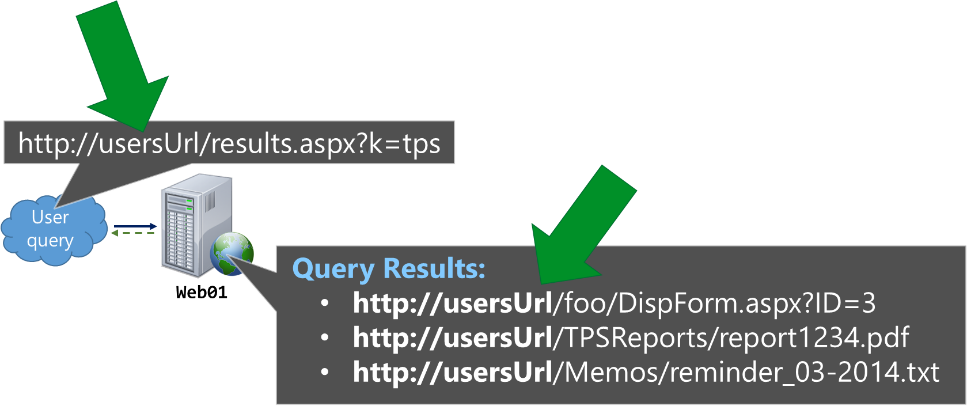

Or, if making the same query from the Extranet zone, the same results would now be relative to https://externalUsers such as:

…and so on for any of the five zones for this Web Application.

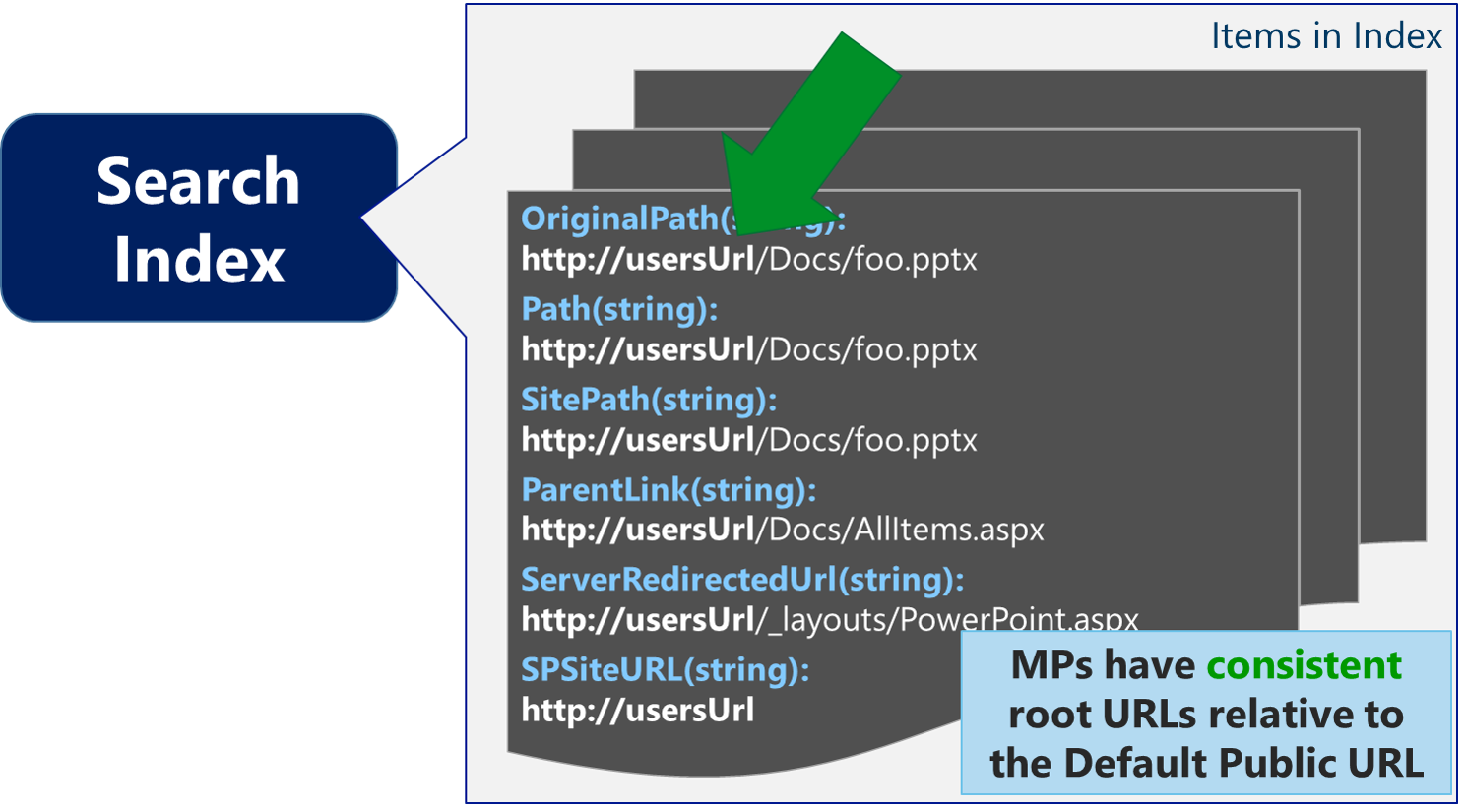

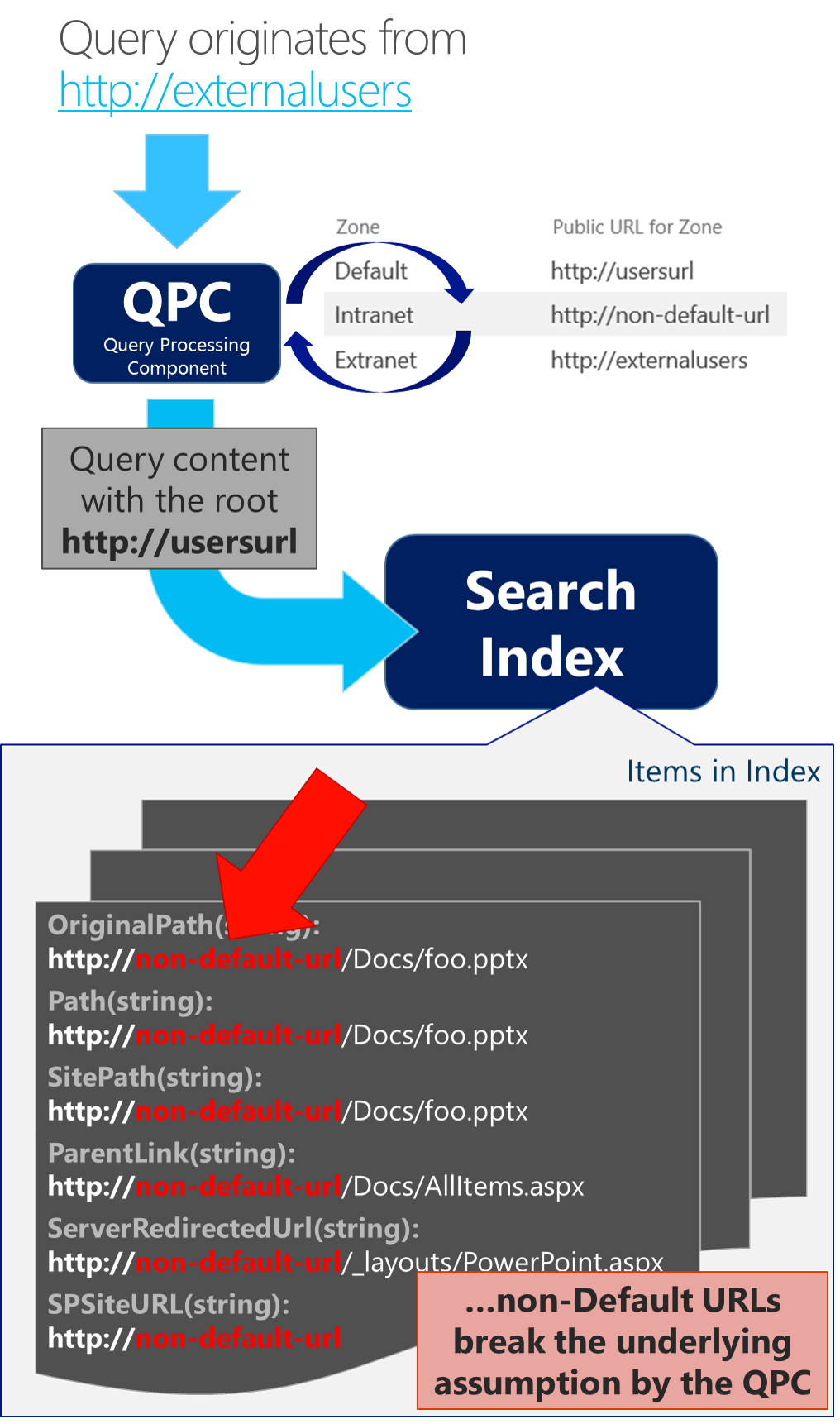

The behavior above occurs because the Query Processing component (QPC) uses the Alternate Access Mappings to map the URLs in Search results to match the zone from which the query is made. This behavior is predicated on the QPC assuming that URL-based properties in the Search Index correspond to the Default Public URL of each Web Application.

- If the URLs in the Search Index match the Default Public URL for a Web Application, the URLs in results can be properly re-mapped to the current zone as above

- However, if the URLs in the Search Index DO NOT match the Default Public URL for any Web Application in the AAMs, then no re-mapping occurs (spoiler alert: this explains the behavior in my "Beware crawling the non-Default zone…" post, which I'll explain further below)
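To make the two cases above concrete, here is a minimal Python sketch of the re-mapping rule as described in this post. It is only a conceptual model, not SharePoint's actual implementation: the function name `remap_result_url`, the `AAM_PUBLIC_URLS` table, the zone labels, and the document path are all hypothetical and chosen purely for illustration.

```python
from typing import Dict

# Hypothetical AAM table for one Web Application: zone name -> Public URL.
# The zone labels (and which zone owns https://non-default-url) are assumptions.
AAM_PUBLIC_URLS: Dict[str, str] = {
    "Default": "https://usersUrl",
    "Intranet": "https://non-default-url",
    "Extranet": "https://externalUsers",
}


def remap_result_url(
    indexed_url: str,
    query_zone: str,
    aams: Dict[str, str] = AAM_PUBLIC_URLS,
) -> str:
    """Model of result re-mapping: swap the Default Public URL root for the
    Public URL of the zone the query came from. URLs that do not start with
    the Default Public URL are returned exactly as they sit in the index."""
    default_root = aams["Default"]
    prefix = indexed_url[: len(default_root)]
    remainder = indexed_url[len(default_root):]
    # Only treat it as a match when the root ends on a path boundary.
    at_boundary = remainder == "" or remainder.startswith("/")
    if prefix.lower() == default_root.lower() and at_boundary:
        return aams[query_zone] + remainder
    return indexed_url


# Index populated by crawling the Default zone: re-mapping works.
crawled_default = "https://usersUrl/sites/team/doc.docx"
print(remap_result_url(crawled_default, "Extranet"))

# Index populated by crawling a non-Default zone: no re-mapping occurs.
crawled_non_default = "https://non-default-url/sites/team/doc.docx"
print(remap_result_url(crawled_non_default, "Extranet"))
```

In this model, the first call yields a URL rooted at https://externalUsers, while the second comes back unchanged because its root never matches the Default Public URL.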

Crawl the Public URL of the Default Zone

There is an oft-referenced TechNet article, "Plan for user authentication…", which notes: "If NTLM authentication is not configured on the default zone, the crawl component can use a different zone that is configured to use NTLM authentication". Although it isn't technically unsupported to crawl a non-Default zone, it might as well be: when doing so, Search won't behave as expected, as noted above and further explained below. That being said, in my opinion, this statement from the "Plan for user authentication" article is misguided at best and will only lead you to frustration.

In its place, we helped get this written (by the Search folks rather than the Authentication folks), so I would defer to this guidance instead:

Best practices for crawling in SharePoint Server 2013

Particularly, the section: Crawl the default zone of SharePoint web applications

The most common reasoning I hear for crawling the non-Default zone typically (and reasonably) stems from a business need to use a custom claims provider (e.g. SAML/ADFS) as the Authentication method used in the Default zone, but also needing to make Windows Authentication available in another zone to facilitate the SharePoint Search crawl component.

If you are unable to configure the Web Application so that the Default zone uses Windows Authentication and the extended zone uses your custom claims provider, then the only viable option entails:

- Configure BOTH a custom claims provider and Windows Authentication (e.g. NTLM) in the Default zone of the Web Application

- And implement a custom login form such as the one by Russ Maxwell here (SP2013) or the one by Steve Peschka here (SP2010)

- Without the custom login form, the crawler won't know how to pick which authentication method to use and crawls will fail

- Depending on your implementation, you may also need to change to a "Custom Sign In Page" for the Web Application

| Updated (July 2016): Heads up when using "/_trust/default.aspx?trust=name-of-your-trusted-identity-provider" as the Custom Sign In Page, as this will break Office integration and users will not be redirected properly for authentication when opening client applications.* I originally said "Avoid using..." and updated this to "Heads up when using..." because, although it will break the Office integration, there is a VERY easy workaround: simply log in with the browser before attempting to open files with the Office clients. Alternatively, in the comments, someone else noted the use of a registry key setting (e.g. "RefreshFormBasedAuthCookie") as another potential workaround (but that one is outside of my wheelhouse, so I'll defer to the KB for any further details). |

Impacts of crawling the non-Default zone

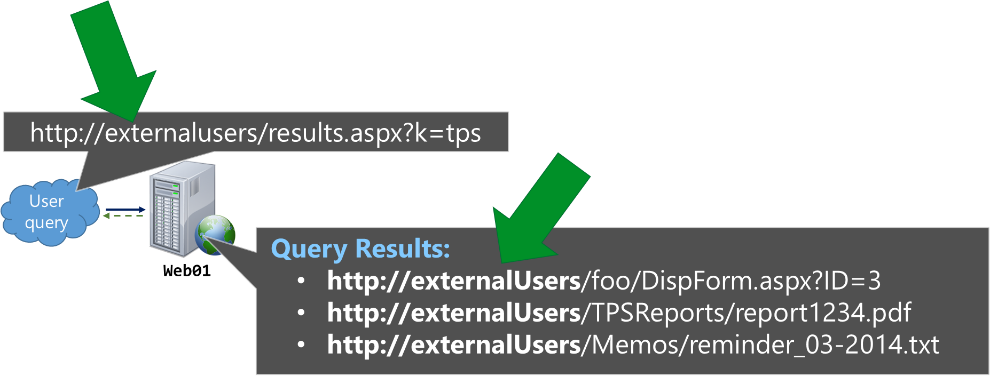

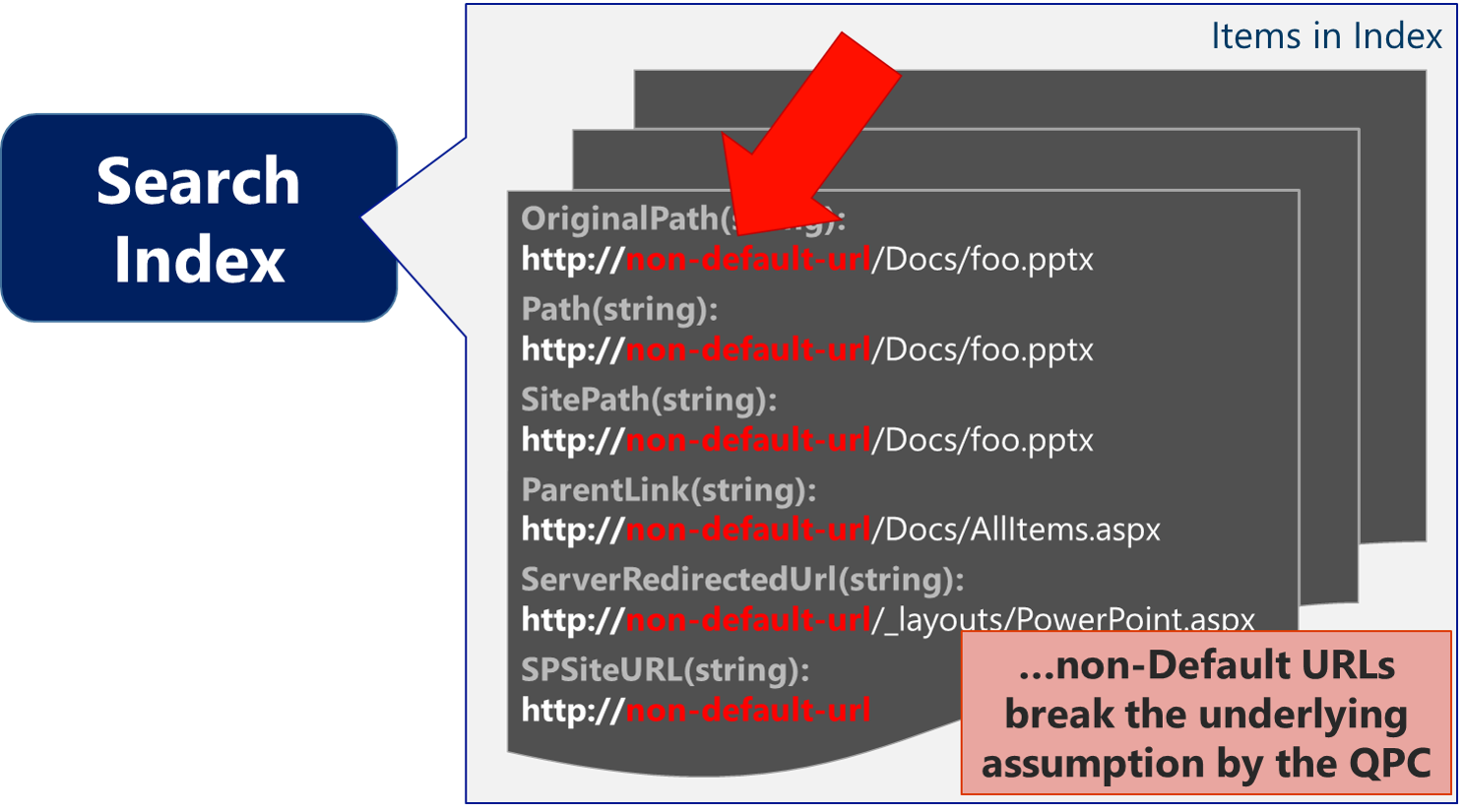

For example, assume the same Web Application and AAMs as above. If the SharePoint Search Content Source has https://non-default-url as the start address (which is not advised, but assumed for this example to prove a point), then the URL-based Managed Properties (MPs) in the index will now all [incorrectly] be relative to https://non-default-url, as illustrated below (spoiler alert: this is why contextual scope queries break… but I'll illustrate that further down).

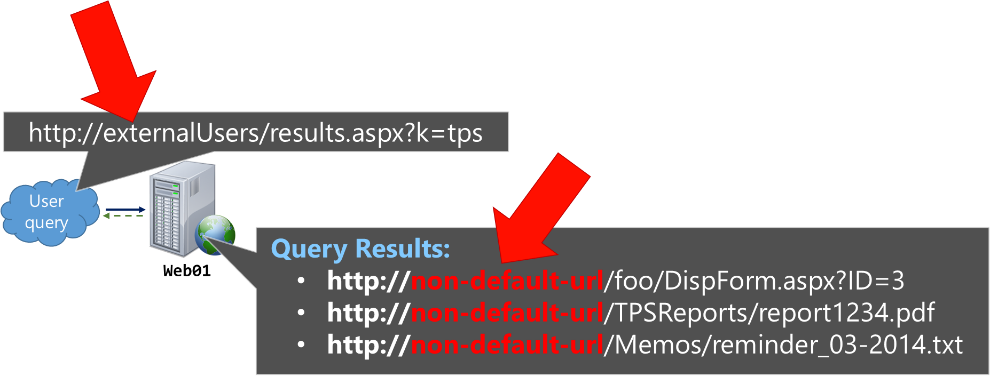

As noted above, the QPC assumes URLs correlate with the Default Public URLs - if the URL-based MPs in the Search Index DO NOT match the Default Public URL for any Web Application in the AAMs, then no re-mapping can occur. In this new scenario (*assuming a full crawl occurred since changing the Content Source with this new start address), we can demonstrate this behavior when the user now browses https://externalUsers and issues the same query as before.

Here, the URL-based MPs in the Search Index all contain values relative to https://non-default-url, which does not match the Default Public URL. Therefore, the QPC cannot correctly re-map search results to the URL of the current zone (e.g. https://externalUsers). Instead, the results will all remain relative to the URL that was crawled (which is the value in the Index) as below:

In my "Beware crawling the non-Default zone…" post, I provide these scenarios in further detail. The takeaway should simply be that the results show the incorrect URL root because that is the URL that is in the Search Index when crawling a non-Default zone.

This goes back to the undocumented assumption baked into SharePoint Search that the Default Public URL of a Web Application will be crawled. Because, in this example scenario, the non-Default URL was crawled, this built-in assumption is not valid and thus the results cannot be mapped back to the appropriate zone when rendering of query results occurs.

For similar reasons, this also begins to explain why contextually scoped queries such as "this site" or "this list" return no results if crawling the non-Default zone.

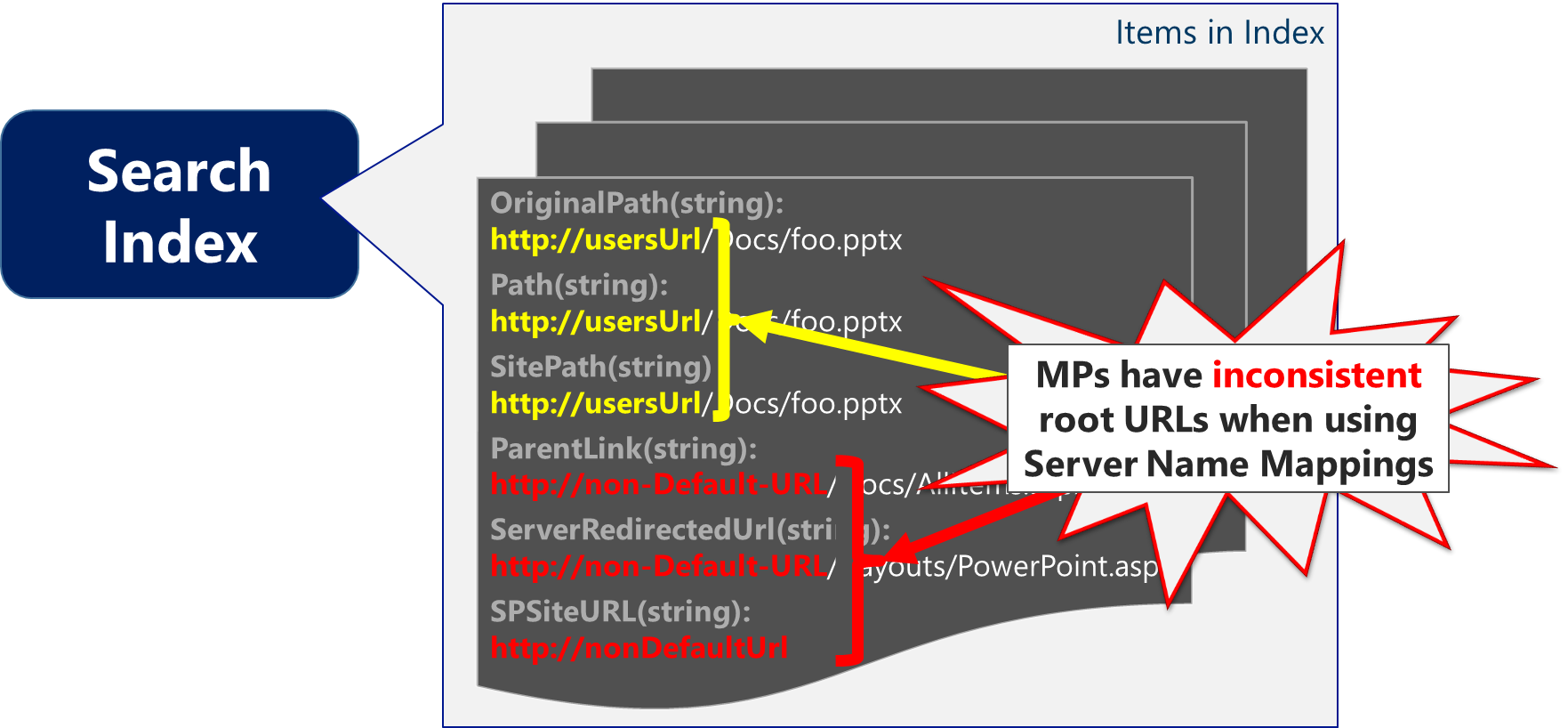

Avoid Server Name Mappings

Another misconception I hear is that you can simply use Server Name Mappings to overcome the problems that manifest when crawling a non-Default zone. However, Server Name Mappings are not intended for SharePoint content, and using them will cause you more grief, especially in SP2013 (e.g. not all Managed Properties adhere to this mapping, so you'll end up with inconsistencies in URL-based MPs, which also breaks OWA). It isn't technically unsupported to use them, but it too might as well be (e.g. it's going to break things in an expected manner and you'll likely never get a fix for any of these because Server Name Mappings are not intended for this purpose).

When Server Name Mappings get introduced, the problem only gets worse (particularly for SP2013). Because not all Managed Properties *adhere to this mapping, you will end up with inconsistencies in URL-based MPs such as below (*Server Name Mappings aren't intended for SharePoint content, so these MPs do not adhere to the mappings, effectively by design).

It's worth pointing out that the ServerRedirectedUrl Managed Property is one of the MPs that do not get re-mapped. With both FAST Search for SP2010 and SharePoint 2013, this MP holds the "URL used in association with [Office Web Apps (OWA)]. This enables you to provide thumbnail previews in the result." By impacting this MP, expect the document previews in the search results to break.

Similarly, the SPSiteURL MP, which holds the URL of the SharePoint site where the matching item is located and is commonly used for refinement, does not get re-mapped. For example, assume the user browses to the Default zone and attempts to refine by the Site Collection. In this case, there will be no matches because https://usersUrl (the Default Public URL) does not match https://nonDefaultUrl (the value in the SPSiteURL MP in the Search Index).

As a side note:

The SharePoint 2013 Search Query Tool on CodePlex is a great way to see the inconsistency of URL-based properties when Server Name Mappings have been configured. To my knowledge, this impacts at least the following managed properties: OriginalPath, Path, SitePath, ParentLink, ServerRedirectedUrl, and SPSiteUrl...

Why this Breaks Contextual Queries

Let's continue to assume the earlier [ill-advised] configuration with https://non-default-url as the start address in the SharePoint Search Content Source (and, for simplicity, assume no Server Name Mappings are configured – although it would not make much difference for the examples below). Now, the user navigates to https://externalUsers and issues a query using "This Site" (which is the default Result Source for a SharePoint Team Site search box).

In this case, the request is submitted to https://externalUsers/_layouts/15/osssearchresults.aspx?u=https%3A%2F%2Fexternalusers&k=bowling%20pins, which fires the query. In this URL, we see two noteworthy parameters (which I've URL decoded for an easier read):

- u=https://externalusers (which provides a Url context for the "This Site" part of the query)

- k=bowling pins (which defines the Keyword being queried)
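If you want to decode these parameters yourself rather than by eye, a few lines of Python using the standard library's `urllib.parse` will do it. This is just a convenience sketch: the helper name `decode_search_params` is hypothetical, and the request URL is the example from above written with an https scheme in the `u` value so that it agrees with the decoded bullets.

```python
from urllib.parse import parse_qs, urlsplit

# Example request URL from this post (the u value is assumed to be https).
request_url = (
    "https://externalUsers/_layouts/15/osssearchresults.aspx"
    "?u=https%3A%2F%2Fexternalusers&k=bowling%20pins"
)


def decode_search_params(url: str) -> dict:
    """Return the query-string parameters of a URL, percent-decoded.

    parse_qs returns a list per parameter; only the first value is kept here
    because each parameter appears once in this example."""
    params = parse_qs(urlsplit(url).query)
    return {name: values[0] for name, values in params.items()}


params = decode_search_params(request_url)
print("u =", params["u"])  # the site context for "This Site"
print("k =", params["k"])  # the keyword(s) being queried
```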

With contextually scoped queries (e.g. "this site" or "this list"), the Query Processing component uses the AAMs to re-map the URL in the u parameter BEFORE submitting the query to the Index. Here again, we have evidence of the baked in assumption that the Default URL is expected in the Search Index because the QPC specifically maps this u parameter value …wait for it… to the Default Public URL.

To continue this example, the u parameter value gets remapped from https://externalusers to https://usersurl (e.g. the Default zone) BEFORE submitting the query to the Search Index as below:

However, because the Search Index only has MPs with https://non-default-url, this contextually scoped query will find zero results because nothing matches https://usersurl (which is the result of the QPC converting the URL passed in with the u parameter at query time into the Default Public URL). Whereas, by simply crawling the Default Public URL, the underlying assumption (e.g. that the Default zone got crawled) remains valid and allows for the contextual query to properly limit results by the appropriate site URL regardless of the originating zone from which the query is performed.
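The same reasoning can be modeled in a few lines of Python. Again, this is a simplified sketch under stated assumptions and not how SharePoint is implemented internally: the names `to_default_url` and `this_site_query`, the zone labels, and the indexed document paths are hypothetical, and keyword matching is deliberately left out so that only the URL scoping is shown.

```python
from typing import Dict, List

# Hypothetical Public URLs per zone (lowercased, as in the example above).
AAMS: Dict[str, str] = {
    "Default": "https://usersurl",
    "Extranet": "https://externalusers",
}


def to_default_url(context_url: str, aams: Dict[str, str]) -> str:
    """Map the u parameter from any zone's Public URL to the Default Public URL."""
    lowered = context_url.lower()
    for public_url in aams.values():
        root = public_url.lower()
        if lowered == root or lowered.startswith(root + "/"):
            return aams["Default"].lower() + lowered[len(root):]
    return lowered  # unknown root: leave the context as-is


def this_site_query(context_url: str, indexed_paths: List[str],
                    aams: Dict[str, str]) -> List[str]:
    """Return indexed paths that fall under the re-mapped site context."""
    scope = to_default_url(context_url, aams)
    return [
        path for path in indexed_paths
        if path.lower() == scope or path.lower().startswith(scope + "/")
    ]


# Same document, indexed two different ways depending on the crawled zone.
index_from_default_crawl = ["https://usersurl/sites/bowling/pins.docx"]
index_from_non_default_crawl = ["https://non-default-url/sites/bowling/pins.docx"]

print(this_site_query("https://externalusers", index_from_default_crawl, AAMS))
print(this_site_query("https://externalusers", index_from_non_default_crawl, AAMS))
```

With the Default zone crawled, the scoped query finds the document; with the non-Default zone crawled, the scope (https://usersurl) matches nothing in the index and the result list is empty.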

Avoid Manually Modifying Public URLs in AAMs

Another pitfall I see is direct modification of the Public URLs in the Alternate Access Mappings (*Note: You can pretty much add or remove Internal URLs all day long – the potential trouble occurs when modifying the Public URLs for a Web Application).

When extending a web application, two things occur:

- The new AAM for this new zone is created

- …and, more importantly, a corresponding IIS Site is created for this new zone

However, when just creating the AAM by only using the "Manage AAMs" page in Central Admin, the corresponding IIS Site doesn't get created. Keep in mind that the web.config and site bindings live in the IIS site, so this actually creates an ambiguous scenario for SharePoint and, more typically, for any customizations [that won't be properly] registered with the existing web.config. This is why SharePoint reports a warning message in ULS if you create the new zone by simply modifying Public URLs rather than extending the Web Application.

That being said, you technically CAN create a new zone by modifying the AAMs from Central Admin, but I advise against it in almost all cases (personally, I wish a giant warning could be added to the "Edit Public URLs" page from Central Admin, if the page is not removed outright). If you do change Public URLs directly, just make sure you handle all the other manual pieces (e.g. web.config and IIS bindings) and account for this when enabling the Web Application service on another server in the Farm.

Summary

I probably sound like a broken record at this point, but I have to reiterate: there is an undocumented assumption baked into SharePoint Search that the Default Public URL of a Web Application will be crawled. By not crawling the Default URL, you will see the unexpected behaviors described below:

- Contextual scopes (like "this site" and "this list") will break and not return any results

- Cross-Web App queries will not map to the correct zone (this is what's being described in the post below stating "when I visit the search center from intranet zone, the items returned in search result page have URL for intranet zone corresponding to their specific web application also"), and I have described this same behavior here [SPC] and here [blog].

- Similarly, if you use Server Name Mappings with SharePoint content (particularly in SharePoint 2013), the results will be further impacted in a negative way:

  - Server Name Mappings were not intended/designed for SharePoint content, so they are not expected to work

  - Server Name Mappings do not re-map all URL based Managed Properties in the Search Index (see the first bullet point ...again, not intended for this), so using Server Name Mappings will actually result in an inconsistency in your Search Index

  - Office Web Apps will break because the ServerRedirectedUrl MP does not get re-mapped, so the iFrame used to render the OWA part will actually reference the URL root that was crawled rather than the default URL

I hope this helps…

Comments

Anonymous

December 07, 2017

Can you suggest how to get search results appearing with SSL offloading when using the default zone?


Anonymous

July 09, 2014

Thanks a lot Brian!! I'm trying to do some new tests, and as soon as I can, I'll post news again! I prefer to move this question on forums, probably the better place for it ;) social.technet.microsoft.com/.../sharepoint-2013-search-contextual-scope-and-aam-mistakes

Anonymous

August 11, 2014

Thanks a lot for posting, this is one of the best post on MSDN that I've read lately! I was precisely wondering if this "non-default zone crawl" bug was solved in SP2013...

Anonymous

July 05, 2015

Hi, and awesome explanations! providing you manage the auth prompt at login by code, what prevents you to simply activate both NTLM and ADFS on a unique default zone?

Anonymous

July 07, 2015

Hi Emmanuel... nothing :-) in fact, that's what I recommend: • Configure BOTH a custom claims provider and Windows Authentication (e.g. NTLM) in the Default zone of the Web Application • And implement a custom login form (e.g. to manage the auth prompt at login by code)

Anonymous

July 21, 2015

Very well explained !! Thanks a ton !!

Anonymous

February 13, 2016

Hi Brian, Regarding this:

"Avoid using "/_trust/default.aspx?trust=name-of-your-trusted-identity-provider" as the Custom Sign in Page as this will break Office integration and users will not be redirected properly for authentication when opening client application"

We are on SharePoint 2013 SP1, and use a 3rd party federated authentication solution (PingFederate), and have specified this URL as the 'Custom sign-in page' option under the web application settings: /_trust/?trust=<TrustedIdentityProviderName> We have configured BOTH a custom claims provider and Windows Authentication (NTLM) in the Default zone of the Web Application. Search will crawl the Default zone using Windows auth per your recommendation in this blog post. We also have Office Web Apps 2013 SP1 installed. So, Office documents are displayed in the web browser by default, and can be opened up in the client application by selecting the 'Edit in Word/Excel/PowerPoint/OneNote' option in IE. Based on my initial testing, I haven't seen any authentication issues with opening documents in Office client applications. Are you aware of any other specific use cases with client applications breaking when the custom sign in page is set to /_trust/?trust=<TrustedIdentityProviderName> ? If there is any other MS documentation / blog posts that you can refer me to regarding this, that would be great. Thanks, Mario

- Anonymous

April 26, 2017

Spender, thanks a lot for this awesome article. I got to this article a little late. Originally we had all the search settings configured correctly to crawl the default public URL. We have our application with full URL http://spcollab.company.com and short URL (extended, AAM) http://spcollab. I added the extended URL to the content source and it doubled up the crawled contents. Now that I have removed the short URL, I have stopped seeing search results when I access the site from the short URL. Is there a way of bringing this behaviour back to default (e.g. crawling with the default URL and getting results with both access methods)? Appreciate your response.

Anonymous

February 17, 2016

The issue is probably not as big as the warning I provided... Essentially, if you authenticate via the web and then attempt to open a document, everything works as expected. But if, after your FedAuth cookie expires, you try opening the document from the client (without authenticating via the web), it will fail... but the workaround is to just login via the web. I hope this helps, --Brian

Anonymous

February 21, 2016

Thanks for clarifying, Brian. In the blog post by Russ Maxwell that you linked to ( blogs.msdn.com/.../bypassing-multiple-authentication-providers-in-sharepoint-2013.aspx ), he mentions 2 options. Option 1 is to set the 'Custom sign-in page' in the web app settings to '/_trust/default.aspx', which is essentially what I have currently done. And Option 2 is to write custom code for the login page to redirect SAML users. However, at first glance, the sample code for Option 2 looks to be directing users to the same location, i.e. '/_trust/default.aspx'. I'm wondering if Option 2 really resolves the issue of users needing to authenticate via web when opening documents in Office clients, after the FedAuth cookie expires. If Option 2 does not resolve the issue, then is there any way to get around this issue when you have both Windows and SAML authentication enabled on the Default zone? Would appreciate any further insights you might have about this. Thanks !

Anonymous

June 01, 2016

A problem we ran into: SharePoint alerts use the 'Default' URL. So to have alerts work for external users, we had to use the https url as the default.

Anonymous

June 25, 2016

Just wanted to post an update here, since I've resolved a couple of issues when you have a single Default zone with both Windows authentication (for search crawl) and SAML authentication with a trusted identity provider (for end users), and you have specified a custom sign-in URL in the web application settings:

1) When the FedAuth cookie expires, the OneDrive for Business client prompts for authentication with the message 'We need your credentials to sync some libraries', and provides an 'Enter Credentials' link. Clicking the link results in an error. The fix is to change the custom sign-in URL in the web app settings from /_trust/default.aspx?trust=name-of-your-trusted-identity-provider TO /_trust/default.aspx . Once this is done, clicking the 'Enter credentials' link in the OneDrive for Business client successfully authenticates you to the trusted identity provider.

2) When the FedAuth cookie expires, if you have a SharePoint document open in an MS Office client, attempting to save the document will result in this error message: "Upload Failed - You are required to sign in to upload your changes to this Location. Sign In" . When you click the Sign In button in the yellow bar below the ribbon, nothing happens. The fix for this issue is a client side fix. On the client computer running MS Office, create a new registry value named RefreshFormBasedAuthCookie with type DWORD and value 1, under the key HKEY_CURRENT_USER\Software\Microsoft\Office\15.0\Common\Internet\FormsBasedAuthSettings (Create the FormsBasedAuthSettings key if it does not exist) . This fix is documented here: https://support.microsoft.com/en-us/kb/2910931 . This article also mentions setting the OptionsTimeout registry value, but in my case, I did not have to create it. Simply creating the RefreshFormBasedAuthCookie value and setting it to 1 was sufficient. After applying this registry fix, MS Office will automatically reauthenticate to the trusted identity provider when the FedAuth cookie expires. The user will no longer have to visit the web site to authenticate in this scenario.

Brian - You may want to update the big yellow warning in the article with this updated information and fix from https://support.microsoft.com/en-us/kb/2910931 :)

- Anonymous

July 06, 2016

Much appreciated Mario ...agreed and doing so now.

Anonymous

February 15, 2017

Hi again Brian, nearly two years since my last comment :) Now we have quite handled the onprem problems, I wonder if you have some information about what (really) happens with the security trimming and cloud index? I mean, onprem, SPS can use the custom provider to resolve the user's identity. My understanding is that natively, the cloud results are security trimmed with the windows sid found in the AAD's user account? If true, that excludes any kind of FBA or even Claim-based trimming, hmmm.

- Anonymous

February 24, 2017

*(I'm assuming you are talking Cloud Hybrid Search) Unfortunately, the only route supported is Windows-based claims... FBA or SAML-based claims are not supported for security trimming content.

- Anonymous

February 28, 2017

Emmanuel - you are correct... For reference, here is a good thread that discusses some scenarios around this: https://social.technet.microsoft.com/Forums/en-US/71b2c9d9-ad3c-4c88-b11c-8c58e6cdb771/hybrid-search-security-trimming-issues?forum=CloudSSA

As Neil mentions there, this is a current product limitation. You can either add a User Voice request to https://sharepoint.uservoice.com or submit a formal DCR to support this. It will be considered by the triage team.