What happens when you await? – Scaling it out (part III)

This mini-series of three blog entries dives into what actually happens when you use the await keyword in C#. We will evolve a small codebase from a synchronous to a scaled-out asynchronous implementation, and get into the details as we go along. This is the third and final post and it shows how we modify the code to run as parallel as possible and handle all the problems and details of this transition.

Getting to the core(s)

In the last blog post we transformed our code from executing synchronously to an asynchronous implementation. All work is done in the background and the UI remains responsive while we kept the coding style synchronous. That’s the power of the async/await pattern and it makes writing asynchronous code simple. But we still have a problem in the code though, our calculations are done one at the time, or sequentially. Todays CPUs all have multiple cores and we have a real possibility to make things go faster by executing the calculations in parallel. This blog post will layout how to do this, and what pitfalls you might encounter.

The perils of parallelism

Writing code that executes in parallel is really, really hard. Or used to be anyway. Back in the Win32 days, we got access to threads, critical sections, mutexes, events etc. to handle this. These are operating system entities, real low-level objects that provides no abstractions and patterns to aid in developing. Net result of this is that every team created their own abstractions like thread pools, queue managers and so forth. This was clearly not an ideal situation. With the advent of multiple cores in the CPUs in recent years, the need for better abstractions and a better way to write parallel code has only accelerated.

The .NET team has recognized this, and has for a number of years worked on this. In fact, it is the Parallel extensions team that has done this. They have produced a number of abstractions and libraries like:

- System.Ling

- Parallel extensions

- System.Collections.Concurrent

- Immutable collections

- System.Threading.Tasks

- Async/Await

- TPL DataFlow

What we have now is a toolbox of abstractions and classes that helps us immensely when we want to scale out our code to execute in parallel. These are just enablers of course, we still have to solve the fundamental problems when writing code that executes in parallel.

- Manage concurrent read access to a shared state

- Manage concurrent write access to a shared state.

- Manage composability or sequentially of the code.

- Handling our SynchronizationContext to avoid deadlocks.

Details about this is a series of blog posts in itself, let’s conclude this for now that in our toolbox we have a number of tools that helps solve these problems in very different ways. But back to our Task at hand (no pun intended). Let’s scale out our code.

Executing in parallel

We need to modify our code to start the all calculations at the same time. One way of doing this is to not use await for the asynchronous call to pi() , but instead use the fact that we are working with Task. The Task class has static function WhenAll() that waits for a range of tasks, and only returns when all of them has completed. It has a similar static method called WhenAny() that returns as soon as at least one of the tasks has completed. And here’s the kicker: Both WhenAll() and WhenAny() returns a Task that we can await. An implementation might look like this:

private async Task RunScenario()

{

var tasks = new Task<double>[NumThreads];

for (int i = 0; i < NumThreads; i++) {

tasks[i] = Task.Run<double>(() => { return pi(); });

}

await Task.WhenAll(tasks);

for (int i = 0; i < NumThreads; i++) {

WorkDone(i, tasks[i].Result);

}

}

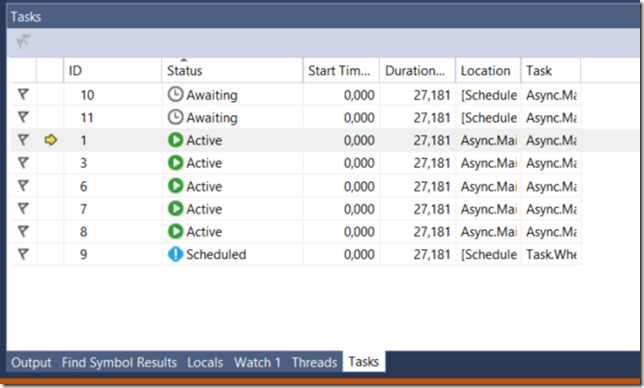

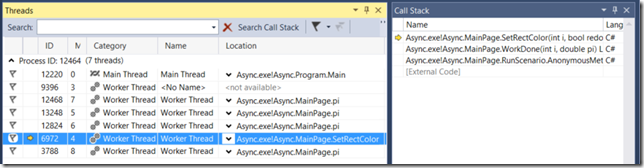

This code will now start of all calulations in parallel, wait for them all to complete and then verify it and updating the UI. Let’s run it and see what we get, Huh? It never ends? Wasn´t it supposed to go faster? We need to debug this. We break the execution and open up the new Tasks Debug Window.

We can see that we have 5 tasks Active, 2 awaiting and 1 scheduled so no one has completed. Double-clicking on one of the Active tasks opens up the source code where it’s currently executing.

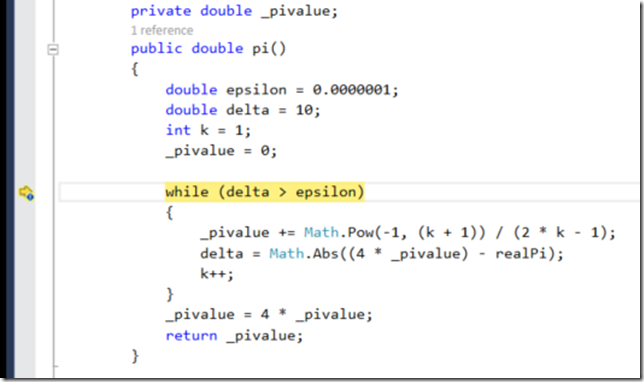

So the code seems to be stuck in the while loop, not surprising given that it never completes. Opening up the other Active tasks confirms that it is doing the same thing. But why? Let’s see if we can examine why it never completes. Opening up Another debug window, Parallel Watch we can examine a variable’s value concurrently in all Active tasks, like this.

Why is _piValue the same in all instances of the Tasks? Why is delta so large? And why is _piValue not remotely close to the actual value of pi. Ah, but of course. See where we have declared the _piValue, it’s a member variable shared between the instances. In other words, it’s a shared state that we are both reading from and writing to. In all previous incarnations of the code, pi() has never been run in parallel, so the problem hasn’t surfaced.

Ok, let’s fix this problem. We need to guard access to _piValue. Since it’s used in while loop, let’s just guard the execution with use of the lock keyword:

public double pi()

{

lock (this)

{

double epsilon = 0.0000001;

double delta = 10;

int k = 1;

_pivalue = 0;

while (delta > epsilon)

{

_pivalue += Math.Pow(-1, (k + 1)) / (2 * k - 1);

delta = Math.Abs((4 * _pivalue) - realPi);

k++;

}

_pivalue = 4 * _pivalue;

return _pivalue;

}

}

Let’s run the code again. now it completes but it actually looks worse than the previous version. It still takes too long, and the user interface, while responsive, isn’t updated until all calulations are done. The reason it doesn’t go faster is because we’ve serialized access to _piValue, and we had to do it so that the entire function is serialized. Clearly a bad choice, but we will improve on that later. The reason UI is not updated is because we don’t call WorkDone() inside the first loop. Let’s change the code to this and run again.

private async Task RunScenario()

{

var tasks = new Task[NumThreads];

for (int i = 0; i < NumThreads; i++) {

tasks[i] = Task.Run(() =>

{

var pival = pi();

WorkDone(i, pival);

});

}

await Task.WhenAll(tasks);

}

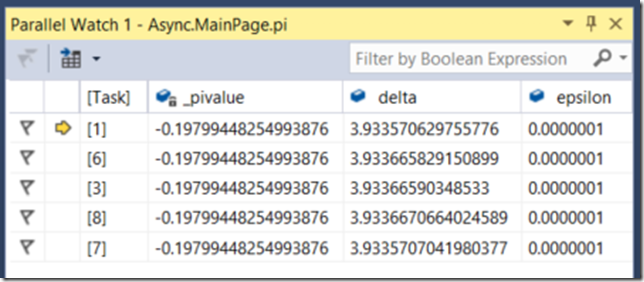

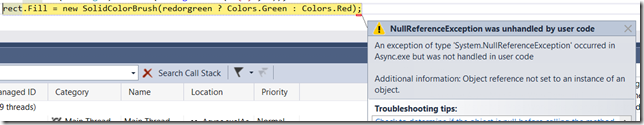

Running it again produces the following exception when it has finished the first calculation:

Looking in the debugger on the call stack and the Threads Window we get the following information:

So, in our new code we call WorkDone() when a calculation is complete. This is run in a task on a thread from some thread pool. Here we try to manipulate UI elements and a strict rule for this in WinRT is that is only allowed on the UI thread. What’s the UI Thread? Every top-level window has it’s own thread which processes messages. At the heart of this is something that has been around since the incarnation of Windows - the actual C-code looks something like this:

MSG msg;

while (GetMessage(&msg, NULL, 0, 0)) {

TranslateMessage(&msg);

DispatchMessage(&msg);

}

Everything that happens to a top window (and it’s descendants) go through here. Keeping this loop running is what makes the UI responsive. As an interesting side note, if you were to look inside a Windows 1.01 app from 1985, you would see the exact same code. I’m not kidding. In those days there were no threads (well, technically one I guess), and every top window shared the same big loop. It was up to the programmer to make sure it didn’t do anything lengthy in here, since it froze the entire system, just not the app. It was called cooperative multitasking. It’s kind of mind-blowing that here, 30 years later, this central loop looks exactly the same.

So, whenever your code is running something in the UI Thread, the DispatchMessage() call above is at the top of the call stack. If we then perform some lengthy operation, we will block this loop from processing any incoming messages and your UI is frozen. As for the rule that all operations on UI elements only can happen on the UI thread, we can only speculate. The code will certainly run faster since we don’t have to guard any shared state from concurrent access.

Now, we need to fix our problem. The trick is to find out if we are running on the UI thread or not. If not, we need to post the operation back to the UI thread so it can execute in it’s proper context. We need to rewrite WorkDone() to this:

private void WorkDone(int i, double pi)

{

if (!Dispatcher.HasThreadAccess)

{

Dispatcher.RunAsync(CoreDispatcherPriority.Low, () => { WorkDone(i, pi); });

}

else

{

SetRectColor(i, IsPiCorrect(pi));

}

}

So, Dispatcher.HasThreadAccess (an instance of CoreDispatcher) returns whether the synchronization context we’re running is running on the UI thread. If so, we can safely call SetRectColor() but if not we use the RunAsync() method to make an asynchronous call to ourselves, this time on the UI thread. We compile and run again:

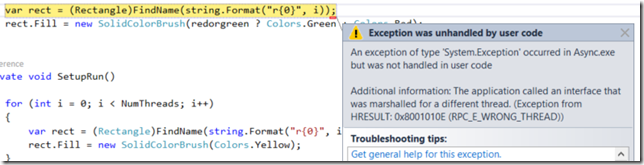

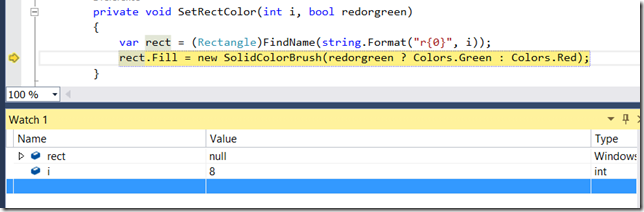

Now we got an null reference exception. Stepping in to the code we see the following:

Okay, I can see why there was a null reference exception, the rect variable is null. But why, well i = 8 and since we have set NumThreads to 8, i should never be 8. How is this possible. Reexamining the calculation loop give us some insight.

private async Task RunScenario()

{

var tasks = new Task[NumThreads];

for (int i = 0; i < NumThreads; i++) {

tasks[i] = Task.Run(() =>

{

var pival = pi();

WorkDone(i, pival);

});

}

await Task.WhenAll(tasks);

}

So in order for this to fail, i must be 8 when calling WorkDone() , but clearly inside the loop that shouldn’t happen. Well, after the loop has completed, i will certainly be 8. Hmm. The answer is that the code inside Task.Run() is run asynchronously, but it uses context from outside it’s context. So by the time WorkDone() is called, i certainly is 8 for all tasks. We need to capture i on the stack so we don’t overwrite it. This will fix the problem:

private async Task RunScenario()

{

var tasks = new Task[NumThreads];

for (int i = 0; i < NumThreads; i++)

{

var index = i;

tasks[i] = Task.Run(() =>

{

var pival = pi();

WorkDone(index, pival);

});

}

await Task.WhenAll(tasks);

}

Running the code again we are almost there. UI never locks up, rectangles get green one-by-one and interestingly enough there’s a random order in which the rectangles get done. But that’s only natural, given that we kick off a number of tasks that run in parallel, and it’s non-determistic.

So, can we scale this code out to run on multiple cores and get this code done. Yes, we can, let’s revisit the pi() function and see why it’s suboptimal:

private double _pivalue;

public double pi()

{

lock (this)

{

double epsilon = 0.0000001;

double delta = 10;

int k = 1;

_pivalue = 0;

while (delta > epsilon)

{

_pivalue += Math.Pow(-1, (k + 1)) / (2 * k - 1);

delta = Math.Abs((4 * _pivalue) - realPi);

k++;

}

_pivalue = 4 * _pivalue;

return _pivalue;

}

}

The problem was that the function was using a shared state ( _piValue) for both read and write access. The need to guard the shared states from concurrent reading and Writing makes the code very suboptimal. The solution in this case (and in all such cases)is to minimize or even completely remove any shared state that we need write access to. In this case it simple, convert the member variable _piValue to a local variable inside the pi() function instead. Something like this:

public double pi()

{

double epsilon = 0.0000001;

double delta = 10;

int k = 1;

double pivalue = 0;

while (delta > epsilon)

{

pivalue += Math.Pow(-1, (k + 1)) / (2 * k - 1);

delta = Math.Abs((4 * pivalue) - realPi);

k++;

}

pivalue = 4 * pivalue;

return pivalue;

}

Yes! Running the code again we get the expected result. UI is still responsive, the code completes much faster and we can visually see the rectangles go green concurrently. Mission accomplished.

Summary

We hope we have provided you with some insight into how you can scale out your workload on all available cores. Also, we hope that we have pointed out some common pitfalls and some hard-to-fix issues that can show up. The parallel and asynchronous World is sure an exciting and surprising one!