Parsing Data From a Flat File With Inconsistent Delimiters in SQL Server

Introduction

This article explains variations on an approach which can be used to parse and import data with inconsistent delimiters from flat files.

Quite a common requirement is to work with data where we have no control of how it is generated. Ideally issues would be addressed at source. In the real world it is unfortunately all too common to find this is not an option. The data comes however it is delivered and we must just get on with importing that data. Any problems are ours to solve.

Bulk Copy Options

There are many bulk copy options available in SQL Server. We will see how the above scenario is handled by of each of these. The common aspect to all these options is the use of a format file.

BULK INSERT

BULK INSERT can be used for importing flat file data to database table. The standard BULK INSERT command has a COLUMN DELIMITER option where we can specify the delimiter for the file. By definition here the file delimiter is not just one character so we cannot use COLUMN DELIMITER.

Consider the below sample file for our illustration

ABC,123;Test value|xxyy

XYZ,345;blah blah|iuuy

JJH,776;iyuiy|xxyy

KKP,991;iuyu|xxyyw

As seen from above the file has , ; and | characters coming as delimiters

Now lets see how we can import the above file data to a SQL Server table using BULK INSERT

For this purpose we will first create a table as below

CREATE TABLE testBI

(

Col1 varchar(100),

ID int,

Col2 varchar(100),

Col3 varchar(100)

)

The format file for the above file data looks like below

10.0

4

1 SQLCHAR 0 3 "," 1 Col1 SQL_Latin1_General_CP1_CI_AS

2 SQLINT 0 100 ";" 2 Col2 ""

3 SQLCHAR 0 100 "|" 3 Col3 SQL_Latin1_General_CP1_CI_AS

4 SQLCHAR 0 100 "\r\n" 4 Col4 SQL_Latin1_General_CP1_CI_AS

Notice the 5th column in the format file. This gives the information about delimiters for the corresponding columns. The BULK INSERT statement will use this information to parse the data from the file and populate the table

The BULK INSERT command will look like below

BULK INSERT TestBI

FROM '<file path>\Sample.txt'

WITH (FORMATFILE = '<file path>\Format.fmt');

Make sure you replace the place holders with the correct file path for your machine.

If you check the table you can see the data properly populated to the table as below

OPENROWSET....BULK

Another common method that can be used for the import of bulk data to database table is by using OPENROWSET..BULK mode. Lets see how we can use this to parse our flat file with inconsistent delimiters.

The OPENROWSET..BULK has a FORMAT FILE option through which you can specify the format file to be used for parsing the file.

The query will look like below

INSERT INTO TestBI

SELECT *

FROM OPENROWSET(BULK '<file path>\Sample.txt',

FORMATFILE='<file path>\Format.fmt'

) as t1 ;

The format file will specify the delimiter information which will be used by the OPENROWSET command for parsing out the files

The full command along with the populated data can be shown by the below

BCP

bcp is a command line option used for bulk import and export of data from and to Sql Server database. Now lets see how bcp can be used to parse out data from a file with inconsistent delimiters.

bcp command includes -f switch which can be used to specify format file.

The bcp command will look like this

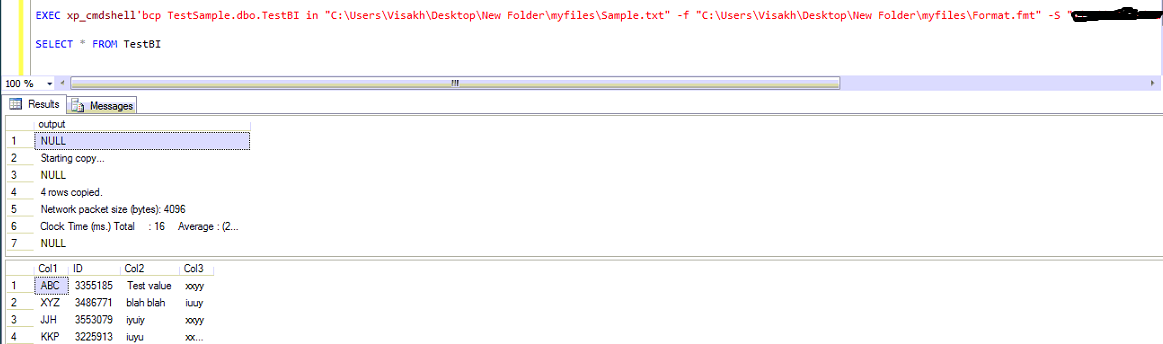

EXEC xp_cmdshell'bcp TestSample.dbo.TestBI in "<file path>\Sample.txt" -f "<file path>\Format.fmt" -S "ServerName" -T'

The above assumes windows authentication.

SQL Server authentication is rather less attractive since we need to also specify username and password as below

EXEC xp_cmdshell'bcp TestSample.dbo.TestBI in "<file path>\Sample.txt" -f "<file path>\Format.fmt" -S "ServerName" -U <Username> -P <password>'

Once this is done bcp will parse the data from file and bulk copy it to the table

Here we would require using xp_cmdshell to invoke bcp as the latter is a command line utility. So if we need to use bcp in SQLServer we need to make sure that xp_cmdshell is enabled at the server level using sp_configure

Here's the full illustration

Files With Text Qualifiers

Things become a little more interesting when the file also includes text qualifiers which encapsulates the data. This occurs usually when the data can itself have embedded delimiter characters within it. The text qualifiers would help us to parse the data out clearly from the delimiters.

Now lets see how we can apply the above logic in a case where file has inconsistent delimiters coupled with text qualifiers.

Consider this file data for the illustration

ABC,123;"Test value"|xxyy

XYZ,345;"blah blah|iuuy

JJH,776;"iyuiy, uyui"|xxyy

KKP,991;"iuyu, khkjhj"|xxyyw

Here we have text qualifiers in the file to representing data with embedded delimiter characters. Lets see how this data can be parsed using the various bulk options discussed above.

The solution is quite similar to the one discussed above. The main difference here would be the format file where we have to also account for the text qualifiers which appears in the file

The format file for the file with text qualifiers looks like this

10.0

4

1 SQLCHAR 0 3 "," 1 Col1 SQL_Latin1_General_CP1_CI_AS

2 SQLINT 0 100 ";\"" 2 Col2 ""

3 SQLCHAR 0 100 "\"|" 3 Col3 SQL_Latin1_General_CP1_CI_AS

4 SQLCHAR 0 100 "\r\n" 4 Col4 SQL_Latin1_General_CP1_CI_AS

Notice the delimiter section where we also specify the text qualifier characters, allowing correct parsing of data.

The syntax for the bulk options (BULK INSERT,OPENROWSET..BULK and bcp) remains the same

Now using the above format file we can see how the data gets parsed in the three cases discussed previously

BULK INSERT

OPENROWSET...BULK

BCP

So we have seen from all the above cases that by specifying a format file we would be able to get data from flat file onto a Sql Server table.

We can go for any of the above approaches for data transfer so far as it does not involve any intermediate logic to be included. However things become little more interesting when we have some intermediate processing to be done on the data like reference data lookups, transformations to change raw data into useful information.

In cases where we require further manipulation using these data we need to use some kind of ETL tool like SSIS which will provide standard tasks for performing the above activities.

Parsing Flat File With Inconsistent Delimiters in SSIS

Consider the case where we have flat file with inconsistent delimiters between columns which needs to be processed by SSIS package inside data flow task. The SSIS package will also have tasks used for further data manipulation inside.

The standard way of implementing this kind of functionality is by using a data flow task with flat file source task and then using required tasks like lookup,derived column etc in between to apply the required transformations and finally a OLEDB destination to populate the data to the table. Though this works fine for standard files, scenarios like above where the delimiters between columns are not consistent cannot be handled by the above approach. This is because flat file connection manager expects consistent delimiter information to be used for the file source. As such we have to do a small workaround to make this work correctly for file like this.

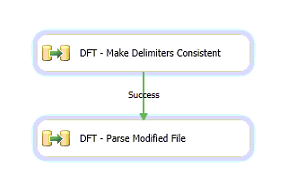

The package in that case would be as below

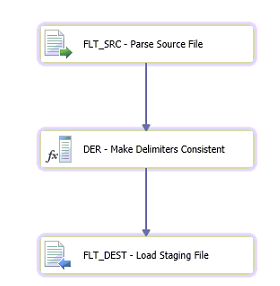

There will be two data flow tasks. The first data flow task will be used to parse the text file with inconsistent delimiters and make the delimiter information consistent. This is done by parsing it as a single column of data and then applying pattern replace logic using a derived column transformation.

The flat file connection manager would be configured as below for the source

As you see the entire row of file data is parsed into a single column. This is followed by derived column step which will have an expression used as below

REPLACE(REPLACE([Column 0],",","|"),";","|")

This will ensure all the delimiters get replaced into common delimiter (in this case |)

The full data flow logic looks like below

The converted file looks like this

The second data flow will use this converted file as the source which can be parsed by the standard data flow task to populate the data into a table. The standard flat file connection manager will be able to parse the converted file as its delimiter information is now consistent. This can now be parsed directly and data can be populated to the required table.

Files With Text Qualifiers in SSIS

Now lets see the scenario where the file involves embedded text qualifiers in them. In this case also we would be using the same approach as before. The only difference in this case would be to replace the pattern with text qualifier also to be based on the new consistent delimiter. Then in the second data flow in the connection manager properties specify the text qualifier detail and it will be able to parse out the data properly.

The expression used for the derived column in this case would be as below.

REPLACE(REPLACE([Column 0],",","|"),";","|")

Once you use the expression as above the delimiters will be made consistent. The converted file in this case looks like below

The second data flow will have two additional things in this case.

The first one would be that in flat file connection manager properties text qualifier would be specified as " character

We will also have an additional step in the data flow which would be a derived column task. This is for converting the embedded characters back ie as ,. The expression used will be as below

REPLACE([Column 2],"|",",")

Once populated the data will look like below

Summary

As you see from the above we can use format files along with bulk import options to extend the functionality to parse files with inconsistent delimiters or with text qualifiers. In the case of using SSIS using an additional data flow task with parsing logic will help us to make delimiters consistent. In case of text qualifiers being present we need to be aware of what are characters coming encapsulated within text qualifiers to apply a reverse logic in the end to get them back. Once conversion to standard format is done then we can make use of standard data flow task for parsing the files and retrieve the data.

See Also

Examples of Bulk Import Export of Data

Format File to Bulk Import Data

Using Bulk Insert to Import Inconsistent Data Format (Using Pure T-SQL)