C#: Image recognition with Emgu libraries

Abstract

In the following, we'll see how to build an image recognition program using C# and EmGu, a .NET wrapper for the OpenCV image-processing library originally developed by Intel.

By the end of the article, the reader will be able to develop a simple application that searches a list of images for the one containing a given smaller image (a detail of it), graphically showing the points of correspondence between the two.

Download and install EmGu

EmGu libraries can be downloaded at https://sourceforge.net/projects/emgucv/files/. The latest version of the package is located at https://sourceforge.net/projects/emgucv/files/latest/download?source=files and comes as an exe installer. For a fairly complete reference of the library, the reader is invited to visit http://www.emgu.com/wiki/index.php/Main_Page, the official EmGu Wiki. Here, only the aspects and functions indispensable to the declared goal will be considered: matching two images to find the smaller one in a list of possible candidates.

Once downloaded, the package can be installed in a folder of choice. Upon completion, the install folder will look like the following, with the \bin folder containing the core components of the package, i.e. the DLLs that will be referenced in a project.

Create an EmGu-referenced project

Let's create a new project (CTRL+SHIFT+N) in Visual Studio. For the present example, choose "Visual C#" and "Windows Forms Application" from the Templates menu. Now, from the Visual Studio top menu, go to "Project", "Add Reference...". Select "Browse" and, navigating to the EmGu path created by the installer, select Emgu.CV.World.dll and Emgu.CV.UI.dll (fig.2). After confirming with the OK button, Visual Studio will add those references to the project, as shown in fig.3.

Important: to make the compiled solutions work, the x86 and x64 folders from \emgucv-windesktop 3.1.0.2504\bin must be copied into the folder containing the compiled executable. Otherwise, programs that use Emgu won't be able to load cvextern.dll, msvcp140.dll, opencv_ffmpeg310_64.dll and vcruntime140.dll, raising an exception that prevents them from running properly.

SURF detection

As stated in the Wikipedia page for Speeded up robust features (https://en.wikipedia.org/wiki/Speeded_up_robust_features), "[...] is a patented local feature detector and descriptor. It can be used for tasks such as object recognition, image registration, classification or 3D reconstruction. It is partly inspired by the scale-invariant feature transform (SIFT) descriptor. The standard version of SURF is several times faster than SIFT and claimed by its authors to be more robust against different image transformations than SIFT. To detect interest points, SURF uses an integer approximation of the determinant of Hessian blob detector, which can be computed with 3 integer operations using a precomputed integral image. Its feature descriptor is based on the sum of the Haar wavelet response around the point of interest. These can also be computed with the aid of the integral image. SURF descriptors have been used to locate and recognize objects, people or faces, to reconstruct 3D scenes, to track objects and to extract points of interest."
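The quoted description relies on a precomputed integral image to evaluate box filters with a handful of integer operations. The following is a minimal, library-independent C# sketch of that data structure, meant only to illustrate the idea; it is not Emgu or OpenCV code, and the IntegralImage class is a hypothetical helper.

```csharp
// Hypothetical helper illustrating an integral image (summed-area table).
public static class IntegralImage
{
    // Builds an (h + 1) x (w + 1) table where ii[y + 1, x + 1] is the sum of
    // every pixel img[r, c] with r <= y and c <= x. Row 0 and column 0 stay zero,
    // so BoxSum never needs bounds checks.
    public static long[,] Build(byte[,] img)
    {
        int h = img.GetLength(0), w = img.GetLength(1);
        var ii = new long[h + 1, w + 1];
        for (int y = 0; y < h; y++)
        {
            long rowSum = 0; // running sum of img[y, 0..x]
            for (int x = 0; x < w; x++)
            {
                rowSum += img[y, x];
                ii[y + 1, x + 1] = ii[y, x + 1] + rowSum;
            }
        }
        return ii;
    }

    // Sum of the w x h box whose top-left pixel is (x, y): four table lookups
    // combined with three additions/subtractions, whatever the box size.
    public static long BoxSum(long[,] ii, int x, int y, int w, int h)
    {
        return ii[y + h, x + w] - ii[y, x + w] - ii[y + h, x] + ii[y, x];
    }
}
```

For instance, on the 3x3 image with pixel values 1 to 9 (row by row), BoxSum(ii, 1, 1, 2, 2) returns 28, i.e. 5 + 6 + 8 + 9, and the cost would be the same for a box of any size.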

FeatureMatching sample

In the \emgucv-windesktop 3.1.0.2504\Emgu.CV.Example\FeatureMatching folder, there is a sample written to show the image recognition capabilities described above, so it's a great starting point for further implementations. Let's start from the FeatureMatching.cs file: only a few lines of code are present in the static Main() method. For our purposes, the relevant ones are the following:

```csharp
long matchTime;
using (Mat modelImage = CvInvoke.Imread("box.png", ImreadModes.Grayscale))
using (Mat observedImage = CvInvoke.Imread("box_in_scene.png", ImreadModes.Grayscale))
{
    Mat result = DrawMatches.Draw(modelImage, observedImage, out matchTime);
    ImageViewer.Show(result, String.Format("Matched in {0} milliseconds", matchTime));
}
```

The Mat class can roughly be thought of as an image, with a set of methods and properties serving the most diverse purposes. As previously said, here we'll limit ourselves to the essential ones. A complete reference for the Mat class can be found at http://www.emgu.com/wiki/files/3.1.0/document/html/2ec33afb-1d2b-cac1-ea60-0b4775e4574c.htm

The Imread method reads an image from a given path using a given load mode (grayscale, color, and so on), returning a Mat object for further use. Here we have two Mat variables loaded from two different sources, i.e. "box.png" and "box_in_scene.png" (both included in the sample folder).

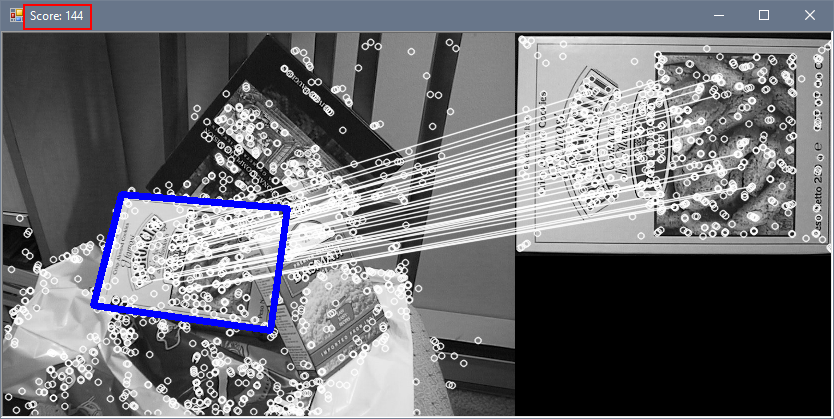

As shown on the page discussing this example (http://www.emgu.com/wiki/index.php/SURF_feature_detector_in_CSharp), the final result (i.e. the image returned by the DrawMatches.Draw method) will look like this:

So it's clear what is done here: two images are loaded, one of them being a subpart of the other, and the two are matched to show their common points, determining similarity/inclusion. The matching is performed by the Draw() method of the static class DrawMatches, which can be found in the sample folder or at the SURF detector URL listed above. The source code is as follows:

```csharp
//----------------------------------------------------------------------------
// Copyright (C) 2004-2016 by EMGU Corporation. All rights reserved.
//----------------------------------------------------------------------------

using System;
using System.Collections.Generic;
using System.Diagnostics;
using System.Drawing;
using Emgu.CV;
using Emgu.CV.CvEnum;
using Emgu.CV.Features2D;
using Emgu.CV.Flann;
using Emgu.CV.Structure;
using Emgu.CV.Util;

namespace FeatureMatchingExample
{
    public static class DrawMatches
    {
        public static void FindMatch(Mat modelImage, Mat observedImage, out long matchTime, out VectorOfKeyPoint modelKeyPoints, out VectorOfKeyPoint observedKeyPoints, VectorOfVectorOfDMatch matches, out Mat mask, out Mat homography)
        {
            int k = 2;
            double uniquenessThreshold = 0.80;
            Stopwatch watch;
            homography = null;

            modelKeyPoints = new VectorOfKeyPoint();
            observedKeyPoints = new VectorOfKeyPoint();

            using (UMat uModelImage = modelImage.GetUMat(AccessType.Read))
            using (UMat uObservedImage = observedImage.GetUMat(AccessType.Read))
            {
                KAZE featureDetector = new KAZE();

                //extract features from the object image
                Mat modelDescriptors = new Mat();
                featureDetector.DetectAndCompute(uModelImage, null, modelKeyPoints, modelDescriptors, false);

                watch = Stopwatch.StartNew();

                // extract features from the observed image
                Mat observedDescriptors = new Mat();
                featureDetector.DetectAndCompute(uObservedImage, null, observedKeyPoints, observedDescriptors, false);

                // Bruteforce, slower but more accurate
                // You can use KDTree for faster matching with slight loss in accuracy
                using (Emgu.CV.Flann.LinearIndexParams ip = new Emgu.CV.Flann.LinearIndexParams())
                using (Emgu.CV.Flann.SearchParams sp = new SearchParams())
                using (DescriptorMatcher matcher = new FlannBasedMatcher(ip, sp))
                {
                    matcher.Add(modelDescriptors);

                    matcher.KnnMatch(observedDescriptors, matches, k, null);
                    mask = new Mat(matches.Size, 1, DepthType.Cv8U, 1);
                    mask.SetTo(new MCvScalar(255));
                    Features2DToolbox.VoteForUniqueness(matches, uniquenessThreshold, mask);

                    int nonZeroCount = CvInvoke.CountNonZero(mask);
                    if (nonZeroCount >= 4)
                    {
                        nonZeroCount = Features2DToolbox.VoteForSizeAndOrientation(modelKeyPoints, observedKeyPoints,
                            matches, mask, 1.5, 20);
                        if (nonZeroCount >= 4)
                            homography = Features2DToolbox.GetHomographyMatrixFromMatchedFeatures(modelKeyPoints,
                                observedKeyPoints, matches, mask, 2);
                    }
                }
                watch.Stop();
            }
            matchTime = watch.ElapsedMilliseconds;
        }

        /// <summary>
        /// Draw the model image and observed image, the matched features and homography projection.
        /// </summary>
        /// <param name="modelImage">The model image</param>
        /// <param name="observedImage">The observed image</param>
        /// <param name="matchTime">The output total time for computing the homography matrix.</param>
        /// <returns>The model image and observed image, the matched features and homography projection.</returns>
        public static Mat Draw(Mat modelImage, Mat observedImage, out long matchTime)
        {
            Mat homography;
            VectorOfKeyPoint modelKeyPoints;
            VectorOfKeyPoint observedKeyPoints;
            using (VectorOfVectorOfDMatch matches = new VectorOfVectorOfDMatch())
            {
                Mat mask;
                FindMatch(modelImage, observedImage, out matchTime, out modelKeyPoints, out observedKeyPoints, matches,
                    out mask, out homography);

                //Draw the matched keypoints
                Mat result = new Mat();
                Features2DToolbox.DrawMatches(modelImage, modelKeyPoints, observedImage, observedKeyPoints,
                    matches, result, new MCvScalar(255, 255, 255), new MCvScalar(255, 255, 255), mask);

                #region draw the projected region on the image

                if (homography != null)
                {
                    //draw a rectangle along the projected model
                    Rectangle rect = new Rectangle(Point.Empty, modelImage.Size);
                    PointF[] pts = new PointF[]
                    {
                        new PointF(rect.Left, rect.Bottom),
                        new PointF(rect.Right, rect.Bottom),
                        new PointF(rect.Right, rect.Top),
                        new PointF(rect.Left, rect.Top)
                    };
                    pts = CvInvoke.PerspectiveTransform(pts, homography);

#if NETFX_CORE
                    Point[] points = Extensions.ConvertAll<PointF, Point>(pts, Point.Round);
#else
                    Point[] points = Array.ConvertAll<PointF, Point>(pts, Point.Round);
#endif
                    using (VectorOfPoint vp = new VectorOfPoint(points))
                    {
                        CvInvoke.Polylines(result, vp, true, new MCvScalar(255, 0, 0, 255), 5);
                    }
                }

                #endregion

                return result;
            }
        }
    }
}
```

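The core method is FindMatch(), which discovers similarities between the images and populates the collections that the Draw() method then uses to display them graphically. We won't analyze the above code in detail here; instead, we will modify it so that it is able not only to spot similarities, but also to tell the user how many of them were found, allowing the application to determine, starting from a list of images, which one best matches the detail image. Note that, although the example page is titled after SURF, this version of the sample creates a KAZE feature detector (KAZE featureDetector = new KAZE()); the overall pipeline of keypoint detection, descriptor extraction, k-nearest-neighbour matching, filtering and homography estimation is the same.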
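When a homography is found, Draw() projects the four corners of the model image into the observed image with CvInvoke.PerspectiveTransform and draws the resulting quadrilateral. As an illustration of the underlying arithmetic only, here is a plain C# sketch with no Emgu dependency. The HomographySketch class is hypothetical, and the homography is assumed to be a 3x3 row-major matrix of doubles; the corners are taken in the same order used by Draw().

```csharp
using System;
using System.Drawing;

// Hypothetical helper: maps points through a 3x3 homography H (row-major).
public static class HomographySketch
{
    // x' = (h00*x + h01*y + h02) / w,  y' = (h10*x + h11*y + h12) / w,
    // with w = h20*x + h21*y + h22 (the projective normalization).
    public static PointF Project(double[,] h, PointF p)
    {
        double w = h[2, 0] * p.X + h[2, 1] * p.Y + h[2, 2];
        double x = (h[0, 0] * p.X + h[0, 1] * p.Y + h[0, 2]) / w;
        double y = (h[1, 0] * p.X + h[1, 1] * p.Y + h[1, 2]) / w;
        return new PointF((float)x, (float)y);
    }

    // Projects the model image corners in the same order as Draw():
    // bottom-left, bottom-right, top-right, top-left.
    public static PointF[] ProjectCorners(double[,] h, Size modelSize)
    {
        var rect = new Rectangle(Point.Empty, modelSize);
        var corners = new[]
        {
            new PointF(rect.Left, rect.Bottom),
            new PointF(rect.Right, rect.Bottom),
            new PointF(rect.Right, rect.Top),
            new PointF(rect.Left, rect.Top)
        };
        return Array.ConvertAll(corners, p => Project(h, p));
    }
}
```

With a pure translation matrix { { 1, 0, 10 }, { 0, 1, 20 }, { 0, 0, 1 } } and a 100x50 model, the bottom-left corner (0, 50) lands at (10, 70): the drawn rectangle is simply shifted. A general homography also rotates, scales and skews it, which is why the projected region in the sample result is not axis-aligned.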

Developing the solution

In order to analyze an arbitrary number of images and detect the one that contains our model image, we need a similarity score for each analyzed image. The idea is simple: given one or more folders containing images, and a small image to be located, the program must process each image, determining a value that represents how many key points from the model image have been found in it. At the end of the loop, the image(s) with the highest values are the most likely results (in other words, the higher the score, the higher the chance that our model is contained in that image).

Determining a similarity score

The FindMatch() method can be customized to expose a count of the matches found between the images.

Its signature can be changed to:

```csharp
public static void FindMatch(Mat modelImage, Mat observedImage, out long matchTime, out VectorOfKeyPoint modelKeyPoints, out VectorOfKeyPoint observedKeyPoints, VectorOfVectorOfDMatch matches, out Mat mask, out Mat homography, out long score)
```

This adds score as an output parameter. Its value can be calculated right after the VoteForUniqueness() call, by looping over the VectorOfVectorOfDMatch matches: for each row whose mask entry is still non-zero (i.e. a match that survived the uniqueness vote), score is increased by one for every match stored in that row:

```csharp
score = 0;
for (int i = 0; i < matches.Size; i++)
{
    if (mask.GetData(i)[0] == 0) continue;
    foreach (var e in matches[i].ToArray())
        ++score;
}
```
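To make the counting rule concrete without depending on Emgu, the sketch below models each k-NN row as an array of distances sorted ascending. It assumes that VoteForUniqueness behaves like a ratio test (a row is kept only if best distance / second-best distance does not exceed uniquenessThreshold); that is an assumption about the library's internals, and the ScoreSketch class is hypothetical. The Score method mirrors the loop above: with k = 2, each surviving row typically contributes 2 points.

```csharp
using System.Collections.Generic;

// Hypothetical, Emgu-free model of the uniqueness vote and of the score loop.
public static class ScoreSketch
{
    // Assumed ratio test: mask[i] = 255 only if d[0] / d[1] <= threshold,
    // where d holds the neighbour distances of row i, sorted ascending.
    public static byte[] UniquenessMask(IList<float[]> knnDistances, double threshold)
    {
        var mask = new byte[knnDistances.Count];
        for (int i = 0; i < mask.Length; i++)
        {
            float[] d = knnDistances[i];
            bool unique = d.Length >= 2 && d[1] > 0 && d[0] / d[1] <= threshold;
            mask[i] = unique ? (byte)255 : (byte)0;
        }
        return mask;
    }

    // Same rule as the article's loop: each row surviving the mask adds
    // one point per stored neighbour.
    public static long Score(IList<float[]> knnDistances, byte[] mask)
    {
        long score = 0;
        for (int i = 0; i < knnDistances.Count; i++)
        {
            if (mask[i] == 0) continue;
            score += knnDistances[i].Length;
        }
        return score;
    }
}
```

With rows { 1, 10 }, { 9, 10 } and { 0, 0 } and a threshold of 0.80, only the first row is distinctive enough to survive, so the score is 2.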

The FindMatch() method thus becomes:

```csharp
public static void FindMatch(Mat modelImage, Mat observedImage, out long matchTime, out VectorOfKeyPoint modelKeyPoints, out VectorOfKeyPoint observedKeyPoints, VectorOfVectorOfDMatch matches, out Mat mask, out Mat homography, out long score)
{
    int k = 2;
    double uniquenessThreshold = 0.80;
    Stopwatch watch;
    homography = null;

    modelKeyPoints = new VectorOfKeyPoint();
    observedKeyPoints = new VectorOfKeyPoint();

    using (UMat uModelImage = modelImage.GetUMat(AccessType.Read))
    using (UMat uObservedImage = observedImage.GetUMat(AccessType.Read))
    {
        KAZE featureDetector = new KAZE();

        Mat modelDescriptors = new Mat();
        featureDetector.DetectAndCompute(uModelImage, null, modelKeyPoints, modelDescriptors, false);

        watch = Stopwatch.StartNew();

        Mat observedDescriptors = new Mat();
        featureDetector.DetectAndCompute(uObservedImage, null, observedKeyPoints, observedDescriptors, false);

        // KdTree for faster results / less accuracy
        using (var ip = new Emgu.CV.Flann.KdTreeIndexParams())
        using (var sp = new SearchParams())
        using (DescriptorMatcher matcher = new FlannBasedMatcher(ip, sp))
        {
            matcher.Add(modelDescriptors);

            matcher.KnnMatch(observedDescriptors, matches, k, null);
            mask = new Mat(matches.Size, 1, DepthType.Cv8U, 1);
            mask.SetTo(new MCvScalar(255));
            Features2DToolbox.VoteForUniqueness(matches, uniquenessThreshold, mask);

            // Calculate score based on matches size
            // ---------------------------------------------->
            score = 0;
            for (int i = 0; i < matches.Size; i++)
            {
                if (mask.GetData(i)[0] == 0) continue;
                foreach (var e in matches[i].ToArray())
                    ++score;
            }
            // <----------------------------------------------

            int nonZeroCount = CvInvoke.CountNonZero(mask);
            if (nonZeroCount >= 4)
            {
                nonZeroCount = Features2DToolbox.VoteForSizeAndOrientation(modelKeyPoints, observedKeyPoints, matches, mask, 1.5, 20);
                if (nonZeroCount >= 4)
                    homography = Features2DToolbox.GetHomographyMatrixFromMatchedFeatures(modelKeyPoints, observedKeyPoints, matches, mask, 2);
            }
        }
        watch.Stop();
    }
    matchTime = watch.ElapsedMilliseconds;
}
```

Note: to favour speed over accuracy, LinearIndexParams (brute-force search) has been replaced by KdTreeIndexParams in the above code.
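To show what the exhaustive variant computes, here is a naive, library-independent sketch of k-nearest-neighbour matching: every query descriptor is compared against all train descriptors, assuming Euclidean distance on float descriptors. It is not how FLANN is implemented internally, and the Neighbour and LinearKnnSketch types are hypothetical. As in FindMatch(), the observed descriptors play the role of the query and the model descriptors (added to the matcher) the role of the train set.

```csharp
using System;
using System.Collections.Generic;
using System.Linq;

// Hypothetical result type: index of a train descriptor and its distance.
public sealed class Neighbour
{
    public Neighbour(int index, double distance) { Index = index; Distance = distance; }
    public int Index { get; }
    public double Distance { get; }
}

public static class LinearKnnSketch
{
    // For every query descriptor, scans ALL train descriptors and keeps the k
    // closest ones, sorted by ascending distance (exact, exhaustive search).
    public static List<Neighbour[]> KnnMatch(float[][] query, float[][] train, int k)
    {
        var result = new List<Neighbour[]>();
        foreach (float[] q in query)
        {
            Neighbour[] nearest = train
                .Select((t, idx) => new Neighbour(idx, Euclidean(q, t)))
                .OrderBy(n => n.Distance)
                .Take(k)
                .ToArray();
            result.Add(nearest);
        }
        return result;
    }

    static double Euclidean(float[] a, float[] b)
    {
        double sum = 0;
        for (int i = 0; i < a.Length; i++)
        {
            double diff = a[i] - b[i];
            sum += diff * diff;
        }
        return Math.Sqrt(sum);
    }
}
```

The cost grows with (number of observed descriptors) x (number of model descriptors), which is what a k-d tree index tries to avoid by searching only part of the data, at the price of occasionally missing the true nearest neighbour.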

Obviously, every method that calls FindMatch() must now handle the new score parameter, so the Draw() method must be updated accordingly: in short, Draw() gets its own out long score parameter, which it simply passes on to FindMatch(). Please refer to the source code at the end of the article for the complete version.

Image and score viewer

To quickly check the score value for any image, a custom image viewer form can be created.

Add a new form named emImageViewer and place a PictureBox on it (imgBox in the example) with Dock = Fill. The form's code is the following:

```csharp
using Emgu.CV;
using System.Drawing;
using System.Windows.Forms;

namespace ImgRecognitionEmGu
{
    public partial class emImageViewer : Form
    {
        public emImageViewer(IImage image, long score = 0)
        {
            InitializeComponent();
            this.Text = "Score: " + score.ToString();

            if (image != null)
            {
                imgBox.Image = image.Bitmap;

                Size size = image.Size;
                size.Width += 12;
                size.Height += 42;
                if (!Size.Equals(size)) Size = size;
            }
        }
    }
}
```

The constructor requires two parameters: the first, image, of type IImage, is the image to be shown inside imgBox (a Mat object can be passed directly, since Mat implements IImage). The second parameter, score, is the value calculated by the methods seen above. So, if we compare two images with the following code:

```csharp
long score;
long matchTime;
using (Mat modelImage = CvInvoke.Imread("box.png", ImreadModes.Grayscale))
using (Mat observedImage = CvInvoke.Imread("box_in_scene.png", ImreadModes.Grayscale))
{
    Mat result = DrawMatches.Draw(modelImage, observedImage, out matchTime, out score);
    var iv = new emImageViewer(result, score);
    iv.Show();
}
```

The result will be:

The window title shows a score of 144. Obviously, changing the model image will result in different score values. If, for example, we compare the scene image with itself, we obtain:

Process a list of images

At this point, calculating the score for a list of images becomes trivial.

Let's assume we have a given directory, images, with a tree of subfolders, each of which may contain images. What is needed now is to traverse the entire directory tree and run a comparison for every file found. The calculated scores can be saved in a suitable in-memory structure, to be listed at the end of the process.

We start by defining that structure/class:

```csharp
class WeightedImages
{
    public string ImagePath { get; set; } = "";
    public long Score { get; set; } = 0;
}
```

WeightedImages will be used to store the path of a complete image along with its calculated score. The class is used in conjunction with a List<>:

```csharp
List<WeightedImages> imgList = new List<WeightedImages>();
```

A simple method, ProcessFolder(), recursively visits every file of each directory in the structure. For each file, it calls ProcessImage(), which is the heart of the process.

```csharp
private void ProcessFolder(string mainFolder, string detailImage)
{
    // score every file in this folder...
    foreach (var file in System.IO.Directory.GetFiles(mainFolder))
        ProcessImage(file, detailImage);

    // ...then descend into each subfolder
    foreach (var dir in System.IO.Directory.GetDirectories(mainFolder))
        ProcessFolder(dir, detailImage);
}

private void ProcessImage(string completeImage, string detailImage)
{
    // skip the detail image itself, if it lives in the scanned tree
    if (completeImage == detailImage) return;

    try
    {
        long score;
        long matchTime;

        using (Mat modelImage = CvInvoke.Imread(detailImage, ImreadModes.Color))
        using (Mat observedImage = CvInvoke.Imread(completeImage, ImreadModes.Color))
        {
            Mat homography;
            VectorOfKeyPoint modelKeyPoints;
            VectorOfKeyPoint observedKeyPoints;

            using (var matches = new VectorOfVectorOfDMatch())
            {
                Mat mask;
                DrawMatches.FindMatch(modelImage, observedImage, out matchTime, out modelKeyPoints, out observedKeyPoints, matches,
                    out mask, out homography, out score);
            }

            imgList.Add(new WeightedImages() { ImagePath = completeImage, Score = score });
        }
    }
    catch
    {
        // Any exception is silently swallowed, so a file that cannot be
        // processed (e.g. one that is not a valid image) is left out of imgList.
    }
}
```

ProcessImage() doesn't need to draw the result: it can stop as soon as the score is calculated, and that value (together with the file name) is added to imgList.
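The recursive ProcessFolder() passes every file to ProcessImage() and relies on the empty catch to skip anything that is not an image. As an alternative sketch, the traversal can be delegated to Directory.EnumerateFiles with SearchOption.AllDirectories, filtering by extension up front. The ImageFileFinder class and the extension list are hypothetical choices, to be adapted to the formats actually present in the folders.

```csharp
using System;
using System.Collections.Generic;
using System.IO;
using System.Linq;

// Hypothetical helper: lists candidate image files under a root folder.
public static class ImageFileFinder
{
    // Assumed whitelist of extensions, compared case-insensitively.
    static readonly HashSet<string> Extensions = new HashSet<string>(StringComparer.OrdinalIgnoreCase)
    {
        ".png", ".jpg", ".jpeg", ".bmp", ".tif", ".tiff"
    };

    // Walks rootFolder and all its subfolders lazily, yielding only image-like files.
    public static IEnumerable<string> FindImages(string rootFolder)
    {
        return Directory.EnumerateFiles(rootFolder, "*", SearchOption.AllDirectories)
                        .Where(f => Extensions.Contains(Path.GetExtension(f)));
    }
}
```

Inside the form, the recursive call could then be replaced by a flat loop such as foreach (var file in ImageFileFinder.FindImages(mainFolder)) ProcessImage(file, detailImage);.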

A button starts the process.

At the end of it, imgList is sorted by descending score and used as the DataSource of a DataGridView, to show the results of the process.
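The button handler itself is not listed in the article. The ordering step it describes can be sketched with LINQ's OrderByDescending; the Ranking class is hypothetical, and WeightedImages is repeated from above so that the block compiles on its own.

```csharp
using System.Collections.Generic;
using System.Linq;

class WeightedImages
{
    public string ImagePath { get; set; } = "";
    public long Score { get; set; } = 0;
}

// Hypothetical helper: highest score first.
static class Ranking
{
    // ToList() materializes the sorted sequence, giving the grid a concrete list to bind to.
    public static List<WeightedImages> Rank(IEnumerable<WeightedImages> images)
    {
        return images.OrderByDescending(w => w.Score).ToList();
    }
}
```

In the form, this would be used along the lines of resultGrid.DataSource = Ranking.Rank(imgList);, so that the first grid row holds the most likely candidate.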

In this example, the image searched for was this:

As can be seen, the sample directories have been traversed, and a score was calculated for each image found by comparing the searched image to the complete one. Please note that, since these images are in colour rather than grayscale, the load mode was set accordingly (ImreadModes.Color).

A second button allows opening the image represented by the currently selected grid row, using the emImageViewer created above:

```csharp
private void btnShow_Click(object sender, System.EventArgs e)
{
    string imgPath = resultGrid.CurrentRow.Cells[0].Value.ToString();
    long score;
    long matchTime;

    using (Mat modelImage = CvInvoke.Imread(_detailedImage, ImreadModes.Color))
    using (Mat observedImage = CvInvoke.Imread(imgPath, ImreadModes.Color))
    {
        var result = DrawMatches.Draw(modelImage, observedImage, out matchTime, out score);
        var iv = new emImageViewer(result, score);
        iv.Show();
    }
}
```

Opening all the images in the list results in the following:

And we can see that the correct image was identified by the highest score in the list.

Further implementations

The presented code should be considered suitable for learning purposes only. A further implementation, able to manage, for example, many thousands of images in a single run, could make the program asynchronous / multi-threaded, to better distribute the workload and reduce processing time.
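A possible starting point for the multi-threaded variant is sketched below with Parallel.ForEach. The scoreImage delegate is a hypothetical stand-in for the FindMatch()-based scoring done in ProcessImage(), and the ParallelScoring class is likewise hypothetical. Results go into a ConcurrentBag because List<T> (like imgList) is not safe for concurrent writes. The sketch assumes each call opens its own Mat objects, as ProcessImage() already does, and does not rely on sharing Emgu objects between threads.

```csharp
using System;
using System.Collections.Concurrent;
using System.Collections.Generic;
using System.Linq;
using System.Threading.Tasks;

// Hypothetical helper: scores many images concurrently, then ranks them.
public static class ParallelScoring
{
    public static List<KeyValuePair<string, long>> ScoreAll(
        IEnumerable<string> imagePaths, Func<string, long> scoreImage, int maxDegreeOfParallelism)
    {
        var results = new ConcurrentBag<KeyValuePair<string, long>>(); // thread-safe collection
        var options = new ParallelOptions { MaxDegreeOfParallelism = maxDegreeOfParallelism };

        Parallel.ForEach(imagePaths, options, path =>
        {
            results.Add(new KeyValuePair<string, long>(path, scoreImage(path)));
        });

        // Highest score first, as in the single-threaded version.
        return results.OrderByDescending(r => r.Value).ToList();
    }
}
```

From a WinForms button handler, the call would typically be wrapped in Task.Run and awaited, so that the UI stays responsive while the images are processed; the grid is then bound once the results are back on the UI thread.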

Source code

The source code used in the article can be downloaded at https://code.msdn.microsoft.com/Image-recognition-with-C-b13b2864