Logic Apps: Message Validation with XML, JSON and Flat-File Schemas (part 2)

1 Introduction

The first article in this series gave an overview of the message validation options available in Logic Apps, highlighted some shortcomings, and discussed the various XML validation methods in detail. This second article concentrates on the JSON schema validation options available in Logic Apps. At present, JSON schemas cannot be uploaded to the Integration Account, and there is no equivalent of the XML Validation action for JSON; nevertheless, we will try to replicate for JSON schemas the functionality that exists for XML schemas. This article assumes the reader understands JSON Schema and is familiar with Azure Logic Apps and Azure Functions.

2 Storing and Retrieving JSON Schemas

As we saw in the first article, Logic Apps uses an Integration Account for storing XML schemas and provides the Integration Account Artifact Lookup action for searching and retrieving Integration Account artifacts. Unfortunately, neither of these can be used for JSON schemas. One alternative for storing JSON schema files is a file share in an Azure Storage account. Details on how to create a file share in Azure Files can be read here. Once the files have been uploaded, they can be retrieved within a Logic App using the Get file actions, e.g.:

The file name can be entered at design-time or specified dynamically at runtime. For further details on the Azure File Share Connector see here.

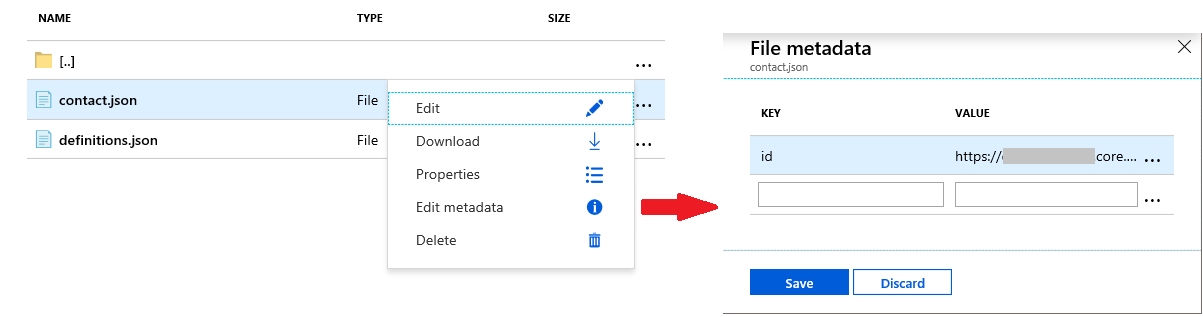

Just like the Integration Account Artifact Lookup action, the above method is limited in that it cannot be used for searching. The first article gave an alternative Azure Function that searched the XML schemas stored in the Integration Account by root node name and namespace. Something similar can be achieved with files stored in the file share. Metadata in the form of key/value pairs can be added to each file, e.g.:

This metadata can subsequently be used for searching using an Azure Function. The code below searches for a schema file (in a file share folder) based on a metadata key/value pair and returns the physical file name:

run.csx

    #r "Microsoft.WindowsAzure.Storage"

    using Microsoft.Azure;
    using Microsoft.WindowsAzure.Storage;
    using Microsoft.WindowsAzure.Storage.File;
    using System.Net;
    using System.Linq;
    using System.Collections.Concurrent;

    // Cache of schema files (including their metadata), reused across invocations.
    public static ConcurrentBag<CloudFile> _schemas = null;

    public static HttpResponseMessage Run(HttpRequestMessage req, TraceWriter log)
    {
        log.Info("C# HTTP trigger function processed a request.");

        // Get Azure file share and schemas folder reference (first call only).
        if (_schemas == null)
        {
            CloudStorageAccount storageAccount = CloudStorageAccount.Parse(CloudConfigurationManager.GetSetting("AzureWebJobsStorage"));
            CloudFileClient fileClient = storageAccount.CreateCloudFileClient();
            CloudFileShare fileShare = fileClient.GetShareReference("integration");
            CloudFileDirectory rootDir = fileShare.GetRootDirectoryReference();
            CloudFileDirectory schemasDir = rootDir.GetDirectoryReference("Schemas");

            var schemas = new ConcurrentBag<CloudFile>(schemasDir.ListFilesAndDirectories().OfType<CloudFile>());

            // Fetch each file's attributes so that its metadata is populated.
            foreach (CloudFile cf in schemas)
            {
                cf.FetchAttributes();
            }

            // Publish the cache only once every file has been loaded.
            _schemas = schemas;
        }

        // Find schema name for the given metadata Key/Value pair.
        string key = req.Headers.GetValues("Key").First();
        string value = req.Headers.GetValues("Value").First();

        CloudFile file = _schemas.FirstOrDefault(s => s.Metadata.ContainsKey(key) && s.Metadata[key] == value);

        // Return the physical file name, or an empty string when nothing matches.
        return req.CreateResponse(HttpStatusCode.OK, file != null ? file.Name : String.Empty);
    }

project.json

    {
      "frameworks": {
        "net46": {
          "dependencies": {
            "Microsoft.WindowsAzure.ConfigurationManager": "3.2.3"
          }
        }
      }
    }
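The search function above only finds a schema if the file already carries the metadata being searched for. As a minimal sketch, the metadata can be set in code with the same storage client library the function uses. The file name product.json, the RootNode key and its value, and the STORAGE_CONNECTION environment variable are assumptions made purely for illustration.

```csharp
using System;
using System.Threading.Tasks;
using Microsoft.WindowsAzure.Storage;
using Microsoft.WindowsAzure.Storage.File;

public static class SetSchemaMetadata
{
    public static async Task Main()
    {
        // Assumption: the storage connection string is supplied in an environment variable.
        string connectionString = Environment.GetEnvironmentVariable("STORAGE_CONNECTION");

        CloudStorageAccount account = CloudStorageAccount.Parse(connectionString);
        CloudFileClient client = account.CreateCloudFileClient();

        // Same share and folder as the search function; the file name is hypothetical.
        CloudFile file = client.GetShareReference("integration")
                               .GetRootDirectoryReference()
                               .GetDirectoryReference("Schemas")
                               .GetFileReference("product.json");

        // Load the existing metadata first so that other keys are preserved.
        await file.FetchAttributesAsync();

        // Hypothetical key/value pair; metadata keys must be valid C# identifiers.
        file.Metadata["RootNode"] = "Product";
        await file.SetMetadataAsync();

        Console.WriteLine("Metadata set on " + file.Name);
    }
}
```

A request to the search function with the headers Key: RootNode and Value: Product would then be expected to return product.json.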

3 JSON Validation

Logic App HTTP Request Trigger

Enabling validation on the HTTP Request trigger is described in detail here. By default, schema validation is disabled. If validation is enabled, the request's Content-Type header must be set to application/json or the validation will fail. Any attempt to reference an external schema will cause the Logic App to fail when saving, as shown below, with a message stating that the referenced schema could not be resolved:

Another point to note is that setting the $schema value to any version but draft-04 will produce a warning “unable to load schema” as shown below:

However, this does not prevent the Logic App from saving or the validation from executing successfully.

The Logic App Parse JSON Action

As mentioned previously, JSON schemas cannot be uploaded to or retrieved from the Integration Account (there are a couple of hacks, see Appendix A). Consequently, there is no equivalent of the XML Validation action for JSON messages. There is, however, a Parse JSON action, which requires a JSON schema to be specified and validates a JSON message when it is parsed. The Parse JSON action is described in detail here.

One way of replicating the XML Validation action for JSON messages is to store the JSON schema in a Storage Account file share (as described previously) and use the Get file content action to retrieve the schema file. To validate, use the 'Parse JSON' action and reference the file content, casting it to JSON as shown below:

This method does work (the Logic App saves and runs without any issue) even though the designer warns '[json] Expected a JSON object, array or literal.' One restriction on the 'Parse JSON' action schema is that external references are not resolved. Just as with the XML Validation action, the Parse JSON action does not use a resolver, so attempting to reference external JSON schemas will fail with the error described in article 1.

Both the HTTP Request trigger and Parse JSON action can validate against three schema specifications: draft 3, draft 4 and draft 6. By way of example, consider the validation of the following JSON message:

    {
      "price": 12.5,
      "productId": 1,
      "productName": "A green door",
      "tags": [
        "home",
        "green"
      ]
    }

If we concentrate on two validation keywords that changed between the versions, required and exclusiveMinimum, then the JSON schema for each draft version would be as follows (note that the identifier keyword is id in drafts 3 and 4 and $id from draft 6 onwards):

Draft 3

    {
      "id": "http://example.com/product.schema.json",
      "$schema": "http://json-schema.org/draft-03/schema",
      "description": "A product from Acme's catalog",
      "properties": {
        "price": {
          "description": "The price of the product",
          "exclusiveMinimum": true,
          "minimum": 13,
          "required": true,
          "type": "number"
        },
        "productId": {
          "description": "The unique identifier for a product",
          "required": true,
          "type": "integer"
        },
        "productName": {
          "description": "Name of the product",
          "required": true,
          "type": "string"
        }
      },
      "title": "Product",
      "type": "object"
    }

Draft 4

    {
      "id": "http://example.com/product.schema.json",
      "$schema": "http://json-schema.org/draft-04/schema",
      "description": "A product from Acme's catalog",
      "properties": {
        "price": {
          "description": "The price of the product",
          "exclusiveMinimum": true,
          "minimum": 13,
          "type": "number"
        },
        "productId": {
          "description": "The unique identifier for a product",
          "type": "integer"
        },
        "productName": {
          "description": "Name of the product",
          "type": "string"
        }
      },
      "required": [ "productId", "productName", "price" ],
      "title": "Product",
      "type": "object"
    }

Draft 6

    {
      "$schema": "http://json-schema.org/draft-06/schema#",
      "$id": "http://example.com/product.schema.json",
      "title": "Product",
      "description": "A product from Acme's catalog",
      "type": "object",
      "properties": {
        "productId": {
          "description": "The unique identifier for a product",
          "type": "integer"
        },
        "productName": {
          "description": "Name of the product",
          "type": "string"
        },
        "price": {
          "description": "The price of the product",
          "type": "number",
          "exclusiveMinimum": 13
        }
      },
      "required": [ "productId", "productName", "price" ]
    }

Specifying the wrong $schema value causes the Parse JSON action to apply the wrong schema version and results in an error like the following (depending on the differences between the schema versions): “InvalidTemplate. Unable to process template language expressions in action 'xxxxxx' inputs at line 'x' and column 'x': 'Unexpected token encountered when reading value for 'required'. Expected Boolean, got StartArray. Path 'schema.required'.'.” If the $schema value is omitted, the Parse JSON action uses the correct schema version to validate the JSON message.
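The two forms of exclusiveMinimum can be compared outside Logic Apps. The following is a minimal sketch, assuming the Newtonsoft.Json.Schema package (used later in this article) is referenced; it does not show how the Parse JSON action itself is implemented. The class name, the cut-down schemas and the sample prices are illustrative only.

```csharp
using System;
using System.Collections.Generic;
using Newtonsoft.Json.Linq;
using Newtonsoft.Json.Schema;

public static class DraftComparison
{
    // Draft 4: exclusiveMinimum is a boolean that modifies "minimum".
    public const string Draft4Schema = @"{
        ""$schema"": ""http://json-schema.org/draft-04/schema#"",
        ""type"": ""object"",
        ""properties"": {
            ""price"": { ""type"": ""number"", ""minimum"": 13, ""exclusiveMinimum"": true }
        },
        ""required"": [ ""price"" ]
    }";

    // Draft 6: exclusiveMinimum carries the limit itself.
    public const string Draft6Schema = @"{
        ""$schema"": ""http://json-schema.org/draft-06/schema#"",
        ""type"": ""object"",
        ""properties"": {
            ""price"": { ""type"": ""number"", ""exclusiveMinimum"": 13 }
        },
        ""required"": [ ""price"" ]
    }";

    // Returns the validation error messages; an empty list means the message is valid.
    public static IList<string> Validate(string schemaJson, string messageJson)
    {
        JSchema schema = JSchema.Parse(schemaJson);
        JObject message = JObject.Parse(messageJson);
        message.IsValid(schema, out IList<string> errors);
        return errors;
    }

    public static void Main()
    {
        string[] messages = { @"{ ""price"": 12.5 }", @"{ ""price"": 13 }", @"{ ""price"": 13.5 }" };
        foreach (string message in messages)
        {
            Console.WriteLine("{0} -> draft 4 errors: {1}, draft 6 errors: {2}",
                message,
                Validate(Draft4Schema, message).Count,
                Validate(Draft6Schema, message).Count);
        }
    }
}
```

Both schemas express the same rule, a price strictly greater than 13, so the two validators should agree on every message; only the syntax of the keyword differs between the drafts.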

JSON Validation with an Azure Function

We can, if needed, implement our own custom JSON validation function. The Newtonsoft.Json.Schema namespace can be used within an Azure Function to perform any required validation. This option may be appropriate where some functionality is lacking in the current Parse JSON action, e.g. external references. The function uses a JSchemaPreloadedResolver to preload any external schemas. The root schema’s $id (see the example below) is used to set the base URI for the external schemas. The function checks for external references: if the schema references an external file (i.e. there is a value preceding the ‘#’ in the $ref token), that schema is retrieved from the file share and added to the JSchemaPreloadedResolver object.

The example below retrieves the schema(s) from a file share for a given schemaName value passed in the header and validates the JSON message passed in the body. This file share is created within the same file storage as the Azure Function which is why it uses the AzureWebJobsStorage connection string (otherwise a new connection string to some other file storage would need to be added to the function’s configuration):

schema.json

    {
      "$schema": "http://json-schema.org/draft-07/schema",
      "$id": "https://xxxxxx.file.core.windows.net/integration/contactref.json",
      "type": "object",
      "properties": {
        "contact": {
          "type": "object",
          "properties": {
            "address": { "$ref": "contactdefs.json#/definitions/address" },
            "person": { "$ref": "contactdefs.json#/definitions/person" }
          }
        }
      }
    }

run.csx

    #r "Microsoft.WindowsAzure.Storage"
    #r "Newtonsoft.Json"

    using Microsoft.Azure;
    using Microsoft.WindowsAzure.Storage;
    using Microsoft.WindowsAzure.Storage.File;
    using Newtonsoft.Json;
    using Newtonsoft.Json.Linq;
    using Newtonsoft.Json.Schema;
    using Newtonsoft.Json.Serialization;
    using System.Linq;
    using System.Net;

    public static async Task<HttpResponseMessage> Run(HttpRequestMessage req, TraceWriter log)
    {
        log.Info("C# HTTP trigger function processed a request.");

        // The request body is the JSON message to validate.
        string input = await req.Content.ReadAsStringAsync();
        JObject message = JObject.Parse(input);

        // Get Azure file share and schemas folder reference.
        CloudStorageAccount storageAccount = CloudStorageAccount.Parse(CloudConfigurationManager.GetSetting("AzureWebJobsStorage"));
        CloudFileClient fileClient = storageAccount.CreateCloudFileClient();
        CloudFileShare fileShare = fileClient.GetShareReference("integration");
        CloudFileDirectory rootDir = fileShare.GetRootDirectoryReference();
        CloudFileDirectory schemasDir = rootDir.GetDirectoryReference("Schemas");

        // Load schema specified in the header SchemaName field (e.g. contactref.json).
        string schemaName = req.Headers.GetValues("SchemaName").First();
        CloudFile schemaFile = schemasDir.GetFileReference(schemaName);
        JObject joSchema;
        using (StreamReader sr = new StreamReader(schemaFile.OpenRead()))
        {
            joSchema = JObject.Load(new JsonTextReader(sr));
        }

        // Preload all externally referenced schemas; the root schema's $id gives the base URI.
        JSchemaPreloadedResolver jpr = new JSchemaPreloadedResolver();
        string id = (string)joSchema.SelectToken("$id");
        string baseURI = id.Substring(0, id.LastIndexOf('/'));
        var externalRefs = GetExternalRefs(joSchema);
        RetrieveExternals(jpr, externalRefs, baseURI, schemasDir);

        JSchema js = JSchema.Parse(joSchema.ToString(), jpr);
        message.IsValid(js, out IList<ValidationError> validationErrors);

        // Create validation response: empty when valid, otherwise the serialized errors.
        string response = String.Empty;
        if (validationErrors.Count > 0)
        {
            response = JsonConvert.SerializeObject(validationErrors);
        }

        return req.CreateResponse(HttpStatusCode.OK, response);
    }

    // Recursive method to retrieve all referenced external schemas.
    // Note: circular references between schema files are not detected.
    static void RetrieveExternals(JSchemaPreloadedResolver jpr, IEnumerable<string> externalReferences, string baseURI, CloudFileDirectory schemasDir)
    {
        foreach (string er in externalReferences)
        {
            using (StreamReader sr = new StreamReader(schemasDir.GetFileReference(er).OpenRead()))
            {
                // Retrieve schema.
                JObject joSchema = JObject.Load(new JsonTextReader(sr));

                // Search for any external references.
                var externalRefs = GetExternalRefs(joSchema);
                if (externalRefs.Count() > 0) { RetrieveExternals(jpr, externalRefs, baseURI, schemasDir); }

                // Add schema to the preloaded resolver.
                jpr.Add(new Uri(baseURI + "/" + er), joSchema.ToString());
            }
        }
    }

    // Find all external schema references, i.e. $ref values with a file name before the '#'.
    static IEnumerable<string> GetExternalRefs(JObject schema)
    {
        return schema
            .Descendants()
            .Where(x => x is JObject && x["$ref"] != null && ((string)x["$ref"]).IndexOf("#") > 0)
            .Select(x => ((string)x["$ref"]).Remove(((string)x["$ref"]).IndexOf("#")))
            .Distinct()
            .ToList();
    }

project.json

    {
      "frameworks": {
        "net46": {
          "dependencies": {
            "Microsoft.WindowsAzure.ConfigurationManager": "3.2.3",
            "Newtonsoft.Json.Schema": "3.0.11"
          }
        }
      }
    }
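The resolver behaviour that the function relies on can be tried in isolation, without a storage account. The following is a minimal sketch, assuming only the Newtonsoft.Json.Schema package: both schemas are held in memory, and the example.org URIs, the cut-down definitions schema and the sample messages are hypothetical stand-ins for the files on the file share.

```csharp
using System;
using System.Collections.Generic;
using Newtonsoft.Json.Linq;
using Newtonsoft.Json.Schema;

public static class PreloadedResolverDemo
{
    // Root schema: its $id supplies the base URI against which the relative $ref is resolved.
    public const string RootSchema = @"{
        ""$schema"": ""http://json-schema.org/draft-07/schema#"",
        ""$id"": ""https://example.org/integration/contactref.json"",
        ""type"": ""object"",
        ""properties"": {
            ""person"": { ""$ref"": ""contactdefs.json#/definitions/person"" }
        }
    }";

    // Hypothetical external schema holding the shared definitions.
    public const string DefsSchema = @"{
        ""definitions"": {
            ""person"": {
                ""type"": ""object"",
                ""properties"": { ""surname"": { ""type"": ""string"" } },
                ""required"": [ ""surname"" ]
            }
        }
    }";

    // Returns the validation error messages; an empty list means the message is valid.
    public static IList<string> Validate(string messageJson)
    {
        // Register the external schema under the URI the relative $ref resolves to.
        var resolver = new JSchemaPreloadedResolver();
        resolver.Add(new Uri("https://example.org/integration/contactdefs.json"), DefsSchema);

        JSchema schema = JSchema.Parse(RootSchema, resolver);
        JObject.Parse(messageJson).IsValid(schema, out IList<string> errors);
        return errors;
    }

    public static void Main()
    {
        Console.WriteLine("complete person, errors: " + Validate(@"{ ""person"": { ""surname"": ""Smith"" } }").Count);
        Console.WriteLine("missing surname, errors: " + Validate(@"{ ""person"": { } }").Count);
    }
}
```

The key point is that the URI passed to the resolver must match the root schema's base URI plus the file name used in the $ref; this is why the Azure Function derives baseURI from the $id value.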

JSON Validation with Integration Account Maps & Assemblies

It is possible to transform JSON instances using XSLT. The functions for casting JSON to and from XML (json-to-xml, parse-json and xml-to-json) were introduced in XSLT 3.0. This might seem strange, but the Liquid actions have some limitations (for example, there’s no conditional select, no pattern matching approach, no custom .NET code), and in some instances, for complex transformations (e.g., see here), it may make more sense to perform the transformation using XSLT.

As already mentioned in the first article, input instance validation prior to performing the transformation has been available since XSLT 2.0. It was also mentioned, however, that schema validation (using the xsl:import-schema declaration) is not available in the current XSLT 2.0/3.0 processor provided in Logic Apps. Nevertheless, as in article 1 for XML validation, we can use XSLT 1.0 and C# script to implement the same pattern by utilizing the Newtonsoft.Json.Schema namespace.

This solution requires uploading Newtonsoft.Json.Schema.dll (version 3.0.11) to the Integration Account assemblies blade. First, however, this assembly and the Newtonsoft.Json.dll (version 12.0.2) assembly must be merged into a single assembly using ILMerge (see here). Run the following command to generate the merged assembly:

ILMerge.exe /out:Newtonsoft.Json.Merged.dll Newtonsoft.Json.Schema.dll Newtonsoft.Json.dll /targetplatform:"v4, C:\Windows\Microsoft.NET\Framework\v4.0.30319"

The assembly can now be referenced in the XSLT. An example of how to do this is given below. The names of the JSON schemas to use in the validation are passed in an xsl:param parameter, and the schemas themselves are retrieved from a .NET assembly (Example.Schemas). The XML message is first cast back to JSON, then the JSON schemas are retrieved and added to a JSchemaPreloadedResolver object, and finally the JSON message is validated and the validation result returned (note: the first JSON schema in the list is assumed to be the parent schema; all subsequent schemas are loaded into the resolver):

XSLT

    <?xml version="1.0" encoding="utf-8"?>
    <xsl:stylesheet xmlns:xsl="http://www.w3.org/1999/XSL/Transform"
                    xmlns:msxsl="urn:schemas-microsoft-com:xslt"
                    exclude-result-prefixes="msxsl s0 ScriptNS0" version="1.0"
                    xmlns:s0="http://AzureSchemasDemo.examples.org/Person"
                    xmlns:ns0="http://AzureLogicAppsMapsDemo/Contact"
                    xmlns:ScriptNS0="http://schemas.microsoft.com/BizTalk/2003/ScriptNS0">
      <xsl:output omit-xml-declaration="yes" method="xml" indent="yes" version="1.0" />

      <!-- Comma-separated list of schema names; the first is the parent schema. -->
      <xsl:param name="SchemaList" select="'contact.json'" />

      <!-- Validate() returns an empty string when the message is valid, otherwise the first error. -->
      <xsl:variable name="validate" select="ScriptNS0:Validate(., $SchemaList)" />

      <xsl:template match="/">
        <xsl:choose>
          <xsl:when test="$validate=''">
            <xsl:apply-templates select="/s0:Person" />
          </xsl:when>
          <xsl:otherwise>
            <ValidationError>
              <xsl:value-of select="$validate" />
            </ValidationError>
          </xsl:otherwise>
        </xsl:choose>
      </xsl:template>

      <xsl:template match="/s0:Person">
        <ns0:Contact>
          <Title>
            <xsl:value-of select="Title/text()" />
          </Title>
          <Forename>
            <xsl:value-of select="Forename/text()" />
          </Forename>
          <Surname>
            <xsl:value-of select="Surname/text()" />
          </Surname>
        </ns0:Contact>
      </xsl:template>

      <msxsl:script language="C#" implements-prefix="ScriptNS0">
        <msxsl:assembly name="System.Core, Version=4.0.0.0, Culture=neutral, PublicKeyToken=b77a5c561934e089"/>
        <msxsl:assembly name="Newtonsoft.Json.Merged, Version=3.0.0.0, Culture=neutral, PublicKeyToken=null"/>
        <msxsl:assembly name="Example.Schemas, Version=1.0.0.0, Culture=neutral, PublicKeyToken=d03b35603a2999a2"/>
        <msxsl:using namespace="System"/>
        <msxsl:using namespace="System.Reflection"/>
        <msxsl:using namespace="System.IO"/>
        <msxsl:using namespace="System.Xml"/>
        <msxsl:using namespace="System.Collections.Generic"/>
        <msxsl:using namespace="Newtonsoft.Json"/>
        <msxsl:using namespace="Newtonsoft.Json.Linq"/>
        <msxsl:using namespace="Newtonsoft.Json.Schema"/>
        <![CDATA[
        public string Validate(XPathNodeIterator nodes, string schemaList)
        {
            // Cast the incoming XML message back to JSON.
            nodes.MoveNext();
            XmlDocument xmlDoc = new XmlDocument();
            xmlDoc.LoadXml(nodes.Current.InnerXml);
            Newtonsoft.Json.Linq.JObject input = Newtonsoft.Json.Linq.JObject.Parse(Newtonsoft.Json.JsonConvert.SerializeXmlNode(xmlDoc));

            string[] schemas = schemaList.Split(',');
            Newtonsoft.Json.Linq.JObject parentSchema = null;
            var assembly = Assembly.Load("Example.Schemas, Version=1.0.0.0, Culture=neutral, PublicKeyToken=d03b35603a2999a2");
            Newtonsoft.Json.Schema.JSchemaPreloadedResolver jpr = new Newtonsoft.Json.Schema.JSchemaPreloadedResolver();

            // Load each schema from the embedded resources of the schemas assembly.
            for (int i = 0; i < schemas.Length; i++)
            {
                string schemaName = schemas[i].Trim();
                using (Stream stream = assembly.GetManifestResourceStream(assembly.GetName().Name + "." + schemaName))
                {
                    if (i == 0)
                    {
                        // The first schema in the list is the parent schema.
                        parentSchema = Newtonsoft.Json.Linq.JObject.Load(new Newtonsoft.Json.JsonTextReader(new StreamReader(stream)));
                    }
                    else
                    {
                        // All other schemas are made available to the resolver.
                        jpr.Add(new Uri(schemaName, UriKind.RelativeOrAbsolute), stream);
                    }
                }
            }

            IList<Newtonsoft.Json.Schema.ValidationError> validationErrors = null;
            Newtonsoft.Json.Schema.JSchema js = Newtonsoft.Json.Schema.JSchema.Parse(parentSchema.ToString(), jpr);
            input.IsValid(js, out validationErrors);

            // Return the first error message, or an empty string when the message is valid.
            if (validationErrors.Count > 0)
            {
                return validationErrors[0].Message;
            }
            return String.Empty;
        }
        ]]>
      </msxsl:script>
    </xsl:stylesheet>

C# Class library

The JSON schemas are added to a .NET assembly (a C# class library with the default class Class1 removed) with their Build Action set to Embedded Resource, and the assembly is uploaded to the Integration Account assemblies blade.

JSON Validation with a Custom Logic App Connector

Another JSON validation option is to use a custom Logic App connector to allow a Logic App to communicate with a JSON validation service. A Logic App custom connector is a wrapper around a REST (or SOAP) API that allows Logic Apps (or Flow and PowerApps) to communicate with that API. There are numerous JSON validator libraries available (e.g. see here), and so long as a service built on one of them exposes a RESTful API, a Custom Connector can be created for it (see here). The Custom Connector is created from either an OpenAPI definition or a Postman collection that describes the API.

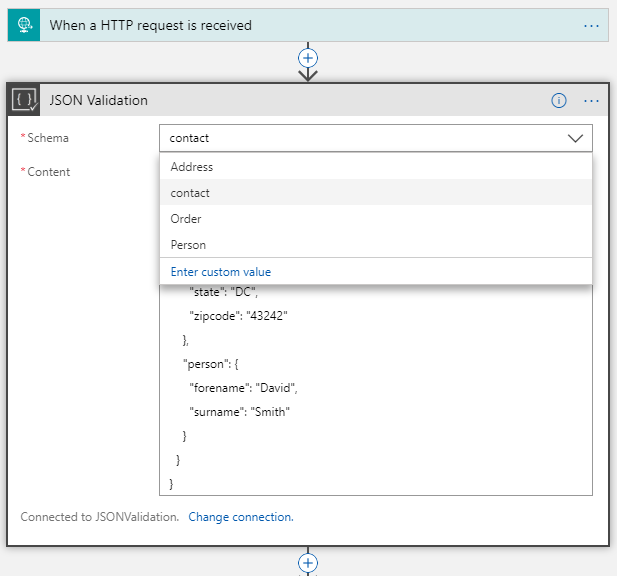

It is also possible to create a Custom Connector for our JSON validation Azure Function, since Azure Functions expose a REST API. Consequently, we can create a JSON validation connector with similar functionality to that of the XML Validation action. The code to do this is given in Appendix B (it extends the code given in the section JSON Validation with an Azure Function and adds an OpenAPI definition) and is used as follows:

The Custom Connector takes two parameters. The first is the name of a schema file on the file share, which is presented in a drop-down list for selection at design-time or can be set at run-time. The second is the JSON content to validate. The result of a validation failure looks like the following output:

JSON Validation using Third-Party JSON Validators

Another JSON validation option is to use a third-party or open-source validator (for example, NJsonSchema). There may be several reasons for doing this (e.g. performance), and so long as all the required dependencies can be uploaded to an Azure Function bin folder, a script can be written to load and call the validator and return the result to the calling Logic App.
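As an illustration, the core of such a script might look like the following. This is a minimal sketch, assuming the NJsonSchema package listed in the references (version 10.x) is available; the class name, schema and sample messages are made up for the example, and the surrounding Azure Function plumbing is omitted.

```csharp
using System;
using System.Collections.Generic;
using System.Linq;
using NJsonSchema;

public static class NJsonSchemaDemo
{
    // Hypothetical cut-down version of the product schema.
    public const string ProductSchema = @"{
        ""type"": ""object"",
        ""properties"": {
            ""productId"": { ""type"": ""integer"" },
            ""productName"": { ""type"": ""string"" }
        },
        ""required"": [ ""productId"", ""productName"" ]
    }";

    // Returns one line per validation error; an empty list means the message is valid.
    public static List<string> Validate(string messageJson)
    {
        // The asynchronous load is awaited synchronously to keep the sketch short.
        JsonSchema schema = JsonSchema.FromJsonAsync(ProductSchema).GetAwaiter().GetResult();
        return schema.Validate(messageJson).Select(e => e.ToString()).ToList();
    }

    public static void Main()
    {
        Console.WriteLine("complete product, errors: " + Validate(@"{ ""productId"": 1, ""productName"": ""A green door"" }").Count);
        Console.WriteLine("missing name, errors: " + Validate(@"{ ""productId"": 1 }").Count);
    }
}
```

In an Azure Function, the list returned by Validate could be serialized into the HTTP response in the same way as the Newtonsoft.Json.Schema errors in the earlier function.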

JSON Validation by converting a JSON Schema to an XML Schema

JSON schemas cannot be uploaded to the Integration Account, and the JSON schema pattern keyword has been blocked due to DoS security concerns. One workaround for both issues is to convert the JSON schema to an XML schema (XSD). Commercial tools exist that perform this conversion, and there is also an open-source one available on GitHub (see here). Alternatively, one can start with a fairly complete JSON document (one that conforms to the specific JSON schema) and use a JSON to XML converter (e.g. JSON to XML Convertor) to convert it to an XML document. Next, use an XSD generator (such as the one in Visual Studio) to generate an XSD from the XML document.

The XSD can now be uploaded to the Integration Account schemas blade and the XML Validation action can be used to validate the JSON message. The JSON message must first be cast to XML using the native xml() function. Because the XML Validation action runs in its own sandbox environment, the xsd:pattern facet can also be used (see here).

4 Summary

This article has focused in detail on the various JSON validation options available to us in Logic Apps and on how we can implement functionality similar to XML validation despite not being able to use the Integration Account for JSON schema storage and retrieval. It has also provided workarounds for avoiding embedding schemas within a Logic App, for referencing external JSON schemas, and for using the pattern keyword. In the final article we’ll review the flat-file validation options currently available in Logic Apps.

5 References

Validating JSON with JSON Schema:

https://www.newtonsoft.com/json/help/html/JsonSchema.htm

JSON Schema Specification Links:

http://json-schema.org/specification-links.html

NJsonSchema (10.0.10):

https://www.nuget.org/packages/NJsonSchema/10.0.10

Azure Functions C# script (.csx) developer reference:

/en-us/azure/azure-functions/functions-reference-csharp

Microsoft.Azure.Storage.File Namespace:

/en-us/dotnet/api/microsoft.azure.storage.file?view=azure-dotnet

6 Appendix A: Uploading JSON Schemas to the Integration Account

One method of uploading a JSON schema to the Integration Account is to embed the JSON schema within a CDATA section in an xs:documentation element of an XML schema, and then use the xpath() function in the Logic App to retrieve it, e.g.:

    <?xml version="1.0"?>
    <xs:schema xmlns:xs="http://www.w3.org/2001/XMLSchema">
      <xs:annotation>
        <xs:documentation>
          <![CDATA[ JSON schema goes here ]]>
        </xs:documentation>
      </xs:annotation>
    </xs:schema>

Alternatively, you can upload the JSON schema to the Integration Account Maps blade (select the Liquid map type). The file is only checked for valid JSON content and not specifically for valid Liquid content. The JSON schema can then be referenced as follows:

7 Appendix B: Custom Logic App Connector

Open API

    {
      "swagger": "2.0",
      "info": {
        "title": "JSON Validation",
        "description": "",
        "version": "1.0"
      },
      "host": "xxxxxxxxxxxxx.azurewebsites.net",
      "basePath": "/",
      "schemes": [
        "https"
      ],
      "consumes": [],
      "produces": [],
      "paths": {
        "/api/JSONValidation/schema/list": {
          "get": {
            "description": "Gets a list of all the JSON schemas in the file share.",
            "summary": "Schema List",
            "operationId": "GetSchemaList",
            "x-ms-visibility": "internal",
            "parameters": [],
            "responses": {
              "200": {
                "description": "OK",
                "schema": {
                  "$ref": "#/definitions/SchemaList"
                }
              }
            }
          }
        },
        "/api/JSONValidation/schema/validate": {
          "post": {
            "description": "Validates the JSON instance against the given schema name.",
            "summary": "JSON Validation",
            "operationId": "Validate",
            "parameters": [
              {
                "name": "SchemaName",
                "in": "header",
                "required": true,
                "description": "The name of the JSON schema to use from the file share.",
                "type": "string",
                "x-ms-summary": "Schema",
                "x-ms-dynamic-values": {
                  "operationId": "GetSchemaList",
                  "value-path": "id",
                  "value-title": "name"
                }
              },
              {
                "name": "Content",
                "in": "body",
                "required": true,
                "description": "The JSON content to validate.",
                "schema": { "type": "string" },
                "x-ms-summary": "Content"
              }
            ],
            "responses": {
              "200": {
                "description": "OK",
                "schema": {
                  "type": "object"
                }
              }
            }
          }
        }
      },
      "definitions": {
        "SchemaList": {
          "type": "array",
          "items": {
            "type": "object",
            "properties": {
              "id": {
                "type": "string"
              },
              "name": {
                "type": "string"
              }
            },
            "required": [
              "id",
              "name"
            ]
          }
        }
      },
      "parameters": {},
      "responses": {},
      "securityDefinitions": {},
      "security": [],
      "tags": []
    }

run.csx

    #r "Microsoft.WindowsAzure.Storage"
    #r "Newtonsoft.Json"

    using Microsoft.Azure;
    using Microsoft.WindowsAzure.Storage;
    using Microsoft.WindowsAzure.Storage.File;
    using Newtonsoft.Json;
    using Newtonsoft.Json.Linq;
    using Newtonsoft.Json.Schema;
    using Newtonsoft.Json.Serialization;
    using System;
    using System.Linq;
    using System.Net;
    using System.Net.Http.Formatting;

    // "action" is bound from the route and is either "list" or "validate".
    public static async Task<HttpResponseMessage> Run(HttpRequestMessage req, string action, TraceWriter log)
    {
        log.Info("C# HTTP trigger function processed a request.");
        log.Info("action: " + action);

        string input = await req.Content.ReadAsStringAsync();
        string share = "integration";
        string folderPath = "schemas";
        string response = String.Empty;

        // Get Azure file share and schemas folder reference.
        CloudStorageAccount storageAccount = CloudStorageAccount.Parse(CloudConfigurationManager.GetSetting("AzureWebJobsStorage"));
        CloudFileClient fileClient = storageAccount.CreateCloudFileClient();
        CloudFileShare fileShare = fileClient.GetShareReference(share);
        CloudFileDirectory rootDir = fileShare.GetRootDirectoryReference();
        CloudFileDirectory schemasDir = rootDir.GetDirectoryReference(folderPath);

        switch (action)
        {
            case "list":
                // Retrieve a list of schema names (from the file names).
                List<SchemaFile> files = new List<SchemaFile>();
                foreach (IListFileItem lfi in schemasDir.ListFilesAndDirectories())
                {
                    if (lfi is CloudFile)
                    {
                        string fileName = lfi.Uri.Segments.Last();
                        files.Add(new SchemaFile { id = fileName, name = Path.GetFileNameWithoutExtension(fileName) });
                    }
                }
                return req.CreateResponse(HttpStatusCode.OK, files, JsonMediaTypeFormatter.DefaultMediaType);

            case "validate":
                // Load schema specified in the header SchemaName field.
                string schemaName = req.Headers.GetValues("SchemaName").First();
                CloudFile schemaFile = schemasDir.GetFileReference(schemaName);
                JObject joSchema;
                using (StreamReader sr = new StreamReader(schemaFile.OpenRead()))
                {
                    joSchema = JObject.Load(new JsonTextReader(sr));
                }

                // Preload all externally referenced schemas; the root schema's $id gives the base URI.
                JSchemaPreloadedResolver jpr = new JSchemaPreloadedResolver();
                string id = (string)joSchema.SelectToken("$id");
                string baseURI = id.Substring(0, id.LastIndexOf('/'));
                var externalRefs = GetExternalRefs(joSchema);
                RetrieveExternals(jpr, externalRefs, baseURI, schemasDir);

                JObject message = JObject.Parse(input);
                JSchema js = JSchema.Parse(joSchema.ToString(), jpr);
                message.IsValid(js, out IList<ValidationError> validationErrors);

                // Create validation response: empty when valid, otherwise the serialized errors.
                if (validationErrors.Count > 0)
                {
                    response = JsonConvert.SerializeObject(validationErrors);
                }
                log.Info("response: " + response);
                return req.CreateResponse(HttpStatusCode.OK, response);
        }

        // Unknown action: return an empty response.
        return req.CreateResponse(HttpStatusCode.OK, String.Empty);
    }

    // Recursive method to retrieve all referenced external schemas.
    // Note: circular references between schema files are not detected.
    static void RetrieveExternals(JSchemaPreloadedResolver jpr, IEnumerable<string> externalReferences, string baseURI, CloudFileDirectory schemasDir)
    {
        foreach (string er in externalReferences)
        {
            using (StreamReader sr = new StreamReader(schemasDir.GetFileReference(er).OpenRead()))
            {
                // Retrieve schema.
                JObject joSchema = JObject.Load(new JsonTextReader(sr));

                // Search for any external references.
                var externalRefs = GetExternalRefs(joSchema);
                if (externalRefs.Count() > 0) { RetrieveExternals(jpr, externalRefs, baseURI, schemasDir); }

                // Add schema to the preloaded resolver.
                jpr.Add(new Uri(baseURI + "/" + er), joSchema.ToString());
            }
        }
    }

    // Find all external schema references, i.e. $ref values with a file name before the '#'.
    static IEnumerable<string> GetExternalRefs(JObject schema)
    {
        return schema
            .Descendants()
            .Where(x => x is JObject && x["$ref"] != null && ((string)x["$ref"]).IndexOf("#") > 0)
            .Select(x => ((string)x["$ref"]).Remove(((string)x["$ref"]).IndexOf("#")))
            .Distinct()
            .ToList();
    }

    // Item returned by the "list" action; property names match the OpenAPI SchemaList definition.
    public class SchemaFile
    {
        public string id { get; set; }
        public string name { get; set; }
    }

Connector Icon