Disasters can be hardware failures, natural disasters, or software failures. The process of preparing for and recovering from a disaster is called disaster recovery (DR). This article discusses recommended practices to achieve business continuity and disaster recovery (BCDR) for Azure Data Factory and Azure Synapse Analytics pipelines.

BCDR strategies include availability zone redundancy, automated recovery provided by Azure disaster recovery, and user-managed recovery by using continuous integration and continuous delivery (CI/CD).

Architecture


Workflow

Data Factory and Azure Synapse pipelines achieve resiliency by using Azure regions and Azure availability zones.

- Each Azure region has a set of datacenters that are deployed within a latency-defined perimeter.

- Azure availability zones are physically separate locations within each Azure region that are tolerant to local failures.

- All Azure regions and availability zones are connected through a dedicated, regional low-latency network and by a high-performance network.

- All availability zone-enabled regions have at least three separate availability zones to ensure resiliency.

When a datacenter, part of a datacenter, or an availability zone in a region goes down, failover happens with zero downtime for zone-resilient Data Factory and Azure Synapse pipelines.

Scenario details

Data Factory and Azure Synapse pipelines store artifacts that include the following data:

Metadata

- Pipeline

- Datasets

- Linked services

- Integration runtime

- Triggers

Monitoring data

- Pipeline

- Triggers

- Activity runs

Disasters can strike in different ways, such as hardware failures, natural disasters, or software failures that result from human error or cyberattack. Depending on the types of failures, their geographical impact can be regional or global. When planning a disaster recovery strategy, consider both the nature of the disaster and its geographic impact.

BCDR in Azure works on a shared responsibility model. Many Azure services require customers to explicitly set up their DR strategy, while Azure provides the baseline infrastructure and platform services as needed.

You can use the following recommended practices to achieve BCDR for Data Factory and Azure Synapse pipelines under various failure scenarios. For implementation, see Deploy this scenario.

Automated recovery with Azure disaster recovery

With automated recovery provided by Azure Backup and disaster recovery, Data Factory or Azure Synapse pipelines automatically fail over to the paired region during a complete outage of an Azure region that has a paired region. This failover applies only if you configure it as described in Set up automated recovery. The exceptions are the Southeast Asia and Brazil regions, where data residency requirements require data to stay in those regions.

In DR failover, Data Factory recovers the production pipelines. If you need to validate your recovered pipelines, you can back up the Azure Resource Manager templates for your production pipelines in secure storage, and compare the recovered pipelines to the backups.
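The comparison between a backed-up template and a recovered one can be sketched as follows. This is a minimal illustration, not an official validation tool. It assumes both templates have already been exported and loaded as JSON, and that each entry in the template's `resources` list carries `type` and `name` fields. The function names `index_resources` and `diff_templates` are hypothetical.

```python
import json
from typing import Any


def index_resources(template: dict[str, Any]) -> dict[tuple[str, str], dict[str, Any]]:
    """Map (type, name) to the resource definition for each entry in "resources"."""
    return {(r["type"], r["name"]): r for r in template.get("resources", [])}


def diff_templates(
    backup: dict[str, Any], recovered: dict[str, Any]
) -> dict[str, list[tuple[str, str]]]:
    """Report resources that are missing, unexpected, or changed after recovery."""
    old, new = index_resources(backup), index_resources(recovered)
    return {
        "missing": sorted(old.keys() - new.keys()),
        "unexpected": sorted(new.keys() - old.keys()),
        "changed": sorted(k for k in old.keys() & new.keys() if old[k] != new[k]),
    }


if __name__ == "__main__":
    # Tiny in-memory templates stand in for exported files (illustrative data only).
    backup = json.loads(
        '{"resources": [{"type": "pipelines", "name": "copy_sales", "properties": {"v": 1}}]}'
    )
    recovered = json.loads('{"resources": []}')
    print(diff_templates(backup, recovered))
```

An empty result in all three lists suggests that the recovered artifacts match the backup. Any entry under `missing` or `changed` is a candidate for manual review.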

The Azure Global team conducts regular BCDR drills, and Azure Data Factory and Azure Synapse Analytics participate in these drills. The BCDR drill simulates a region failure and fails over Azure services to a paired region without any customer involvement. For more information about the BCDR drills, see Testing of services.

User-managed redundancy with CI/CD

To achieve BCDR in the event of an entire region failure, you need a data factory or an Azure Synapse workspace in the secondary region. In case of accidental Data Factory or Azure Synapse pipeline deletion, outages, or internal maintenance events, you can use Git and CI/CD to recover the pipelines manually.

Optionally, you can use an active/passive implementation. The primary region handles normal operations and remains active, while the secondary DR region requires pre-planned steps, depending on specific implementation, to be promoted to primary. In this case, all the necessary configurations for infrastructure are available in the secondary region, but they aren't provisioned.

Potential use cases

User-managed redundancy is useful in scenarios like:

- Accidental deletion of pipeline artifacts through human error.

- Extended outages or maintenance events that don't trigger BCDR because there's no disaster reported.

In these cases, you can quickly move your production workloads to another region to limit the impact.

Considerations

These considerations implement the pillars of the Azure Well-Architected Framework, which is a set of guiding tenets that can be used to improve the quality of a workload. For more information, see Microsoft Azure Well-Architected Framework.

Reliability

Reliability ensures your application can meet the commitments you make to your customers. For more information, see Overview of the reliability pillar.

Data Factory and Azure Synapse pipelines are mainstream Azure services that support availability zones, and they're designed to provide the right level of resiliency and flexibility along with ultra-low latency.

The user-managed recovery approach allows you to continue operating if there are any maintenance events, outages, or human errors in the primary region. By using CI/CD, the data factory and Azure Synapse pipelines can integrate with a Git repository and deploy to a secondary region for immediate recovery.

Cost optimization

Cost optimization is about looking at ways to reduce unnecessary expenses and improve operational efficiencies. For more information, see Overview of the cost optimization pillar.

User-managed recovery integrates Data Factory with Git by using CI/CD, and optionally uses a secondary DR region that has all the necessary infrastructure configurations as a backup. This scenario might incur added costs. To estimate costs, use the Azure pricing calculator.

For examples of Data Factory and Azure Synapse Analytics pricing, see:

Operational excellence

Operational excellence covers the operations processes that deploy an application and keep it running in production. For more information, see Overview of the operational excellence pillar.

By using the user-managed CI/CD recovery approach, you can integrate with Azure Repos or GitHub. For more information about best CI/CD practices, see Best practices for CI/CD.

Deploy this scenario

Take the following actions to set up automated or user-managed DR for Data Factory and Azure Synapse pipelines.

Set up automated recovery

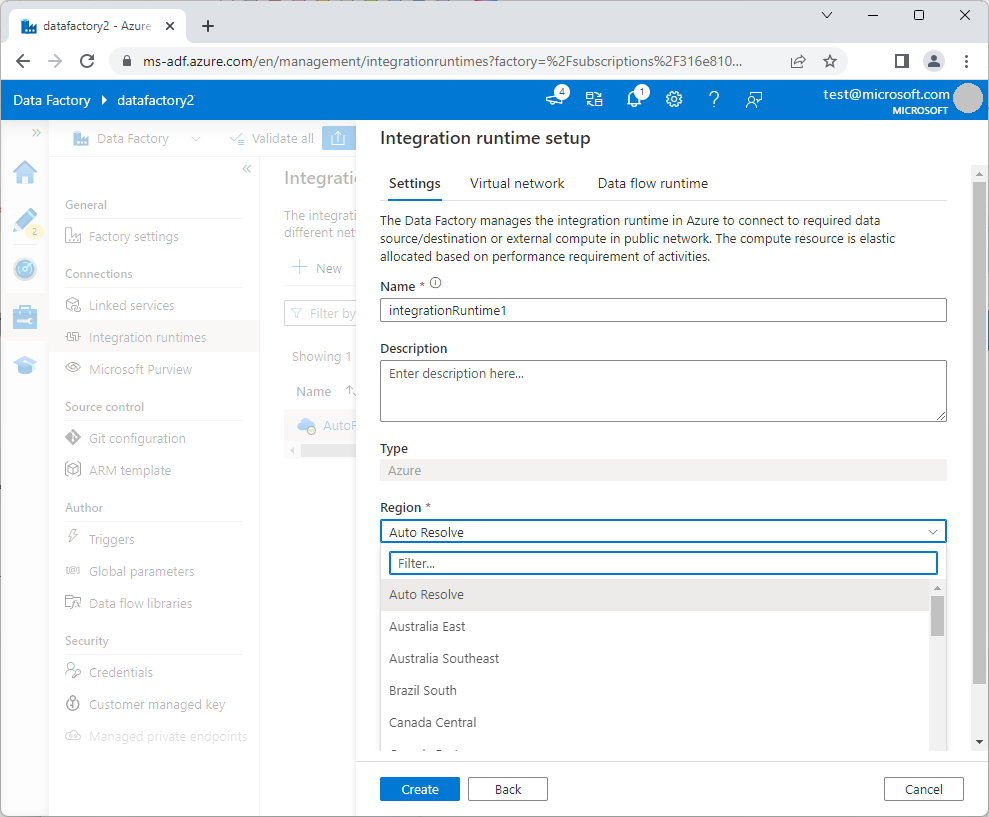

In Data Factory, you can set the Azure integration runtime (IR) region for your activity execution or dispatch in the Integration runtime setup. To enable automatic failover in the event of a complete regional outage, set the Region to Auto Resolve.

When you select Auto Resolve as the IR region, the IR fails over automatically to the paired region. If you set the IR to a specific region instead, you can create a secondary data factory in another region, and use CI/CD to provision your data factory from the Git repository.

For managed virtual networks, Data Factory also automatically switches over to the managed IR.

Azure-managed automatic failover doesn't apply to self-hosted integration runtime (SHIR), because the infrastructure is customer-managed. For guidance on setting up multiple nodes for higher availability with SHIR, see Create and configure a self-hosted integration runtime.

To configure BCDR for Azure-SSIS IR, see Configure Azure-SSIS integration runtime for business continuity and disaster recovery (BCDR).

Linked services aren't fully enabled after failover, because their private endpoints are still pending in the new network of the recovered region. You need to configure private endpoints in the recovered region. You can automate private endpoint creation by using the approval API.

Set up user-managed recovery through CI/CD

You can use Git and CI/CD to recover pipelines manually in case of Data Factory or Azure Synapse pipeline deletion or outage.

To use Data Factory pipeline CI/CD, see Continuous integration and delivery in Azure Data Factory and Source control in Azure Data Factory.

To use Azure Synapse pipeline CI/CD, see Continuous integration and delivery for an Azure Synapse Analytics workspace. Make sure to initialize the Azure Synapse workspace first. For more information, see Source control in Synapse Studio.

When you deploy user-managed redundancy by using CI/CD, take the following actions:

Disable triggers

Disable all triggers in the original primary data factory immediately after it comes back online. You can disable the triggers manually, or implement automation that periodically checks the availability of the original primary and disables the triggers when it recovers.

To use Azure PowerShell to turn Data Factory triggers off or on, see Sample pre- and post-deployment script and CI/CD improvements related to pipeline triggers deployment.
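The automation option can be sketched as a small polling loop. This is an illustrative outline only. The three callables (`primary_is_available`, `list_started_triggers`, and `stop_trigger`) are hypothetical placeholders for whatever mechanism you use to reach the factory, such as a wrapper around the Azure PowerShell script mentioned above. They aren't part of any Azure SDK.

```python
import time
from typing import Callable, Iterable


def disable_triggers_on_recovery(
    primary_is_available: Callable[[], bool],
    list_started_triggers: Callable[[], Iterable[str]],
    stop_trigger: Callable[[str], None],
    poll_seconds: float = 60.0,
    max_polls: int = 60,
    sleep: Callable[[float], None] = time.sleep,
) -> list[str]:
    """Poll until the original primary is reachable, then stop its started triggers.

    Returns the names of the triggers that were stopped. Returns an empty list
    if the primary didn't come back within max_polls checks.
    """
    for attempt in range(max_polls):
        if primary_is_available():
            stopped = []
            for name in list_started_triggers():
                stop_trigger(name)  # hypothetical hook: wrap your own stop call here
                stopped.append(name)
            return stopped
        if attempt < max_polls - 1:
            sleep(poll_seconds)  # wait before the next availability check
    return []
```

Passing the probe and the stop action as parameters keeps the loop independent of any particular client library, and lets you test it without touching a real factory.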

Handle duplicate writes

Most extract, transform, load (ETL) pipelines are designed to handle duplicate writes, because backfill and restatement require them. Data sinks that support transparent failover can handle duplicate writes with records merge or by deleting and inserting all records in the specific time range.
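As a rough illustration of the delete-and-insert pattern, the following sketch reloads one time window in a single transaction, so running the same load twice leaves the sink in the same state. It uses an in-memory SQLite database as a stand-in for a real sink. The `sales` table, its columns, and the `reload_window` function are assumptions made for this example.

```python
import sqlite3

# Hypothetical sink table; timestamps are ISO 8601 strings, which sort correctly as text.
SCHEMA = "CREATE TABLE sales (event_time TEXT, order_id TEXT, amount REAL)"


def reload_window(conn, window_start, window_end, rows):
    """Replace all rows in [window_start, window_end) inside one transaction."""
    with conn:  # commits on success, rolls back if any statement fails
        conn.execute(
            "DELETE FROM sales WHERE event_time >= ? AND event_time < ?",
            (window_start, window_end),
        )
        conn.executemany(
            "INSERT INTO sales (event_time, order_id, amount) VALUES (?, ?, ?)",
            rows,
        )


if __name__ == "__main__":
    conn = sqlite3.connect(":memory:")
    conn.execute(SCHEMA)
    batch = [("2024-01-01T10:00:00", "A1", 10.0), ("2024-01-01T11:00:00", "A2", 5.5)]
    # Simulate a duplicate write: the same window is loaded twice.
    reload_window(conn, "2024-01-01T00:00:00", "2024-01-02T00:00:00", batch)
    reload_window(conn, "2024-01-01T00:00:00", "2024-01-02T00:00:00", batch)
    print(conn.execute("SELECT COUNT(*) FROM sales").fetchone()[0])
```

Because the delete and the insert share one transaction, a failure partway through leaves the previous contents of the window in place instead of a partial load.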

For data sinks that change endpoints after failover, primary and secondary storage might have duplicate or partial data. You need to merge the data manually.

Check the witness and control the pipeline flow (optional)

In general, you need to design your pipelines to include activities, like fail and lookup activities, for restarting failed pipelines from the point of interest.

1. Add a global parameter in your data factory to indicate the region, for example region='EastUS' in the primary and region='CentralUS' in the secondary data factory.

2. Create a witness in a third region. The witness can be a REST call or any type of storage. The witness returns the current primary region, for example 'EastUS', by default.

3. When a disaster happens, manually update the witness to return the new primary region, for example 'CentralUS'.

4. Add an activity in your pipeline to look up the witness and compare the current primary value to the global parameter.

- If the parameters match, this pipeline is running on the primary region. Proceed with the real work.

- If the parameters don't match, this pipeline is running on the secondary region. Just return the result.

Note

This approach introduces a dependency on the witness lookup into your pipeline. Failure to read the witness halts all pipeline runs.
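The decision that the lookup step makes can be sketched in a few lines. This isn't Data Factory pipeline code. It only models the comparison logic, under the assumption that the witness is read through a caller-supplied function. The names `should_run_real_work`, `read_witness`, and `WitnessUnavailableError` are hypothetical, and the case-insensitive comparison is a design choice of this sketch rather than documented behavior.

```python
from typing import Callable


class WitnessUnavailableError(RuntimeError):
    """Raised when the witness can't be read, which halts the run."""


def should_run_real_work(local_region: str, read_witness: Callable[[], str]) -> bool:
    """Return True when this factory's region matches the witness's current primary."""
    try:
        current_primary = read_witness()
    except Exception as exc:
        # Without the witness there's no safe way to tell primary from secondary.
        raise WitnessUnavailableError("could not read the witness") from exc
    return current_primary.strip().lower() == local_region.strip().lower()


if __name__ == "__main__":
    # Before the disaster, the witness reports 'EastUS' as the primary.
    print(should_run_real_work("EastUS", lambda: "EastUS"))
    print(should_run_real_work("CentralUS", lambda: "EastUS"))
    # After failover, the witness is manually updated to 'CentralUS'.
    print(should_run_real_work("CentralUS", lambda: "CentralUS"))
```

Raising an error when the witness is unreachable mirrors the note above: a pipeline that can't determine the current primary stops instead of guessing.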

Contributors

This article is maintained by Microsoft. It was originally written by the following contributors.

Principal authors:

Krishnakumar Rukmangathan | Senior Program Manager - Azure Data Factory team

Sunil Sabat | Principal Program Manager - Azure Data Factory team

Other contributors:

Mario Zimmermann | Principal Software Engineering Manager - Azure Data Factory team

Wee Hyong Tok | Principal Director of PM - Azure Data Factory team


Next steps

- Business continuity management in Azure

- Resiliency in Azure

- Azure resiliency terminology

- Regions and availability zones

- Cross-region replication in Azure

- Azure regions decision guide

- Azure services that support availability zones

- Shared responsibility in the cloud

- Azure Data Factory data redundancy

- Integration runtime in Azure Data Factory

- Pipelines and activities in Azure Data Factory and Azure Synapse Analytics

- Data integration in Azure Synapse Analytics versus Azure Data Factory