Azure AI Video Indexer object detection

Warning

Over the past year, Azure AI Video Indexer (VI) announced the removal of its dependency on Azure Media Services (AMS) due to the AMS retirement. Feature adjustments and changes were announced, and a migration guide was provided.

The deadline to complete migration was June 30, 2024. VI has extended the update/migrate deadline so you can update your VI account and opt in to the AMS VI asset migration through August 31, 2024.

However, after June 30, if you haven't updated your VI account, you won't be able to index new videos or play any videos that haven't been migrated. If you update your account after June 30, you can resume indexing immediately, but you won't be able to play videos indexed before the account update until they're migrated through the AMS VI migration.

Azure AI Video Indexer can detect objects in videos. The insight is part of standard and advanced video presets. Object detection is included in the insights that are the result of an Upload Video request.

Transparency note

Before using object detection, review the transparency note overview.

JSON keys and definitions

| Key | Definition |
|---|---|
| id | Incremental ID number assigned to each detected object in the media file |
| type | Object type, for example, Car |
| thumbnailId | GUID representing a single detection of the object |
| displayName | Name displayed in the VI portal |
| wikiDataId | The object's unique identifier in Wikidata |
| instances | List of all instances in which the object was tracked |
| confidence | A score between 0 and 1 indicating the detection confidence |
| adjustedStart | Adjusted start time when using the editor |
| adjustedEnd | Adjusted end time when using the editor |
| start | The time at which the object appears in the frame |
| end | The time at which the object no longer appears in the frame |
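If you consume the insights from code, the keys in the table above can be modeled as typed records. The following Python sketch is an illustration only: the class names, the snake_case field names, and the `to_seconds` helper are hypothetical, and it assumes timestamps use the H:MM:SS(.ff) form shown in the JSON example in this article.

```python
from dataclasses import dataclass, field
from typing import Any


def to_seconds(timestamp: str) -> float:
    """Convert an "H:MM:SS" or "H:MM:SS.ff" timestamp to seconds (assumed format)."""
    hours, minutes, seconds = timestamp.split(":")
    return int(hours) * 3600 + int(minutes) * 60 + float(seconds)


@dataclass
class Instance:
    """One tracked appearance of an object (hypothetical model)."""
    confidence: float
    start: float           # seconds
    end: float             # seconds
    adjusted_start: float  # seconds, editor-adjusted
    adjusted_end: float    # seconds, editor-adjusted


@dataclass
class DetectedObject:
    """One entry of detectedObjects (hypothetical model)."""
    id: int
    type: str
    thumbnail_id: str
    display_name: str
    wiki_data_id: str
    instances: list[Instance] = field(default_factory=list)


def parse_detected_object(raw: dict[str, Any]) -> DetectedObject:
    """Map one raw JSON entry onto the typed model."""
    return DetectedObject(
        id=raw["id"],
        type=raw["type"],
        thumbnail_id=raw.get("thumbnailId", ""),
        display_name=raw.get("displayName", raw["type"]),
        wiki_data_id=raw.get("wikiDataId", ""),
        instances=[
            Instance(
                confidence=inst["confidence"],
                start=to_seconds(inst["start"]),
                end=to_seconds(inst["end"]),
                # Fall back to the raw times if no adjusted times are present.
                adjusted_start=to_seconds(inst.get("adjustedStart", inst["start"])),
                adjusted_end=to_seconds(inst.get("adjustedEnd", inst["end"])),
            )
            for inst in raw.get("instances", [])
        ],
    )
```

Converting timestamps to seconds up front makes later comparisons (durations, sorting) plain arithmetic.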

JSON response

Detected and tracked objects

Detected and tracked objects appear under "detectedObjects" in the downloaded insights.json file. Every time a unique object is detected, it's given an ID. That object is also tracked, meaning that the model watches for the detected object to return to the frame. If it does, another instance, with its own start and end times, is added to that object's instances.

In this example, the first car was detected and given an ID of 1 since it was also the first object detected. Then, a different car was detected and that car was given the ID of 23 since it was the 23rd object detected. Later, the first car appeared again and another instance was added to the JSON. Here's the resulting JSON:

```json
"detectedObjects": [
  {
    "id": 1,
    "type": "Car",
    "thumbnailId": "1c0b9fbb-6e05-42e3-96c1-abe2cd48t33",
    "displayName": "car",
    "wikiDataId": "Q1420",
    "instances": [
      {
        "confidence": 0.468,
        "adjustedStart": "0:00:00",
        "adjustedEnd": "0:00:02.44",
        "start": "0:00:00",
        "end": "0:00:02.44"
      },
      {
        "confidence": 0.53,
        "adjustedStart": "0:00:03",
        "adjustedEnd": "0:00:03.55",
        "start": "0:00:03",
        "end": "0:00:03.55"
      }
    ]
  },
  {
    "id": 23,
    "type": "Car",
    "thumbnailId": "1c0b9fbb-6e05-42e3-96c1-abe2cd48t34",
    "displayName": "car",
    "wikiDataId": "Q1420",
    "instances": [
      {
        "confidence": 0.427,
        "adjustedStart": "0:00:00",
        "adjustedEnd": "0:00:14.24",
        "start": "0:00:00",
        "end": "0:00:14.24"
      }
    ]
  }
]
```
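Because an object that returns to the frame gets a new instance rather than a new ID, per-object statistics come from aggregating each object's instances list. The following Python sketch shows one way to do that; the function name and the output keys (instanceCount, totalSeconds, maxConfidence) are hypothetical, and it assumes the H:MM:SS(.ff) timestamp format used in the example.

```python
from typing import Any


def to_seconds(timestamp: str) -> float:
    """Convert an "H:MM:SS" or "H:MM:SS.ff" timestamp to seconds (assumed format)."""
    hours, minutes, seconds = timestamp.split(":")
    return int(hours) * 3600 + int(minutes) * 60 + float(seconds)


def summarize_objects(detected_objects: list[dict[str, Any]]) -> list[dict[str, Any]]:
    """Aggregate the instances of each tracked object ID."""
    summary = []
    for obj in detected_objects:
        instances = obj.get("instances", [])
        # Each instance is one continuous appearance; sum their lengths.
        on_screen = sum(to_seconds(i["end"]) - to_seconds(i["start"]) for i in instances)
        summary.append({
            "id": obj["id"],
            "type": obj["type"],
            "instanceCount": len(instances),
            "totalSeconds": round(on_screen, 2),
            "maxConfidence": max((i["confidence"] for i in instances), default=0.0),
        })
    return summary
```

Passing the detectedObjects list from the example would report two instances for ID 1 and one instance for ID 23.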

Supported objects

- airplane

- apple

- backpack

- banana

- baseball glove

- bed

- bench

- bicycle

- boat

- book

- bottle

- bowl

- broccoli

- bus

- cake

- car

- carrot

- cell phone

- chair

- clock

- computer mouse

- couch

- cup

- dining table

- donut

- fire hydrant

- fork

- frisbee

- hair dryer

- handbag

- hot dog

- keyboard

- kite

- knife

- laptop

- microwave

- motorcycle

- necktie

- orange

- oven

- parking meter

- pizza

- potted plant

- sandwich

- scissors

- sink

- skateboard

- skis

- snowboard

- spoon

- sports ball

- stop sign

- suitcase

- surfboard

- teddy bear

- tennis racket

- toaster

- toilet

- toothbrush

- traffic light

- train

- umbrella

- vase

- wine glass

Limitations

- Standard and advanced processing support up to 20 detections per frame and up to 35 tracks per class.

- Object size shouldn't be greater than 90 percent of the frame. Very large objects that consistently span a large portion of the frame might not be recognized.

- Small or blurry objects can be hard to detect. They can either be missed or misclassified (for example, a wine glass and a cup can be confused).

- Objects that are transient and appear in very few frames might not be recognized.

- Other factors that might affect the accuracy of object detection include low light conditions, camera motion, and occlusions.

- Azure AI Video Indexer supports only real-world objects. There's no support for animation or CGI. Computer-generated graphics (such as news stickers) might produce unexpected results.

- See specific class notes.
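Some of these limitations (low-confidence detections, objects that appear only briefly) can be screened for after indexing. The following Python sketch flags instances for manual review. The function name is hypothetical, and the min_confidence and min_duration defaults are arbitrary illustrative values, not thresholds documented by Azure AI Video Indexer; tune them for your content.

```python
from typing import Any


def to_seconds(timestamp: str) -> float:
    """Convert an "H:MM:SS" or "H:MM:SS.ff" timestamp to seconds (assumed format)."""
    hours, minutes, seconds = timestamp.split(":")
    return int(hours) * 3600 + int(minutes) * 60 + float(seconds)


def instances_to_review(
    detected_objects: list[dict[str, Any]],
    min_confidence: float = 0.5,  # illustrative threshold, not a VI default
    min_duration: float = 0.5,    # seconds; illustrative, not a VI default
) -> list[tuple[int, str, str, str]]:
    """Return (id, type, start, reasons) for instances that may need a manual check."""
    flagged = []
    for obj in detected_objects:
        for inst in obj.get("instances", []):
            reasons = []
            if inst["confidence"] < min_confidence:
                reasons.append("low confidence")
            if to_seconds(inst["end"]) - to_seconds(inst["start"]) < min_duration:
                reasons.append("short duration")
            if reasons:
                flagged.append((obj["id"], obj["type"], inst["start"], ", ".join(reasons)))
    return flagged
```

A flagged instance isn't necessarily wrong; the check only narrows down which detections are worth a second look.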

Specific class notes

Bound written materials

Binders, brochures, and other written materials tend to be detected as "book."

Try object detection

You can try out object detection with the web portal or with the API.

Once a video is uploaded, you can view the insights. On the Insights tab, you can view the list of detected objects and their main instances.

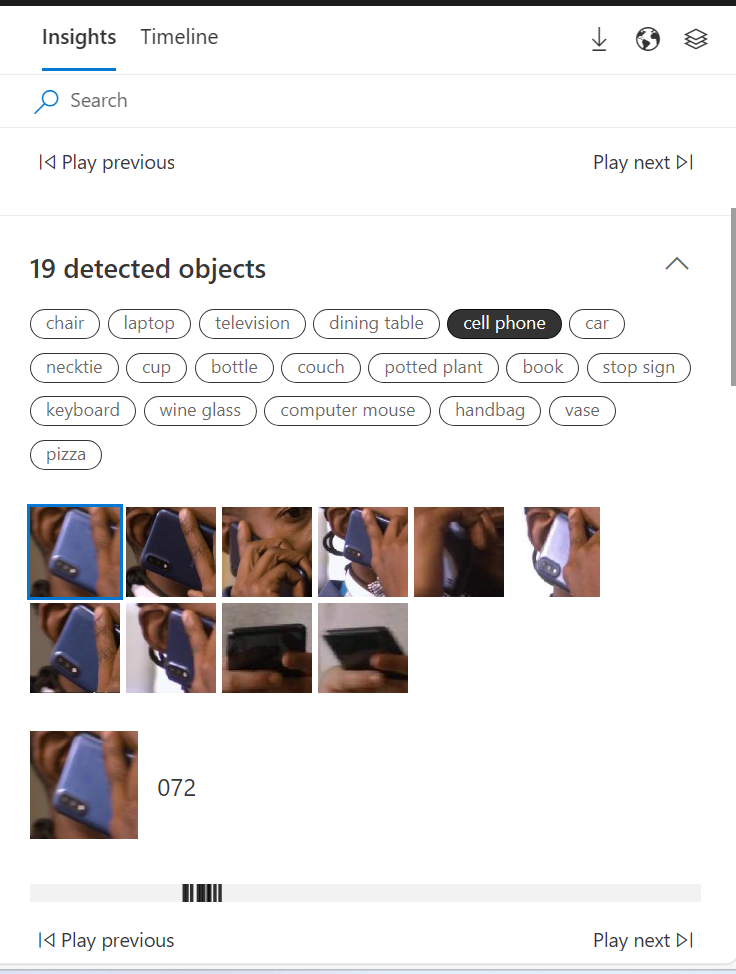

Insights

Select the Insights tab. The objects are in descending order of the number of appearances in the video.
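To produce a similar ranking from the downloaded JSON, you can count appearances per object name. The following Python sketch assumes that one appearance corresponds to one entry in instances and groups tracked IDs by displayName; the portal's exact counting rule isn't stated in this article, so treat the result as an approximation. The function name is hypothetical.

```python
from collections import Counter
from typing import Any


def appearances_by_name(detected_objects: list[dict[str, Any]]) -> list[tuple[str, int]]:
    """Count instances per displayName, most frequent first."""
    counts = Counter()
    for obj in detected_objects:
        # Several tracked IDs can share a name (for example, two different cars).
        counts[obj.get("displayName", obj["type"])] += len(obj.get("instances", []))
    # most_common() sorts by count, keeping first-seen order for ties.
    return counts.most_common()
```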

Timeline

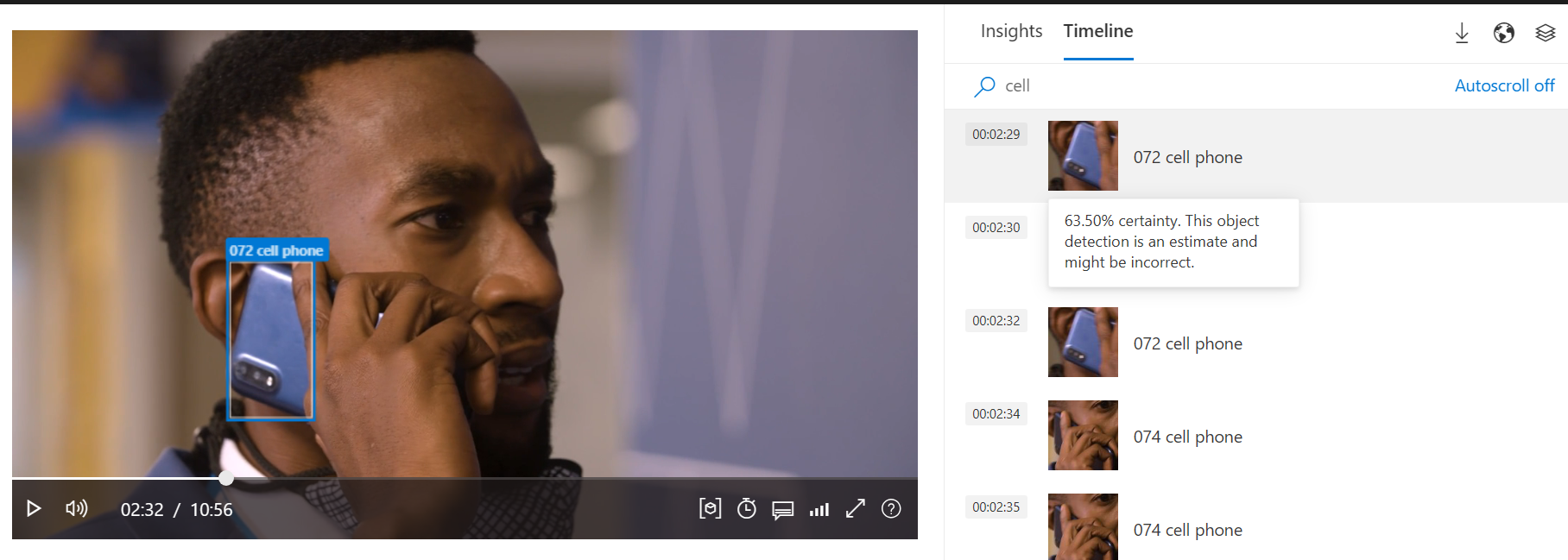

Select the Timeline tab.

On the Timeline tab, all detected objects are displayed in order of appearance. Hovering over a specific detection shows its confidence as a percentage.
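A rough text equivalent of the Timeline view can be built from the downloaded JSON by sorting every instance by its start time. The following Python sketch assumes that the percentage shown on hover corresponds to the instance's confidence field and that timestamps use the H:MM:SS(.ff) format; the function name and output layout are hypothetical.

```python
from typing import Any


def to_seconds(timestamp: str) -> float:
    """Convert an "H:MM:SS" or "H:MM:SS.ff" timestamp to seconds (assumed format)."""
    hours, minutes, seconds = timestamp.split(":")
    return int(hours) * 3600 + int(minutes) * 60 + float(seconds)


def timeline(detected_objects: list[dict[str, Any]]) -> list[str]:
    """List every instance in order of appearance, with confidence as a percentage."""
    rows = []
    for obj in detected_objects:
        name = obj.get("displayName", obj["type"])
        for inst in obj.get("instances", []):
            rows.append((to_seconds(inst["start"]), obj["id"], name, inst))
    # Order by start time; break ties by object ID.
    rows.sort(key=lambda row: (row[0], row[1]))
    return [
        f'{inst["start"]}-{inst["end"]} {name} #{obj_id}: {inst["confidence"]:.0%}'
        for _, obj_id, name, inst in rows
    ]
```

Sorting on parsed seconds rather than on the raw strings avoids ordering problems when hour or minute fields differ in width.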

Player

The player automatically marks each detected object with a bounding box. The object selected in the insights pane is highlighted in blue, and its type and serial number are displayed.

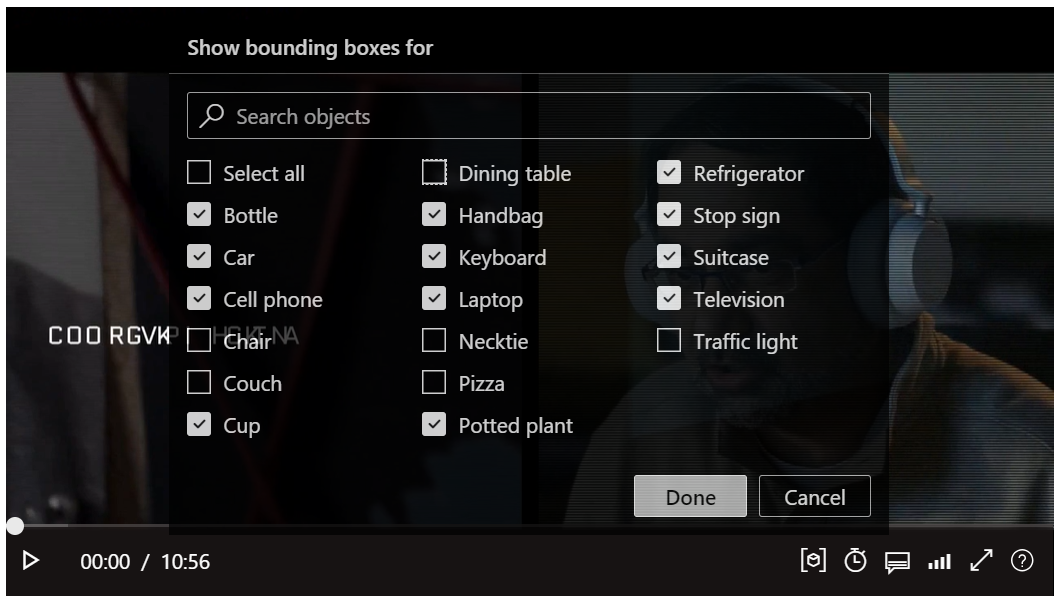

Filter the bounding boxes around objects by selecting the bounding box icon on the player.

Then, select or clear the checkboxes of the detected objects.

Download the insights by selecting Download and then Insights (JSON).
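After downloading the insights, you can load the file and extract the detected objects. This article doesn't state where detectedObjects is nested inside the file, so the following Python sketch searches the parsed JSON recursively instead of hard-coding a path. If the file contains detectedObjects in more than one place, the lists are concatenated, so check the result for duplicate IDs. The function names are hypothetical.

```python
import json
from pathlib import Path
from typing import Any


def find_detected_objects(node: Any) -> list[dict[str, Any]]:
    """Collect the entries of every detectedObjects list found anywhere in the parsed JSON."""
    found = []
    if isinstance(node, dict):
        for key, value in node.items():
            if key == "detectedObjects" and isinstance(value, list):
                found.extend(value)
            else:
                found.extend(find_detected_objects(value))
    elif isinstance(node, list):
        for item in node:
            found.extend(find_detected_objects(item))
    return found


def load_detected_objects(path: str) -> list[dict[str, Any]]:
    """Read a downloaded insights file and return its detected objects."""
    with Path(path).open(encoding="utf-8") as f:
        return find_detected_objects(json.load(f))
```

For example, `load_detected_objects("insights.json")` returns a list that the other snippets in this article can consume directly.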
