How to use Hive Catalog with Apache Flink® on HDInsight on AKS

Note

We will retire Azure HDInsight on AKS on January 31, 2025. Before January 31, 2025, you will need to migrate your workloads to Microsoft Fabric or an equivalent Azure product to avoid abrupt termination of your workloads. The remaining clusters on your subscription will be stopped and removed from the host.

Only basic support will be available until the retirement date.

Important

This feature is currently in preview. The Supplemental Terms of Use for Microsoft Azure Previews include more legal terms that apply to Azure features that are in beta, in preview, or otherwise not yet released into general availability. For information about this specific preview, see Azure HDInsight on AKS preview information. For questions or feature suggestions, please submit a request on AskHDInsight with the details and follow us for more updates on Azure HDInsight Community.

This example uses Hive’s Metastore as a persistent catalog with Apache Flink’s Hive Catalog. We use this functionality to store Kafka table and MySQL table metadata in Flink across sessions. Flink uses the Kafka table registered in Hive Catalog as a source, performs some lookups, and sinks the result to a MySQL database.

Prerequisites

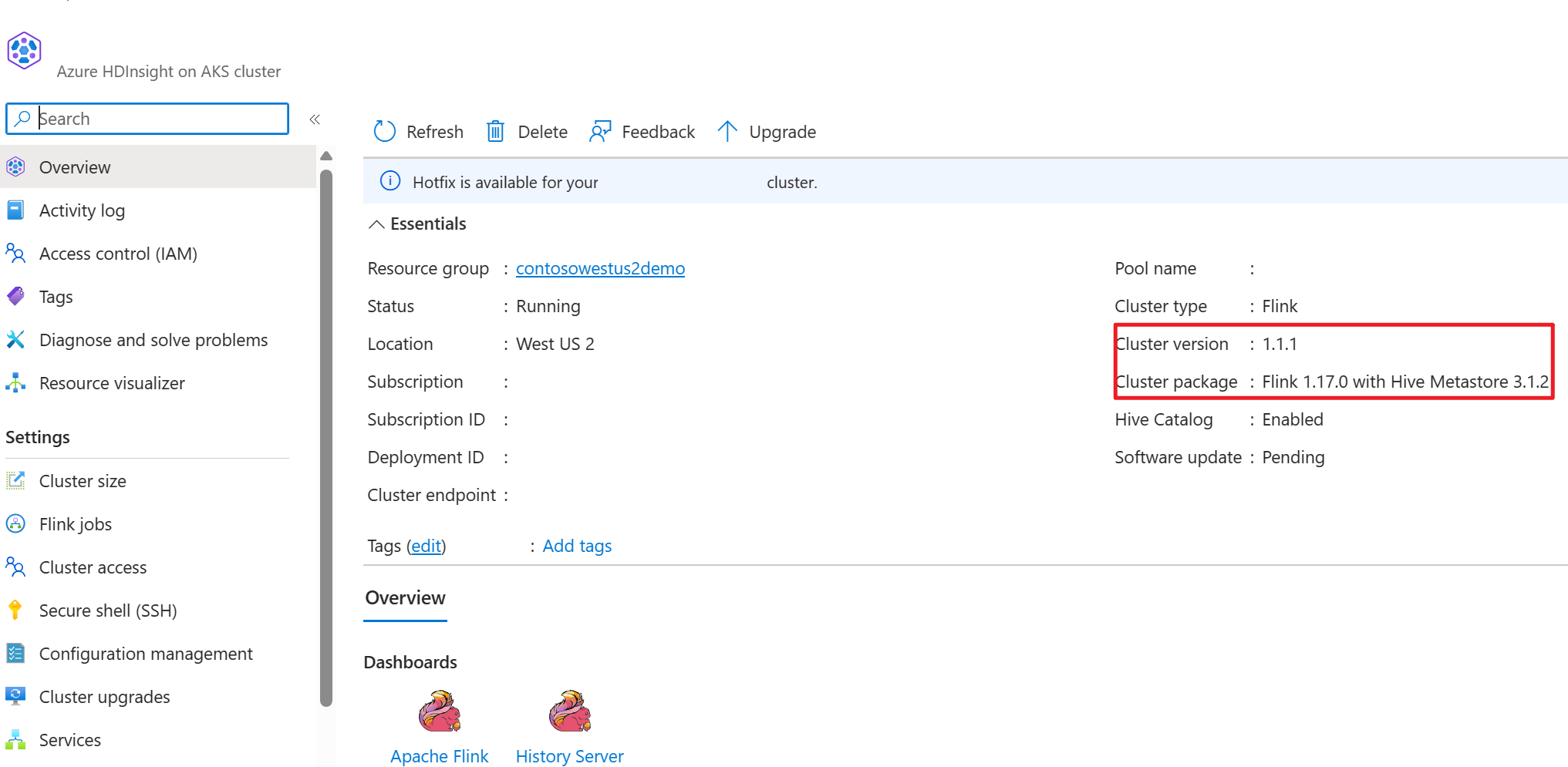

- Apache Flink Cluster on HDInsight on AKS with Hive Metastore 3.1.2

- Apache Kafka cluster on HDInsight

- Ensure the network settings are complete as described in Using Kafka, so that the HDInsight on AKS and HDInsight clusters are in the same VNet

- MySQL 8.0.33

Apache Hive on Apache Flink

Flink offers a two-fold integration with Hive.

- The first is to use Hive Metastore (HMS) as a persistent catalog with Flink’s HiveCatalog for storing Flink-specific metadata across sessions.

- For example, users can store their Kafka or ElasticSearch tables in Hive Metastore by using HiveCatalog, and reuse them later on in SQL queries.

- The second is to offer Flink as an alternative engine for reading and writing Hive tables.

- The HiveCatalog is designed to be “out of the box” compatible with existing Hive installations. You don't need to modify your existing Hive Metastore or change the data placement or partitioning of your tables.

For more information, see Apache Hive.

Environment preparation

Create an Apache Flink cluster with HMS

Create an Apache Flink cluster with HMS in the Azure portal. For detailed instructions, refer to Flink cluster creation.

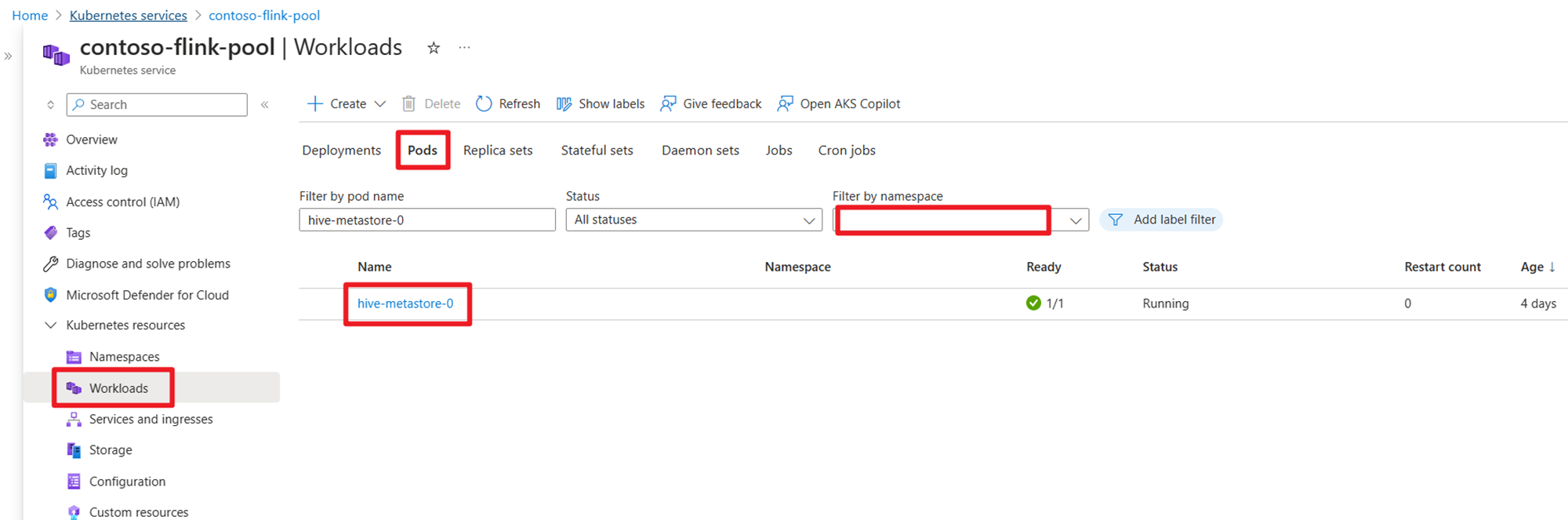

After the cluster is created, check whether HMS is running on the AKS side.

Prepare user order transaction data Kafka topic on HDInsight

Download the Kafka client archive using the following command:

wget https://archive.apache.org/dist/kafka/3.2.0/kafka_2.12-3.2.0.tgz

Extract the archive with:

tar -xvf kafka_2.12-3.2.0.tgz

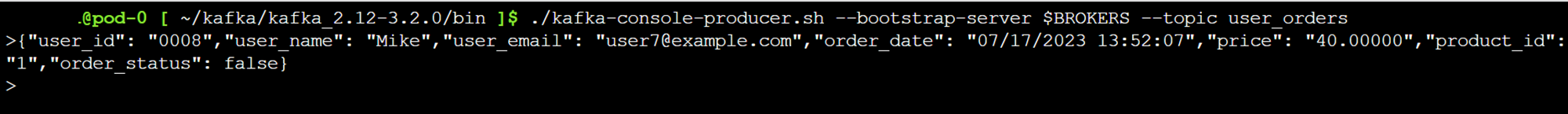

Produce the messages to the Kafka topic.
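As a minimal sketch of what such messages can look like, the helper below prints one order per line as JSON. The function name `order_json` is hypothetical (it is not part of the original walkthrough), and the key names are taken from the sample messages used later in this article. It assumes the values contain no double quotes or backslashes, because it does no JSON escaping.

```shell
#!/bin/sh
# Hypothetical helper: print one order as a single-line JSON document.
# Arguments: user_id user_name user_email order_date price product_id order_status
# Assumption: values contain no double quotes or backslashes (no escaping is done).
order_json() {
  printf '{"user_id": "%s","user_name": "%s","user_email": "%s","order_date": "%s","price": "%s","product_id": "%s","order_status": %s}\n' \
    "$1" "$2" "$3" "$4" "$5" "$6" "$7"
}

# Print two sample messages, one per line.
order_json 0009 Zark user9@example.com "07/17/2023 21:52:07" 80.00000 103 true
order_json 0010 Alex user10@example.com "07/17/2023 21:52:07" 70.00000 104 true
```

The output can be piped into kafka-console-producer.sh, which reads one message per line from standard input.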

Other commands:

Note

Replace the bootstrap-server value with your own Kafka broker host name or IP address.

# Delete the topic

./kafka-topics.sh --delete --topic user_orders --bootstrap-server wn0-contsk:9092

# Create the topic

./kafka-topics.sh --create --replication-factor 2 --partitions 3 --topic user_orders --bootstrap-server wn0-contsk:9092

# Produce messages to the topic

./kafka-console-producer.sh --bootstrap-server wn0-contsk:9092 --topic user_orders

# Consume messages from the topic

./kafka-console-consumer.sh --bootstrap-server wn0-contsk:9092 --topic user_orders --from-beginning
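The commands above hard-code a single broker, wn0-contsk:9092. One way to keep the broker list in one place is sketched below. The helper name `bootstrap_servers` and the second host name `wn1-contsk` are assumptions made for illustration; use the broker host names of your own cluster.

```shell
#!/bin/sh
# Hypothetical helper: join broker host names into a bootstrap-server list.
# Usage: bootstrap_servers PORT HOST [HOST...]
bootstrap_servers() {
  port="$1"
  shift
  list=""
  for host in "$@"; do
    # Add a comma before every host except the first one.
    list="${list:+$list,}${host}:${port}"
  done
  printf '%s\n' "$list"
}

# wn1-contsk is an assumed second broker, shown only as an example.
BOOTSTRAP="$(bootstrap_servers 9092 wn0-contsk wn1-contsk)"

# Print the command instead of running it, so the sketch has no side effects.
echo "./kafka-topics.sh --describe --topic user_orders --bootstrap-server $BOOTSTRAP"
```

Listing more than one broker lets the client connect even when the first broker is unavailable.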

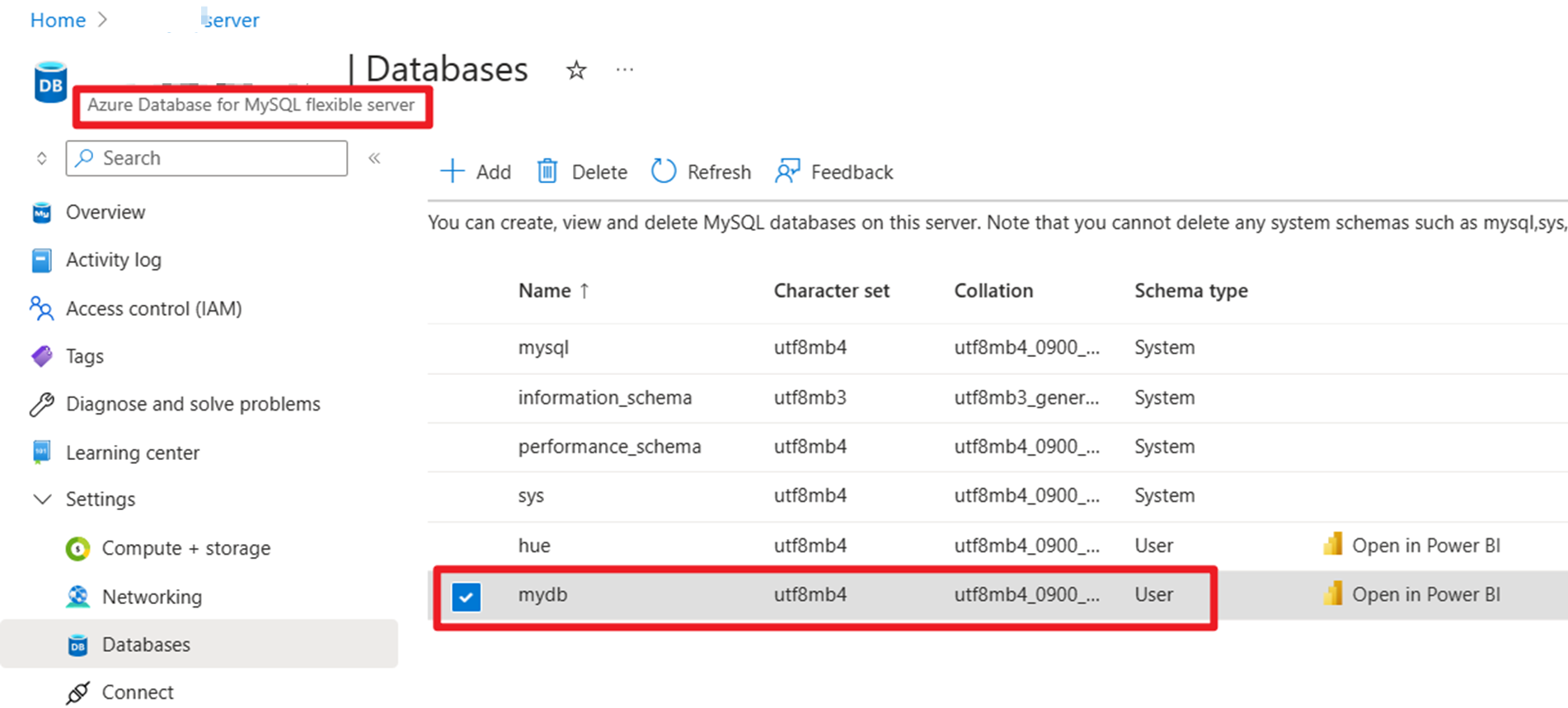

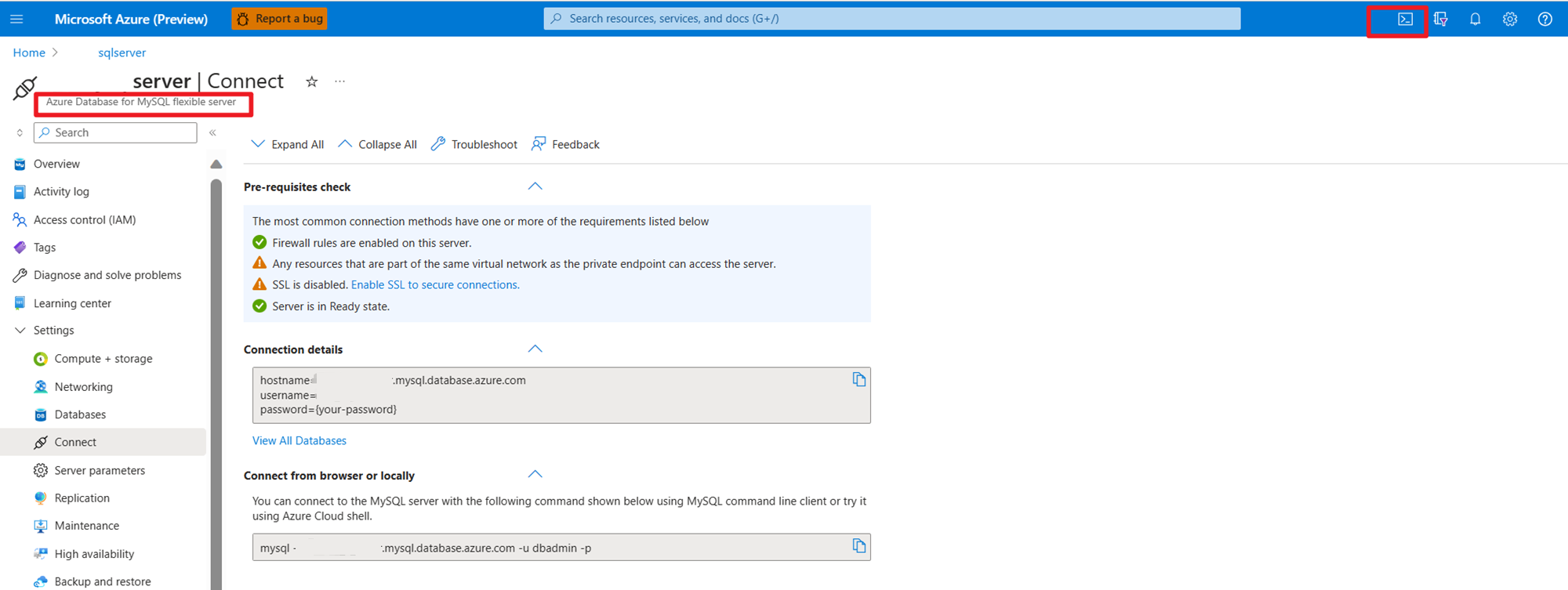

Prepare user order master data on MySQL on Azure

Testing DB:

Prepare the order table:

mysql> use mydb

Reading table information for completion of table and column names

You can turn off this feature to get a quicker startup with -A

mysql> CREATE TABLE orders (

order_id INTEGER NOT NULL AUTO_INCREMENT PRIMARY KEY,

order_date DATETIME NOT NULL,

customer_id INTEGER NOT NULL,

customer_name VARCHAR(255) NOT NULL,

price DECIMAL(10, 5) NOT NULL,

product_id INTEGER NOT NULL,

order_status BOOLEAN NOT NULL

) AUTO_INCREMENT = 10001;

mysql> INSERT INTO orders

VALUES (default, '2023-07-16 10:08:22','0001', 'Jark', 50.00, 102, false),

(default, '2023-07-16 10:11:09','0002', 'Sally', 15.00, 105, false),

(default, '2023-07-16 10:11:09','0003', 'Sally', 25.00, 105, false),

(default, '2023-07-16 10:11:09','0004', 'Sally', 45.00, 105, false),

(default, '2023-07-16 10:11:09','0005', 'Sally', 35.00, 105, false),

(default, '2023-07-16 12:00:30','0006', 'Edward', 90.00, 106, false);

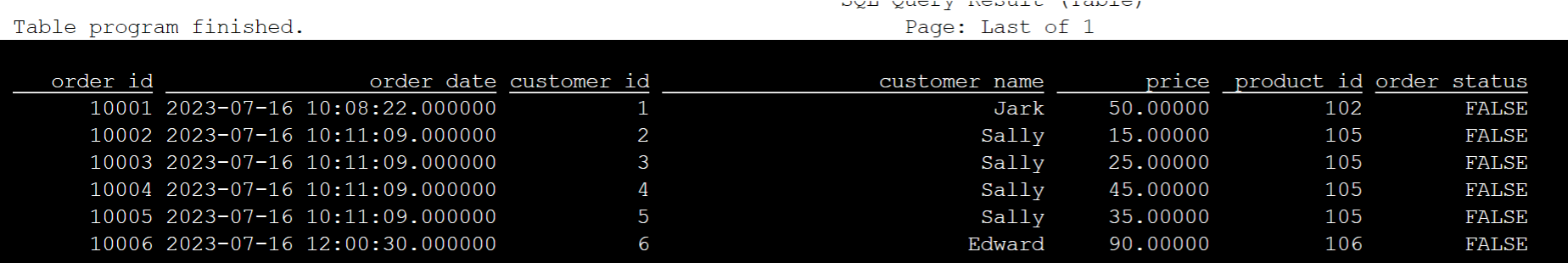

mysql> select * from orders;

+----------+---------------------+-------------+---------------+----------+------------+--------------+

| order_id | order_date          | customer_id | customer_name | price    | product_id | order_status |

+----------+---------------------+-------------+---------------+----------+------------+--------------+

|    10001 | 2023-07-16 10:08:22 |           1 | Jark          | 50.00000 |        102 |            0 |

|    10002 | 2023-07-16 10:11:09 |           2 | Sally         | 15.00000 |        105 |            0 |

|    10003 | 2023-07-16 10:11:09 |           3 | Sally         | 25.00000 |        105 |            0 |

|    10004 | 2023-07-16 10:11:09 |           4 | Sally         | 45.00000 |        105 |            0 |

|    10005 | 2023-07-16 10:11:09 |           5 | Sally         | 35.00000 |        105 |            0 |

|    10006 | 2023-07-16 12:00:30 |           6 | Edward        | 90.00000 |        106 |            0 |

+----------+---------------------+-------------+---------------+----------+------------+--------------+

6 rows in set (0.22 sec)

mysql> desc orders;

+---------------+---------------+------+-----+---------+----------------+

| Field         | Type          | Null | Key | Default | Extra          |

+---------------+---------------+------+-----+---------+----------------+

| order_id      | int           | NO   | PRI | NULL    | auto_increment |

| order_date    | datetime      | NO   |     | NULL    |                |

| customer_id   | int           | NO   |     | NULL    |                |

| customer_name | varchar(255)  | NO   |     | NULL    |                |

| price         | decimal(10,5) | NO   |     | NULL    |                |

| product_id    | int           | NO   |     | NULL    |                |

| order_status  | tinyint(1)    | NO   |     | NULL    |                |

+---------------+---------------+------+-----+---------+----------------+

7 rows in set (0.22 sec)

Use SSH to download the required Kafka connector and MySQL Database jars

Note

Download the jar versions that match your HDInsight Kafka version and MySQL version.

wget https://repo1.maven.org/maven2/org/apache/flink/flink-connector-jdbc/3.1.0-1.17/flink-connector-jdbc-3.1.0-1.17.jar

wget https://repo1.maven.org/maven2/com/mysql/mysql-connector-j/8.0.33/mysql-connector-j-8.0.33.jar

wget https://repo1.maven.org/maven2/org/apache/kafka/kafka-clients/3.2.0/kafka-clients-3.2.0.jar

wget https://repo1.maven.org/maven2/org/apache/flink/flink-connector-kafka/1.17.0/flink-connector-kafka-1.17.0.jar
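The four URLs above follow the standard Maven Central layout (group ID with dots turned into slashes, then artifact ID, then version). When you need a different version, a small helper can build the URL for you. This is a sketch only; the function name `maven_jar_url` is hypothetical, and the coordinates in the list are the ones used in this article.

```shell
#!/bin/sh
# Hypothetical helper: build a Maven Central jar URL from Maven coordinates.
# Usage: maven_jar_url GROUP_ID ARTIFACT_ID VERSION
maven_jar_url() {
  # The group ID becomes a directory path: org.apache.flink -> org/apache/flink
  group_path="$(printf '%s' "$1" | tr '.' '/')"
  printf 'https://repo1.maven.org/maven2/%s/%s/%s/%s-%s.jar\n' \
    "$group_path" "$2" "$3" "$2" "$3"
}

# Print the URLs for the four jars used in this article.
while read -r group artifact version; do
  maven_jar_url "$group" "$artifact" "$version"
done <<'EOF'
org.apache.flink flink-connector-jdbc 3.1.0-1.17
com.mysql mysql-connector-j 8.0.33
org.apache.kafka kafka-clients 3.2.0
org.apache.flink flink-connector-kafka 1.17.0
EOF
```

The printed list can be passed to `wget -i -`, which reads the URLs to download from standard input.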

Moving the planner jar

Move the jar flink-table-planner_2.12-1.17.0-....jar from the webssh pod's /opt/flink-webssh/opt/ directory to /opt/flink-webssh/lib/, and move the jar flink-table-planner-loader-1.17.0-....jar out of /opt/flink-webssh/lib/ into /opt/flink-webssh/opt/. Refer to the issue for more details. Perform the following steps to move the planner jars.

mv /opt/flink-webssh/lib/flink-table-planner-loader-1.17.0-*.*.*.*.jar /opt/flink-webssh/opt/

mv /opt/flink-webssh/opt/flink-table-planner_2.12-1.17.0-*.*.*.*.jar /opt/flink-webssh/lib/

Note

Moving the planner jar is only needed when you use the Hive dialect or the HiveServer2 endpoint. However, this is the recommended setup for Hive integration.
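If you want to rehearse the swap before touching the webssh pod, the following sketch wraps the two mv commands in a function that takes the Flink home directory as an argument and skips jars that are not present. The function name `swap_planner_jars` and the `-DEMO` file names are hypothetical; the demo runs against a scratch directory created with mktemp, not against /opt/flink-webssh.

```shell
#!/bin/sh
# Hypothetical helper: swap the planner jars under a given Flink home directory.
# Usage: swap_planner_jars FLINK_HOME
swap_planner_jars() {
  flink_home="$1"
  # Move the planner-loader jar out of lib/ into opt/.
  for jar in "$flink_home"/lib/flink-table-planner-loader-*.jar; do
    if [ -e "$jar" ]; then mv "$jar" "$flink_home/opt/"; fi
  done
  # Move the Scala planner jar from opt/ into lib/.
  for jar in "$flink_home"/opt/flink-table-planner_*.jar; do
    if [ -e "$jar" ]; then mv "$jar" "$flink_home/lib/"; fi
  done
  return 0
}

# Demo on a scratch directory with placeholder jar names (not real releases).
demo_home="$(mktemp -d)"
mkdir -p "$demo_home/lib" "$demo_home/opt"
touch "$demo_home/lib/flink-table-planner-loader-1.17.0-DEMO.jar"
touch "$demo_home/opt/flink-table-planner_2.12-1.17.0-DEMO.jar"
swap_planner_jars "$demo_home"
ls "$demo_home/lib" "$demo_home/opt"
```

On the webssh pod, the equivalent call would be `swap_planner_jars /opt/flink-webssh`, assuming the directory layout shown in the mv commands above.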

Validation

Use bin/sql-client.sh to connect to Flink SQL

bin/sql-client.sh -j flink-connector-jdbc-3.1.0-1.17.jar -j mysql-connector-j-8.0.33.jar -j kafka-clients-3.2.0.jar -j flink-connector-kafka-1.17.0.jar
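Typing one `-j` flag per jar becomes error-prone as the list grows. As a sketch, the helper below builds the flags from every jar in a directory. The function name `jar_flags` and the `JAR_DIR` variable are assumptions for illustration, and the approach assumes that the jar paths contain no spaces.

```shell
#!/bin/sh
# Hypothetical helper: print "-j <jar>" for every .jar file in a directory.
# Assumption: paths contain no spaces, because the result is word-split later.
jar_flags() {
  dir="$1"
  flags=""
  for jar in "$dir"/*.jar; do
    # Skip the unexpanded pattern when the directory has no jars.
    if [ -e "$jar" ]; then flags="${flags:+$flags }-j $jar"; fi
  done
  printf '%s\n' "$flags"
}

# JAR_DIR is an assumed variable; it defaults to the current directory.
# Print the command instead of running it, so the sketch has no side effects.
echo "bin/sql-client.sh $(jar_flags "${JAR_DIR:-.}")"
```

Keep only the connector and driver jars you need in that directory, because every jar found there is added to the SQL client session.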

Create a Hive catalog and connect to it on Flink SQL

Note

Because the Flink cluster already uses Hive Metastore, no additional configuration is needed.

CREATE CATALOG myhive WITH (

'type' = 'hive'

);

USE CATALOG myhive;
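To avoid retyping these two statements in every session, you can put them in a file and pass it to the SQL client with its `-i` (init file) option. The sketch below only writes the file and prints the command to run. The function name `write_catalog_init` and the temporary file location are assumptions for illustration.

```shell
#!/bin/sh
# Hypothetical helper: write the catalog statements to an init file.
# Usage: write_catalog_init PATH
write_catalog_init() {
  cat > "$1" <<'SQL'
CREATE CATALOG myhive WITH (
  'type' = 'hive'
);
USE CATALOG myhive;
SQL
}

init_file="$(mktemp)"
write_catalog_init "$init_file"

# Print the command instead of running it; add your -j flags as needed.
echo "bin/sql-client.sh -i $init_file"
```

Because the table definitions are stored in Hive Metastore, a new session that starts with this init file can query the registered tables without creating them again.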

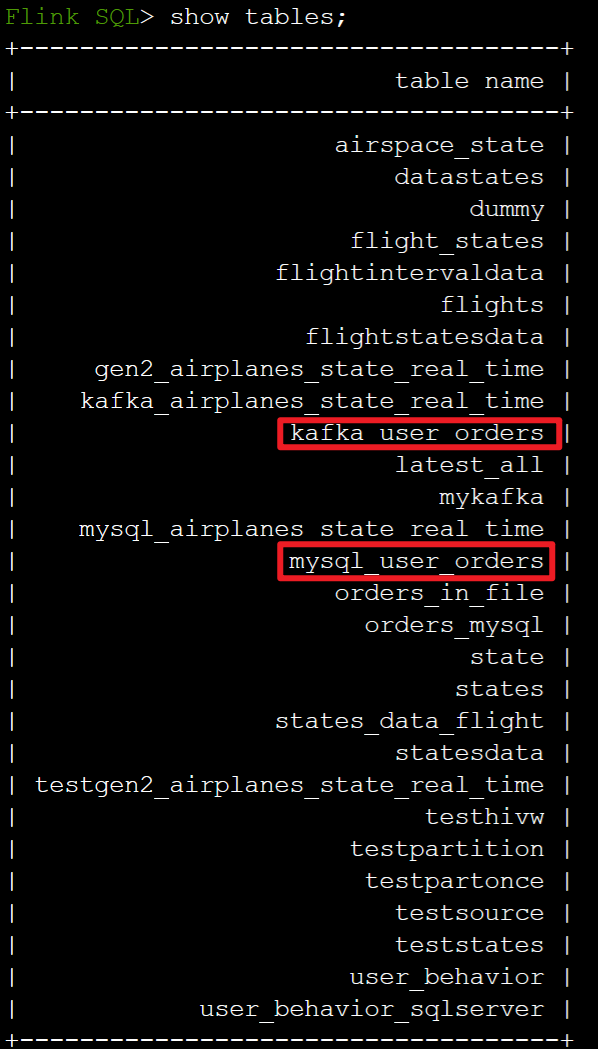

Create Kafka Table on Apache Flink SQL

CREATE TABLE kafka_user_orders (

`user_id` BIGINT,

`user_name` STRING,

`user_email` STRING,

`order_date` TIMESTAMP(3) METADATA FROM 'timestamp',

`price` DECIMAL(10,5),

`product_id` BIGINT,

`order_status` BOOLEAN

) WITH (

'connector' = 'kafka',

'topic' = 'user_orders',

'scan.startup.mode' = 'latest-offset',

'properties.bootstrap.servers' = '10.0.0.38:9092,10.0.0.39:9092,10.0.0.40:9092',

'format' = 'json'

);

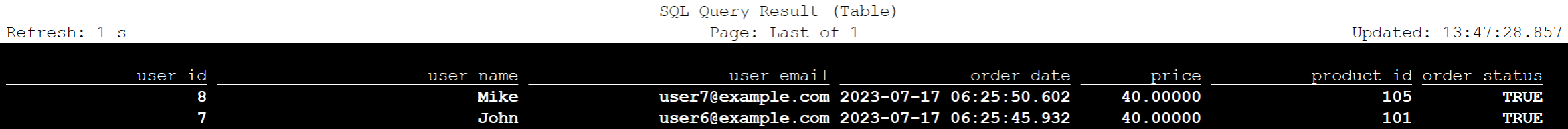

select * from kafka_user_orders;

Create MySQL Table on Apache Flink SQL

CREATE TABLE mysql_user_orders (

`order_id` INT,

`order_date` TIMESTAMP,

`customer_id` INT,

`customer_name` STRING,

`price` DECIMAL(10,5),

`product_id` INT,

`order_status` BOOLEAN

) WITH (

'connector' = 'jdbc',

'url' = 'jdbc:mysql://<servername>.mysql.database.azure.com/mydb',

'table-name' = 'orders',

'username' = '<username>',

'password' = '<password>'

);

select * from mysql_user_orders;

Check the tables registered in the Hive catalog above on Flink SQL

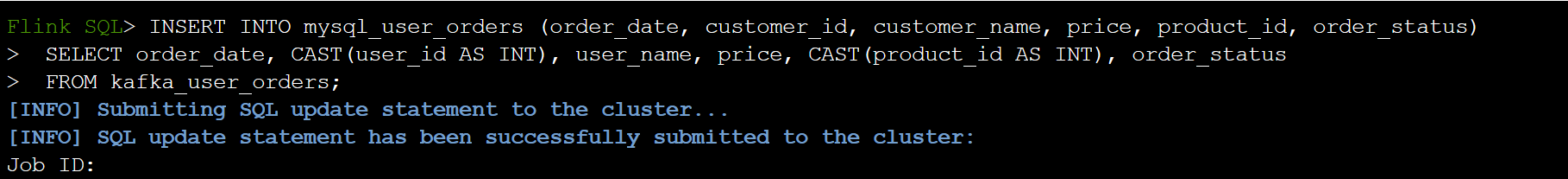

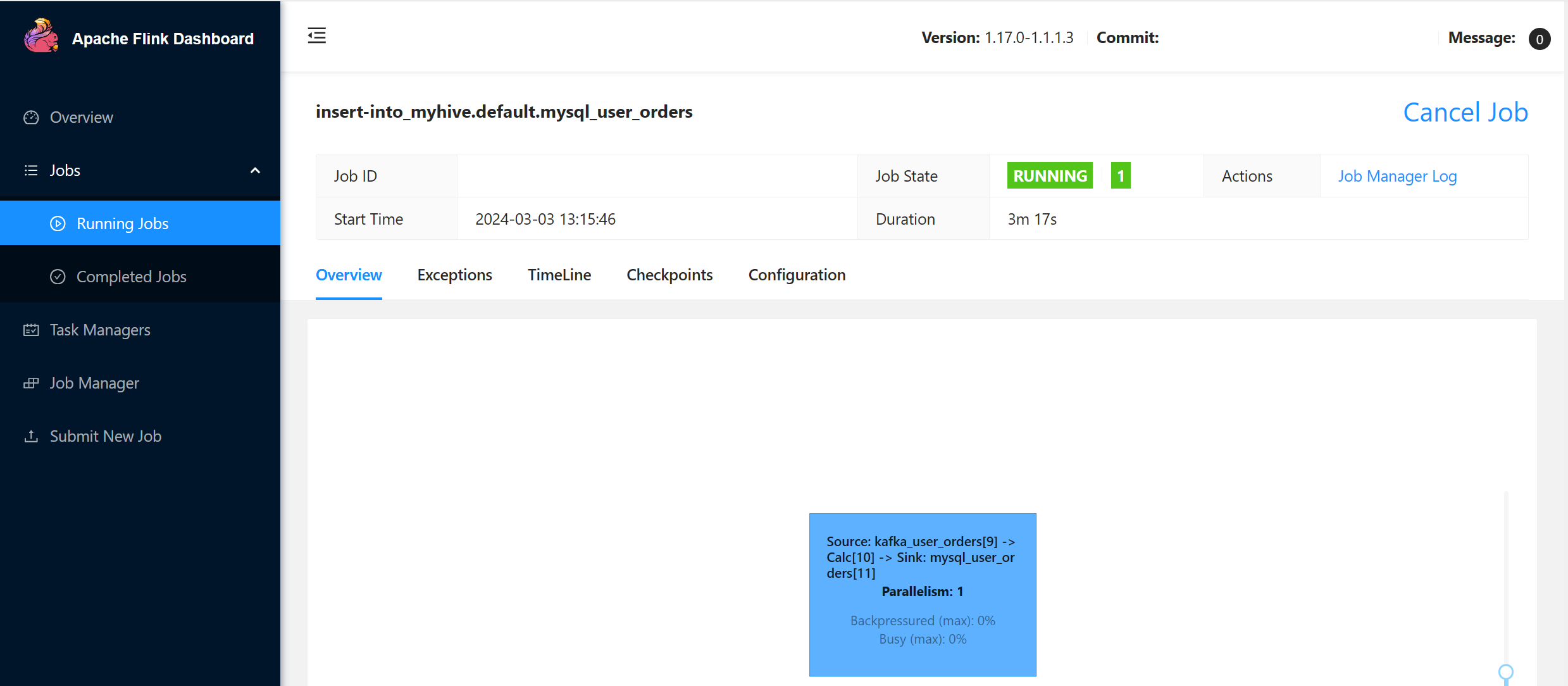

Sink user transaction order info into the master order table in MySQL on Flink SQL

INSERT INTO mysql_user_orders (order_date, customer_id, customer_name, price, product_id, order_status)

SELECT order_date, CAST(user_id AS INT), user_name, price, CAST(product_id AS INT), order_status

FROM kafka_user_orders;

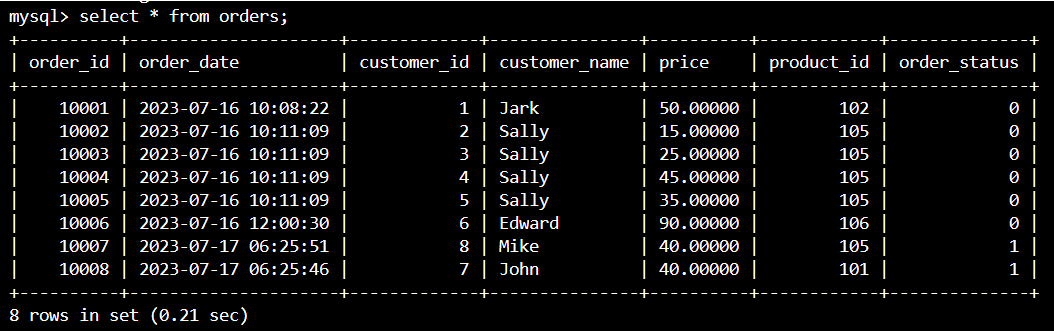

Check that the user transaction order data from Kafka is added to the master orders table in MySQL on Azure Cloud Shell

Creating three more user orders on Kafka

sshuser@hn0-contsk:~$ /usr/hdp/current/kafka-broker/bin/kafka-console-producer.sh --bootstrap-server wn0-contsk:9092 --topic user_orders

>{"user_id": null,"user_name": "Lucy","user_email": "user8@example.com","order_date": "07/17/2023 21:33:44","price": "90.00000","product_id": "102","order_status": false}

>{"user_id": "0009","user_name": "Zark","user_email": "user9@example.com","order_date": "07/17/2023 21:52:07","price": "80.00000","product_id": "103","order_status": true}

>{"user_id": "0010","user_name": "Alex","user_email": "user10@example.com","order_date": "07/17/2023 21:52:07","price": "70.00000","product_id": "104","order_status": true}

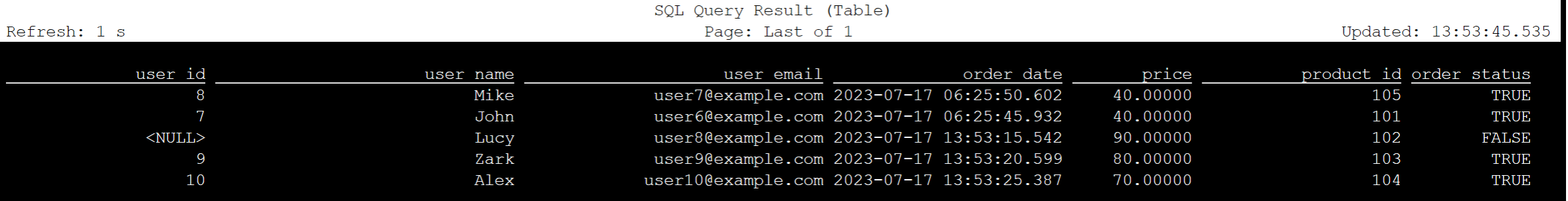

Check Kafka table data on Flink SQL

Flink SQL> select * from kafka_user_orders;

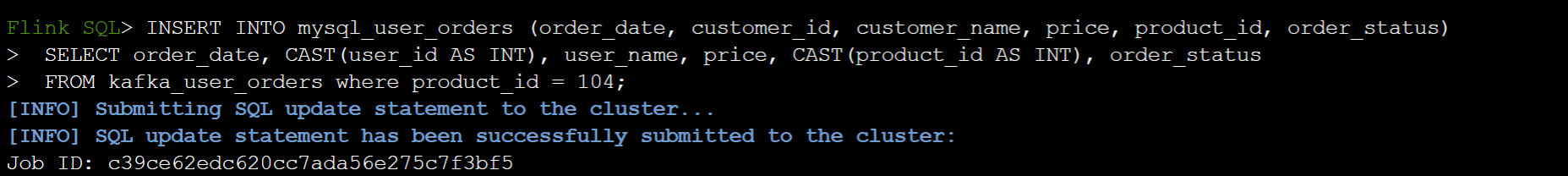

Insert the product_id = 104 record into the orders table in MySQL on Flink SQL

INSERT INTO mysql_user_orders (order_date, customer_id, customer_name, price, product_id, order_status)

SELECT order_date, CAST(user_id AS INT), user_name, price, CAST(product_id AS INT), order_status

FROM kafka_user_orders where product_id = 104;

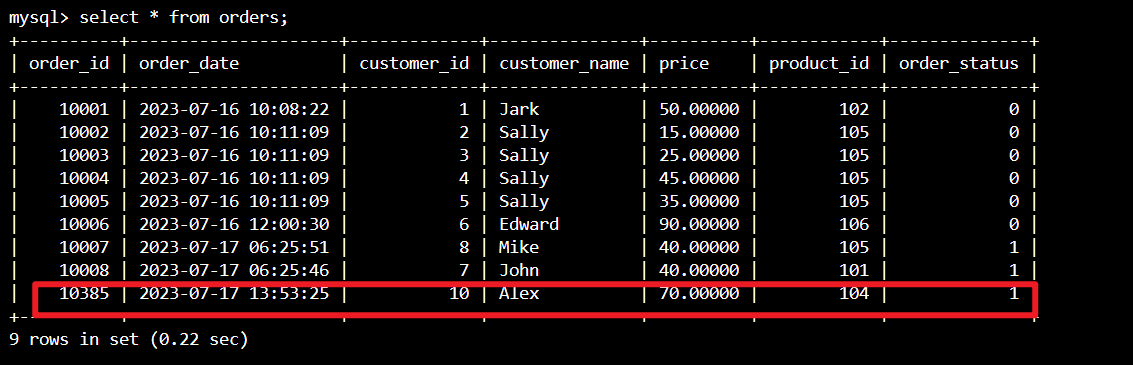

Check that the product_id = 104 record is added to the orders table in MySQL on Azure Cloud Shell
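One way to run this check from a shell is sketched below. The helper name `orders_by_product_query` is hypothetical; the column names come from the orders table created earlier, and `<servername>` and `<username>` are the same placeholders used in the JDBC table definition.

```shell
#!/bin/sh
# Hypothetical helper: build the verification query for one product_id.
# Usage: orders_by_product_query PRODUCT_ID
orders_by_product_query() {
  # %d keeps the argument numeric in the generated SQL.
  printf 'SELECT order_id, order_date, customer_id, customer_name, price, product_id, order_status FROM orders WHERE product_id = %d;\n' "$1"
}

# Print the mysql command instead of running it; fill in the placeholders first.
echo "mysql -h <servername>.mysql.database.azure.com -u <username> -p mydb -e \"$(orders_by_product_query 104)\""
```

If the Flink job ran successfully, the result includes a row for customer Alex with product_id 104.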

Reference

- Apache Hive

- Apache, Apache Hive, Hive, Apache Flink, Flink, and associated open source project names are trademarks of the Apache Software Foundation (ASF).