Migrate Spark configurations from Azure Synapse to Fabric

Apache Spark exposes many configuration properties that you can tune for different workloads. Both Azure Synapse Spark and Fabric Data Engineering let you set these properties. In Fabric, you can add Spark configurations to an environment or set inline Spark properties directly within your Spark jobs. To move Azure Synapse Spark pool configurations to Fabric, use an environment.
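As an illustration of inline Spark properties, the following minimal sketch applies a set of properties to a running session through the standard `spark.conf.set` call. The helper name `apply_spark_properties` and the sample property value are hypothetical. The sketch also assumes the properties can be changed at runtime: Spark rejects changes to static properties after the session starts, so those belong in the environment instead.

```python
from typing import Mapping


def apply_spark_properties(spark, properties: Mapping[str, str]) -> None:
    """Set each property on an existing session via spark.conf.set.

    `spark` is expected to be a SparkSession (or any object exposing the
    same `conf.set(key, value)` interface). Only runtime-modifiable
    properties can be changed this way.
    """
    for key, value in properties.items():
        spark.conf.set(key, value)


# In a notebook, `spark` is the session the runtime already provides.
# Example call (hypothetical value):
# apply_spark_properties(spark, {"spark.sql.shuffle.partitions": "200"})
```

Settings applied this way last only for the current session, whereas properties saved in an environment apply to every job that uses it.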

For Spark configuration considerations, refer to differences between Azure Synapse Spark and Fabric.

Prerequisites

- If you don’t have one already, create a Fabric workspace in your tenant.

- If you don’t have one already, create an Environment in your workspace.

Option 1: Adding Spark configurations to a custom environment

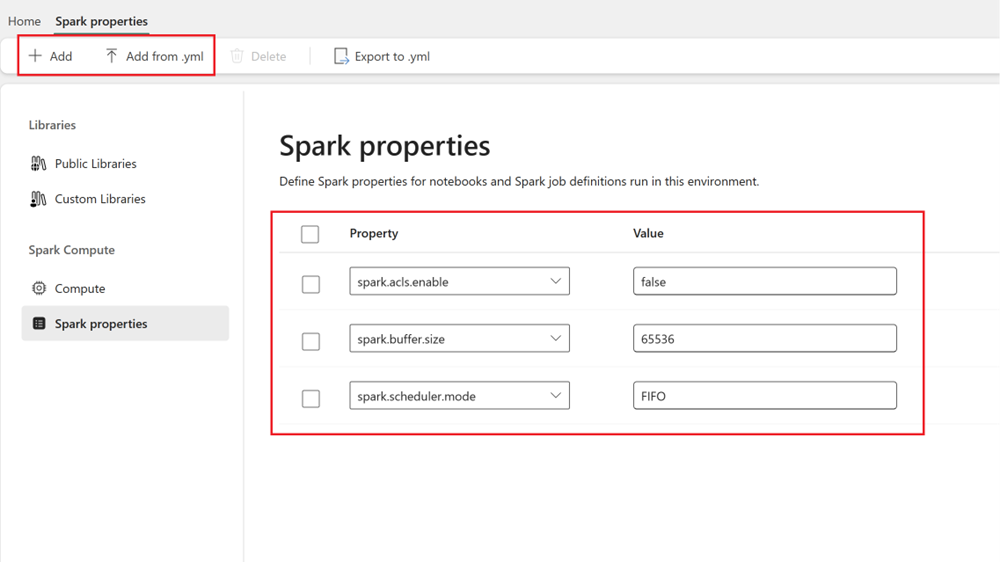

Within an environment, you can set Spark properties, and those configurations are applied to the selected environment pool.

- Open Synapse Studio: Sign in to Azure. Navigate to your Azure Synapse workspace and open Synapse Studio.

- Locate Spark configurations:

  - Go to the Manage area and select Apache Spark pools.

  - Find the Apache Spark pool, select Apache Spark configuration, and locate the Spark configuration name for the pool.

- Get Spark configurations: You can obtain the properties either by selecting View configurations or by exporting the configuration (.txt, .conf, or .json format) from Configurations + libraries > Apache Spark configurations.

- Once you have the Spark configurations, add custom Spark properties to your Environment in Fabric:

  - Within the Environment, go to Spark Compute > Spark properties.

  - Add Spark configurations. You can either add each one manually or import them from a .yml file.

- Select Save and Publish changes.
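Before entering the properties in Fabric, it can help to list what was exported from Synapse. The following is a minimal sketch, assuming the exported .txt or .conf file holds one property per line as `key value` or `key=value`, with optional `#` comment lines. That layout is an assumption, so check it against your actual export. The function name `parse_spark_properties` and the sample properties are hypothetical.

```python
import re
from typing import Dict

# Key: no whitespace or "=". Separator: whitespace and/or a single "=".
_LINE = re.compile(r"([^\s=]+)\s*=?\s*(.*)$")


def parse_spark_properties(text: str) -> Dict[str, str]:
    """Parse 'key value' or 'key=value' lines into a dict.

    Blank lines and lines starting with '#' are skipped. A line with a
    key but no value raises ValueError so it is not silently dropped.
    """
    properties: Dict[str, str] = {}
    for number, raw_line in enumerate(text.splitlines(), start=1):
        line = raw_line.strip()
        if not line or line.startswith("#"):
            continue
        match = _LINE.match(line)
        if match is None or not match.group(2):
            raise ValueError(f"line {number}: expected 'key value', got {line!r}")
        properties[match.group(1)] = match.group(2)
    return properties


# Hypothetical export content, for illustration only.
exported = """
# Exported from a Synapse Spark pool (sample values)
spark.sql.shuffle.partitions 200
spark.executor.memory=8g
"""

# Print the properties in a stable order for review before adding them
# under Spark Compute > Spark properties.
for key, value in sorted(parse_spark_properties(exported).items()):
    print(f"{key}: {value}")
```

The printed list is meant for manual review only. It is not the .yml layout that the Environment import expects.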

Learn more about adding Spark configurations to an Environment.