What is the Synapse Visual Studio Code extension?

The Synapse Visual Studio Code extension supports a pro-developer experience for exploring Microsoft Fabric lakehouses, and authoring Fabric notebooks and Spark job definitions. Learn more about the extension, including how to get started with the necessary prerequisites.

Visual Studio (VS) Code is one of the most popular lightweight source code editors; it runs on your desktop and is available for Windows, macOS, and Linux. By installing the Synapse VS Code extension, you can author, run, and debug your notebooks and Spark job definitions locally in VS Code. You can also post the code to the remote Spark compute in your Fabric workspace to run or debug it. The extension also allows you to browse your lakehouse data, including tables and raw files, in VS Code.

Prerequisites

Prerequisites for the Synapse VS Code extension:

- Install the Java Development Kit (JDK) from the OpenJDK8 website. Make sure to use the JDK link and not the JRE.

- Install Conda.

- Install the Jupyter extension for VS Code.

After you have installed the required software, you must update the operating system properties.

Windows

Open the Windows Settings and search for the setting "Edit the system environment variables".

Under System variables, look for the variable JAVA_HOME. If it doesn't exist, click the New button, enter the variable name JAVA_HOME, and add the directory where Java is installed in the variable value field.

For example, if you install the JDK at this path

C:\Program Files\Java\jdk-1.8

set the JAVA_HOME variable value to that path.

Under System variables, look for the variable Path and double-click that row. Add the following folder paths to the Path variable by clicking the New button and adding the folder path:

%JAVA_HOME%/bin

For Conda, add the following subfolders of the Conda installation. Add the full folder paths for these subfolders:

\miniconda3\condabin

\miniconda3\Scripts

For example:

C:\Users\john.doe\AppData\Local\miniconda3\condabin
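The Path entries described above can be checked with a short script. The following is a minimal sketch, not part of the extension: the function names are hypothetical, the Conda location is the example path from this article, and it assumes the Conda subfolders are named condabin and Scripts. It uses Python's standard ntpath module so that Windows-style paths compare the same way on any operating system.

```python
import ntpath
import os


def expected_path_entries(java_home, conda_root):
    """Return the folders the steps above add to the Windows Path variable."""
    return [
        ntpath.join(java_home, "bin"),
        ntpath.join(conda_root, "condabin"),
        ntpath.join(conda_root, "Scripts"),
    ]


def _normalize(folder):
    # Windows paths ignore case and tolerate "/" and a trailing "\".
    return ntpath.normcase(ntpath.normpath(folder.strip()))


def missing_path_entries(path_value, entries):
    """Return the entries that do not appear in a ';'-separated Path value."""
    present = {_normalize(p) for p in path_value.split(";") if p.strip()}
    return [e for e in entries if _normalize(e) not in present]


if __name__ == "__main__":
    java_home = os.environ.get("JAVA_HOME", "")
    # Hypothetical Conda location; replace it with your own installation folder.
    conda_root = r"C:\Users\john.doe\AppData\Local\miniconda3"
    entries = expected_path_entries(java_home, conda_root)
    for folder in missing_path_entries(os.environ.get("PATH", ""), entries):
        print("Not on Path:", folder)
```

Run the script in a new terminal after you save the environment variables, because terminals that were already open keep the old Path value.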

macOS

Run conda.sh in the terminal:

Open the terminal window, change the directory to the folder where Conda is installed, and then change to the subdirectory etc/profile.d. The subdirectory should contain a file named conda.sh.

Execute

source conda.sh

In the same terminal window, run

sudo conda init

Type in

java -version

The version should be Java 1.8.
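The Java version check can also be scripted. The following is a minimal sketch under a few assumptions: the function names are hypothetical, the script calls java -version (the single-dash form, which Java 8 accepts), and it expects the version banner to contain a quoted string such as "1.8.0_392".

```python
import re
import subprocess


def is_java_8(version_output):
    """Return True if `java -version` output reports a 1.8.x runtime."""
    match = re.search(r'version "(\d+)\.(\d+)', version_output)
    return bool(match) and match.group(1) == "1" and match.group(2) == "8"


def read_java_version():
    """Run `java -version` and return its text, or None if java can't be run."""
    try:
        result = subprocess.run(
            ["java", "-version"], capture_output=True, text=True, check=False
        )
    except OSError:
        return None
    # The JVM prints its version banner to stderr, not stdout.
    return result.stderr or result.stdout


if __name__ == "__main__":
    output = read_java_version()
    if output is None:
        print("java was not found on PATH")
    elif is_java_8(output):
        print("Java 1.8 detected")
    else:
        print("Unexpected Java version:", output.strip())
```

If the script reports a different version, check that JAVA_HOME and the Path entry point to the JDK 8 installation rather than to another Java runtime on the machine.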

Install the extension and prepare your environment

Search for Synapse VS Code in the VS Code extension marketplace and install the extension.

After the extension installation is complete, restart VS Code. The icon for the extension appears in the VS Code activity bar.

Local working directory

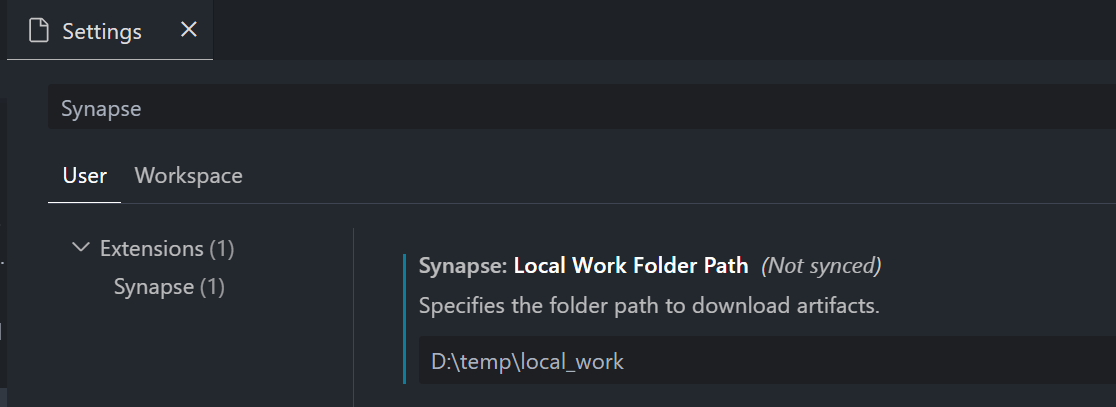

To edit a notebook, you must have a local copy of the notebook content. The local working directory of the extension serves as the local root folder for all downloaded notebooks, even notebooks from different workspaces. By invoking the command Synapse:Set Local Work Folder, you can specify a folder as the local working directory for the extension.

To validate the setup, open the extension settings and check the details there:

Sign in and out of your account

From the VS Code command palette, enter the

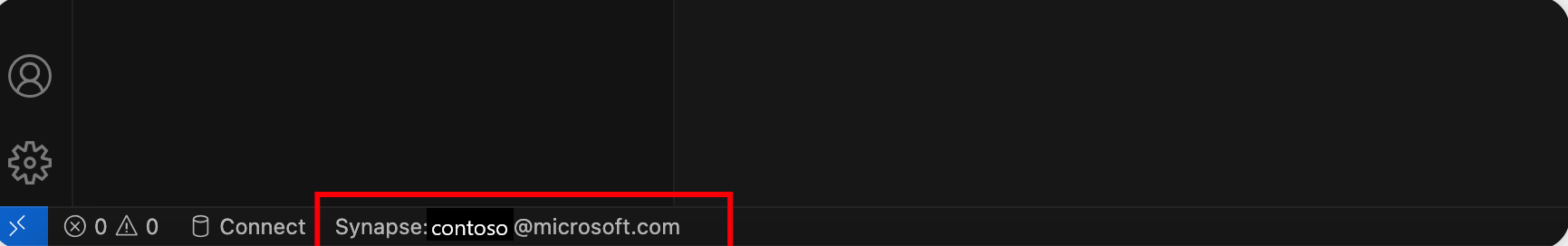

Synapse:Sign incommand to sign in to the extension. A separate browser sign-in page appears.Enter your username and password.

After you successfully sign in, your username will be displayed in the VS Code status bar to indicate that you're signed in.

To sign out of the extension, enter the command Synapse: Sign off.

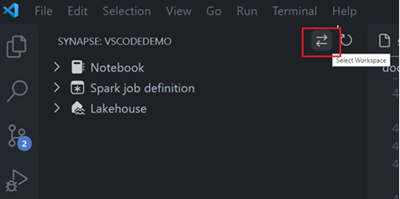

Choose a workspace to work with

To select a Fabric workspace, you must have a workspace created. If you don't have one, you can create one in the Fabric portal. For more information, see Create a workspace.

Once you have a workspace, choose it by selecting the Select Workspace option. A list of all the workspaces that you have access to appears; select the one you want.

Current limitations

- The extension in desktop mode doesn't support the Microsoft Spark Utilities yet.

- Shell commands that start with "!" aren't supported.
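Because lines that start with "!" aren't supported, one possible workaround is to call Python's standard subprocess module from a notebook cell instead. This is an assumption for illustration, not a behavior the extension documents; the helper name run_command is hypothetical, and the command runs wherever the Python kernel for the notebook runs.

```python
import subprocess
import sys


def run_command(args):
    """Run a command without a shell and return (exit_code, combined_output)."""
    result = subprocess.run(
        args,
        stdout=subprocess.PIPE,
        stderr=subprocess.STDOUT,  # merge stderr into stdout
        text=True,
        check=False,
    )
    return result.returncode, result.stdout


# Roughly what a cell containing "!python --version" would do.
code, output = run_command([sys.executable, "--version"])
print(output.strip())
```

Passing the command as a list of arguments avoids shell quoting issues, and the returned exit code lets the cell decide how to handle a failed command.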

Related content

In this overview, you get a basic understanding of how to install and set up the Synapse VS Code extension. The next articles explain how to develop your notebooks and Spark job definitions locally in VS Code.

- To get started with notebooks, see Create and manage Microsoft Fabric notebooks in Visual Studio Code.

- To get started with Spark job definitions, see Create and manage Apache Spark job definitions in Visual Studio Code.