Install a private package as a requirement in Data workflows

Note

Data workflows is powered by Apache Airflow, an open-source platform for programmatically authoring, scheduling, and monitoring complex data workflows. In Airflow, you define tasks, usually by instantiating operators, and combine them into directed acyclic graphs (DAGs) that represent data pipelines.

A Python package organizes related Python modules into a single directory hierarchy. A package is typically a directory that contains a special file named `__init__.py`. Inside a package directory, you can have multiple Python module files (`.py` files) that define functions, classes, and variables. In Data workflows, you can package your custom code this way.
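To make the role of `__init__.py` concrete, here is a minimal sketch that uses only the standard library. It writes an `airflow_operator` package to a temporary directory and imports a module from it. `airflow_operator` is the package name the DAG later in this guide imports from. The module body, a `greet` function, is a hypothetical stand-in with no Airflow dependency so the sketch runs anywhere. It is not the operator built below.

```python
import importlib
import sys
import tempfile
from pathlib import Path


def make_package(root: Path) -> Path:
    """Write a minimal package: airflow_operator/__init__.py plus one module."""
    pkg = root / "airflow_operator"
    pkg.mkdir(parents=True, exist_ok=True)
    # An empty __init__.py is enough to mark the directory as a regular package.
    (pkg / "__init__.py").write_text("")
    # Hypothetical stand-in module body (no Airflow import) so the sketch is self-contained.
    (pkg / "sample_operator.py").write_text(
        "def greet(name):\n"
        "    return f'Hello {name}'\n"
    )
    return pkg


def import_and_greet(name: str) -> str:
    """Create the package in a temp dir, import a module from it, and call it."""
    with tempfile.TemporaryDirectory() as tmp:
        make_package(Path(tmp))
        sys.path.insert(0, tmp)  # make the temp directory searchable by the import system
        importlib.invalidate_caches()  # the files were created after interpreter start
        try:
            module = importlib.import_module("airflow_operator.sample_operator")
            return module.greet(name)
        finally:
            sys.path.remove(tmp)
            # Forget the modules so nothing keeps pointing at the deleted directory.
            sys.modules.pop("airflow_operator.sample_operator", None)
            sys.modules.pop("airflow_operator", None)


if __name__ == "__main__":
    print(import_and_greet("foo_bar"))  # Hello foo_bar
```

Removing the `__init__.py` line turns the directory into a namespace package on Python 3.3+. That usually still imports, but packaging tools treat regular packages more predictably.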

This guide provides step-by-step instructions for installing a `.whl` (wheel) file, the binary distribution format for Python packages, in Data workflows.

For illustration, this guide creates a simple custom operator as a Python package that can be imported as a module inside a DAG file.

Develop a custom operator and test it with an Apache Airflow DAG

1. Create a file `sample_operator.py` and convert it to a private package. Refer to the guide: Creating a package in Python.

   ```python
   from airflow.models.baseoperator import BaseOperator


   class SampleOperator(BaseOperator):
       """A minimal custom operator that builds a greeting from the `name` argument."""

       def __init__(self, name: str, **kwargs) -> None:
           super().__init__(**kwargs)
           self.name = name

       def execute(self, context):
           message = f"Hello {self.name}"
           # The return value of execute() is pushed to XCom by default.
           return message
   ```

2. Create the Apache Airflow DAG file `sample_dag.py` to test the operator defined in step 1.

   ```python
   from datetime import datetime

   from airflow import DAG

   # Imported from the private package: airflow_operator contains sample_operator.py.
   from airflow_operator.sample_operator import SampleOperator

   with DAG(
       "test-custom-package",
       tags=["example"],
       description="A simple tutorial DAG",
       schedule_interval=None,  # no schedule: the DAG runs only when triggered
       start_date=datetime(2021, 1, 1),
   ) as dag:
       task = SampleOperator(task_id="sample-task", name="foo_bar")
   ```

3. Create a GitHub repository containing `sample_dag.py` in the `Dags` folder and your private package file. Common file formats include `.zip`, `.whl`, and `.tar.gz`. Place the file in either the `Dags` or the `Plugins` folder, as appropriate. Synchronize your Git repository with Data workflows, or use the preconfigured repository [Install-Private-Package](https://github.com/ambika-garg/Install-Private-Package-Fabric).

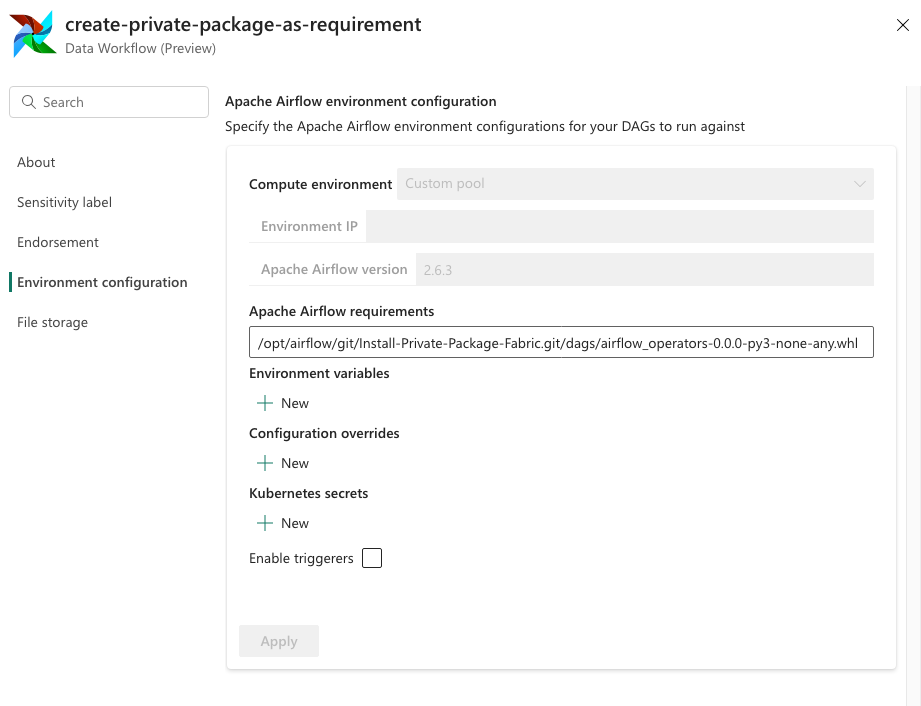

Add your package as a requirement

Add the package as a requirement under Airflow requirements, using the format `/opt/airflow/git/<repoName>.git/<pathToPrivatePackage>`.

For example, if your private package is located at `/dags/test/private.whl` in a GitHub repo, add the requirement `/opt/airflow/git/<repoName>.git/dags/test/private.whl` to the Airflow environment.
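The path rule above is simple string composition, sketched here in Python as a hypothetical helper. The `/opt/airflow/git` prefix and the `.git` suffix on the repository name come from the format in this guide. `my-repo` in the usage line is a placeholder repository name.

```python
import posixpath

# Prefix taken from the format /opt/airflow/git/<repoName>.git/<pathToPrivatePackage>.
GIT_ROOT = "/opt/airflow/git"


def requirement_path(repo_name: str, package_path: str) -> str:
    """Build the Airflow requirement entry for a package file inside a synced repository."""
    # Repo-relative paths are often written with a leading slash (/dags/test/private.whl);
    # strip it, because posixpath.join discards everything before an absolute component.
    relative = package_path.lstrip("/")
    return posixpath.join(GIT_ROOT, f"{repo_name}.git", relative)


if __name__ == "__main__":
    print(requirement_path("my-repo", "/dags/test/private.whl"))
    # /opt/airflow/git/my-repo.git/dags/test/private.whl
```

Stripping the leading slash matters. Without it, `posixpath.join` would return just `/dags/test/private.whl`, which is not a path inside the synced repository.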
