Module 3: Automate and send notifications with Data Factory

In this module, which takes about 10 minutes to complete, you configure the pipeline to send an email when all of its jobs are complete and to run on a schedule.

In this module you learn how to:

- Add an Office 365 Outlook activity to send the output of a Copy activity by email.

- Add a schedule to run the pipeline.

- (Optional) Add a Dataflow activity to the same pipeline.

Add an Office 365 Outlook activity to your pipeline

We use the pipeline you created in Module 1: Create a pipeline in Data Factory.

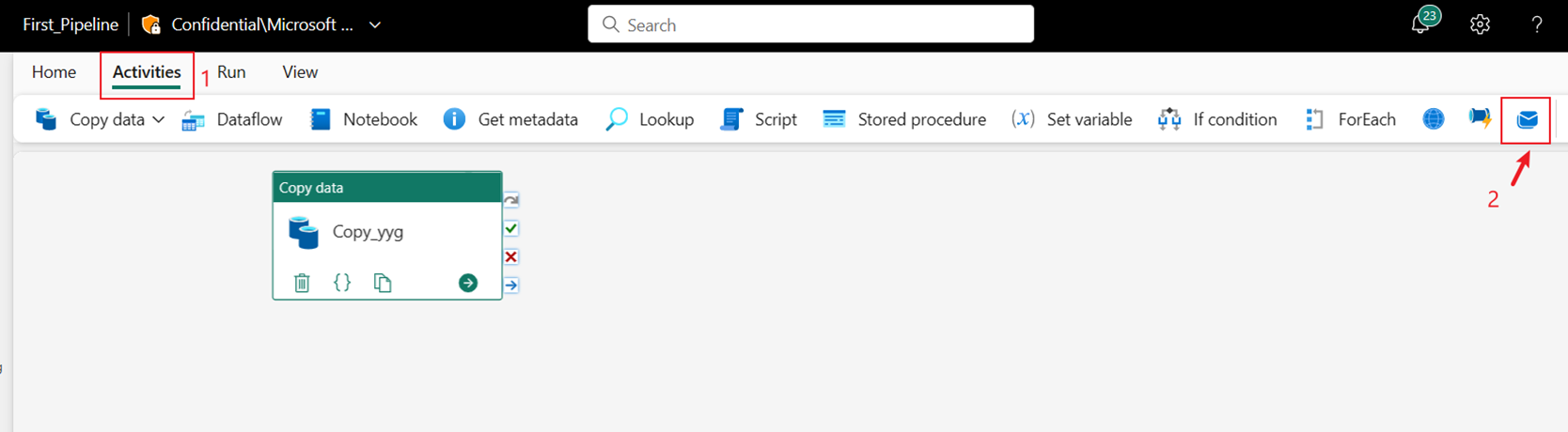

Select the Activities tab in the pipeline editor and find the Office 365 Outlook activity.

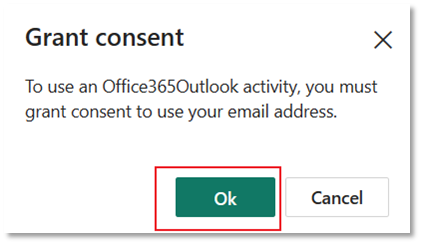

Select OK to grant consent to use your email address.

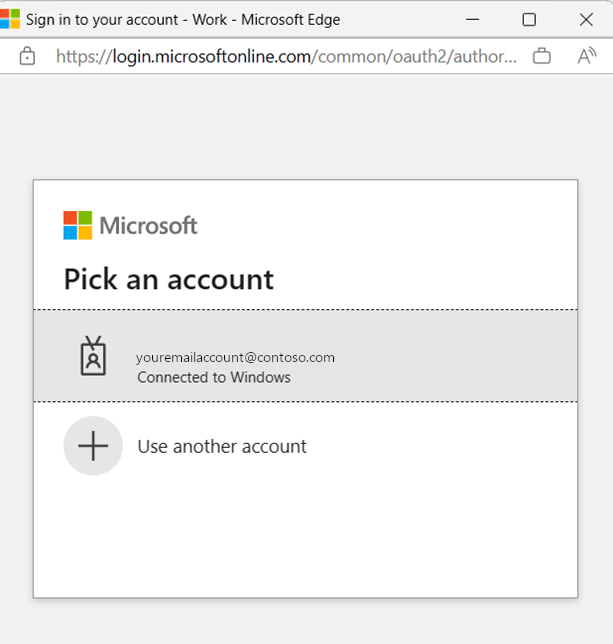

Select the email address you want to use.

Note

The service doesn't currently support personal email. You must use an enterprise email address.

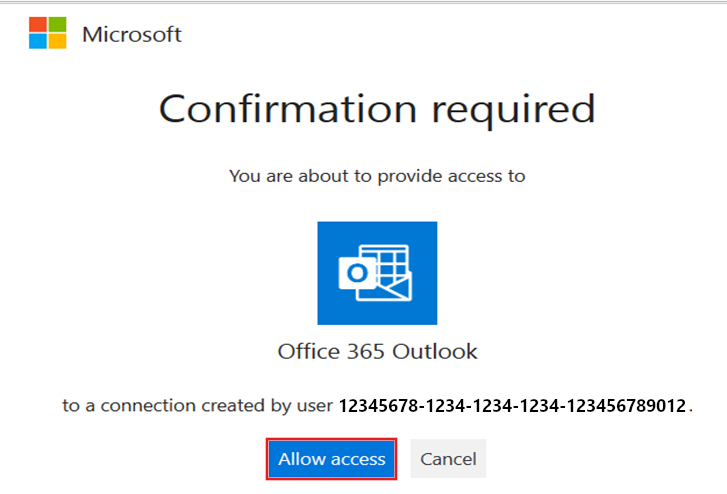

Select Allow access to confirm.

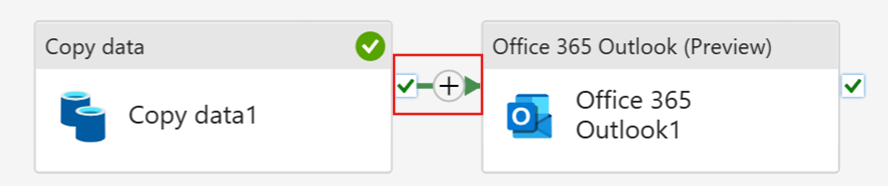

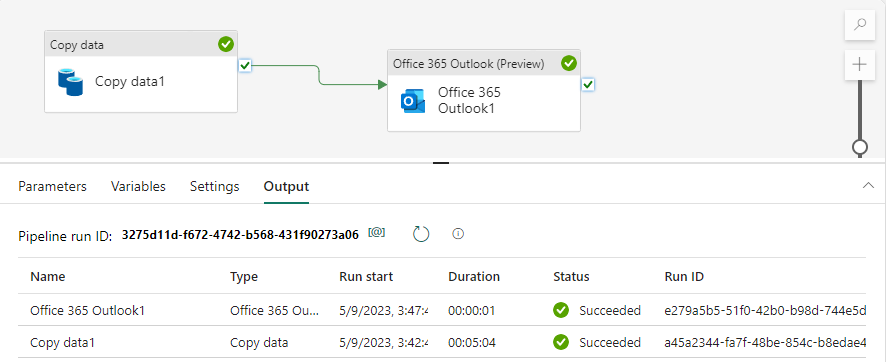

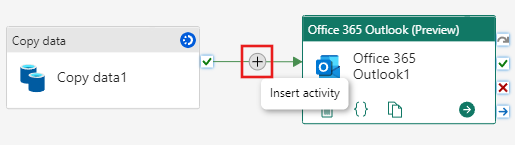

Select and drag the On success path (a green checkbox on the top right side of the activity in the pipeline canvas) from your Copy activity to your new Office 365 Outlook activity.

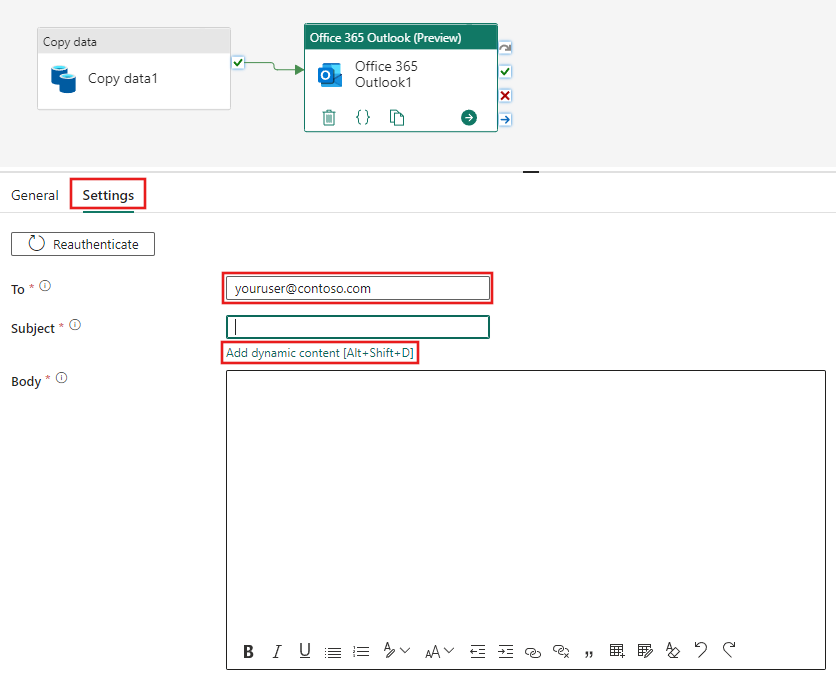

Select the Office 365 Outlook activity from the pipeline canvas, then select the Settings tab of the property area below the canvas to configure the email.

- Enter your email address in the To section. To send to several addresses, separate them with a semicolon (;).

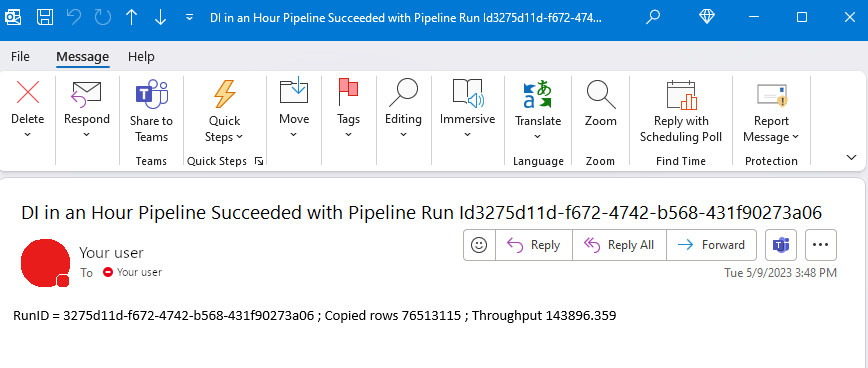

- For the Subject, select the field so that the Add dynamic content option appears, and then select it to display the pipeline expression builder canvas.

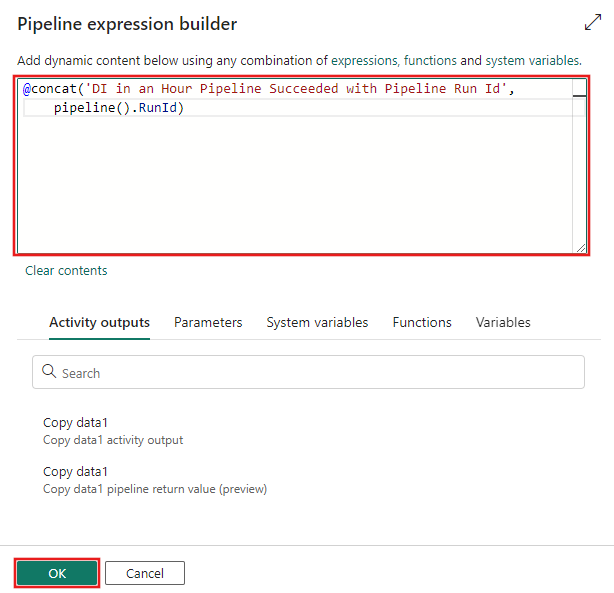

The Pipeline expression builder dialog appears. Enter the following expression, then select OK:

@concat('DI in an Hour Pipeline Succeeded with Pipeline Run Id: ', pipeline().RunId)

For the Body, select the field and choose the Add dynamic content option when it appears below the text area. Enter the following expression in the Pipeline expression builder dialog, then select OK:

@concat('RunID = ', pipeline().RunId, ' ; ', 'Copied rows ', activity('Copy data1').output.rowsCopied, ' ; ','Throughput ', activity('Copy data1').output.throughput)

Note

Replace Copy data1 with the name of your own pipeline copy activity.
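To preview the text that the Body expression produces, the following Python sketch mimics it outside of Data Factory. It is only an approximation: the `concat` helper is a stand-in written for this example, not the pipeline function itself, and the run ID and Copy activity output values are made up for illustration.

```python
def concat(*parts):
    """Join values as text, similar in spirit to the pipeline concat() function."""
    return "".join(str(part) for part in parts)


# Hypothetical values standing in for pipeline().RunId and the
# output of the Copy activity; a real run supplies its own values.
run_id = "00000000-0000-0000-0000-000000000000"
copy_output = {"rowsCopied": 1000, "throughput": 12.5}

# Mirrors the structure of the Body expression shown above.
body = concat(
    "RunID = ", run_id, " ; ",
    "Copied rows ", copy_output["rowsCopied"], " ; ",
    "Throughput ", copy_output["throughput"],
)

print(body)
```

With these sample values, the email body is a single line with the three fields separated by " ; ".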

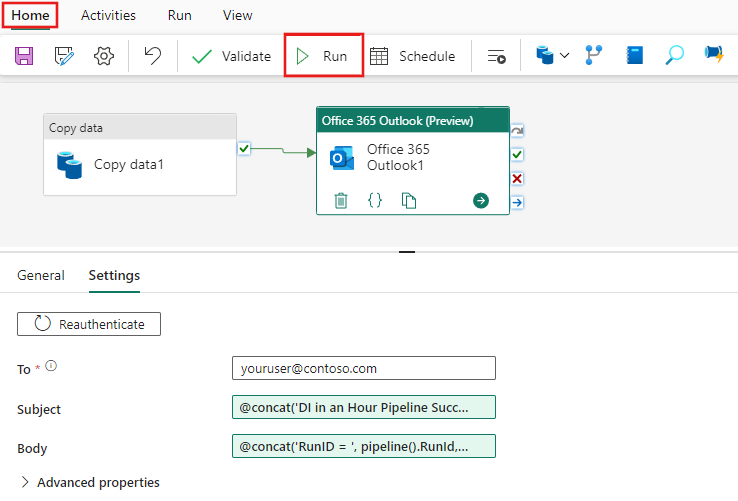

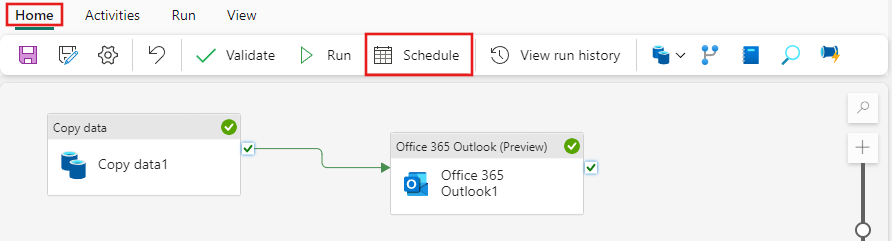

Finally, select the Home tab at the top of the pipeline editor, and choose Run. Then select Save and run in the confirmation dialog to execute these activities.

After the pipeline runs successfully, check your email to find the confirmation email sent from the pipeline.

Schedule pipeline execution

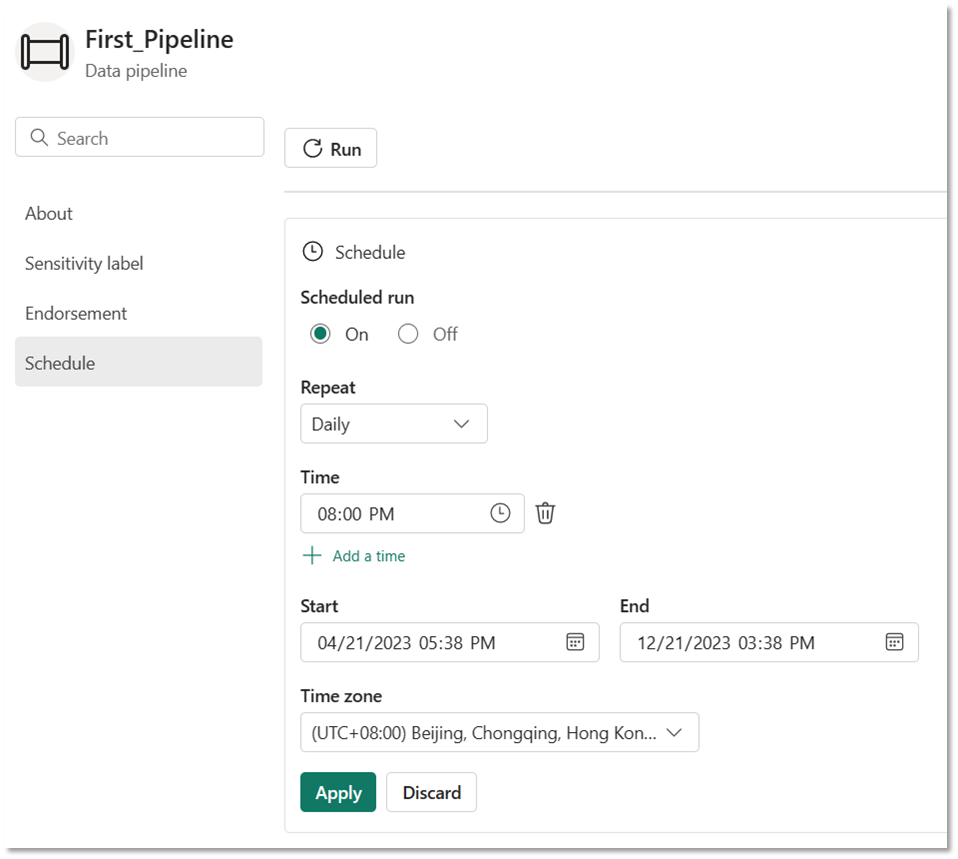

Once you finish developing and testing your pipeline, you can schedule it to execute automatically.

On the Home tab of the pipeline editor window, select Schedule.

Configure the schedule as required. The example here schedules the pipeline to execute daily at 8:00 PM until the end of the year.
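To check which runs a schedule like this implies, the following Python sketch lists one run per day at 8:00 PM through December 31. It does not call any Data Factory API. The `daily_runs` helper and the start date are assumptions made for this example, since the tutorial does not specify a start date.

```python
from datetime import date, datetime, time, timedelta


def daily_runs(start: date, run_at: time, end: date):
    """Yield one run datetime per day from start through end, inclusive."""
    day = start
    while day <= end:
        yield datetime.combine(day, run_at)
        day += timedelta(days=1)


# Hypothetical start date chosen for illustration only.
start = date(2024, 12, 29)
end_of_year = date(start.year, 12, 31)

# 8:00 PM is 20:00 on a 24-hour clock.
runs = list(daily_runs(start, time(20, 0), end_of_year))

for run in runs:
    print(run.isoformat())
```

With this start date, the sketch lists three runs, the last one on December 31 at 20:00.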

(Optional) Add a Dataflow activity to the pipeline

You can also add the dataflow you created in Module 2: Create a dataflow in Data Factory into the pipeline.

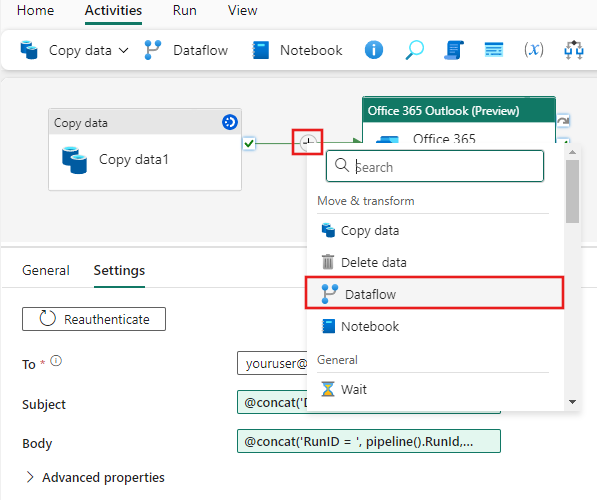

Hover over the green line connecting the Copy activity and the Office 365 Outlook activity on your pipeline canvas, and select the + button to insert a new activity.

Choose Dataflow from the menu that appears.

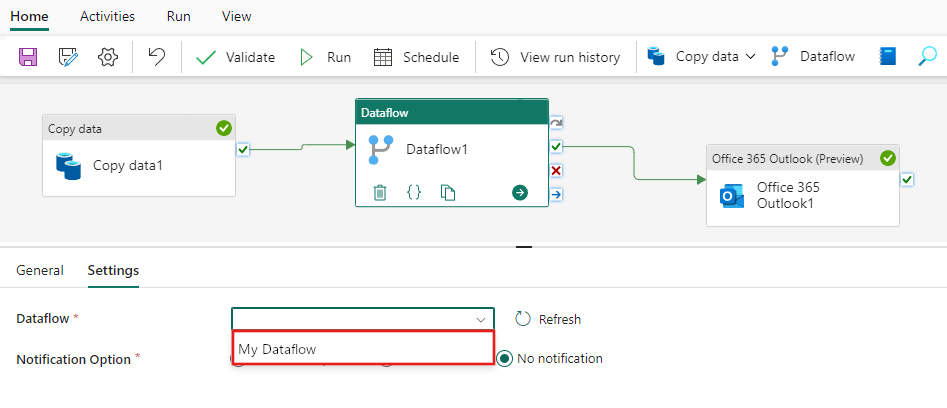

The newly created Dataflow activity is inserted between the Copy activity and the Office 365 Outlook activity, and selected automatically, showing its properties in the area below the canvas. Select the Settings tab on the properties area, and then select your dataflow created in Module 2: Create a dataflow in Data Factory.
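The resulting activity order can be pictured with a small Python sketch. It models the pipeline as a simple ordered list, which is a simplification for illustration and not how Data Factory stores pipelines. The `insert_between` helper and the activity names `Office 365 Outlook1` and `Dataflow1` are hypothetical; use the names shown on your own canvas.

```python
def insert_between(flow, before, after, new):
    """Return a copy of flow with new placed between two adjacent activities."""
    i = flow.index(before)
    if i + 1 >= len(flow) or flow[i + 1] != after:
        raise ValueError(f"{after!r} does not directly follow {before!r}")
    return flow[: i + 1] + [new] + flow[i + 1 :]


# Pipeline before the optional step: Copy activity, then the email activity.
flow = ["Copy data1", "Office 365 Outlook1"]

# Selecting + on the connecting line and choosing Dataflow
# corresponds to inserting the new activity between the two.
flow = insert_between(flow, "Copy data1", "Office 365 Outlook1", "Dataflow1")

print(" -> ".join(flow))
```

In this model, the email activity now runs after the Dataflow activity rather than directly after the Copy activity.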

Related content

In this third module of our end-to-end tutorial for your first data integration using Data Factory in Microsoft Fabric, you learned how to:

- Use a Copy activity to ingest raw data from a source store into a table in a data Lakehouse.

- Use a Dataflow activity to process the data and move it into a new table in the Lakehouse.

- Use an Office 365 Outlook activity to send an email notifying you once all the jobs are complete.

- Configure the pipeline to run on a scheduled basis.

- (Optional) Insert a Dataflow activity in an existing pipeline flow.

Now that you've completed the tutorial, learn more about how to monitor pipeline runs: