Design a scalable partitioning strategy for Azure Table storage

This article discusses partitioning a table in Azure Table storage and strategies you can use to ensure efficient scalability.

Azure provides cloud storage that is highly available and highly scalable. The underlying storage system for Azure is provided through a set of services, including Azure Blob storage, Azure Table storage, Azure Queue storage, and Azure Files.

Azure Table storage is designed to store structured data. The Azure Storage service supports an unlimited number of tables. Each table can scale to massive levels and provide terabytes of physical storage. To best take advantage of tables, you must partition your data optimally. This article explores strategies you can use to efficiently partition data for Azure Table storage.

Table entities

Table entities represent the units of data that are stored in a table. Table entities are similar to rows in a typical relational database table. Each entity defines a collection of properties. Each property is defined as a key/value pair by its name, value, and the value's data type. Entities must define the following three system properties as part of the property collection:

PartitionKey: The PartitionKey property stores string values that identify the partition that an entity belongs to. Partitions, as we discuss later, are integral to the scalability of the table. Entities that have the same PartitionKey value are stored in the same partition.

RowKey: The RowKey property stores string values that uniquely identify entities within each partition. The PartitionKey and the RowKey together form the primary key for the entity.

Timestamp: The Timestamp property provides traceability for an entity. A timestamp is a date/time value that tells you the last time the entity was modified. A timestamp is sometimes referred to as the entity's version. Modifications to timestamps are ignored because the table service maintains the value for this property during all insert and update operations.

Table primary key

The primary key for an Azure entity consists of the combined PartitionKey and RowKey properties. The two properties form a single clustered index within the table. The PartitionKey and RowKey values can each be up to 1 KiB in size. Empty strings are also permitted; however, null values are not permitted.

The clustered index sorts by the PartitionKey in ascending order and then by RowKey in ascending order. The sort order is observed in all query responses. Lexical comparisons are used during the sorting operation. A string value of "111" appears before a string value of "2". In some cases, you might want the sort order to be numeric. To sort in a numeric and ascending order, you must use fixed-length, zero-padded strings. In the preceding example, "002" appears before "111".
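As a minimal sketch of the zero-padding technique, the following C# helper formats a non-negative number as a fixed-length string. The `KeyFormat` class and its `width` parameter are illustrative names, not part of any Azure library, and ordinal string comparison is used here as a stand-in for the service's lexical sort.

```csharp
using System;
using System.Globalization;

public static class KeyFormat
{
    // Formats a non-negative number as a fixed-length, zero-padded string
    // so that lexical (string) order matches numeric order.
    public static string ToPaddedKey(long value, int width)
    {
        if (value < 0)
            throw new ArgumentOutOfRangeException(nameof(value));

        string key = value.ToString(CultureInfo.InvariantCulture).PadLeft(width, '0');

        // A value with more digits than the width would break the fixed-length rule.
        if (key.Length > width)
            throw new ArgumentException("Value does not fit in the requested width.", nameof(value));

        return key;
    }
}
```

With this helper, `KeyFormat.ToPaddedKey(2, 3)` returns "002", which compares before "111" in an ordinal comparison, whereas the unpadded "2" compares after "111". Choose a width large enough for the largest value you expect, because changing the width later changes the sort order of existing keys.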

Table partitions

Partitions represent a collection of entities with the same PartitionKey values. Partitions are always served from one partition server. Each partition server can serve one or more partitions. A partition server has a rate limit on the number of entities it can serve from one partition over time. Specifically, a partition has a scalability target of 2000 entities per second. This throughput might be higher during minimal load on the storage node, but it's throttled down when the node becomes hot or active.

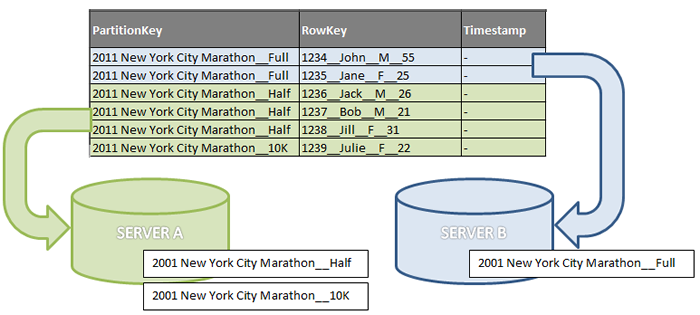

To better illustrate the concept of partitioning, the following figure shows a table that contains a small subset of data for foot race event registrations. The figure presents a conceptual view of partitioning where the PartitionKey contains three different values: the event's name combined with three distances (full marathon, half marathon, and 10 km). This example uses two partition servers. Server A contains registrations for the half-marathon and 10-km distances. Server B contains only the full-marathon distances. The RowKey values are shown to provide context, but the values aren't meaningful for this example.

A table with three partitions

Scalability

Because a partition is always served from a single partition server and each partition server can serve one or more partitions, the efficiency of serving entities is correlated with the health of the server. Servers that encounter high traffic for their partitions might not be able to sustain a high throughput. For example, in the preceding figure, if many requests for "2011 New York City Marathon__Half" are received, server A might become too hot. To increase the throughput of the server, the storage system load-balances the partitions to other servers. The result is that the traffic is distributed across many other servers. For optimal load balancing of traffic, you should use more partitions so that Azure Table storage can distribute the partitions to more partition servers.

Entity group transactions

An entity group transaction is a set of storage operations that are implemented atomically on entities that have the same PartitionKey value. If any storage operation in the entity group fails, all the storage operations in the group are rolled back. An entity group transaction consists of no more than 100 storage operations and can be no more than 4 MiB in size. Entity group transactions provide Azure Table storage with a limited form of the atomicity, consistency, isolation, and durability (ACID) semantics provided by relational databases.

Entity group transactions improve throughput because they reduce the number of individual storage operations that must be submitted to Azure Table storage. Entity group transactions also provide an economic benefit. An entity group transaction is billed as a single storage operation regardless of how many storage operations it contains. Because all the storage operations in an entity group transaction affect entities that have the same PartitionKey value, a need to use entity group transactions can drive the selection of the PartitionKey value.
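The following sketch shows one way an application might plan its batches so that each one respects the same-PartitionKey rule and the 100-operation limit. It uses a plain tuple as a stand-in for an entity, the `BatchPlanner` name is hypothetical, and the sketch does not check the 4 MiB payload limit or submit anything to the service.

```csharp
using System;
using System.Collections.Generic;
using System.Linq;

public static class BatchPlanner
{
    // Operation limit per entity group transaction, as stated in this article.
    public const int MaxOperationsPerBatch = 100;

    // Splits entities into batches that share one PartitionKey and
    // contain at most MaxOperationsPerBatch entities each.
    public static List<List<(string PartitionKey, string RowKey)>> Plan(
        IEnumerable<(string PartitionKey, string RowKey)> entities)
    {
        var batches = new List<List<(string PartitionKey, string RowKey)>>();

        foreach (var group in entities.GroupBy(e => e.PartitionKey, StringComparer.Ordinal))
        {
            var items = group.ToList();
            for (int i = 0; i < items.Count; i += MaxOperationsPerBatch)
            {
                int count = Math.Min(MaxOperationsPerBatch, items.Count - i);
                batches.Add(items.GetRange(i, count));
            }
        }

        return batches;
    }
}
```

For example, 250 entities in one partition and 3 in another would yield four batches (100, 100, 50, and 3 entities). Atomicity holds only within each batch, so operations that must succeed or fail together need to land in the same batch.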

Range partitions

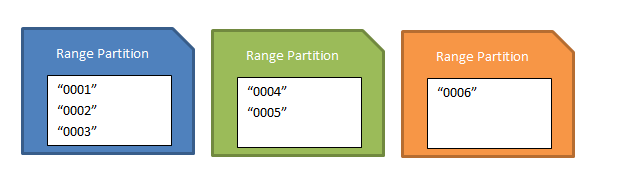

If you use unique PartitionKey values for your entities, each entity belongs in its own partition. If the unique values you use increase or decrease in value, it's possible that Azure will create range partitions. Range partitions group entities that have sequential, unique PartitionKey values to improve the performance of range queries. Without range partitions, a range query must cross partition boundaries or server boundaries, which can decrease query performance. Consider an application that uses the following table, which has an increasing sequence value for PartitionKey:

| PartitionKey | RowKey |

|---|---|

| "0001" | - |

| "0002" | - |

| "0003" | - |

| "0004" | - |

| "0005" | - |

| "0006" | - |

Azure might group the first three entities into a range partition. If you apply a range query to the table that uses the PartitionKey as the criteria and requests entities from "0001" to "0003", the query might perform efficiently because the entities are served from a single partition server. There's no guarantee when and how a range partition will be created.

The existence of range partitions for your table can affect the performance of your insert operations if you insert entities that have increasing or decreasing PartitionKey values. Inserting entities that have increasing PartitionKey values is called an append-only pattern. Inserting entities that have decreasing values is called a prepend-only pattern. Consider not using these kinds of patterns because the overall throughput of your insert requests is limited by a single partition server. This is because, if range partitions exist, the first and last (range) partitions contain the least and greatest PartitionKey values, respectively. Therefore, the insert of a new entity, one that has a sequentially lower or higher PartitionKey value, targets one of the end partitions. The following figure shows a possible set of range partitions that are based on the preceding example. If a set of "0007", "0008", and "0009" entities were inserted, they would be assigned to the last (orange) partition.

A set of range partitions

It's important to note that there's no negative effect on performance if the insert operations use PartitionKey values that are more scattered.

Analyze data

Unlike a table in a relational database that you can use to manage indexes, tables in Azure Table storage can only have one index. An index in Azure Table storage always consists of the PartitionKey and RowKey properties.

In an Azure table, you don't have the luxury of performance-tuning your table by adding more indexes or by altering an existing table after you roll it out. You must analyze the data as you design your table. The most important aspects to consider for optimal scalability and for query and insert efficiency are the PartitionKey and RowKey values. This article emphasizes how to choose the PartitionKey because it directly relates to how tables are partitioned.

Partition sizing

Partition sizing refers to the number of entities a partition contains. As we discuss in Scalability, having more partitions means you get better load balancing. The granularity of the PartitionKey value affects the size of the partitions. At the coarsest level, if a single value is used as the PartitionKey, all the entities are in a single partition that is very large. At the finest level of granularity, the PartitionKey can contain unique values for each entity. The result is that there's a partition for each entity. The following table shows the advantages and disadvantages for the range of granularities:

| PartitionKey granularity | Partition size | Advantages | Disadvantages |
|---|---|---|---|
| Single value | Small number of entities | Batch transactions are possible with any entity. All entities are local and served from the same storage node. | |
| Single value | Large number of entities | Entity group transactions might be possible with any entity. For more information about the limits of entity group transactions, see Performing entity group transactions. | Scaling is limited. Throughput is limited to the performance of a single server. |
| Multiple values | Multiple partitions. Partition sizes depend on entity distribution. | Batch transactions are possible on some entities. Dynamic partitioning is possible. Single-request queries are possible (no continuation tokens). Load balancing across more partition servers is possible. | A highly uneven distribution of entities across partitions might limit the performance of the larger and more active partitions. |
| Unique values | Many small partitions | The table is highly scalable. Range partitions might improve the performance of cross-partition range queries. | Queries that involve ranges might require visits to more than one server. Batch transactions are not possible. Append-only or prepend-only patterns might affect insert throughput. |

The table shows how scaling is affected by PartitionKey values. It's a best practice to favor smaller partitions because they offer better load balancing. Larger partitions might be appropriate in some scenarios, and they're not necessarily disadvantageous. For example, if your application doesn't require scalability, a single large partition might be appropriate.

Determine queries

Queries retrieve data from tables. When you analyze the data for a table in Azure Table storage, it's important to consider which queries the application will use. If an application has several queries, you might need to prioritize them, although your decisions might be subjective. In many cases, dominant queries are discernible from other queries. In terms of performance, queries fall into different categories. Because a table has only one index, query performance usually is related to the PartitionKey and RowKey properties. The following table shows the different types of queries and their performance ratings:

| Query type | PartitionKey match | RowKey match | Performance rating |
|---|---|---|---|
| Point query | Exact | Exact | Best |
| Row range scan | Exact | Partial | Better with smaller-sized partitions. Bad with partitions that are very large. |
| Partition range scan | Partial | Partial | Good with a small number of partition servers being touched. Worse with more servers being touched. |
| Full table scan | Partial, none | Partial, none | Worse with a subset of partitions being scanned. Worst with all partitions being scanned. |

Note

The table defines performance ratings relative to each other. The number and size of the partitions might ultimately determine how the query performs. For example, a partition range scan for a table that has many large partitions might perform poorly compared to a full table scan for a table that has a few small partitions.

The query types listed in the preceding table show a progression from the best types of queries to use to the worst types, based on their performance ratings. Point queries are the best types of queries to use because they fully use the table's clustered index. The following point query uses the data from the foot races registration table:

https://<account>.table.core.windows.net/registrations(PartitionKey='2011 New York City Marathon__Full',RowKey='1234__John__M__55')

If the application uses multiple queries, not all of them can be point queries. In terms of performance, range queries follow point queries. There are two types of range queries: the row range scan and the partition range scan. The row range scan specifies a single partition. Because the operation occurs on a single partition server, row range scans generally are more efficient than partition range scans. However, a key factor in the performance of row range scans is how selective a query is. Query selectivity dictates how many rows must be iterated to find the matching rows. More selective queries are more efficient during row range scans.
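To make the three efficient query shapes concrete, the following sketch builds OData filter strings of the kind that Table storage accepts, using the `eq`, `ge`, and `lt` comparison operators. The `TableFilters` class and its method names are hypothetical helpers for illustration; they only compose text and do not call the service.

```csharp
using System;

public static class TableFilters
{
    // Wraps a value as an OData string literal, doubling any single quotes.
    private static string Quote(string value) => "'" + value.Replace("'", "''") + "'";

    // Point query: exact PartitionKey and exact RowKey.
    public static string Point(string partitionKey, string rowKey) =>
        $"PartitionKey eq {Quote(partitionKey)} and RowKey eq {Quote(rowKey)}";

    // Row range scan: exact PartitionKey, RowKey in [rowKeyFrom, rowKeyTo).
    public static string RowRange(string partitionKey, string rowKeyFrom, string rowKeyTo) =>
        $"PartitionKey eq {Quote(partitionKey)} and RowKey ge {Quote(rowKeyFrom)} and RowKey lt {Quote(rowKeyTo)}";

    // Partition range scan: PartitionKey in [partitionKeyFrom, partitionKeyTo).
    public static string PartitionRange(string partitionKeyFrom, string partitionKeyTo) =>
        $"PartitionKey ge {Quote(partitionKeyFrom)} and PartitionKey lt {Quote(partitionKeyTo)}";
}
```

A filter that omits PartitionKey entirely, or that tests only non-key properties, falls into the full table scan category because the clustered index cannot narrow the search.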

To assess the priorities of your queries, consider the frequency and response time requirements for each query. Queries that are frequently executed might be prioritized higher. However, an important but rarely used query might have strict latency requirements that rank it higher on the priority list.

Choose the PartitionKey value

The core of any table's design is its scalability, the queries used to access it, and storage operation requirements. The PartitionKey values you choose dictate how a table is partitioned and the type of queries you can use. Storage operations, and especially inserts, also might affect your choice of PartitionKey values. The PartitionKey values can range from single values to unique values. They also can be created by using multiple values. You can use entity properties to form the PartitionKey value. Or, the application can compute the value. The following sections discuss important considerations.

Entity group transactions

Developers should first consider whether the application will use entity group transactions (batch updates). Entity group transactions require entities to have the same PartitionKey value. Also, because batch updates are for an entire group, the choices of PartitionKey values might be limited. For example, a banking application that maintains cash transactions must insert cash transactions into the table atomically. Cash transactions represent both the debit and the credit sides and must net to zero. This requirement means that the account number can't be used as any part of the PartitionKey value because each side of the transaction uses different account numbers. Instead, a transaction ID might be a better choice.

Partitions

Partition numbers and sizes affect the scalability of a table that is under load. They're also controlled by how granular the PartitionKey values are. It can be challenging to determine the PartitionKey based on the partition size, especially if the distribution of values is hard to predict. A good rule of thumb is to use multiple, smaller partitions. Many table partitions make it easier for Azure Table storage to manage the storage nodes the partitions are served from.

Choosing unique or finer values for the PartitionKey results in more, smaller partitions. This generally is favorable because the system can load-balance the many partitions to distribute the load across many partition servers. However, you should consider the effect of having many partitions on cross-partition range queries. These types of queries must visit multiple partitions to satisfy a query. It's possible that the partitions are distributed across many partition servers. If a query crosses a server boundary, continuation tokens must be returned. Continuation tokens specify the next PartitionKey or RowKey values to retrieve the next set of data for the query. In other words, continuation tokens represent at least one more request to the service, which can degrade the overall performance of the query.

Query selectivity is another factor that can affect the performance of the query. Query selectivity is a measure of how many rows must be iterated for each partition. The more selective a query is, the more efficient the query is at returning the rows you want. The overall performance of range queries might depend on the number of partition servers that must be touched or how selective the query is. You also should avoid using the append-only or prepend-only patterns when you insert data into your table. If you use these patterns, you might limit the throughput of your insert operations even though you create many small partitions. The append-only and prepend-only patterns are discussed in Range partitions.

Queries

Knowing the queries that you'll use can help you determine which properties are important to consider for the PartitionKey value. The properties that you use in the queries are candidates for the PartitionKey value. The following table provides a general guideline of how to determine the PartitionKey value:

| If the entity… | Action |
|---|---|
| Has one key property | Use it as the PartitionKey. |
| Has two key properties | Use one as the PartitionKey and the other as the RowKey. |
| Has more than two key properties | Use a composite key of concatenated values. |

If there's more than one equally dominant query, you can insert the information multiple times, using a different RowKey value for each query that you need to serve. Your application will manage secondary (or tertiary, and so on) rows. You can use this type of pattern to satisfy the performance requirements of your queries. The following example uses the data from the foot race registration example. It has two dominant queries:

- Query by bib number

- Query by age

To serve both dominant queries, insert two rows as an entity group transaction. The following table shows the PartitionKey and RowKey properties for this scenario. The RowKey values provide a prefix for the bib and age so that the application can distinguish between the two values.

| PartitionKey | RowKey |
|---|---|
| 2011 New York City Marathon__Full | BIB:01234__John__M__55 |
| 2011 New York City Marathon__Full | AGE:055__1234__John__M |

In this example, an entity group transaction is possible because the PartitionKey values are the same. The group transaction provides atomicity of the insert operation. Although it's possible to use this pattern with different PartitionKey values, we recommend that you use the same values to gain this benefit. Otherwise, you might have to write extra logic to ensure atomic transactions that use different PartitionKey values.
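The key layout in the preceding table can be sketched as follows. The `RegistrationKeys` class, its parameter names, and the padding widths (five digits for the bib, three for the age) are assumptions inferred from the sample values, not a prescribed format.

```csharp
using System;

public static class RegistrationKeys
{
    // PartitionKey shared by both rows, for example "2011 New York City Marathon__Full".
    public static string PartitionKey(string eventName, string distance) =>
        $"{eventName}__{distance}";

    // Row that serves the query by bib number; the bib is zero-padded to 5 digits.
    public static string BibRowKey(int bib, string name, string gender, int age) =>
        $"BIB:{bib:D5}__{name}__{gender}__{age}";

    // Row that serves the query by age; the age is zero-padded to 3 digits.
    public static string AgeRowKey(int bib, string name, string gender, int age) =>
        $"AGE:{age:D3}__{bib}__{name}__{gender}";
}
```

Because both rows share the PartitionKey, they can be inserted in one entity group transaction. The "BIB:" and "AGE:" prefixes keep the two kinds of rows in separate, contiguous RowKey ranges, so each dominant query becomes a row range scan over its own prefix.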

Storage operations

Tables in Azure Table storage might encounter load not only from queries. They also might encounter load from storage operations like inserts, updates, and deletes. Consider what type of storage operations you will perform on the table and at what rate. If you perform these operations infrequently, you might not need to worry about them. However, for frequent operations like performing many inserts in a short time, you must consider how those operations are served as a result of the PartitionKey values that you choose. Important examples are the append-only and prepend-only patterns. The append-only and prepend-only patterns are discussed in Range partitions.

When you use an append-only or prepend-only pattern, you use unique ascending or descending values for the PartitionKey on subsequent insertions. If you combine this pattern with frequent insert operations, your table won't be able to service the insert operations with great scalability. The scalability of your table is affected because Azure can't load-balance the operation requests to other partition servers. In that case, you might want to consider using values that are random, such as GUID values. Then, your partition sizes can remain small and still maintain load balancing during storage operations.
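As a small illustration of the GUID suggestion, the sketch below generates a non-sequential PartitionKey. The `ScatteredKeys` name is hypothetical, and the choice of the compact "N" format (32 hexadecimal digits without hyphens) is only a preference.

```csharp
using System;

public static class ScatteredKeys
{
    // Returns a random, non-sequential PartitionKey so that successive
    // inserts do not all target the first or last range partition.
    public static string NewRandomPartitionKey() => Guid.NewGuid().ToString("N");
}
```

The trade-off is that random keys carry no meaning, so the application has to store or derive the key elsewhere if it later needs to read the entity with a point query.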

Table partition stress testing

When the PartitionKey value is complex or requires comparisons to other PartitionKey mappings, you might need to test the table's performance. The test should examine how well a partition performs under peak loads.

To perform a stress test:

1. Create a test table.
2. Load the test table with data so that it contains entities that have the PartitionKey value that you'll target.
3. Use the application to simulate peak load to the table. Target a single partition by using the PartitionKey value from step 2. This step is different for every application, but the simulation should include all the required queries and storage operations. You might need to adjust the application so that it targets a single partition.
4. Examine the throughput of the GET or PUT operations on the table.

To examine the throughput, compare the actual values to the specified limit of a single partition on a single server. Partitions are limited to 2000 entities per second. If the throughput exceeds 2000 entities per second for a partition, the server might run too hot in a production setting. In this case, the PartitionKey values might be too coarse, so that there aren't enough partitions or the partitions are too large. You might need to modify the PartitionKey value so that the partitions will be distributed among more servers.
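The comparison described above is simple arithmetic, sketched here under the assumption that your test harness records how many entities were served from the target partition and how long the run took. The `PartitionThroughput` class is a hypothetical helper, not part of any Azure tooling.

```csharp
using System;

public static class PartitionThroughput
{
    // Per-partition scalability target cited in this article.
    public const double TargetEntitiesPerSecond = 2000;

    // Average entities served per second over the measured interval.
    public static double EntitiesPerSecond(long entityCount, TimeSpan elapsed)
    {
        if (elapsed <= TimeSpan.Zero)
            throw new ArgumentOutOfRangeException(nameof(elapsed));
        return entityCount / elapsed.TotalSeconds;
    }

    // True when the measured rate is above the per-partition target.
    public static bool ExceedsTarget(long entityCount, TimeSpan elapsed) =>
        EntitiesPerSecond(entityCount, elapsed) > TargetEntitiesPerSecond;
}
```

For example, 150,000 entities served in 60 seconds is 2,500 entities per second, which exceeds the target and suggests that the PartitionKey is too coarse. An average can hide short bursts, so it may be worth measuring over several shorter windows as well.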

Load balancing

Load balancing at the partition layer occurs when a partition gets too hot. When a partition is too hot, the partition, specifically the partition server, operates beyond its target scalability. For Azure storage, each partition has a scalability target of 2000 entities per second. Load balancing also occurs at the Distributed File System (DFS) layer.

The load balancing at the DFS layer deals with I/O load and is outside the scope of this article. Load balancing at the partition layer doesn't immediately occur after the scalability target is exceeded. Instead, the system waits a few minutes before beginning the load-balancing process. This ensures that a partition has truly become hot. It isn't necessary to prime partitions with generated load that triggers load balancing because the system automatically performs the task.

Even if you prime a table with a certain load, the system balances the partitions based on actual load, which might result in a significantly different distribution of the partitions. Instead of priming partitions, consider writing code that handles the Timeout and Server Busy errors. The errors are returned when the system is load balancing. By handling those errors by using a retry strategy, your application can better handle peak loads. Retry strategies are discussed in more detail in the following section.

When load balancing occurs, the partition goes offline for a few seconds. During the offline period, the system reassigns the partition to a different partition server. It's important to note that your data isn't stored by the partition servers. Instead, the partition servers serve entities from the DFS layer. Because your data isn't stored at the partition layer, moving partitions to different servers is a fast process. This flexibility greatly limits the downtime, if any, that your application might encounter.

Retry strategy

It's important for your application to handle storage operation failures to help ensure that you don't lose any data updates. Some failures don't require a retry strategy. For example, updates that return a 401 Unauthorized error don't benefit from retrying the operation because the application state is unlikely to change between retries in a way that resolves the 401 error. However, errors like Server Busy or Timeout are related to the load-balancing features of Azure that provide table scalability. When the storage nodes serving your entities become hot, Azure balances the load by moving partitions to other nodes. During this time, the partition might be inaccessible, which results in Server Busy or Timeout errors. Eventually, the partition is reenabled and updates resume.

A retry strategy is appropriate for Server Busy or Timeout errors. In most cases, you can exclude 400-level errors and some 500-level errors from the retry logic. The 500-level errors that can be excluded include 501 Not Implemented and 505 HTTP Version Not Supported. You can then implement a retry strategy for the remaining 500-level errors, such as Server Busy (503) and Timeout (504).
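A minimal sketch of that classification follows. The `RetryClassifier` name is hypothetical, and treating every 500-level code other than 501 and 505 as retryable is an assumption made for the sketch; your application might need a narrower list.

```csharp
public static class RetryClassifier
{
    // Returns true for HTTP status codes that this sketch treats as transient.
    public static bool IsRetryable(int statusCode)
    {
        // 400-level errors (for example, 401 Unauthorized) are not retried.
        if (statusCode >= 400 && statusCode < 500)
            return false;

        // 501 Not Implemented and 505 HTTP Version Not Supported are excluded.
        if (statusCode == 501 || statusCode == 505)
            return false;

        // Assumption: all other 500-level errors, such as 503 and 504, are retried.
        return statusCode >= 500 && statusCode < 600;
    }
}
```

Keeping the decision in one place makes it easier to adjust the list of retryable codes without touching the retry loop itself.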

You can choose from three common retry strategies for your application:

- No Retry: No retry attempt is made.

- Fixed Backoff: The operation is retried N times with a constant backoff value.

- Exponential Backoff: The operation is retried N times with an exponential backoff value.

The No Retry strategy is a simple (and evasive) way to handle operation failures. However, a No Retry strategy isn't very useful. Not imposing any retry attempts poses obvious risks with data not being stored correctly after failed operations. A better strategy is to use the Fixed Backoff strategy, which provides the ability to retry operations with the same backoff duration.

However, the strategy isn't optimized for handling highly scalable tables. If many threads or processes are waiting for the same duration, collisions can occur. A recommended retry strategy is one that uses an exponential backoff where each retry attempt is longer than the last attempt. It's similar to the collision avoidance algorithm used in computer networks, such as Ethernet. The exponential backoff uses a random factor to provide an additional variance to the resulting interval. The backoff value is then constrained to minimum and maximum limits. The following formula can be used for calculating the next backoff value using an exponential algorithm:

$$y = \mathrm{Rand}(0.8z,\ 1.2z)\,\left(2^{x} - 1\right)$$

$$y = \mathrm{Min}(z_{\min} + y,\ z_{\max})$$

Where:

- $z$ = default backoff in milliseconds
- $z_{\min}$ = default minimum backoff in milliseconds
- $z_{\max}$ = default maximum backoff in milliseconds
- $x$ = the number of retries
- $y$ = the backoff value in milliseconds

The 0.8 and 1.2 multipliers used in the Rand (random) function produce a random variance of the default backoff within ±20% of the original value. The ±20% range is acceptable for most retry strategies, and it prevents further collisions. The formula can be implemented by using the following code:

```csharp
using System;

int retries = 1;

// Initialize variables with default values
var defaultBackoff = TimeSpan.FromSeconds(30);
var backoffMin = TimeSpan.FromSeconds(3);
var backoffMax = TimeSpan.FromSeconds(90);

var random = new Random();

// Rand(0.8z, 1.2z): the default backoff with a random variance of ±20%
double backoff = random.Next(
    (int)(0.8D * defaultBackoff.TotalMilliseconds),
    (int)(1.2D * defaultBackoff.TotalMilliseconds));

// Scale by (2^x - 1), where x is the number of retries
backoff *= (Math.Pow(2, retries) - 1);

// Constrain the result to the minimum and maximum limits
backoff = Math.Min(
    backoffMin.TotalMilliseconds + backoff,
    backoffMax.TotalMilliseconds);
```
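To show how the backoff calculation might fit into a retry loop, here is a hedged sketch. The `ExponentialRetry` class, the `Func<bool>` operation shape (returning true on success), and the `maxRetries` parameter are all assumptions for illustration. This is not the retry policy that the Azure Storage Client Library implements.

```csharp
using System;
using System.Threading;

public static class ExponentialRetry
{
    private static readonly Random Rng = new Random();
    private static readonly object Gate = new object();

    // Computes Min(zMin + Rand(0.8z, 1.2z) * (2^x - 1), zMax) in milliseconds,
    // where x is the number of retries and z is the default backoff.
    public static double NextBackoffMs(int retries, double z, double zMin, double zMax)
    {
        double u;
        lock (Gate) { u = Rng.NextDouble(); } // Random is not thread-safe
        double jittered = z * (0.8 + 0.4 * u);
        return Math.Min(zMin + jittered * (Math.Pow(2, retries) - 1), zMax);
    }

    // Runs the operation until it reports success or maxRetries retries
    // have been used. Returns true if the operation eventually succeeded.
    public static bool Run(Func<bool> operation, int maxRetries, double z, double zMin, double zMax)
    {
        for (int retries = 0; ; retries++)
        {
            if (operation()) return true;
            if (retries >= maxRetries) return false;

            double delayMs = NextBackoffMs(retries + 1, z, zMin, zMax);
            Thread.Sleep(TimeSpan.FromMilliseconds(delayMs));
        }
    }
}
```

With the defaults from the preceding code (a 30-second default backoff, a 3-second minimum, and a 90-second maximum), the first retry waits between 27 and 39 seconds, and later retries reach the 90-second cap. In a real application, the operation would typically retry only on transient errors such as Server Busy or Timeout, and an asynchronous delay might be preferable to blocking the thread.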

Summary

An application in Azure Table storage can store a massive amount of data because Table storage manages and reassigns partitions across many storage nodes. You can use data partitioning to control the table’s scalability. Plan ahead when you define a table schema to ensure that you implement efficient partitioning strategies. Specifically, analyze the application’s requirements, data, and queries before you select PartitionKey values. Each partition might be reassigned to different storage nodes as the system responds to traffic. Use a partition stress test to ensure that the table has the correct PartitionKey values. This test helps you determine when partitions are too hot and helps you make the necessary partition adjustments.

To ensure that your application handles intermittent errors and that your data is persisted, use a retry strategy with backoff. The default retry policy that the Azure Storage Client Library uses has an exponential backoff that avoids collisions and maximizes the throughput of your application.