Azure Storage Bindings Part 3 – Tables

This blog post was written during the early previews of the SDK. Some of the features/APIs have changed. For the latest documentation on the WebJobs SDK, please see this link: https://azure.microsoft.com/en-us/documentation/articles/websites-webjobs-resources

The dictionary bindings were removed from the core product and are being moved into an extension.

====

I previously described how the Azure WebJobs SDK can bind to Blobs and Queues. This entry describes binding to Tables.

You can use a [Table] attribute from the Microsoft.WindowsAzure.Jobs namespace in the Jobs.Host NuGet package. Functions are not triggered on table changes. However, once a function is called for some other reason, it can bind to a table as a read/write resource for doing its task.

As background, here’s a good tutorial about Azure Tables and using the v2.x+ Azure Storage SDK for tables.

The WebJobs SDK currently supports binding a table to an IDictionary, where:

- The dictionary key is a Tuple<string,string> representing the partition key and row key.

- The dictionary value is a user POCO type whose properties map to the table properties to be read. Note that the type does not need to derive from TableServiceEntity or any other base class.

- Your POCO type’s properties can be strongly typed (not just string), including enum properties and any type with a TryParse() method.

- The binding is read/write.
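The post doesn't say how the binding turns stored property values into typed POCO properties. The sketch below illustrates the "enums or any type with a TryParse() method" rule, assuming each table property is available as a string. PocoMapper, Map and ConvertValue are hypothetical names, not WebJobs SDK APIs, and the SDK's own conversion may differ (for example, a later comment mentions TypeConverters, which this sketch doesn't handle).

```csharp
using System;
using System.Collections.Generic;
using System.Reflection;

// Hypothetical helper, NOT part of the WebJobs SDK: fills a POCO from
// string-valued table properties. Enums use Enum.Parse; other types need a
// public static bool TryParse(string, out T) method.
public static class PocoMapper
{
    public static T Map<T>(IDictionary<string, string> properties) where T : class, new()
    {
        var result = new T();
        foreach (PropertyInfo prop in typeof(T).GetProperties(BindingFlags.Public | BindingFlags.Instance))
        {
            string raw;
            // Skip read-only properties, indexers, and properties with no matching column.
            if (!prop.CanWrite || prop.GetIndexParameters().Length > 0 ||
                !properties.TryGetValue(prop.Name, out raw))
                continue;
            prop.SetValue(result, ConvertValue(prop.PropertyType, raw));
        }
        return result;
    }

    public static object ConvertValue(Type type, string raw)
    {
        if (type == typeof(string))
            return raw;
        if (type.IsEnum)
            return Enum.Parse(type, raw, true); // case-insensitive enum names

        // Look up TryParse(string, out T) by exact signature.
        MethodInfo tryParse = type.GetMethod(
            "TryParse",
            BindingFlags.Public | BindingFlags.Static,
            null,
            new[] { typeof(string), type.MakeByRefType() },
            null);
        if (tryParse == null || tryParse.ReturnType != typeof(bool))
            throw new NotSupportedException("No TryParse(string, out " + type.Name + ") on " + type.Name);

        var args = new object[] { raw, null };
        if (!(bool)tryParse.Invoke(null, args))
            throw new FormatException("Cannot parse '" + raw + "' as " + type.Name);
        return args[1]; // reflection writes the out parameter back into the array
    }
}
```

With the OtherStuff type from the next example, a row whose Fruit column is "Banana" and whose Duration column is "00:05:00" would map to Fruit.Banana and a five-minute TimeSpan under this sketch.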

For example, here’s a declaration that binds the ‘dict’ parameter to an Azure Table. The table is treated as a homogeneous table where each row has properties Fruit, Duration, and Value.

    public static void TableDict([Table("mytable")] IDictionary<Tuple<string, string>, OtherStuff> dict) {}

    public class OtherStuff
    {
        public Fruit Fruit { get; set; }
        public TimeSpan Duration { get; set; }
        public string Value { get; set; }
    }

    public enum Fruit { Apple, Banana, Pear, }

You can also retrieve the PartitionKey, RowKey, or Timestamp properties by including them as properties on your POCO.

Writing to a table

You can use the dictionary binding to write to a table via the indexer. Here’s an example of ingesting a file (read via some Parse<> function) into an Azure table.

    [NoAutomaticTrigger]
    public static void Ingress(
        [BlobInput(@"table-uploads\key.csv")] Stream inputStream,
        [Table("convert")] IDictionary<Tuple<string, string>, object> table)
    {
        IEnumerable<Payload> rows = Parse<Payload>(inputStream);
        foreach (var row in rows)
        {
            var partRowKey = Tuple.Create("const", row.guidkey.ToString());
            table[partRowKey] = row; // azure table write
        }
    }

In this case, the IDictionary implementation follows Azure Table best practices for writing: it buffers the writes by partition key and flushes the batches for you.

Writes default to Upserts.
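The post doesn't describe how that buffering works internally. The sketch below shows one way to do it, assuming a caller-supplied callback stands in for the actual batch upsert. It relies on two Azure Table batch rules: every entity in a batch must share the same partition key, and a batch may hold at most 100 operations. PartitionWriteBuffer is a hypothetical class, not the SDK's implementation, and it ignores the batch payload size limit.

```csharp
using System;
using System.Collections.Generic;
using System.Linq;

// Hypothetical sketch, NOT the WebJobs SDK implementation: buffers upserts
// per partition key and hands them to a flush callback in batches of at most
// 100 rows, since an Azure Table batch must target a single partition.
public class PartitionWriteBuffer<TValue>
{
    public const int MaxBatchSize = 100;

    // Invoked with (partitionKey, rows); a real version would issue one
    // batch of upsert operations per call.
    private readonly Action<string, IList<KeyValuePair<string, TValue>>> _flushBatch;

    // partitionKey -> (rowKey -> latest value written)
    private readonly Dictionary<string, Dictionary<string, TValue>> _pending =
        new Dictionary<string, Dictionary<string, TValue>>();

    public PartitionWriteBuffer(Action<string, IList<KeyValuePair<string, TValue>>> flushBatch)
    {
        _flushBatch = flushBatch;
    }

    public void Upsert(Tuple<string, string> partRowKey, TValue value)
    {
        Dictionary<string, TValue> rows;
        if (!_pending.TryGetValue(partRowKey.Item1, out rows))
        {
            rows = new Dictionary<string, TValue>();
            _pending[partRowKey.Item1] = rows;
        }
        rows[partRowKey.Item2] = value; // a repeated row key overwrites the buffered value

        if (rows.Count >= MaxBatchSize)
            FlushPartition(partRowKey.Item1);
    }

    // Flushes whatever is left, e.g. when the function returns.
    public void FlushAll()
    {
        foreach (string partitionKey in _pending.Keys.ToList())
            FlushPartition(partitionKey);
    }

    private void FlushPartition(string partitionKey)
    {
        Dictionary<string, TValue> rows = _pending[partitionKey];
        _pending.Remove(partitionKey);
        _flushBatch(partitionKey, rows.ToList());
    }
}
```

Keying the pending rows by row key means a second write to the same entity replaces the first in the buffer. That matches upsert semantics and also keeps the same entity from appearing twice in one batch, which the table service rejects.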

Reading a table entry

You can use the dictionary indexer or TryGetValue to look up a single entity by its partition key and row key.

    public static void TableDict([Table("mytable")] IDictionary<Tuple<string, string>, OtherStuff> dict)
    {
        // Use IDictionary interface to access an azure table.
        var partRowKey = Tuple.Create("PartitionKeyValue", "RowKeyValue");

        OtherStuff val;
        bool found = dict.TryGetValue(partRowKey, out val);

        OtherStuff val2 = dict[partRowKey]; // lookup via indexer

        // another write example
        dict[partRowKey] = new OtherStuff { Value = "fall", Fruit = Fruit.Apple, Duration = TimeSpan.FromMinutes(5) };
    }
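Because a function body like this only depends on IDictionary<,>, one way to exercise it without a storage account is to pass an ordinary in-memory Dictionary<,>. That works because Tuple<string, string> compares by value, so a freshly created tuple with the same partition and row key finds the same entry. The sketch repeats the OtherStuff and Fruit types so it compiles on its own; TableFunctions.ReadThenWrite is a hypothetical name for a body shaped like the example above, with the [Table] attribute left off.

```csharp
using System;
using System.Collections.Generic;

// Repeated from the example above so this sketch compiles on its own.
public enum Fruit { Apple, Banana, Pear }

public class OtherStuff
{
    public Fruit Fruit { get; set; }
    public TimeSpan Duration { get; set; }
    public string Value { get; set; }
}

public static class TableFunctions
{
    // Hypothetical body shaped like TableDict. It only uses IDictionary
    // members, so a plain Dictionary can stand in for the table binding.
    public static bool ReadThenWrite(IDictionary<Tuple<string, string>, OtherStuff> dict)
    {
        var partRowKey = Tuple.Create("PartitionKeyValue", "RowKeyValue");

        // TryGetValue reports a missing entry by returning false.
        OtherStuff existing;
        bool found = dict.TryGetValue(partRowKey, out existing);

        // The indexer setter adds the entry or overwrites an existing one.
        dict[partRowKey] = new OtherStuff
        {
            Value = "fall",
            Fruit = Fruit.Apple,
            Duration = TimeSpan.FromMinutes(5)
        };
        return found;
    }
}
```

Dictionary<,>'s indexer getter throws KeyNotFoundException for a missing key, while TryGetValue returns false. Whether the table adapter's indexer behaves the same way for a missing entity is an assumption worth checking, so TryGetValue is the safer choice when an entity may not exist.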

Enumerating table entries

You can use foreach on the dictionary to enumerate the entries. The IDictionary<> binding enumerates the entire table and doesn’t support enumerating a single partition.

    public static void TableDict([Table("mytable")] IDictionary<Tuple<string, string>, OtherStuff> dict)
    {
        foreach (var kv in dict)
        {
            OtherStuff val = kv.Value;
        }
    }

You can also use LINQ expressions over Azure tables, since LINQ to Objects just builds on foreach.
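Since enumeration always reads the whole table, selecting one partition with LINQ is a client-side filter: every entity is still read, and the Where clause discards the ones outside the partition. A minimal sketch, where PartitionQueries.ValuesInPartition is a hypothetical helper that accepts any IDictionary<Tuple<string, string>, TValue>, including the table binding:

```csharp
using System;
using System.Collections.Generic;
using System.Linq;

public static class PartitionQueries
{
    // Hypothetical helper: filters to one partition in memory. Against the
    // table binding, the underlying foreach still reads every entity.
    public static List<TValue> ValuesInPartition<TValue>(
        IDictionary<Tuple<string, string>, TValue> dict, string partitionKey)
    {
        return dict
            .Where(kv => kv.Key.Item1 == partitionKey)          // Item1 = partition key
            .OrderBy(kv => kv.Key.Item2, StringComparer.Ordinal) // Item2 = row key
            .Select(kv => kv.Value)
            .ToList();
    }
}
```

Ordering by row key just makes the result deterministic. For large tables, binding to CloudStorageAccount and querying the partition through the storage SDK (see Other notes below) avoids reading the whole table.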

Here’s an example of a basic RssAggregator that gets the blog roll from an Azure Table and then writes out a combined RSS feed via [BlobOutput]. The whole sample is available on GitHub, but the interesting code is:

    // RSS reader.
    // Aggregates to: https://<mystorage>.blob.core.windows.net/blog/output.rss.xml
    // Get blog roll from a table.
    public static void AggregateRss(
        [Table("blogroll")] IDictionary<Tuple<string, string>, BlogRollEntry> blogroll,
        [BlobOutput(@"blog/output.rss.xml")] out SyndicationFeed output)
    {
        // get blog roll from an azure table
        var urls = (from kv in blogroll select kv.Value.url).ToArray();

        List<SyndicationItem> items = new List<SyndicationItem>();
        foreach (string url in urls)
        {
            // dispose each reader once its feed has been loaded
            using (var reader = new XmlTextReader(url))
            {
                var feed = SyndicationFeed.Load(reader);
                items.AddRange(feed.Items.Take(5));
            }
        }
        var sorted = items.OrderBy(item => item.PublishDate);
        output = new SyndicationFeed("Status", "Status from SimpleBatch", null, sorted);
    }

BlogRollEntry is just a POCO, with no mandatory base class.

    // Format for blog roll in the azure table
    public class BlogRollEntry
    {
        public string url { get; set; }
    }

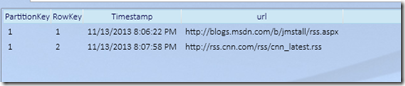

Here are the contents of the Azure table, so you can see how the POCO maps to the table properties of interest.

Removing from a table

You can use IDictionary.Remove() to remove a single entity from the table.

    public static void TableDict([Table(TableNameDict)] IDictionary<Tuple<string, string>, OtherStuff> dict)
    {
        var partRowKey = Tuple.Create("PartitionKeyValue", "RowKeyValue");

        // Remove a single entity
        dict.Remove(partRowKey);
    }

You can use IDictionary.Clear() to clear an entire table.

Summary

Here’s a summary of which IDictionary operations map to table operations.

Assume dict is a dictionary table mapping, and partRowKey is a tuple as used above.

| Operation | Code snippet |
|---|---|
| Read single entity | value = dict[partRowKey] |
| | dict.TryGetValue(partRowKey, out val) |
| Contains a key | bool found = dict.ContainsKey(partRowKey) |
| Write single entity | dict[partRowKey] = value |
| | dict.Add(partRowKey, value) |
| Enumerate entire table | foreach (var kv in dict) { } |
| Remove a single entity | dict.Remove(partRowKey); |
| Clear all entities | dict.Clear() |

Other notes

- This binding is obviously limited. You can always bind directly to CloudStorageAccount and use the SDK directly if you need more control.

- The dictionary adapter does not implement all members of IDictionary<>. For example, in the Alpha 1 release, CopyTo, Contains, Keys, Values, and others aren’t implemented.

- We’re looking at more Table bindings in the next update (such as binding directly to CloudTable).

- You can see more examples of table usage on the samples site.

Comments

Anonymous

March 31, 2014

In the summary the code snippet for "Write single entity" should be dict[partRowKey] = value instead of value = dict[partRowKey]

Anonymous

April 01, 2014

@HuHa - thanks, good catch, I made the update.

Anonymous

April 13, 2014

This binding writes all properties of the object to string values in the table. If I want strongly typed properties such as DateTime and int, should I be using the Azure SDK, or is this something that you are looking into?

Anonymous

April 13, 2014

You say: “You can always bind directly to CloudStorageAccount and use the SDK directly if you need more control.” How do I bind to CloudStorageAccount?

Anonymous

April 17, 2014

@Askew - re strong typing - we're looking at strong typing in azure table storage too. Although I'd guess the need here is mitigated since it goes over the wire as a string, and the WebJobs SDK will correctly parse into client types. In fact, the WebJobs SDK allows more flexibility by supporting any type with TryParse or TypeConverters (especially enums), rather than just a limited set of EDM types. re CloudStorageAccount: to bind directly to the SDK, you can just include CloudStorageAccount in your signature. See the bottom of blogs.msdn.com/.../azure-storage-bindings-part-1-blobs.aspx for an example.