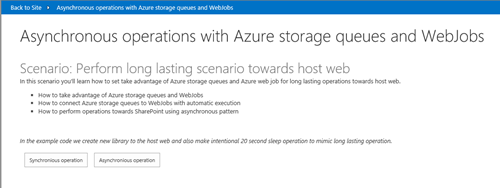

Using Azure storage queues and WebJobs for async actions in Office 365

One of the great capabilities in Azure is WebJobs, which make scheduled actions extremely easy to implement. In the context of Office 365, we quite often use them to provide remote timer job style operations. This means that you have a scheduled job, which is executed, for example, once a day to check the compliance of sites against company policy. Typical use cases for scheduled remote timer jobs include the following.

- Site metadata collection – for example reports on current administrators, storage usage, etc.

- Document governance – for example typical information management policy implementations

- Archive documents based on their status as a scheduled task

- Collect information based on user profile or update additional attributes to them from on-premises or from Azure AD

- Replicate data across site collections for miscellaneous usage

- Collect information on site usage across the tenant or deployment

- Replicate taxonomy structure changes from on-premises master metadata management system to Office 365

Tobias Zimmergren blogged a nice post a few weeks back showing the different options and how you can get started with scheduled tasks.

The PnP team is also just about to release a new Remote Timer Job framework as part of the Office 365 Developer Patterns and Practices guidance (PnP) with advanced capabilities, like multi-threading. There will be separate blog posts on this one, but if you are interested, you can already find the details in the dev branch of PnP.

An alternative route for WebJobs is to run them continuously, so that they handle incoming requests from a message queue. This can be achieved with only a few lines of code and is an extremely powerful technique for providing asynchronous operations started by the end user. Compared to classic server-side timer jobs, this equals the model where you use the SPOneTimeSchedule class to schedule your timer job based on end user input.

We actually used this model in the solution for automated OneDrive for Business customizations, introduced in older blog posts, but since we did not fully explain the potential of the pattern, we wanted to provide some additional details.

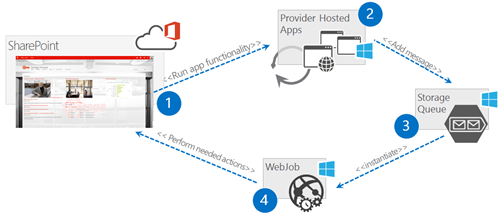

Logical implementation for asynchronous operations

In practice we are talking about connecting WebJob execution to queue processing, meaning that our WebJob is triggered whenever a new message is added to the queue, not as a scheduled task. Since the actual WebJob processing happens outside the web request that queued the message, we can use this queue model to process the needed operations asynchronously also in Office 365. You can hook your WebJob to either Azure storage queues or Azure Service Bus queues. In this blog post we concentrate on the storage queue based implementation, but the model is pretty much identical with Service Bus based processing.

Let’s have a closer look at this from the logical implementation perspective. Here are the key elements of the asynchronous processing pattern.

- The user operates in SharePoint and starts the provider hosted app UI one way or another (full page, pop up, app part, etc.)

- Actual operations are performed on the provider hosted app side, which collects the needed parameters and other settings for the processing

- The operation request is stored in an Azure storage queue or Service Bus queue for processing

- The task is picked up automatically, with the needed details, by the continuously running WebJob, and the requested operation is applied

Notice that the operation could target not only Office 365, but also any other system. You could pretty easily also feed an on-premises LOB system based on requests from Azure (blog post coming soon).

Typical use cases for long-lasting asynchronous operations include the following.

- Complex configurations installed from the app to host web

- Complex App Installed event operations, which are subject to a 30-second timeout

- Self service operations for the end users, like site collection provisioning for cloud or for on-premises with service bus usage

- Route entries or information from Office 365 to on-premises systems

- Start complex usage calculations or other long-lasting business logic across the tenant

A few new blog posts will be released soon on some of the topics mentioned above, showing how to achieve them in practice with Office 365 and Microsoft Azure.

Configuration and key code for processing

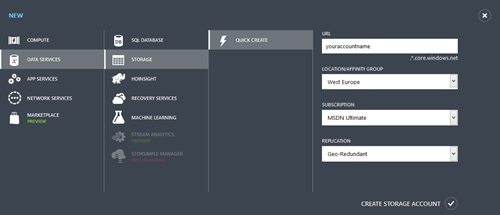

Create an Azure Storage account

Before you can make this sample work, you will need a storage account in your Azure subscription. You can create one by logging in to the Azure management portal and creating a new storage account using the creation wizard.

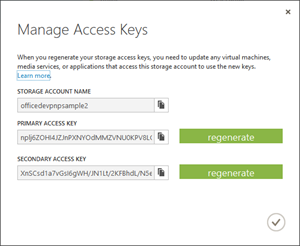

Configure your application to access storage

Multiple projects in the solution access this storage account, so we need to copy the account name and key for reuse. After the storage account has been created, you can get this information by clicking the Manage Access Keys button for the storage account. This shows the details needed for the solution configuration.

The actual configuration depends on the individual project, but as a rule of thumb, you will need to update the storage connection string in app.config or web.config based on your Azure storage details.

Add item to storage queue

Adding new items to the queue is really easy and does not require many lines of code. In our reference implementation we have created a data entity called SiteModifyRequest, which contains the needed information for site changes.

    public class SiteModifyRequest
    {
        public string SiteUrl { get; set; }
        public string RequestorName { get; set; }
    }

This object is then serialized to the queue using the following lines of code. Notice that the code also creates the storage queue if it does not exist. Notice also that we use a constant called SiteManager.StorageQueueName to define the storage queue name, and we will reference the same constant on the WebJob side to avoid any typos in the queue name.

    // Requires: using Microsoft.WindowsAzure.Storage;
    //           using Microsoft.WindowsAzure.Storage.Queue;
    //           using Newtonsoft.Json;

    /// <summary>
    /// Used to add new message to storage queue for processing
    /// </summary>
    /// <param name="modifyRequest">Request object with needed details</param>
    /// <param name="storageConnectionString">Storage connection string</param>
    public void AddAsyncOperationRequestToQueue(SiteModifyRequest modifyRequest,
                                                string storageConnectionString)
    {
        CloudStorageAccount storageAccount =
            CloudStorageAccount.Parse(storageConnectionString);

        // Get queue... create if does not exist.
        CloudQueueClient queueClient = storageAccount.CreateCloudQueueClient();
        CloudQueue queue = queueClient.GetQueueReference(SiteManager.StorageQueueName);
        queue.CreateIfNotExists();

        // Add entry to queue
        queue.AddMessage(new CloudQueueMessage(JsonConvert.SerializeObject(modifyRequest)));
    }
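
One thing to watch when creating the queue: Azure storage queue names must be 3 to 63 characters long, may contain only lowercase letters, digits and hyphens, must start and end with a letter or digit, and must not contain consecutive hyphens. As a minimal sketch, a check like the following could be run against the queue name before calling CreateIfNotExists. The QueueNameValidator class is a hypothetical helper written for this illustration; it is not part of the reference implementation or the storage SDK.

```csharp
using System.Text.RegularExpressions;

// Hypothetical helper, not part of the reference implementation or the storage SDK.
public static class QueueNameValidator
{
    // Lowercase letters and digits, optionally separated by single hyphens.
    private static readonly Regex NamePattern =
        new Regex(@"^[a-z0-9]+(-[a-z0-9]+)*\z", RegexOptions.CultureInvariant);

    // Returns true when the name satisfies the storage queue naming rules:
    // 3-63 characters, lowercase alphanumerics, no leading, trailing or double hyphens.
    public static bool IsValid(string queueName)
    {
        return queueName != null
            && queueName.Length >= 3
            && queueName.Length <= 63
            && NamePattern.IsMatch(queueName);
    }
}
```

For example, QueueNameValidator.IsValid("site-modify-requests") passes, while "SiteModifyRequests" is rejected because of the uppercase letters.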

Processing items from the queue from WebJob side

Hooking up a WebJob to new storage queue messages is really simple and does not require much code. Our WebJob has to be set up to execute continuously, which we can implement as follows in our Main method. The Azure SDK 2.5 templates have this code in place by default.

    // Please set the following connection strings in app.config for this WebJob to run:
    // AzureWebJobsDashboard and AzureWebJobsStorage
    static void Main()
    {
        var host = new JobHost();
        // The following code ensures that the WebJob will be running continuously
        host.RunAndBlock();
    }

The actual connection to the Azure storage queue is then a combination of the correct connection string configuration in app.config and the proper signature for our method or function. In app.config we need to ensure that the AzureWebJobsStorage connection string points to the right storage account. Notice that you definitely also want to ensure that the AzureWebJobsDashboard connection string has a valid entry, so that your logging will work properly.

    <connectionStrings>
      <!-- The format of the connection string is "DefaultEndpointsProtocol=https;AccountName=NAME;AccountKey=KEY" -->
      <!-- For local execution, the value can be set either in this config file or through environment variables -->
      <add name="AzureWebJobsDashboard" connectionString="DefaultEndpointsProtocol=https;AccountName=[YourAccount];AccountKey=[YourKey]" />
      <add name="AzureWebJobsStorage" connectionString="DefaultEndpointsProtocol=https;AccountName=[YourAccount];AccountKey=[YourKey]" />
    </connectionStrings>

On the code side we only need to give our method the right signature and decorate its parameter with the right attribute, and our method is then called automatically. Here’s the code from our reference implementation. Notice that we use the same SiteManager.StorageQueueName constant as the queue name to link the WebJob.

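    using System.IO;
    using Microsoft.Azure.WebJobs;
    // Assumed namespace of the Common project for SiteManager and SiteModifyRequest
    using Core.QueueWebJobUsage.Common;

    namespace Core.QueueWebJobUsage.Job
    {
        public class Functions
        {
            // This function is triggered when a new message is written
            // to the storage queue named by SiteManager.StorageQueueName.
            public static void ProcessQueueMessage(
                [QueueTrigger(SiteManager.StorageQueueName)]
                SiteModifyRequest modifyRequest, TextWriter log)
            {
                // ... business logic for the received request goes here
            }
        }
    }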

That’s it. At this point our WebJob will be automatically called whenever there is a new message added to the storage queue and we can start working on the actual business logic code for what should happen when that message is received.
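
The typed SiteModifyRequest parameter works because the queue message body is plain JSON text: the sender serializes the object with Json.NET, and the WebJobs SDK deserializes the message back into the parameter type before calling the function. The following is a minimal sketch of that round trip outside Azure, assuming the Newtonsoft.Json NuGet package is referenced. The QueueMessageJson helper is hypothetical and exists only for this illustration, and SiteModifyRequest is repeated here to keep the example self-contained.

```csharp
using Newtonsoft.Json;

// Copy of the entity used in this post, repeated to keep the sketch self-contained.
public class SiteModifyRequest
{
    public string SiteUrl { get; set; }
    public string RequestorName { get; set; }
}

// Hypothetical helper illustrating what travels through the queue.
public static class QueueMessageJson
{
    // What the sender puts into the CloudQueueMessage.
    public static string ToMessageBody(SiteModifyRequest request)
    {
        return JsonConvert.SerializeObject(request);
    }

    // Roughly what happens on the receiving side before the function is invoked.
    public static SiteModifyRequest FromMessageBody(string body)
    {
        return JsonConvert.DeserializeObject<SiteModifyRequest>(body);
    }
}
```

Any property added to SiteModifyRequest travels with the message in the same way, as long as the sender and the WebJob share the same class definition, which is why the entity lives in a common project.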

Additional details on reference implementation

Let’s look more closely at our reference implementation, which shows this process in practice.

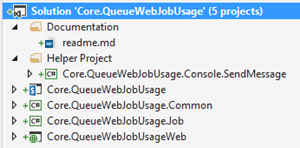

Visual Studio solution details

Here’s the actual Visual Studio solution structure with a project-by-project description and configuration details, where needed.

Core.QueueWebJobUsage.Console.SendMessage

This is a helper project, which can be used to test the storage queue behavior and send messages to the queue for processing without the need for user interface operations. Its purpose is simply to ensure that the storage account information and queue creation work as expected.

The app.config file contains the StorageConnectionString key, which should be updated to match the storage account of the Azure environment used.

    <appSettings>
      <add key="StorageConnectionString"
           value="DefaultEndpointsProtocol=https;AccountName=[account];AccountKey=[key]" />
    </appSettings>

Core.QueueWebJobUsage

This is the actual SharePoint app project, which is used to introduce the app to SharePoint and also contains the requested permissions needed by the provider hosted app. In this case we are requesting the following permissions, which are needed for the synchronous operation demonstration on the reference provider hosted code side. Technically these are not needed for the WebJob based implementation, since you could request or register the needed permissions directly by using the appinv.aspx page, as explained for example in this great blog post from Kirk Evans on remote timer jobs.

- Allow the app to make app-only calls to SharePoint

- FullControl permission to Web

Core.QueueWebJobUsage.Common

This is the business logic and entity project, so that we can use the needed code from numerous projects. It also contains the data object or entity for message serialization. Notice that the business logic (Core.QueueWebJobUsage.Common.SiteManager::PerformSiteModification()) has an intentional 20 second sleep for the thread to demonstrate a long-lasting operation.

Core.QueueWebJobUsage.Job

This is the actual Azure WebJob, created using the WebJob template which was introduced by the Azure SDK for .NET 2.5. All the actual business logic is located in the Common component, but the logic to hook up the queues and the initial creation of the app-only client context are located in this project.

You will need to update the right App ID and App secret in the app.config for this one as follows.

    <appSettings>
      <add key="ClientId" value="[your client id]" />
      <add key="ClientSecret" value="[your client secret]" />
    </appSettings>

You will also need to update the connection strings to match your storage account.

    <connectionStrings>
      <!-- The format of the connection string is "DefaultEndpointsProtocol=https;AccountName=NAME;AccountKey=KEY" -->
      <!-- For local execution, the value can be set either in this config file or through environment variables -->
      <add name="AzureWebJobsDashboard" connectionString="DefaultEndpointsProtocol=https;AccountName=[YourAccount];AccountKey=[YourKey]" />
      <add name="AzureWebJobsStorage" connectionString="DefaultEndpointsProtocol=https;AccountName=[YourAccount];AccountKey=[YourKey]" />
    </connectionStrings>

Core.QueueWebJobUsageWeb

This is the user interface for the provider hosted app. The only thing you need to configure is the storage connection string based on your environment. App ID and secret information is managed automatically by Visual Studio when you deploy the solution for debugging with F5, but obviously those would need to be properly configured for actual deployment.

Notice also that you need to update the storage account information accordingly, so that the UI can add requests to the queue.

    <appSettings>
      <add key="ClientId" value="[your client id]" />
      <add key="ClientSecret" value="[your client secret]" />
      <add key="StorageConnectionString" value="DefaultEndpointsProtocol=https;AccountName=[YourAccountName];AccountKey=[YourAccountKey]" />
    </appSettings>

This is a typical Office 365 Developer Patterns and Practices sample, which concentrates on demonstrating the pattern or functional model and nothing else. This way you can easily learn or adapt just the key functionality without any additional distractions.

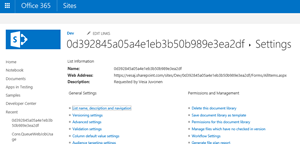

This app has two buttons, one for the synchronous and one for the asynchronous operation. Both buttons create a new document library in the host web, but the asynchronous operation takes advantage of the Azure WebJob based execution. Here’s an example of a library created by using this code. Notice that the description for the library is also dynamic and the requestor name is added there for demonstration purposes. This was done just to show how to pass complex data types through storage queues.

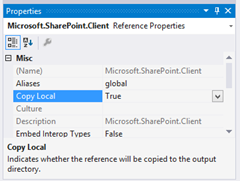

Deployment of WebJob to Azure

Before you deploy the WebJob to Azure, make sure that the Copy Local property is set to True for those assemblies which are not natively available on the Azure side. This includes all SharePoint CSOM assemblies, so check the property for the Microsoft.SharePoint.Client and Microsoft.SharePoint.Client.Runtime assemblies. This ensures that the referenced assemblies are copied to Azure and the references resolve without any issues.

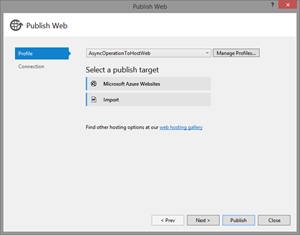

If you have the Azure SDK 2.5 or a newer version installed for Visual Studio, you can deploy the WebJob to Azure directly from the solution by right-clicking the WebJob project and selecting “Publish as Azure WebJob…”. This starts a wizard, where you can also create a new Azure Web Site to host your WebJob, if needed.

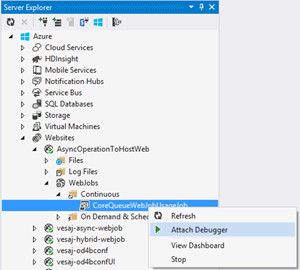

After the deployment has completed, you can also see your WebJob status in the Server Explorer, which is the easiest way to control your WebJob actions and access additional settings.

Configuration of the WebJob in Azure

Since Visual Studio changes the app ID and secret for numerous reasons during development, you should register an app ID and secret in your tenant specifically for the WebJob and then configure the WebJob to use them in the Azure Management Portal.

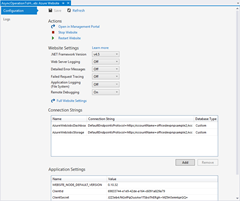

You can access the app settings and connection string information from the Configure tab of the Azure Web Site which is hosting the WebJob.

Alternatively, you can always right-click the Azure Web Site in the Server Explorer and choose View Settings to manage the current configuration. This shows a subset of the settings, including the connection string and application settings information.

Here’s the settings view directly in Visual Studio using Visual Studio 2013 and Azure SDK 2.5.
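
App settings configured for the Azure Web Site are also exposed to the processes it hosts as environment variables prefixed with APPSETTING_. As a minimal sketch, the WebJob could prefer such a value and fall back to whatever the local configuration file provides. The SettingReader class below is a hypothetical helper for illustration only; the reference implementation may read its settings differently.

```csharp
using System;

// Hypothetical helper, not part of the reference implementation.
public static class SettingReader
{
    // Returns the value configured in Azure (APPSETTING_<name>) when present,
    // otherwise the fallback value supplied by the caller.
    public static string Get(string name, string fallback)
    {
        string fromAzure = Environment.GetEnvironmentVariable("APPSETTING_" + name);
        return string.IsNullOrEmpty(fromAzure) ? fallback : fromAzure;
    }
}
```

A call such as SettingReader.Get("ClientId", ConfigurationManager.AppSettings["ClientId"]) would then use the portal value when the WebJob runs in Azure and the app.config value when it runs locally.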

Debugging WebJob in Azure

One of the really great things about WebJobs with the latest SDKs is the capability to debug the WebJob process directly on the Azure side. You can start debugging by right-clicking the WebJob in the Server Explorer and selecting “Attach debugger”.

By doing this, you can debug the code running in Azure and step through it. You can, for example, use the Core.QueueWebJobUsage.Console.SendMessage project to send an item to the queue and then step through the code line by line.

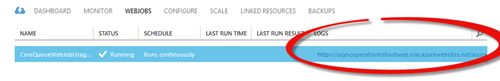

Logging from WebJob in Azure

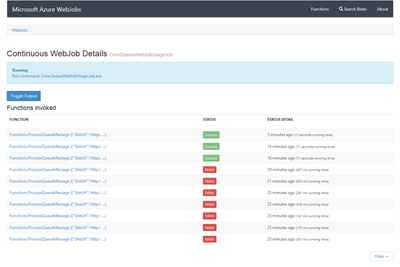

After the WebJobs have been deployed to Azure, and as long as you have the right configuration for them, you will see a really detailed level of logging on each execution and you can even “replay” the executions later, if needed. You can access the logs from the WebJobs tab of the Azure Web Site.

You can see all the details of each execution and then dig into them, for example in case of exceptions. This is obviously highly helpful when there are issues in the code… not that we would ever make any mistakes there.

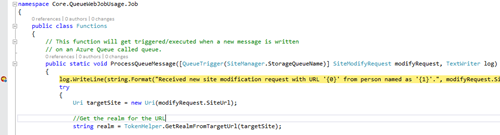

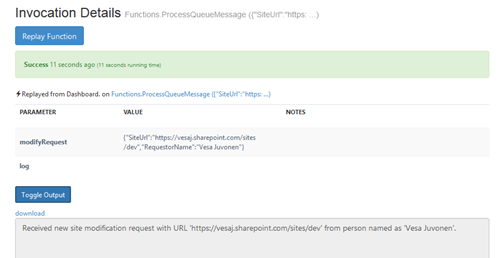

You can also add additional messages from your code to the log. This is done by using the log variable, which is provided as a parameter to the method which will be called when new items are added to the storage queue. Here’s an example from the reference code where we output the details of the request on the first line.

    // This function is triggered when a new message is written
    // to the storage queue named by SiteManager.StorageQueueName.
    public static void ProcessQueueMessage(
        [QueueTrigger(SiteManager.StorageQueueName)]
        SiteModifyRequest modifyRequest, TextWriter log)
    {
        log.WriteLine(string.Format("{0} '{1}' {2} '{3}'.",
            "Received new site modification request with URL",
            modifyRequest.SiteUrl,
            "from person named as",
            modifyRequest.RequestorName));

        // ... actual processing of the request follows
    }

This results in an additional line in the execution log, like in the following execution entry.
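
Because the function is a plain static method that receives a TextWriter, the log output can also be checked locally without Azure by passing in a StringWriter. The following is a minimal sketch of that idea. LoggingSketch.WriteRequestLine is a hypothetical stand-in for the logging part of ProcessQueueMessage, and SiteModifyRequest is repeated to keep the example self-contained.

```csharp
using System.IO;

// Copy of the entity used in this post, repeated to keep the sketch self-contained.
public class SiteModifyRequest
{
    public string SiteUrl { get; set; }
    public string RequestorName { get; set; }
}

// Hypothetical stand-in for the logging part of the queue-triggered function.
public static class LoggingSketch
{
    // Writes one line describing the request to whatever TextWriter is supplied.
    public static void WriteRequestLine(SiteModifyRequest modifyRequest, TextWriter log)
    {
        log.WriteLine(
            "Received new site modification request with URL '{0}' from person named as '{1}'.",
            modifyRequest.SiteUrl,
            modifyRequest.RequestorName);
    }
}
```

Calling LoggingSketch.WriteRequestLine with a new StringWriter captures the line in memory, so it can be inspected with ToString() in a test or a console run before the WebJob is deployed.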

Video for setting up things and code walkthrough

Here’s also a video introduction to the topics shown in this blog post. It is a free-form walkthrough of the structure, but it covers all the same topics as the written format to show how to set things up for execution.

Office 365 Developer Patterns and Practices

Techniques shown in this blog post are part of the Core.QueueWebJobUsage solution in the Office 365 Developer Patterns and Practices guidance, which contains more than 100 samples and solutions demonstrating different patterns and practices related to app model development, together with additional documentation on app model techniques.


Check the details directly from the GitHub project at https://aka.ms/OfficeDevPnP. Please join us in sharing patterns and practices for the benefit of the community. If you have any questions, comments or feedback related to this sample, blog post or anything on PnP, please join us and more than 1700 others who are using the Office 365 Developer Patterns and Practices Yammer group at https://aka.ms/OfficeDevPnPYammer.

“From the community for the community” – “Sharing is caring”

Comments

Anonymous

March 03, 2015

Great post! To add another scenario: I recently used continuous WebJobs in a customer solution to synchronize permissions between SharePoint Online and a provider hosted app, as well as automated site provisioning and heavy database operations.

Anonymous

June 01, 2015

Hi Vesa, I have created an Azure WebJob to provision the site collection remotely. I have registered the app in SPO with Tenant, Taxonomy, Site Collection & Site with Full control privilege. My Azure job is creating the site collection successfully but I am unable to create a Term in the Term store. I am getting an error - "Access denied. you dont have permission to access or read this resource" :( I am using the Term store for Custom Global Navigation across site collections. Can you please help me on this?

Anonymous

June 02, 2015

Hi Shankar, it's unfortunately not supported to use an App Only token with the taxonomy service, if that's what you have done in this case. If you are using a specific account, you'll need to provide that account the right permission to the taxonomy store. If you have any additional questions related to this, please use the Office 365 Developer Patterns and Practices Yammer group at aka.ms/OfficeDevPnPYammer. Notice also that there's a user voice submission on this topic at officespdev.uservoice.com/.../7062007-provide-the-ability-to-write-to-managed-metadata-v . If you'd like this to be changed, please do your voting as well.

Anonymous

July 22, 2015

Hey Vesa, Excellent post on this matter, it helped me out a lot! I'm using this scenario myself and it works when I register the WebJob using AppRegNew.aspx and AppInv.aspx. Since I also have a provider hosted add-in, like in your scenario, that needs to be registered I was wondering if the Client ID and Secret can be the same for the MVC part as for the WebJob. My second question would be how would you wrap this in a package that can be made commercially available? Since you always have to register the WebJob on your customer's tenant I assume this cannot be done from the Store? Kind regards, Cas

Anonymous

August 09, 2016

Make sure your queue name is in lowercase. Otherwise CreateIfNotExists() will fail without telling you why.