Concurrency Runtime and Windows 7

Microsoft has recently released a beta for Windows 7, and a look at the official web site (https://www.microsoft.com/windows/windows-7/default.aspx) will show you a pretty impressive list of new features and usability enhancements. I encourage you to go look them over for yourself, but in this article I’m going to focus on two Win7 features that are particularly important for getting the best performance out of your parallel programs:

1. Support for more than 64 processors

2. User-Mode Scheduled Threads

Both of these features will be supported in the Microsoft Concurrency Runtime, which will be delivered as part of Visual Studio 2010.

An important note: both features are supported only on the 64-bit Windows 7 platform.

More Than 64 Processors

The most straightforward of these new features is Windows 7’s support for more than 64 processors. In earlier versions of Windows, even high-end servers could schedule threads across a maximum of 64 processors. Windows 7 allows threads to run on more than 64 processors by letting a thread be affinitized to both a processor group and a processor index within that group. Each group can have up to 64 processors, and Windows 7 supports a maximum of 4 processor groups. The mechanics of this new OS support are detailed in a white paper which is available at https://www.microsoft.com/whdc/system/Sysinternals/MoreThan64proc.mspx.
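To make the group-plus-index addressing concrete, here is a minimal sketch in standard C++. It is not the Windows API: `ProcessorAddress`, `locate`, and `affinity_mask` are names made up for illustration, and the sketch assumes a hypothetical machine whose groups are filled contiguously with 64 processors each. Real group assignment depends on the hardware topology and need not be this regular.

```cpp
#include <cassert>
#include <cstdint>

// Hypothetical illustration only: none of these names belong to a Windows API.
constexpr std::uint32_t kProcessorsPerGroup = 64;  // per-group limit from the text
constexpr std::uint32_t kMaxGroups = 4;            // Windows 7 limit from the text

struct ProcessorAddress {
    std::uint32_t group;  // which processor group
    std::uint32_t index;  // processor index within that group (0..63)
};

// Map a flat logical processor number (0..255) to a (group, index) pair,
// ASSUMING groups are filled contiguously, 64 processors at a time.
inline ProcessorAddress locate(std::uint32_t flat) {
    assert(flat < kProcessorsPerGroup * kMaxGroups);
    return ProcessorAddress{flat / kProcessorsPerGroup, flat % kProcessorsPerGroup};
}

// Within one group, an affinity mask needs only 64 bits: one bit per processor.
inline std::uint64_t affinity_mask(const ProcessorAddress& p) {
    return std::uint64_t{1} << p.index;
}
```

Under these assumptions, `locate(130)` yields group 2, index 2. A 64-bit mask can only ever name the processors of one group, which is why an application that never thinks about groups stays limited to 64 processors.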

However, unless you actively modify your application to affinitize work to other processor groups, you’ll still be stuck with a maximum of 64 processors. The good news is that if you use the Microsoft Concurrency Runtime on Windows 7, you don’t need to be concerned at all with these gory details. As always, the runtime takes care of everything for you: it automatically determines the total amount of available concurrency (e.g., the total number of cores) and uses as much of it as it can during any parallel computation. This is an example of what we call a “light-up” scenario. Compile your Concurrency Runtime-enabled application once, and you can run it on everything from your Core 2 Duo up to your monster Win7 256-core server.
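The "compile once, run on anything" idea boils down to never hard-coding a core count. The sketch below is a deliberately simple stand-in in standard C++: it statically splits a range of work items across however many workers it is told about. This is not how the Concurrency Runtime distributes work internally, and `split_range` is a hypothetical helper name, but it shows the same code adapting to 2 cores or 256.

```cpp
#include <cassert>
#include <cstddef>
#include <utility>
#include <vector>

// Split [0, item_count) into at most worker_count contiguous, near-equal
// half-open ranges. The worker count is a runtime value, never a constant.
inline std::vector<std::pair<std::size_t, std::size_t>>
split_range(std::size_t item_count, std::size_t worker_count) {
    std::vector<std::pair<std::size_t, std::size_t>> ranges;
    if (worker_count == 0) worker_count = 1;  // treat "unknown" as one worker
    const std::size_t base = item_count / worker_count;
    const std::size_t extra = item_count % worker_count;  // first `extra` ranges get one more item
    std::size_t begin = 0;
    for (std::size_t w = 0; w < worker_count && begin < item_count; ++w) {
        const std::size_t len = base + (w < extra ? 1 : 0);
        ranges.emplace_back(begin, begin + len);
        begin += len;
    }
    assert(begin == item_count);  // every item landed in exactly one range
    return ranges;
}
```

In a real program the worker count could come from something like `std::thread::hardware_concurrency()`. With the Concurrency Runtime you would not write this at all, since the runtime makes that decision for you.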

User Mode Scheduling

User Mode Scheduled Threads (UMS Threads) is another Windows 7 feature that “lights up” in the Concurrency Runtime.

As the name implies, UMS Threads are threads that are scheduled by a user-mode scheduler (like Concurrency Runtime’s scheduler), instead of by the kernel. Scheduling threads in user mode has a couple of advantages:

1. A UMS Thread can be scheduled without a kernel transition, which can provide a performance boost.

2. If a UMS Thread blocks for any reason, the scheduler can run other work on that CPU, so the full OS quantum still gets used.

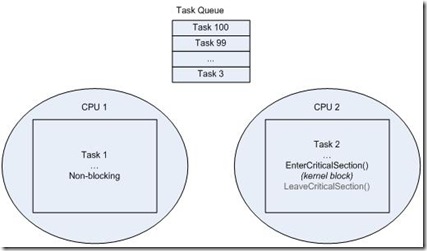

To illustrate the second point, let’s assume a very simple scheduler with a single work queue. In this example, I’ll also assume that we have 100 tasks that can be run in parallel on 2 CPUs.

Here we’ve started with 100 items in our task queue, and two threads have picked up Task 1 and Task 2 and are running them in parallel. Unfortunately, Task 2 is going to block on a critical section. Obviously, we would like the scheduler (i.e., the Concurrency Runtime) to use CPU 2 to run the other queued tasks while Task 2 is blocked. Alas, with ordinary Win32 threads, the scheduler cannot tell the difference between a task that is performing a very long computation and a task that is simply blocked in the kernel. The end result is that until Task 2 unblocks, the Concurrency Runtime will not schedule any more tasks on CPU 2. Our 2-core machine just became a 1-core machine, and in the worst case, all 99 other tasks will be executed serially on CPU 1.

This situation can be improved somewhat by using the Concurrency Runtime’s cooperative synchronization primitives (critical_section, reader_writer_lock, event) instead of Win32’s kernel primitives. These runtime-aware primitives will cooperatively block a thread, informing the Concurrency Runtime that other work can be run on the CPU. In the above example, Task 2 will cooperatively block, but Task 3 can be run on another thread on CPU 2. All this involves several trips through the kernel to block one thread and unblock another, but it’s certainly better than wasting the CPU.

The situation improves even further on Windows 7 with UMS threads. When Task 2 blocks, the OS gives control back to the Concurrency Runtime, which can now make a scheduling decision and create a new thread to run Task 3 from the task queue. The new thread is scheduled in user mode by the Concurrency Runtime, not by the OS, so the switch is very fast. Both CPU 1 and CPU 2 can now be kept busy with the remaining 99 non-blocking tasks in the queue. When Task 2 gets unblocked, Win7 places its host thread back on a runnable list so that the Concurrency Runtime can schedule it (again, from user mode), and Task 2 can continue on any available CPU.
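The difference between the two cases can be put into rough numbers with a toy model. The sketch below is a tick-based simulation in standard C++ of the single-queue scheduler from the example. It is an idealized illustration, not ConcRT code: `SimConfig` and `makespan` are hypothetical names, every task is assumed to need the same amount of CPU time, the blocking task is assumed to block as soon as it starts, and context-switch costs are ignored.

```cpp
#include <cassert>
#include <cstddef>
#include <vector>

struct SimConfig {
    int tasks = 100;        // tasks in the single work queue
    int cpus = 2;
    int work_per_task = 1;  // CPU ticks each task needs
    int blocker = 1;        // zero-based index of the task that blocks ("Task 2")
    int block_ticks = 50;   // assumed length of the block, in ticks
};

// Ticks until all tasks finish. With reschedule_on_block == false, a CPU whose
// task is blocked sits idle (scheduler unaware of the block). With true, the
// CPU takes other work and the unblocked task is resumed later (UMS-like).
inline int makespan(const SimConfig& c, bool reschedule_on_block) {
    assert(c.tasks > 0 && c.cpus > 0 && c.work_per_task > 0 && c.block_ticks >= 0);
    std::vector<int> remaining(static_cast<std::size_t>(c.tasks), c.work_per_task);
    std::vector<int> running(static_cast<std::size_t>(c.cpus), -1);  // -1 = idle CPU
    int next_queued = 0, done = 0, tick = 0;
    int unblock_at = -1;  // tick at which the blocker wakes; -1 = has not blocked yet
    bool parked = false;  // blocker is off-CPU, waiting to be resumed

    while (done < c.tasks) {
        // Scheduling phase: give every idle CPU something to run.
        for (int cpu = 0; cpu < c.cpus; ++cpu) {
            while (running[cpu] == -1) {
                int pick = -1;
                if (parked && tick >= unblock_at) {  // runnable work before queued work
                    pick = c.blocker;
                    parked = false;
                } else if (next_queued < c.tasks) {
                    pick = next_queued++;
                } else {
                    break;                           // nothing left for this CPU
                }
                if (pick == c.blocker && unblock_at < 0) {  // first run: it blocks
                    unblock_at = tick + c.block_ticks;
                    if (reschedule_on_block) {       // control returns to the scheduler
                        parked = true;
                        continue;                    // pick something else for this CPU
                    }
                }
                running[cpu] = pick;
            }
        }
        // Execution phase: each busy CPU makes one tick of progress,
        // unless the task it holds is still blocked.
        for (int cpu = 0; cpu < c.cpus; ++cpu) {
            const int task = running[cpu];
            if (task == -1) continue;
            if (task == c.blocker && tick < unblock_at) continue;  // stalled CPU
            if (--remaining[task] == 0) {
                ++done;
                running[cpu] = -1;
            }
        }
        ++tick;
    }
    return tick;
}
```

With the default values, `makespan(cfg, false)` comes out at 75 ticks and `makespan(cfg, true)` at 51 ticks. The specific numbers depend entirely on the assumed block length, but the shape of the result is the point: the longer Task 2 stays blocked, the more a scheduler that can see the block gets back.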

You might say, “Hey, my task doesn’t do any kernel blocking, so does this still help me?” The answer is yes. First, it’s really difficult to know whether your task will block at all. If you call “new” or “malloc” you may block on a heap lock. Even if you don’t block, the operation might page-fault. An I/O operation will also cause a kernel transition. All these occurrences can take significant time and can stall forward progress on the core on which they occur. They are also opportunities for the scheduler to execute additional work on the now-idle CPU. Windows 7 UMS Threads enable these opportunities, and the result is greater task throughput and more efficient CPU utilization.

(Some readers may have noticed a superficial similarity between UMS Threads and Win32 Fibers. The key difference is that, unlike a Fiber, a UMS Thread is a real bona fide thread, with a backing kernel thread, its own thread-local state, and the ability to run code that can call arbitrary Win32 APIs. Fibers have severe restrictions on the kinds of Win32 APIs they can call.)

Obviously the above example is highly simplified. A UMS Thread scheduler is an extremely complex piece of software to write, and managing state when an arbitrary page fault can swap you out is challenging to say the least. However, once again users of the Concurrency Runtime don’t have to be concerned with any of these gory details. Write your programs once using PPL or Agents, and your code will run using Win32 threads or UMS Threads.

For more details about UMS Threads, check out Inside Windows 7 - User Mode Scheduler (UMS) with Windows Kernel architect Dave Probert on Channel9.

Better Together

Windows 7 by itself is a great enabler of fine-grained parallelism, thanks to the two features above as well as some other performance improvements made at the kernel level. The Concurrency Runtime helps bring the fullest potential of Windows 7 into the hands of programmers in a very simple and powerful way.

Comments

Anonymous

February 04, 2009

Please point me to some API calls related to the UMS thread scheduler. I've downloaded the Win7 SDK, but unfortunately can't find anything... What is the low-level API?

-- Best regards, Dmitriy V'jukov

Anonymous

February 05, 2009

Are those APIs available to developers, or is the Concurrency Runtime the only official way to use them? If the latter, then it's quite unfair...

Anonymous

February 05, 2009

Isn't the point that we're all meant to buy into a single Concurrency Runtime (which is itself pluggable) in order to avoid oversubscription and other conflicts? I gather Intel's doing this with their TBB and other bits and bobs. I'd rather plug into a nice C++ / Managed API like the Concurrency Runtime than some flat C API.

Anonymous

February 05, 2009

Dmitriy,

The UMS APIs are included in the Win7 Beta1 and they are public. The documentation, however, is still in progress by the Windows team, which is why you aren't seeing it today. It will be complete by the time Windows 7 ships. I've heard informally that they are working to have a very complete set of documentation for these APIs because of the difficulty and care that is needed to use them correctly. Bluntly, unless you have very specific needs, you will have a much easier time leveraging the ConcRT APIs, which are built on top of UMS and implicitly offer downlevel compatibility. You could probably go spelunking for the UMS APIs in winbase.h, but I would strongly discourage doing so without complete documentation.

Tom,

Yes, that is exactly the point. As Don & Dave Probert said in the Channel9 video, we would rather see our developers spending their time building libraries and applications which leverage the APIs and unlock the performance of the platform implicitly, as opposed to working with low-level threading APIs and worrying about composability, downlevel compatibility, and future extensibility across libraries as new hardware comes online. The world is changing; ConcRT, the PPL and Agents libraries will help insulate you from that change…

FYI, I don’t like writing C-style APIs either, unless I have to :) (but that’s a personal choice, and I know there are a lot of folks that do; the lower-level runtime APIs in ConcRT can more easily be accessed by C programmers, which is why they’re in the C runtime and not msvcp100.dll, the C++ runtime).

-Rick

Anonymous

February 05, 2009

An interesting discussion about a very similar library is going on here: http://software.intel.com/en-us/forums/threading-on-intel-parallel-architectures/topic/62842/

Anonymous

February 17, 2009

I've watched the video by Dave Probert. It's just great! Multicore reality does indeed require rethought support from an OS. The UMS API in winbase.h/winnt.h looks quite straightforward (although I see that Don McCrady mentioned some caveats with page faults).

Why am I interested in this? There can be some other useful applications of the UMS API. For example, I see that I can implement "transactional locking", i.e. if a thread is blocked inside a critical section because of a page fault, I can just release the mutex and longjmp to the beginning of the critical section (not causing a system-wide stall for 10ms), although this would be significantly more useful if I were notified about the end of the thread's time slice too. Another application is to maintain, in a lightweight way, a UMS host thread ID (I am not sure about the terminology; probably a better name would be a UMS scheduler ID, i.e. effectively a processor ID, if I am creating one UMS scheduler per processor). This can be useful for algorithms that use per-processor data structures.

The other reason is that the current ConcRT (as I see from the API) does not support such things as agent/task affinity, NUMA, HT, etc. ConcRT will not be able to thoughtfully decide whether it's worth migrating my agent to a different NUMA node, or how to schedule my tasks to make efficient use of HT or cache. And these are the things that can make a significant performance difference (probably 2-10x).

Anonymous

February 18, 2009

I am also curious as to how you are going to manage the number of worker threads in ConcRT. Will the number of threads be bounded or unbounded?

Anonymous

February 18, 2009

The short answer is that the Resource Manager will determine the number of threads, but the developer will have some control over this by creating Scheduler Policies, which can specify, among other things, the number of threads. If you have the Visual Studio 2010 CTP, you can get an idea of how some of this works by looking at the file concrt.h, but note that future CTPs or betas will likely see changes to this. A longer answer will deserve at least one blog post of its own. Thanks, Dmitriy.

Anonymous

February 21, 2009

Thank you, Rick. I see there are parameters like ThreadsMultiplier and RogueChoreThreshold. So, if I get it right, the scheduler will increase the number of threads indefinitely if there are too many blocked or rogue chores.

Anonymous

February 21, 2009

Hmmm... interesting question: is it possible to start several UMS schedulers recursively? For example, a user makes a library call from a UMS thread, and the library itself uses UMS. The outer UMS must get a notification only when the inner UMS blocks, and not when an inner chore blocks...

Anonymous

April 22, 2009

In a concurrent world multiple entities work together to achieve a common goal. A common way to interact

Anonymous

May 02, 2009

Is this the kind of "Scheduler Activation" idea proposed in "Scheduler Activations: Effective Kernel Support for the User-Level Management of Parallelism" by T. E. Anderson et al.?

Anonymous

May 03, 2009

UMS is similar in spirit if not exactly in design & implementation to the "T.E. Anderson" paper. UMS does not currently have any mechanism to request or respond to a changing number of available processors as discussed in the paper, but pretty much everything else maps very nicely to UMS.

Anonymous

May 12, 2009

Regarding your example for point 2 above: you say that when Task 2 is blocked, the kernel does not schedule other tasks. Why? Is this because you are using spin-locks? Won't mutexes help in such situations?

Anonymous

May 13, 2009

I didn't say the kernel doesn't schedule other tasks (and by tasks here I assume you mean other threads in other processes)... it can and it does, because the kernel knows the thread is blocked (by a mutex or a critical section, or an event, or whatever). That's assuming there is something available to run. If not, you've got an idle core and lots of potential work not getting done.

Here I am talking about ConcRT tasks (which are thin wrappers on top of function objects). When a ConcRT task that is running on a Win32 thread blocks on a kernel sync primitive, the ConcRT SCHEDULER has no idea it's blocked, and therefore won't try to create another thread to run additional tasks. On a UMS thread, the ConcRT SCHEDULER does know it's blocked and can therefore start up another thread to run additional tasks. I hope that helps clear things up.

Anonymous

May 21, 2009

Visual Studio 2010 Beta1 has been released, and it is a full install version. The team has been busy,

Anonymous

September 27, 2009

The comment has been removed

Anonymous

September 28, 2009

Christian, it is not 100% predictable which will happen next. It really depends on whether the scheduler notices that Task2 is unblocked before finding that Task16 is available. That said, ConcRT does look for unblocked (runnable) tasks before queued tasks, so it might be somewhat more likely that Task2 will run before Task16.

Anonymous

September 28, 2009

@dmccrady: Thanks so much, that helps even more :)

Anonymous

November 06, 2009

Thanks for the post. I'm very curious about what you say regarding managing state after page faults. Can you elaborate on when this would be a problem when implementing a UMS scheduler?

I have written a task scheduler using the UMS Thread API on Windows 7 and it is indeed quite hard. I learnt the hard way that you have to be extremely careful with what APIs you call within a scheduler thread. For instance, I found out that if I call WaitForMultipleObjects with more than 8 handles, it allocates memory from the heap under the hood, which can end up deadlocking the scheduler thread if a UMS task thread it manages has acquired the heap lock too. Similar problems surface if you try to create a new thread within a scheduler thread, since that accesses the heap too.

However, I don't see how page faults can be problematic here. I am probably missing something and my scheduler will likely have some remaining cases where it might deadlock, so it would be great to know what to look for :)

Anonymous

November 06, 2009

Javier, it's really as simple as this: a page fault can block in the kernel, so if you page fault in your scheduler logic then it could be problematic... Also, just curious, have you considered using the Concurrency::Scheduler::ScheduleTask API with a scheduler instance instead of building an entire UMS scheduler from scratch?

Anonymous

November 10, 2009

Thanks for the reply, Rick. I still don't see the problem with page faults. If one of my scheduler threads encounters a page fault and it blocks in the kernel, then no tasks will be scheduled on that core for a while, which is not great but not catastrophic either.

Now, a different problem would be if the scheduler thread encounters a page fault, and then the kernel makes it wait for a shared lock which is currently held by one of the tasks the scheduler is in charge of. That would produce a deadlock, much like it can happen when you allocate from the heap from a scheduler thread, sharing the heap lock with your task threads. Is there such a shared lock? If so, I understand why you want to avoid page faulting inside a scheduler thread. What would be the strategy then? Is there some way to pin the memory pages the scheduler uses so that you are guaranteed to encounter no page faults?

As for not using ConcRT, I just think building a UMS scheduler from scratch is a very interesting exercise. For a real project I would use your API.

Anonymous

November 12, 2009

Javier,

Page faults (and other asynchronous suspensions) present an interesting issue because they cause the scheduler code to be invoked between any two arbitrary instructions. If such a page fault occurs in user code while running on a UMS thread, it's not so much of a concern. The interesting scenarios happen if any of the scheduler code itself runs on the UMS thread.

You might have, for instance, structures which cache per-processor/core (/primary) information. Code such as "pStruct->field" may page fault conceptually on the "->" (really on the code page containing the instruction to reference the field). In this case, your scheduler would get invoked to run something else atop that processor/primary. When the original thread resumed, it might no longer be on the processor/primary it thought it was and hence might be referencing the wrong pStruct.

This is only one example of things that get "tricky" when you have any of the scheduler code running on the UMS threads themselves. If the scheduler purely responds to blocking events and only takes action on the primary, the impact of page faults is far less of a concern.

Anonymous

December 27, 2012

Apparently User-mode schedulable (UMS) threads are no longer supported in the Concurrency Runtime in Visual Studio 2012. See msdn.microsoft.com/.../dd492665(v=vs.110).aspx. Does anyone know why this is the case?

Anonymous

July 23, 2014

The problem is how many UMS contexts can be blocked in the kernel; Microsoft didn't say.