One story of building a useful Assistive Technology tool from a UI Automation sample

Background

At the 2011 Annual International Technology and Persons with Disabilities Conference (aka “the CSUN conference”), I gave a presentation on the Windows UI Automation (UIA) API. This API allows apps to find out what things are shown visually on the screen and to programmatically interact with those things. As such, it can be very helpful to Assistive Technology (AT) tools such as screen readers.

I was new to UIA at the time, and so needed to learn about how it’s used. The most effective way for me to learn about an API is to build an app which uses it, and to step through the code in a debugger watching what happens. I felt people at the conference would be more interested in the client-side aspects of the API rather than the provider-side. (The “client” is the app gathering up the data about what’s shown in other apps, and the “provider” is the app that contains the UI of interest.) So I built a sample app which gathers details on all links shown in a browser and which can programmatically invoke those links. A twist to this sample which is unconnected to UIA is that I also added use of the Windows Magnification API, and I’d not built an app which used both these APIs before. The UIA API and Magnification API are not related, and it was interesting for the sample to clearly separate the data gathering steps from the mechanism by which the results are presented to the user. Other apps might choose different ways to present the results depending on the needs of their users. For example, an app might use speech (as a screen reader does), or present the results using large text elsewhere on the screen (if that helps with the user’s comprehension of what’s on the screen).

The picture below shows the sample app listing all the links shown in the browser, and highlighting one of the links.

After presenting this sample app, (which I’d built in C++), I was asked if I had a C# version. So I set to work on an equivalent C# version of the sample. It’s worth noting that all my samples use the native-code version of the UIA API that’s included in Windows, not the managed version that’s included in the .NET Framework. Since then I’ve completed a set of sample apps which use the client-side UIA API, and together the samples demonstrate all the commonly used parts of the API. My goal has always been to build samples which might be the starting point for real-world AT tools. Interacting with hyperlinks in a browser is an extremely common action. Another sample uses UIA to track keyboard focus and magnifies the UI with focus. And a third sample uses UIA’s “Text Pattern” to programmatically interact with e-mails. While none of the samples are feature-complete as AT apps, I had hoped that they would be a good base for people who want to build AT apps.

Actually, one of the samples is pretty much complete – the Key Speaker. This uses UIA to find the text shown on the Prediction Keys in the On-Screen Keyboard. This satisfied a specific need that someone described to me and it was an important reminder that sometimes an AT tool doesn’t have to do much to be useful; it just has to do what’s needed. I’ve had a few thousand downloads of my samples, and so I’m pleased that they are proving to be a useful reference.

Deciding to build an AT app

I was recently in contact with an AT consultant who has a client with no gross motor skills but with some fine motor skills. This got me thinking about users who can’t use a mouse but can move their fingers to press keys on the number pad on a keyboard. How might the user easily browse the web? (I’m thinking about browsing again, given that it’s such a common thing that people do.) So I then broke the potential actions down into three stages.

1. Pressing a key to “highlight” things that can be clicked in the browser.

2. Pressing keys to specify which thing is to be clicked.

3. Pressing a key to do the click.

So I thought I’d build an AT tool which does this. Given that my original sample already had classes for calling UIA and then highlighting results, it was natural that I’d use this sample as the starting point for my new AT app. (In Windows 7, the UIA API has certain threading requirements which mean that depending on what client apps do, they sometimes need to do a certain amount of thread-related work. That’s all standard thread stuff, but I don’t want to write that code from scratch again if I can avoid it.)

I then grabbed my sample and got to work. I decided that I’d build an app which I knew would be useful but which would have some constraints. I’d look into addressing those constraints based on future user feedback. One constraint that would not change would be that the browser would have to be accessible through the UIA API. (Pointing the Inspect SDK tool at UI is a great way to get a feel for how accessible an app is.)

Getting things which can be clicked

I concentrated on the UIA aspects of the app next, as that’s core to what I’m trying to achieve. The original sample has a file called LinkProcessor.cpp, and that’s where all the UIA calls in the sample are made. So this is where all the UIA calls would be made in my new AT app. In the sample, all the calls are made on a background thread, and we can see a call to CoInitializeEx() being made with COINIT_MULTITHREADED. This is necessary if the app might interact with its own UI through UIA. In the case of my new app, this isn’t actually necessary, but there was no reason for me to change the threading code. Having the calls done on the background thread would work perfectly fine for my app, and if in the future I change the app such that it does interact with its own UI, it’ll continue to work fine.

So I left all the threading-related code in, (including the mechanism for communication between threads), and then pulled out lots of action taken by the sample which I’m not interested in. This left me with one function of real interest; the one that gets me a list of elements on the screen. The sample built up a list of hyperlinks, and I now want a list of things that can be clicked. I decided to use UIA caching in my app in order to get the highest performance possible. (The original sample demonstrated both using caching and not using caching.) So this had now all boiled down to a single call to FindAllBuildCache().

FindAllBuildCache() takes a condition which describes what elements are to be retrieved. I needed to figure out a condition which would get me all the elements that I’m interested in, and only them. By using the Inspect SDK tool I could examine the properties of hyperlinks shown in the browser, and I decided on the following conditions for the elements I would retrieve:

1. They would appear in UIA’s Control view of the element tree. (This is a subset of the Raw view, and the Raw view contains lots of the elements that the user usually doesn’t care about.)

2. The IsOffscreen property is false. If the IsOffscreen property is true, then the link isn’t visible on the screen.

3. The IsEnabled property is true. If the IsEnabled property is false, the link can’t be clicked.

4. The IsInvokePatternAvailable property is true.

This last condition is the most interesting one. In thinking about the condition, I decided that I’m not just interested in links. Rather, I want anything that can be clicked. This means my new AT app can be used to click buttons like the Back button.

I then combined the conditions with a number of calls to CreateAndCondition() and passed that into the call to FindAllBuildCache(). I also passed in a cache request for the bounding rectangle and InvokePattern. This meant that by calling FindAllBuildCache(), a single cross-process call would be made to gather the bounding rectangles of potentially hundreds of elements which can be clicked. (Cross-process calls are slow and so I want to make as few of them as possible.)

Having made this call, I did indeed get many elements returned, including links and buttons which could be clicked. However, I didn’t quite get all the things I expected. I found that the tabs shown in the browser weren’t included. So again I used Inspect and pointed it at the tabs. By doing this I could see that the tabs do not support the Invoke pattern, and this explained why they were missing from the results returned from FindAllBuildCache(). Given that the tabs are so important to my app, I decided to change the condition I’d built to make sure the tabs were included in the result. Inspect showed me that the tabs support the LegacyIAccessible pattern. (IAccessible is related to the legacy MSAA API. That API has been replaced by the more powerful and performant UIA API.) Since the tabs do support that pattern and not the Invoke pattern, I decided to include that pattern in my condition. An element that supports the Invoke pattern will later be clicked by calling the Invoke() method on that interface, but if that pattern is not supported and the LegacyIAccessible pattern is supported, then LegacyIAccessible’s DoDefaultAction() method would be used.

The final code looked something like this:

// Ask for the bounding rectangle and both patterns to be cached.
hr = _pUIAutomation->CreateCacheRequest(&pCacheRequest);
if (SUCCEEDED(hr))
{
    hr = pCacheRequest->AddProperty(UIA_BoundingRectanglePropertyId);
    if (SUCCEEDED(hr))
    {
        hr = pCacheRequest->AddPattern(UIA_InvokePatternId);
        if (SUCCEEDED(hr))
        {
            hr = pCacheRequest->AddPattern(UIA_LegacyIAccessiblePatternId);
        }
    }
}

// Build the individual conditions.
if (SUCCEEDED(hr))
{
    hr = _pUIAutomation->get_ControlViewCondition(&pConditionControlView);
    if (SUCCEEDED(hr))
    {
        VARIANT varPropOffScreen;
        varPropOffScreen.vt = VT_BOOL;
        varPropOffScreen.boolVal = VARIANT_FALSE;

        hr = _pUIAutomation->CreatePropertyCondition(
            UIA_IsOffscreenPropertyId,
            varPropOffScreen,
            &pConditionOffscreen);
        if (SUCCEEDED(hr))
        {
            VARIANT varPropIsEnabled;
            varPropIsEnabled.vt = VT_BOOL;
            varPropIsEnabled.boolVal = VARIANT_TRUE;

            hr = _pUIAutomation->CreatePropertyCondition(
                UIA_IsEnabledPropertyId,
                varPropIsEnabled,
                &pConditionIsEnabled);
            if (SUCCEEDED(hr))
            {
                VARIANT varPropHasInvokePattern;
                varPropHasInvokePattern.vt = VT_BOOL;
                varPropHasInvokePattern.boolVal = VARIANT_TRUE;

                hr = _pUIAutomation->CreatePropertyCondition(
                    UIA_IsInvokePatternAvailablePropertyId,
                    varPropHasInvokePattern,
                    &pConditionHasInvokePattern);
                if (SUCCEEDED(hr))
                {
                    VARIANT varPropHasLegacyIAccessiblePattern;
                    varPropHasLegacyIAccessiblePattern.vt = VT_BOOL;
                    varPropHasLegacyIAccessiblePattern.boolVal = VARIANT_TRUE;

                    hr = _pUIAutomation->CreatePropertyCondition(
                        UIA_IsLegacyIAccessiblePatternAvailablePropertyId,
                        varPropHasLegacyIAccessiblePattern,
                        &pConditionHasLegacyIAccessiblePattern);
                }
            }
        }

        if (SUCCEEDED(hr))
        {
            // Either pattern is enough for the element to be clickable.
            hr = _pUIAutomation->CreateOrCondition(
                pConditionHasInvokePattern,
                pConditionHasLegacyIAccessiblePattern,
                &pConditionPatterns);
            if (SUCCEEDED(hr))
            {
                // Control view AND not offscreen AND enabled AND clickable.
                hr = _pUIAutomation->CreateAndCondition(
                    pConditionControlView,
                    pConditionOffscreen,
                    &pConditionCombined1);
                if (SUCCEEDED(hr))
                {
                    hr = _pUIAutomation->CreateAndCondition(
                        pConditionCombined1,
                        pConditionIsEnabled,
                        &pConditionCombined2);
                    if (SUCCEEDED(hr))
                    {
                        hr = _pUIAutomation->CreateAndCondition(
                            pConditionCombined2,
                            pConditionPatterns,
                            &pCondition);
                    }
                }
            }
        }
    }
}

// A single cross-process call gathers all the matching elements.
if (SUCCEEDED(hr))
{
    hr = pElementWindow->FindAllBuildCache(TreeScope_Descendants,
                                           pCondition,
                                           pCacheRequest,
                                           &pElementArray);
}

The above code snippet doesn’t include the calls to release all the objects once they’re no longer needed.

Hold on - where am I getting the clickable things from?

The above call to FindAllBuildCache() is being made from an IUIAutomationElement called pElementWindow, but what is that? My goal here is to get clickable things in a browser, but I thought it would also be interesting to get the clickable things in whatever the foreground window is. So the code for getting pElementWindow is:

HWND hWndForeground = GetForegroundWindow();
if (hWndForeground != NULL)
{
    IUIAutomationElement *pElementWindow = NULL;
    HRESULT hr = _pUIAutomation->ElementFromHandle((UIA_HWND)hWndForeground,
                                                   &pElementWindow);
    if (SUCCEEDED(hr) && (pElementWindow != NULL))
    {
        // Now get the clickable elements…

        // Release the window element once it's no longer needed.
        pElementWindow->Release();
        pElementWindow = NULL;
    }
}

In practice my AT app wasn’t able to click things in a number of apps, and I’ll look into fixing that at some point. Given that my main goal related to browser usage, and that worked fairly well, the above approach met my needs.

One approach that I’d certainly not want to take would be to get the root element of the UIA tree and then call one of the Find* methods from that with TreeScope_Descendants in the hope of getting every clickable thing on the screen. MSDN points out that calling Find* must not be done from the root element with TreeScope_Descendants.

Another approach I’d not want to take is to call ElementFromPoint() to hit-test for every clickable thing on the screen. To feel confident that I’d not missed small elements, I’d need to make many thousands of calls to ElementFromPoint(). Given that each call would be cross-process, the performance would be grim.
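To put a rough number on that, the sketch below counts the hit-test calls needed to sample a screen on a regular grid. The HitTestCallCount name, the screen size and the grid spacing are all assumptions chosen for illustration; they don't come from my app.

```cpp
#include <cassert>

// Hypothetical helper: the number of points that would need to be hit-tested
// to sample a screen of the given size on a grid with the given spacing.
long long HitTestCallCount(int screenWidth, int screenHeight, int step)
{
    assert(screenWidth > 0 && screenHeight > 0 && step > 0);

    // Round up so that the right and bottom edges are still sampled.
    long long columns = (screenWidth + step - 1) / step;
    long long rows = (screenHeight + step - 1) / step;
    return columns * rows;
}
```

With an assumed 1920x1080 screen sampled every 8 pixels, that's 240 columns by 135 rows, so 32,400 cross-process calls for one pass over the screen.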

But I did think that if at some point my AT app would be useful for working in some apps outside of the browser, then in addition to helping the user work in the foreground app, it should also help in starting apps and switching between apps. In that case, the app would need to interact with the Taskbar. So in addition to working with the foreground window, the app also finds the Taskbar with the code below and then finds the clickable things that are shown on it.

IUIAutomationElement *pElementRoot = NULL;
HRESULT hr = _pUIAutomation->GetRootElement(&pElementRoot);
if (SUCCEEDED(hr))
{
    VARIANT varPropClassName;
    varPropClassName.vt = VT_BSTR;
    varPropClassName.bstrVal = SysAllocString(L"Shell_TrayWnd");

    IUIAutomationCondition *pConditionClassName = NULL;
    hr = _pUIAutomation->CreatePropertyCondition(UIA_ClassNamePropertyId,
                                                 varPropClassName,
                                                 &pConditionClassName);

    // The caller still owns the string, so free it now that it's been used.
    VariantClear(&varPropClassName);

    if (SUCCEEDED(hr))
    {
        IUIAutomationElement *pElementTaskBar = NULL;
        hr = pElementRoot->FindFirst(TreeScope_Children,
                                     pConditionClassName,
                                     &pElementTaskBar);
        if (SUCCEEDED(hr) && (pElementTaskBar != NULL))
        {
            // Now get the clickable elements…

            pElementTaskBar->Release();
        }

        pConditionClassName->Release();
    }

    pElementRoot->Release();
}

The above code finds the element with the ClassName property of “Shell_TrayWnd” which is a direct child of the root element. It’s ok to call one of the Find* functions from the root element when TreeScope_Children is used. I used the Inspect SDK tool to look at the properties of the Taskbar in order to figure out a way to programmatically access it.

It turned out that in practice gathering the Taskbar buttons was only partially useful in my app as it stands today. The user can start apps by clicking a Taskbar button with my app and sometimes switch between apps, but there were situations where it didn’t work. I’ll look into this further if I get related feedback about it.

So with the above code, my app had gathered a long list of elements which can be programmatically clicked in the browser. It keeps this list around, as the app will need to retrieve an element from the list later when the user wants to click it. The next step was to show these elements to the user.

Highlighting the results

The original UIA sample has a file called Highlight.cpp which is responsible for all the work to present results to the user. The sample did this by magnifying the element, but for my app I want to present something at the location of every clickable element. This will allow the user to specify that they want to click a particular element.

So I decided to create a fullscreen window that would be completely transparent except for identifiers that would lie over the clickable elements. I pulled out all the magnification-related code from the sample, and modified the existing code which creates a window such that it created the big window I needed.

In the call to CreateWindowEx(), I passed the extended window styles:

WS_EX_LAYERED | WS_EX_TRANSPARENT | WS_EX_NOACTIVATE | WS_EX_TOPMOST

I then called SetLayeredWindowAttributes() with LWA_COLORKEY and a colour that I thought would never be used as a system colour in practice. (I used system colours for the display of the element identifiers.)

By doing the above, I had a fullscreen window that was transparent, and which would not react at all to mouse clicks in it. (When I say “fullscreen” here, a constraint I have in the app at the moment is that I’m not supporting multi-monitor. I’ll fix that if I get feedback about it.)

Having created the window which will present the results to the user, I added a public method to the existing CHighlight object from the sample. This method allowed the object that gathered all the bounding rectangles of the things that can be clicked to tell the CHighlight object where each identifier is to be displayed on the screen. So CHighlight ends up with an array of points, and that’s all it needs. (CHighlight also now calls GetTextExtentPoint32() to take into account the size of the identifier to be displayed, and so centers the identifier over the element.)

Finally, whenever the window processes WM_PAINT, it draws the identifiers on the screen. It simply iterates through the array, building up strings as the identifiers based on the index in the point array. So the identifier for the first element in the array is “ 1 ”, the next is “ 2 ”, and so on.
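As a rough illustration of the identifier layout described above, the sketch below builds the identifier string from an index and centers a label of a given size over an element’s bounding rectangle. It’s portable C++ rather than the code in my app: the Rect, Point and Size types and the MakeIdentifier() and CenterLabel() names are hypothetical, and the text size is simply passed in where the app would get it from GetTextExtentPoint32().

```cpp
#include <cassert>
#include <string>

// Hypothetical stand-ins for the Win32 RECT, POINT and SIZE structures.
struct Rect  { long left, top, right, bottom; };
struct Point { long x, y; };
struct Size  { long cx, cy; };

// Build the identifier for the element at a zero-based index in the array.
// The first element is shown as " 1 ", the next as " 2 ", and so on.
std::string MakeIdentifier(int zeroBasedIndex)
{
    assert(zeroBasedIndex >= 0);
    return " " + std::to_string(zeroBasedIndex + 1) + " ";
}

// Return the top-left point at which to draw a label of the given size so
// that it is centered over the element's bounding rectangle.
Point CenterLabel(const Rect& bounds, const Size& textSize)
{
    Point pt;
    pt.x = bounds.left + ((bounds.right - bounds.left) - textSize.cx) / 2;
    pt.y = bounds.top + ((bounds.bottom - bounds.top) - textSize.cy) / 2;
    return pt;
}
```

For example, a 20x10 label over an element whose bounding rectangle runs from (100, 50) to (200, 70) would be drawn at (140, 55).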

The picture below shows clickable elements in the browser.

For many people the highlighting shown will be usable, but I’m conscious of the fact that the person whose mobility impairments prompted me to build this app also has visual impairments. So another aspect of the app which I may enhance is making the identifiers easier to see. I specifically used theme-based colours for the text colour and background colour, (through calls to GetSysColor() with COLOR_WINDOWTEXT and COLOR_WINDOW), but the identifiers are still very small and often overlap.

The picture below shows the identifiers when the High Contrast #1 theme has been selected in the Personalization Control Panel.

Clicking something

The last step in all this is providing some way for the user to click the thing that’s highlighted. Different users might prefer different ways of doing this, (for example, typing a number and then automatically having the element clicked after say a couple of seconds). But I went with the following approach:

1. Press the ` key near the top left of the English keyboard to turn the identifier display on or off.

2. Type the identifying number of the thing you want to click.

3. Press the Escape key to click it.

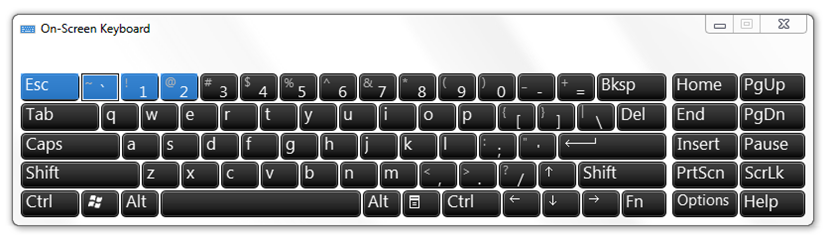

An important question here is, why choose the ` and Escape keys? I did this deliberately to make it as fast as possible for someone using the Windows 7 On-Screen Keyboard (OSK) in its Scan mode. The OSK’s Scan mode allows someone to control the keyboard with a hardware switch. So if someone has severe mobility impairments and uses either a foot switch or head switch to interact with the computer, my new app is optimized to make it quick for those users to type the keys they need to. The picture below shows the OSK in Scan mode:

I realize the keys I’ve chosen will be far from ideal for some people. For example, a user might prefer to be able to use the number pad on a physical keyboard, such that with only small movements of their fingers, they can click things in the browser. If I get feedback asking for the app to be enhanced to allow the user to configure which keys are used, I’ll do that.

The original sample had no code for detecting key presses in the way my app needed to, so I added a low-level keyboard hook through a call to SetWindowsHookEx(). The hook detects VK_OEM_3 to turn the identifier display on and off in response to a press of the ` key. (I’ve only used my app with an English keyboard, and so might have to update the hook for non-English keyboards.) In response to key presses of ‘0’-‘9’ while the identifiers are on, the app builds up a string to cache the full number typed. Then in response to a press of the VK_ESCAPE key while identifiers are on, the app performs the clicking of the element. Whenever it does take action in response to the key press, the app blocks the system from also processing the key press. If it did not do this, then the number that the user’s typing to specify an identifier would also appear in whatever control currently has keyboard focus. (And I realize that by doing the above, I’m assuming that the user never wants to type a ` character into any app.)
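The key handling described above boils down to a small state machine, sketched below in portable C++ so that it can be followed without the hook code around it. The Key enum and the IdentifierKeyState class are hypothetical names, not taken from my app. The sketch also assumes that a press of Escape with no number typed is passed through to the system, and that the identifiers stay on after a click; the app may behave differently in both cases.

```cpp
#include <cassert>
#include <string>

// Hypothetical key categories. Toggle stands in for VK_OEM_3 and Escape
// for VK_ESCAPE; Digit covers the keys '0' to '9'.
enum class Key { Toggle, Escape, Digit, Other };

class IdentifierKeyState
{
public:
    // Process a key press. Returns true if the key was acted on, in which
    // case the hook should block the system from also processing it.
    // On a click request, *numberToClick receives the number typed so far;
    // otherwise it is left empty.
    bool OnKeyDown(Key key, char digit, std::string* numberToClick)
    {
        assert(numberToClick != nullptr);
        numberToClick->clear();

        if (key == Key::Toggle)
        {
            // Turn the identifier display on or off and start afresh.
            on_ = !on_;
            typed_.clear();
            return true;
        }

        if (!on_)
        {
            return false; // Identifiers are off, so leave the key alone.
        }

        if (key == Key::Digit)
        {
            assert(digit >= '0' && digit <= '9');
            typed_ += digit; // Cache the full number as it's typed.
            return true;
        }

        if (key == Key::Escape && !typed_.empty())
        {
            *numberToClick = typed_;
            typed_.clear();
            return true;
        }

        return false;
    }

    bool IsOn() const { return on_; }

private:
    bool on_ = false;
    std::string typed_;
};
```

A low-level keyboard hook procedure could translate each virtual-key code into a Key value, call OnKeyDown(), and only pass the key on to the next hook when the result is false.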

When a click is made, the app uses the number typed to figure out which element is of interest. It still has the long list of elements that it originally gathered through UIA, and so it can retrieve the element of interest. That is, if the user typed “1”, then the app will use the first element found earlier.
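Turning the typed number into a position in the list needs a little care, as the user might type nothing, type zero, or type a number that’s beyond the end of the list. The sketch below shows one way to do that check; IdentifierToIndex() is a hypothetical helper, not the code from my app.

```cpp
#include <cassert>
#include <cstddef>
#include <string>

// Convert the one-based identifier typed by the user into a zero-based index
// into the list of elements gathered earlier. Returns false if the string is
// empty, contains anything other than digits, is zero, or is beyond the end
// of the list. *index is only written on success.
bool IdentifierToIndex(const std::string& typed,
                       std::size_t elementCount,
                       std::size_t* index)
{
    assert(index != nullptr);

    if (typed.empty())
    {
        return false;
    }

    std::size_t value = 0;
    for (char ch : typed)
    {
        if (ch < '0' || ch > '9')
        {
            return false;
        }

        value = value * 10 + static_cast<std::size_t>(ch - '0');

        // Stop as soon as the number is out of range. This also keeps the
        // value small if the user types a very long run of digits.
        if (value > elementCount)
        {
            return false;
        }
    }

    if (value == 0)
    {
        return false;
    }

    *index = value - 1;
    return true;
}
```

So with five elements in the list, typing “1” gives index 0 and typing “5” gives index 4, while typing “6” or “0” is rejected and nothing is clicked.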

The code below performs what the user considers to be the click on the element.

IUIAutomationInvokePattern *pPattern = NULL;
IUIAutomationLegacyIAccessiblePattern *pPatternLegacyIAccessible = NULL;

hr = pElement->GetCachedPatternAs(UIA_InvokePatternId, IID_PPV_ARGS(&pPattern));
if (SUCCEEDED(hr))
{
    if (pPattern != NULL)
    {
        hr = pPattern->Invoke();

        pPattern->Release();
        pPattern = NULL;
    }
    else
    {
        hr = pElement->GetCachedPatternAs(UIA_LegacyIAccessiblePatternId,
                                          IID_PPV_ARGS(&pPatternLegacyIAccessible));
        if (SUCCEEDED(hr) && (pPatternLegacyIAccessible != NULL))
        {
            hr = pPatternLegacyIAccessible->DoDefaultAction();

            pPatternLegacyIAccessible->Release();
            pPatternLegacyIAccessible = NULL;
        }
    }
}

The calls to GetCachedPatternAs() above do not result in cross-process calls, because I explicitly asked for information about these patterns to be cached in the call to FindAllBuildCache(). The calls to Invoke() or DoDefaultAction() will result in cross-process calls, as this is where the app tells the browser to have the element perform some action. If the original element no longer exists by the time these calls are made, then the calls will return UIA_E_ELEMENTNOTAVAILABLE.

And that’s it

Having built the working app, I did pull out some more parts of the sample which I didn’t need. This included all the code related to UIA event handling. Looking at the final code, the amount of code which I’d had to add myself in order to build my app was fairly small, so I was pleased about that. The componentization of the original sample into parts for (i) the main app UI, (ii) the code which calls UIA, and (iii) the code which highlights the results worked well for me.

So in a few hours over a weekend, I’d built a functioning app which can help someone with very limited mobility to browse the web. I’ve made the app freely available at my own AT site, and documented the current constraints of the app there. I’ll enhance it as I get feedback from people who want to use it.

If you know of someone who could benefit from an app which helps them interact with things that are shown on the screen, perhaps one of my UI Automation samples could help you build it for them.

Guy

Comments

Anonymous

October 17, 2012

Very cool post! Love that the example is easy to follow and has real value.

Anonymous

August 31, 2014

Guy, awesome as usual! I'm in the same situation with my app in terms of finding clickable elements using invoke (both legacy accessible and the current invoke pattern). I have had to resort to sendmessage, mouse-event, and the one that replaced mouse_event (I forget the name). Is this something you have to do for the cases mentioned above where clicks did not happen?

Peter

Anonymous

September 12, 2014

This discussion has continued in the Comments section at blogs.msdn.com/.../10423505.aspx.

Anonymous

May 04, 2016

Hey Guy. Awesome tutorial - exactly what I was looking for. Do you have the code sample for this? I'm building an assistive technology app that's very similar but I'm having trouble with the overlay. Thanks! :)

Anonymous

May 05, 2016

Hi Jack, thanks for the feedback on the post. If I had my act together, I'd upload the whole sample to github. But it seems I never get my act together, so it would probably be quickest for me to just upload whatever code is useful to this blog. I have to say that it's been a few years since I worked on this sample, so it could take me a few days to dig it up. All being well I'll find it and sometime next week I can upload whichever bits of it you're interested in. Is it only the transparent window showing the numbers that you're interested in? On a side note, I had some fun recently building another tool which has a mostly-transparent window, and this time it was in C#. (Detailed at https://blogs.msdn.microsoft.com/winuiautomation/2016/03/28/a-real-world-example-of-quickly-building-a-simple-assistive-technology-app-with-windows-ui-automation.)

Guy
