“Partitioned” Cluster Networks

In a Failover Cluster, the quorum model uses votes to determine which subset of nodes survives when one group of nodes loses connectivity with another group of nodes in the same cluster.

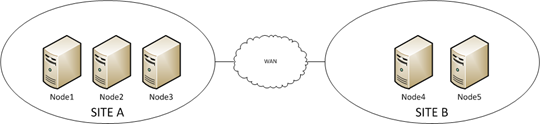

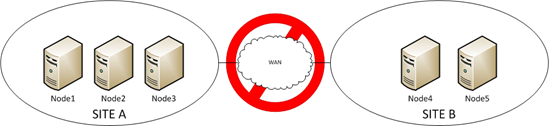

In this example, we’re using a ‘Node Majority’ quorum model and two sites separated by a WAN link.

Each node in the cluster gets one vote. So, if there’s ever a break in the WAN link between the two sites, nodes 1, 2, and 3 can still talk to each other and nodes 4 and 5 can still talk to each other.

However, site B has only two votes, which is not a majority, so the cluster service shuts down on nodes 4 and 5 to prevent site B from taking over the cluster.

Site A has three of the five votes, which is more than half the votes in the cluster, so the nodes in site A stay running and site A remains functional.
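The vote arithmetic above can be sketched in a few lines of Python. This is only an illustration of the Node Majority rule as described here, not the Cluster service's actual implementation; the function names `majority_threshold` and `has_quorum` are hypothetical.

```python
def majority_threshold(total_votes: int) -> int:
    """Smallest number of votes that is strictly more than half of the total."""
    return total_votes // 2 + 1


def has_quorum(partition_votes: int, total_votes: int) -> bool:
    """True when a partition holds a strict majority of the cluster's votes."""
    return partition_votes >= majority_threshold(total_votes)


# Five-node cluster from the example: site A has nodes 1-3, site B has nodes 4-5.
total = 5
site_a_votes = 3
site_b_votes = 2

print(majority_threshold(total))        # -> 3
print(has_quorum(site_a_votes, total))  # -> True
print(has_quorum(site_b_votes, total))  # -> False
```

With an odd number of votes, a two-way split always leaves one side with a majority, so exactly one side stays up.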

This is a very common configuration of clusters that are designed for site fault tolerance where the sites are separated by some geographic distance.

But what if there are cluster nodes in more than two sites? How does the cluster handle inter-site communication failures at that point?

Let’s take a look at an example where I have three sites, each with one node of a three-node cluster.

In this configuration, each site has connectivity with every other site. What happens when, for instance, WAN3 between sites B and C goes down?

To understand how the cluster recovers, it’s important to understand that the cluster requires that EVERY node of the cluster have connectivity to EVERY OTHER node in the cluster. In the above scenario, where sites B and C lose connectivity, we have what’s called a ‘partitioned network’. Sites B and A can still communicate, and sites A and C can still communicate, but we don’t have a cluster with full connectivity, so the cluster needs to take recovery action.

The recovery action that the cluster takes is to determine the best surviving subset of nodes that exists AND still has full connectivity with each other. The cluster then trims out any node that is not part of that eventual ‘subset’ of nodes.
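To make the idea of a fully connected subset concrete, here is a small Python sketch that models the three-site example as a graph and lists the largest groups of nodes in which every node still has a direct link to every other node. The `links` set and the function names are assumptions made for illustration; the Cluster service's real membership algorithm is not shown in this post.

```python
from itertools import combinations


def fully_connected(group, links):
    """True if every pair of nodes in the group has a direct link."""
    return all(frozenset(pair) in links for pair in combinations(group, 2))


def largest_full_mesh_subsets(nodes, links):
    """Return every largest group of nodes that still has full connectivity."""
    for size in range(len(nodes), 0, -1):
        found = [set(group) for group in combinations(sorted(nodes), size)
                 if fully_connected(group, links)]
        if found:
            return found
    return []


nodes = {"A", "B", "C"}
# WAN3 (B <-> C) is down, so only the A-B and A-C links remain.
links = {frozenset({"A", "B"}), frozenset({"A", "C"})}

# Yields two candidate memberships: (A and B) and (A and C).
print(largest_full_mesh_subsets(nodes, links))
```

The brute-force search over combinations is fine for a handful of nodes and keeps the sketch short; it is not meant to reflect how the product performs the search.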

In the above diagram, the remaining combinations of nodes that still have full connectivity with each other are (A and B) and (A and C). Both possible outcomes have an equal number of votes (2). When the number of votes is equal among the proposed new cluster memberships, we use the node ID of each node to determine who lives and who dies. Let’s say the node ID of the node in site A is 1, site B is 2, and site C is 3.

FYI: You can determine node IDs by running the following from a command prompt on any node of the cluster:

c:\>cluster node

or the PowerShell cmdlet

PS> Get-ClusterNode | fl *

Examples:

PowerShell

Cluster : ClusterName

State : Up

Name : NodeName-Node1

NodeName : NodeName-Node1

NodeHighestVersion : 400817

NodeLowestVersion : 400817

MajorVersion : 6

MinorVersion : 1

BuildNumber : 7601

CSDVersion : Service Pack 1

NodeInstanceID : 00000000-0000-0000-0000-000000000001

Description :

Id : 00000000-0000-0000-0000-000000000001

Cluster : ClusterName

State : Up

Name : NodeName-Node2

NodeName : NodeName-Node2

NodeHighestVersion : 400817

NodeLowestVersion : 400817

MajorVersion : 6

MinorVersion : 1

BuildNumber : 7601

CSDVersion : Service Pack 1

NodeInstanceID : 00000000-0000-0000-0000-000000000002

Description :

Id : 00000000-0000-0000-0000-000000000002

Command Line

Listing status for all available nodes:

| Node | Node ID | Status |
| --- | --- | --- |
| NodeName-Node1 | 1 | Up |
| NodeName-Node2 | 2 | Up |

Out of our two possible remaining subsets of nodes, (A and B) and (A and C), we can see that A is a member of both proposed partitions, so A stays running. Out of the remaining nodes (B and C), we trim the node with the lowest node ID out of the cluster (node B, with an ID of 2).
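The tie-break just described can be written down as a short Python sketch, using the node IDs assumed earlier (A is 1, B is 2, C is 3). It encodes only the rule as stated in this post for two equally sized candidates; the function name `resolve_tie` is hypothetical, and how the cluster behaves with more nodes per site is not covered here.

```python
def resolve_tie(candidates, node_ids):
    """Pick the surviving subset among equally sized candidate memberships.

    Follows the rule as described in the post: nodes present in every
    candidate stay up, and among the contested nodes the one with the
    lowest node ID is trimmed. Assumes at least two distinct candidates.
    """
    common = set.intersection(*candidates)
    contested = set.union(*candidates) - common
    trimmed = min(contested, key=lambda node: node_ids[node])
    survivors = next(c for c in candidates if trimmed not in c)
    return survivors, trimmed


node_ids = {"A": 1, "B": 2, "C": 3}
candidates = [{"A", "B"}, {"A", "C"}]

survivors, trimmed = resolve_tie(candidates, node_ids)
print(sorted(survivors), trimmed)  # -> ['A', 'C'] B
```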

The event ID that you would see associated with node B getting removed from the cluster membership is:

EventID: 1135

Source: Microsoft-Windows-FailoverClustering

Description: Cluster node '<node name>' was removed from the active failover cluster membership. The Cluster service on this node may have stopped. This could also be due to the node having lost communication with other active nodes in the failover cluster. Run the Validate a Configuration wizard to check your network configuration. If the condition persists, check for hardware or software errors related to the network adapters on this node. Also check for failures in any other network components to which the node is connected such as hubs, switches, or bridges.

The event ID you would see on the node that got kicked out of the cluster is:

EventID: 1006

Source: Microsoft-Windows-FailoverClustering

Description: Cluster service was halted due to incomplete connectivity with other cluster nodes.

The ultimate problem to be resolved in this scenario is the WAN connectivity. Hopefully this blog will help you understand the root cause of cluster nodes dropping out of the cluster when the network becomes partitioned.

Jeff Hughes

Senior Support Escalation Engineer

Microsoft Enterprise Platforms Support

Comments

- Anonymous

January 01, 2003

@Jack Cluster doesn't route intracluster communications through other nodes.

- Anonymous

January 01, 2003

@Ginger

When there is a partition in a network, it is going to depend on the connectivity between the nodes. So let's say there are two networks running between 3 nodes. NetworkB drops between Node2 and Node3 while NetworkA remains up. In this case, NetworkB will show as partitioned because it has a connection between several but not all nodes. Since NetworkA remains up and has connectivity between all nodes, no nodes drop out of membership.

- Anonymous

September 26, 2011

What if Nodes B and C can STILL communicate via the A Site? In this case you don't lose quorum?

- Anonymous

April 20, 2013

What happens if it's 2 nodes on the same LAN? We have our CSV, cluster, and Management networks in a team, and we are using a Hyper-V switch and then virtual adapters. Today we all of a sudden started getting errors about the CSV being partitioned.

- Anonymous

December 16, 2013

I know this is an old post but... What if there was another node at Site C with a node ID of 4 and another node at Site B with a node ID of 5?

And say the node IDs in Site B are 2 and 5 and the node IDs in Site C are 3 and 4. How would it trim out the nodes?

- Anonymous

April 28, 2015

I still don't know what a partitioned network is, and in my case I never see a node being removed from cluster membership.

- Anonymous

November 07, 2015

Would a FSW prevent a failover to node A because node B was still locking the file? I had it happen to me where I was not able to access the cluster until node B was rebooted. I guess I could have forced quorum, but a reboot solved it.