Managing data motion during your deployments (Part 2)

This post finishes off a 2 part post. Sorry about the delay getting this second part out. I went on a vacation back in May and I am just now getting caught up.

In Part 1 we reviewed how to use the pre and post deployment scripts to manage data motion required to deploy some schema changes. In Part 2 we will look into how to implement your data motion scripts to only run when necessary.

You typically will not want your data motion scripts to run on every deployment. There could be various reasons including: the data motion is a one-time upgrade; a script may only be appropriate for a specific target; the script may only be applicable for a specific version of a database schema, etc. Scripts in the pre and post deployment scripts of VSTS: DB are retained in the project after deployment and are checked into SCC code control. These scripts continue to be a part of the project unless the developer removes them from the code base. If you are maintaining multiple versions of an application you would want to keep these scripts to enable upgrade of earlier versions of the database schema. Also, data motion scripts, while usually not reusable across versions of a schema, can be useful as a template if similar or same objects change in later versions of the database schema.

To enable scripts to only run when needed requires the developer to choose a strategy of how to detect when scripts are needed. There are many out there, but there are a few that are common with others being a variant of these:

· Expected schema conditions;

· Database version history table;

· Database version stamp;

· and combinations of the previous.

At the heart of these strategies is the goal to make data motion scripts be idempotent or simply re--runnable. To keep these scripts in the project and a part of every database deployment they must be re-runnable. If you have scripts that are one-shot DDL/DML scripts I would still enable them to be re-runnable since you will likely run the script multiple times during testing of trial deployments and database upgrade tests. You may not choose to keep all scripts around for the life of the project in the latest source, but experience has taught me that a little up front work to enable scripts to be re-runnable will pay dividends before the end of the project.

Expected schema conditions is probably the most common strategy and most seasoned database developers include this as a standard part of their DDL scripts. It’s just good practice and improves the reusability of scripts and achieves re-runnability. This strategy is checking for an expected state of schema. If schema is in an expected state the script runs. If the state of the schema is not in the expected state the script does nothing. I have sometimes heard database developers call these scripts non-destructible or non-invasive. I would steer clear of that terminology when talking about databases (especially when speaking to customers :)). Using our example from Part 1 here is the pre-deployment data motion script that employs the Expected Schema Condition:

Note: You would need to make similar modifications in the post deployment script, but instead of looking for the existence of the column you check to see the xxxDATAMOTOxxxCustomersTable exists .

PRINT 'STARTING PREDEPLOYMENT SCRIPT...'

SET NOCOUNT ON

--Add IsKeyCustomer Column to Customer Table. Required version 2->3 upgrade.

IF NOT EXISTS(

SELECT 1 FROM [sys].[syscolumns]

WHERE [sys].[syscolumns].[id] = OBJECT_ID('[Customer].[Customers]')

AND [sys].[syscolumns].[name] = 'IsKeyCustomer')

BEGIN

PRINT 'STARTING ADDIsKeyCustomer - PREDATAMOTO_CUSTOMERS_TABLE'

…

Database version history tables are pretty common as well. They became very common when it showed up as a part of the Adventure Works sample database SQL Server shipped back in 2000 (dbo.AWBuildVersion). The Database Version History strategy is an approach you use to record that a script has run or the database schema is of a particular version. This approach typically includes a table that is persisted as a part of the maintained database schema and upgrade scripts that will query this table to determine if they need to run. When they do run they update the table with a new version or add a record that the script has been ran. These tables are usually very simple including only the bare essentials. Here is an example of a Database Version History Table.

CREATE TABLE [dbo].[DBBuildVersion](

[Id] [tinyint] IDENTITY(1,1) NOT NULL

CONSTRAINT PK_Id PRIMARY KEY,

[DBversion] [NVARCHAR](25) NOT NULL,

[VersionDate] [DATETIME] NOT NULL

CONSTRAINT DF_VersionDate DEFAULT GETDATE())

And here is our example using the database version history table:

PRINT 'STARTING PREDEPLOYMENT SCRIPT...'

SET NOCOUNT ON

--Add IsKeyCustomer Column to Customer Table. Required version 2->3 upgrade.

SELECT 1 FROM [dbo].[DBBuildVersion]

WHERE [VersionDate] =

(SELECT MAX([VersionDate]) FROM [dbo].[DBBuildVersion])

AND RTRIM(LTRIM([DBVersion])) = '2'

BEGIN

PRINT 'STARTING ADDIsKeyCustomer - PREDATAMOTO_CUSTOMERS_TABLE'

…

At the end of your deployment, in the last statement of your post deployment script, you add the new version to the DBBuildVersion table.

INSERT [dbo].[DBBuildVersion](DBversion)VALUES(3)

GO

Database version stamping is not very common, but is gaining popularity. This strategy includes objects as a part of the database schema that indicates the version of the database schema. Like the database version history table, the version stamp indicates the current version of the database schema, but instead of the version being persisted as data, it is persisted as an object. The object can be a Stored Procedure that returns the version of the database from a literal, a View that provides version history from a SELECT statement of literals, CLR object that reads information from registry, Extended Properties of the database or database objects. There are many ways you can implement this approach. This approach typically includes an object that will provide the current version of the database, maintained as a part of the database schema, and upgrade scripts that will query this version to determine if they need to run. Unlike the database version history table they do not persist any data to the database once an upgrade script has ran. Here is an example of the database version stamp using an Extended Property.

Added CustomerDB.extendedproperties.sql to project which contains:

EXEC sp_addextendedproperty @name='DBBuildVersion',

@value ='3',

@level0type = NULL,

@level0name = NULL,

@level1type = NULL,

@level1name = NULL,

@level2type = NULL,

@level2name = NULL

See how I specified ‘3’ as the value. Deployment will actually update the extended property with what is defined in the project during deployment if you are not ignoring extended properties. It’s important to understand this since by the time the post deployment script executes the version will be what is defined in the project and not the original version value in the database. This means that in your post deployment script you use the state changed by the pre-deployment script to determine if your post deployment action needs to run. This is actually a good thing since the post deployment action is dependent on the pre deployment action. Alternatively, you can manage the extended property and its value in the pre/post deployment scripts and have the targets original version value around until the very end. I have another post that coming that uses an approach where the version value actually comes from the build process and not the project.

And here is our example using the database version extended property:

PRINT 'STARTING PREDEPLOYMENT SCRIPT...'

SET NOCOUNT ON

--Required for version 2->3 upgrade

IF EXISTS(

SELECT 1 FROM sys.extended_properties

WHERE class_desc = 'DATABASE'

AND name = 'DBBuildVersion'

AND (value = '2' OR value ='1'))

BEGIN

PRINT 'STARTING ADDIsKeyCustomer - PREDATAMOTO_CUSTOMERS_TABLE'

…

BTW, I know the table value function fn_listextendedproperty exists. I prefer to use the sys.extended_properties table. I am hoping the SQL Team adds T-SQL support for extended properties eventually. When I think about it the following just feels right.

CREATE PROPERTY 'DBBuildVersion' ON DATABASE WITH VALUE '?’

GO

SELECT * FROM DATABASE.PROPERTIES

GO

UPDATE DATABASE.PROPERTIES SET DBBuildVersion = ’3’

GO

Back to topic…

I have seen many customer implementations of data motion and upgrade scripts. There are reasons why one strategy may be more appealing than another and each affords some benefits that the others do not. I believe the strategies are most effective in combinations. I often recommend customers consider the Expected Schema and Database Version Stamp strategies combination. These 2 approaches work very well for teams using source code control and the database version is managed at design time. This combination also does not rely on the existence of data which may or may not be there for a database target or might get stepped on by database users or other code running against the database. The last thing you want is the database history table to get tampered with and scripts run when you did not intend them to. However, a database version history table can be useful for many things, but it should not be what ultimately controls the execution of your data motion or upgrade scripts. Using a persisted data to control execution actually will complicate your data motion scripts as you have to account for the non-existence of data.

Now, let’s update our example to use the Database Version Stamp and Expected Schema Conditions. First we will create our Extended Property in version 2 of the database schema hosted on my sandbox database instance.

EXEC sp_addextendedproperty @name='DBBuildVersion',

@value ='2',

@level0type = NULL,

@level0name = NULL,

@level1type = NULL,

@level1name = NULL,

@level2type = NULL,

@level2name = NULL

GO

I also add the Extended Property to the database project like so:

[CustomerDB.extendedproperties.sql]

EXEC sp_addextendedproperty @name='DBBuildVersion',

@value ='3',

@level0type = NULL,

@level0name = NULL,

@level1type = NULL,

@level1name = NULL,

@level2type = NULL,

@level2name = NULL

GO

I then update the pre and post deployment scripts to include expected schema conditions and version checks. In the pre-deployment script I move the version 3 upgrade into a new file in a sub-directory and include the file in the pre-deployment. These look like this:

[Script.PreDeployment.sql]

PRINT 'STARTING PREDEPLOYMENT SCRIPT...'

:r .\DataMotionUpgrades\Version3Upgrade.sql

PRINT 'PREDEPLOYMENT SCRIPT COMPLETE'

[.\Scripts\Pre-Deployment\DataMotionUpgrades\Version3Upgrade.sql]

--Version 3 Pre-Data Motion requred to upgrade version 1-2

IF EXISTS(

SELECT 1 FROM sys.extended_properties

WHERE class_desc = 'DATABASE'

AND name = 'DBBuildVersion'

AND (value = '2' OR value ='1'))

BEGIN

PRINT 'STARTING ADDIsKeyCustomer - PREDATAMOTO_CUSTOMERS_TABLE'

SET NOCOUNT ON

IF NOT EXISTS(

SELECT 1 FROM [sys].[syscolumns]

WHERE [sys].[syscolumns].[id] = OBJECT_ID('[Customer].[Customers]')

AND [sys].[syscolumns].[name] = 'IsKeyCustomer')

AND EXISTS(

SELECT * FROM [sys].[tables]

WHERE object_id = OBJECT_ID('[Customer].[Customers]'))

BEGIN

IF (SELECT COUNT(*) FROM [Customer].[Customers]) > 0

BEGIN

BEGIN TRY

BEGIN TRAN PREDATAMOTO_CUSTOMERS_TABLE

PRINT 'BACKING UP [Customer].[Customers]'

SELECT [Id],[FName],[LName],[Email],[Created],[Updated],[Deleted]

INTO [dbo].[v3xDATAMOTOxxxCustomers]

FROM [Customer].[Customers]

PRINT 'DELETING [Customer].[Customers]'

ALTER TABLE [Accounting].[CustomerInvoices] NOCHECK CONSTRAINT [FK_CustomerInvoices_Customer];

DELETE [Customer].[Customers]

COMMIT TRAN PREDATAMOTO_CUSTOMERS_TABLE;

END TRY

BEGIN CATCH

ROLLBACK TRAN PREDATAMOTO_CUSTOMERS_TABLE;

PRINT 'PREDATAMOTO_CUSTOMERS_TABLE TRANSACTION ROLLED BACK'

PRINT CAST(ERROR_NUMBER() AS CHAR(5)) + ERROR_MESSAGE()

END CATCH

END

ELSE

PRINT 'NO RECORDS TO WORRY ABOUT!!'

END

ELSE

PRINT 'WARNING!!!!!: SCHEMA IS AN UNEXPECTED STATE FOR THIS UPGRADE SCRIPT AND DATABASE VERSION'

SET NOCOUNT OFF

END

[Script.PostDeployment.sql]

PRINT 'STARTING POSTDEPLOYMENT SCRIPT...'

:r .\DataMotionUpgrades\Version3Upgrade.sql

PRINT 'POSTDEPLOYMENT SCRIPT COMLETE'

[.\Scripts\Post-Deployment\DataMotionUpgrades\Version3Upgrade.sql]

--Version 3 Pre-Data Motion requred to upgrade version 1-2

SET NOCOUNT ON

IF EXISTS(

SELECT 1 FROM sys.extended_properties

WHERE class_desc = 'DATABASE'

AND name = 'DBBuildVersion'

AND value ='3')

BEGIN

IF EXISTS(

SELECT * FROM [sys].[tables]

WHERE object_id = OBJECT_ID('[dbo].[v3xDATAMOTOxxxCustomers]'))

BEGIN

BEGIN TRAN POSTDATAMOTO_CUSTOMERS_TABLE;

BEGIN TRY

PRINT 'RESTORING [Customer].[Customers]'

IF (SELECT COUNT(*) FROM [dbo].[v3xDATAMOTOxxxCustomers]) > 0

BEGIN

SET IDENTITY_INSERT [Customer].[Customers] ON

INSERT [Customer].[Customers]

([Id],[FName],[LName],[Email],[IsKeyCustomer],[Created],[Updated],[Deleted])

SELECT

[Id],

[FName],

[LName],

[Email],

CASE

WHEN EXISTS

(SELECT SUM(InvoiceTotal) FROM [Accounting].[CustomerInvoices]

WHERE [Accounting].[CustomerInvoices].[CustomerId] =

[dbo].[v3xDATAMOTOxxxCustomers].[Id]

HAVING SUM(InvoiceTotal) >= 500000)

THEN 1

ELSE 0

END,

[Created],

[Updated],

[Deleted]

FROM [dbo].[v3xDATAMOTOxxxCustomers]

ORDER BY [dbo].[v3xDATAMOTOxxxCustomers].[Id]

SET IDENTITY_INSERT [Customer].[Customers] OFF

END

ELSE

BEGIN

PRINT 'WARNING!!!!!: SCHEMA IS AN UNEXPECTED STATE FOR THIS UPGRADE SCRIPT'

END

PRINT 'DROPPING [dbo].[v3xDATAMOTOxxxCustomers]'

DROP TABLE [dbo].[v3xDATAMOTOxxxCustomers]

COMMIT TRAN POSTDATAMOTO_CUSTOMERS_TABLE;

END TRY

BEGIN CATCH

ROLLBACK TRAN POSTDATAMOTO_CUSTOMERS_TABLE;

PRINT 'POSTDATAMOTO_CUSTOMERS_TABLE TRANSACTION ROLLED BACK'

PRINT CAST(ERROR_NUMBER() AS CHAR(5)) + ERROR_MESSAGE()

END CATCH

END

END

SET NOCOUNT OFF

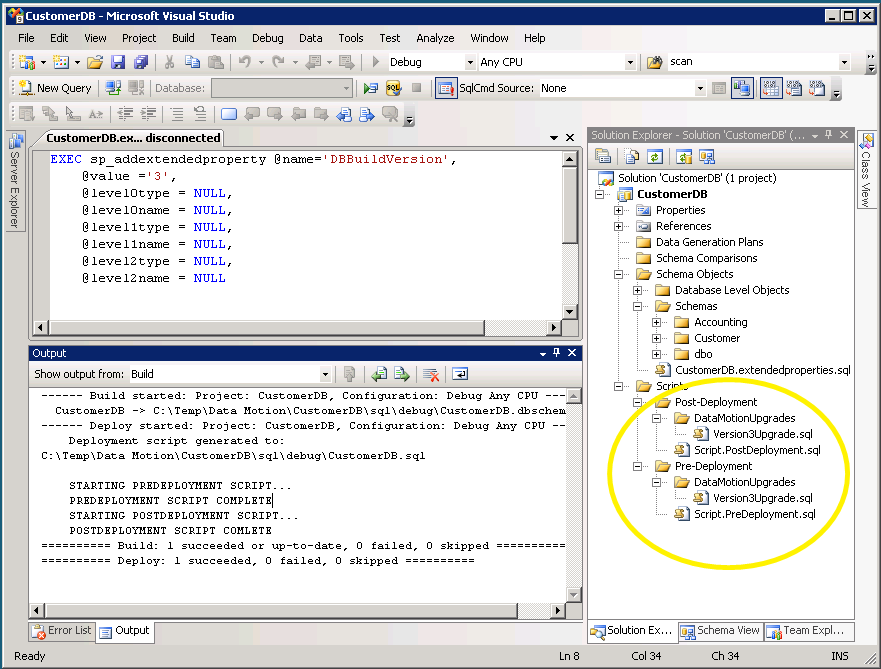

Here is what the project system looks like now with these new pre and post deployment files:

Ok, I am ready now to test my updated data motion against a previous version of the database. To do this I pull previous version of the database project from source code control or from build drops if you have them around. I do this for both version 2 and version 3 of the schema as described in a previous post. I then deploy my latest database project against the previous versions of the database. Here is the output from both deployments.

Deployment output of latest and greatest against version 2. Our data motion runs…just what we want.

------ Deploy started: Project: CustomerDB, Configuration: Debug Any CPU ------

CustomerDB.dbschema(0,0)Warning TSD01269: The column [Customer].[Customers].[IsKeyCustomer] on table [Customer].[Customers] must be added, but the column has no default value and does not allow NULL values. If the table contains data, the ALTER script will not work. To avoid this issue, you must add a default value to the column or mark it as allowing NULL values.

Deployment script generated to:

C:\Temp\Data Motion\CustomerDB\sql\debug\CustomerDB.sql

STARTING PREDEPLOYMENT SCRIPT...

STARTING ADDIsKeyCustomer - PREDATAMOTO_CUSTOMERS_TABLE

BACKING UP [Customer].[Customers]

DELETING [Customer].[Customers]

PREDEPLOYMENT SCRIPT COMPLETE

Dropping DBBuildVersion...

Starting rebuilding table Customer.Customers...

Caution: Changing any part of an object name could break scripts and stored procedures.

Caution: Changing any part of an object name could break scripts and stored procedures.

Creating DBBuildVersion...

STARTING POSTDEPLOYMENT SCRIPT...

RESTORING [Customer].[Customers]

DROPPING [dbo].[v3xDATAMOTOxxxCustomers]

POSTDEPLOYMENT SCRIPT COMLETE

========== Build: 1 succeeded or up-to-date, 0 failed, 0 skipped ==========

========== Deploy: 1 succeeded, 0 failed, 0 skipped ==========

Deployment output against version 3. No Mods. Again, just what we want.

------ Deploy started: Project: CustomerDB, Configuration: Debug Any CPU ------

Deployment script generated to:

C:\Temp\Data Motion\CustomerDB\sql\debug\CustomerDB.sql

STARTING PREDEPLOYMENT SCRIPT...

PREDEPLOYMENT SCRIPT COMPLETE

STARTING POSTDEPLOYMENT SCRIPT...

POSTDEPLOYMENT SCRIPT COMLETE

========== Build: 1 succeeded or up-to-date, 0 failed, 0 skipped ==========

========== Deploy: 1 succeeded, 0 failed, 0 skipped ==========

Finally, I also need to test a clean deployment so I deploy to a new target. Here is the output.

------ Deploy started: Project: CustomerDB, Configuration: Debug Any CPU ------

Deployment script generated to:

C:\Temp\Data Motion\CustomerDB\sql\debug\CustomerDB.sql

Creating CustomerDB...

STARTING PREDEPLOYMENT SCRIPT...

PREDEPLOYMENT SCRIPT COMPLETE

Creating Accounting...

Creating Accounting.CustomerInvoices...

Creating Customer...

Creating Customer.Customers...

STARTING POSTDEPLOYMENT SCRIPT...

POSTDEPLOYMENT SCRIPT COMLETE

========== Build: 1 succeeded or up-to-date, 0 failed, 0 skipped ==========

========== Deploy: 1 succeeded, 0 failed, 0 skipped ==========

As you can see, managing data motion takes up front planning and effort, but provides many benefits. You can now deploy with more confidence and can keep your version upgrades scripts as a part of the latest source. Deploying to earlier versions of your database is now a breeze.

One thing I want to point out is that this is a very simple example. More complicated schema changes against more sophisticated schemas work with the same approaches, but will take additional planning and consideration. I have gotten a lot of feedback and questions on the previous posts. One question that I have gotten a few times is how to manage data motion when your schema changes involve tables with constraints between them and the modifications are to all tables within these dependencies. This is where advance planning pays off. For these types of deployments these approaches work just as well, but you will have more work to do upfront as the number of data motion and dependencies to manage during deployment increases.

In my next post I will show you how to setup your version stamp so that you can increment the version from a build process.

Comments

- Anonymous

July 08, 2009

Awesome info - just what I was looking for. - Anonymous

August 18, 2009

The comment has been removed - Anonymous

June 13, 2011

Thanks for the articles, they helped me understand database projects much better.Unfortunately I have hit a snag which I was hoping that you would be able to help me with.I need my predeploy script to do something similar to the following (I will be replacing the select with inserts and updates). Unfortunately it causes a compile time error because the column does not exist when it compiles the query.IF EXISTS (SELECT * FROM INFORMATION_SCHEMA.COLUMNS WHERE TABLE_NAME = 'Customer' AND COLUMN_NAME = 'Title')begin select id, title from customerendInterestingly this does not happen when tables do not exist, only columns.This issue seems to undermine the whole purpose of being able to have a freeflowing schema between versions.The only way I can think of around this (and the same idea everyone has on forums) is to run the select as dynamic SQL, which is far from ideal.I am sure there is a better way and was hoping that you might be able to help? - Anonymous

June 26, 2011

Hi Steve,The pre and post deployment scripts are not checked against the schema defined the project. They always run unvalidated for the existence of objects perspective. You are on the right track with the IF EXISTs check you have, Are you saying it fails when you deploy your project to the database?Thanks. - Anonymous

June 26, 2011

Steve,Check the Build Action property of your script. if it is set to 'Build' then its wrong.If your script is te "root" of your pre deployment stuff (i.e. Script.preDeployment.sql) then Build Action should be 'PreDeploy'. if your script is a script that is called from Script.preDeployment.sql using the :r syntax then Build Action should be 'Not in Build'.RegardsJamie - Anonymous

February 10, 2014

The reason I prefer to use a table for storing the database version instead of a stored procedure or extended property is that you can also store a date with it, so you know when it was done, and also the incremental steps that were applied along the way (or accidentally missed). Just having one number on a large database isn't enough... - Anonymous

February 12, 2014

Thank you! It's very helpful!