Making data ingestion through the HTTP Data Collector API automatic using Operations Manager.

Hi folks,

Here I am again. This is my second post on the HTTP Data Collector API, this time focusing on how to make data ingestion automatic.

Several methods are available. Depending on your needs and environment, you could use:

- Azure Automation

- Azure Automation with Hybrid Worker roles

- Windows Task Scheduler

- System Center Operations Manager (SCOM)

Background:

I implemented a script to ingest data using the HTTP Data Collector API. The data populates a custom Log Analytics field that maps to the Organizational Unit (OU) a given computer belongs to.

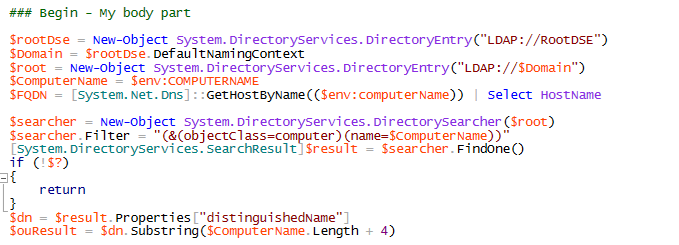

In the example below, you see a part of the script which is used to retrieve and push the OU information:

Later, I want to use this data to create computer groups in Azure Log Analytics based on the OU. Because Azure Log Analytics applies a data retention policy, I need to ingest this data on a regular schedule, say once per day.
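To make the retrieve-and-push idea more concrete, here is a minimal Python sketch. It is not the PowerShell script from this post. The function names, the `ComputerOU` log type and the record field names are hypothetical and chosen only for illustration. The endpoint, the `api-version` value and the SharedKey signature format follow the public HTTP Data Collector API documentation. The sketch only builds the request and leaves the actual HTTP POST to the caller.

```python
import base64
import hashlib
import hmac
import json
from email.utils import formatdate


def ou_from_distinguished_name(dn: str) -> str:
    """Return the container path of a computer DN (everything after the CN)."""
    # Naive split: assumes the computer name contains no escaped commas.
    # For a computer in the default container this returns 'CN=Computers,...'.
    _, _, parent = dn.partition(",")
    return parent


def build_signature(workspace_id: str, shared_key: str, date: str, content_length: int) -> str:
    """Build the SharedKey Authorization header value for a JSON POST to /api/logs."""
    string_to_sign = (
        f"POST\n{content_length}\napplication/json\nx-ms-date:{date}\n/api/logs"
    )
    key = base64.b64decode(shared_key)  # the workspace key is base64-encoded
    digest = hmac.new(key, string_to_sign.encode("utf-8"), hashlib.sha256).digest()
    return f"SharedKey {workspace_id}:{base64.b64encode(digest).decode('ascii')}"


def build_request(workspace_id: str, shared_key: str, log_type: str, records: list):
    """Return (url, headers, body) ready to be sent with any HTTP client."""
    body = json.dumps(records).encode("utf-8")
    date = formatdate(usegmt=True)  # RFC 1123 date, e.g. 'Mon, 01 Jan 2018 00:00:00 GMT'
    url = f"https://{workspace_id}.ods.opinsights.azure.com/api/logs?api-version=2016-04-01"
    headers = {
        "Content-Type": "application/json",
        "Authorization": build_signature(workspace_id, shared_key, date, len(body)),
        "Log-Type": log_type,  # hypothetical custom log name
        "x-ms-date": date,
    }
    return url, headers, body
```

A caller would pass one record per computer, for example `{"Computer": "SRV01", "OU": ou_from_distinguished_name(dn)}`, and send the result with an HTTP client of its choice.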

As listed above, I could use the Windows Task Scheduler to create a task that executes my PowerShell script. However, since System Center Operations Manager already monitors all my computers, I would rather go with SCOM.

Implementation:

I will focus the discussion on SCOM, since the first two methods have been discussed in several other blogs and Task Scheduler is something we all know very well. Moreover, I personally do not recommend any approach that requires manual configuration or manual actions to be repeated on more than one target.

At this point the question is: How can I teach SCOM to retrieve and send data?

First, note that this approach also works for agents that have not been configured to report to Azure Log Analytics, as long as they have Internet access. Second, the "secret" ingredient is just a collection rule that runs a PowerShell script (mine or any other script of your choice), and that's it.

I will continue the discussion on the assumption that you have dealt with Management Pack authoring before. If not, you will appreciate the following links:

- Authoring for System Center 2012 - Operations Manager at https://technet.microsoft.com/en-us/library/hh457564(v=sc.12).aspx

- Authoring Tools at https://technet.microsoft.com/en-us/library/hh457569(v=sc.12).aspx

- Authoring Management Packs – the fast and easy way, using Visual Studio??? at https://blogs.technet.microsoft.com/kevinholman/2016/06/04/authoring-management-packs-the-fast-and-easy-way-using-visual-studio/

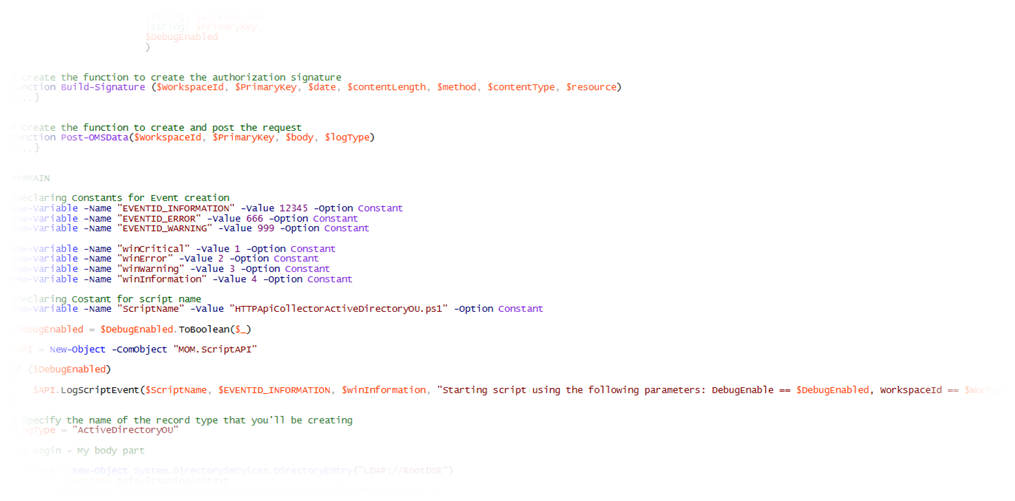

I built my data ingestion MP using a fragment from Kevin Holman's SCOM Management Pack VSAE Fragment Library. I used the Timed PowerShell Rule (identified by the Rule.TimedScript.PowerShell.WithParams.mpx file). Since it was just a Proof of Concept (PoC), I created the MP directly in an XML editor, doing a bit of copy/paste.

Before importing the sample MP, let's have a look at the key parts:

The frequency: This needs to be adjusted according to your needs, which can be done through overrides. During my tests, I set it to run once per day (86400 seconds).

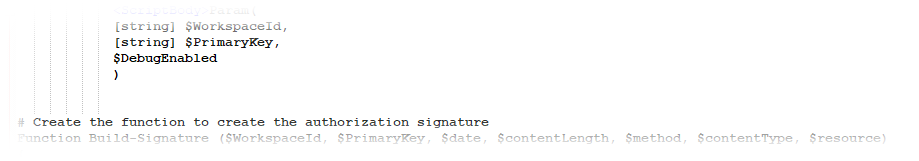

The script: I basically used the same sample script that I referenced in my previous post, with a few small adjustments to add logging and to take WorkspaceID and PrimaryKey as parameters. Of course, you can replace my sample script with any other script that better fits your needs; it's just a matter of doing it the right way (parameter order, syntax for retrieving and posting data, and so on).

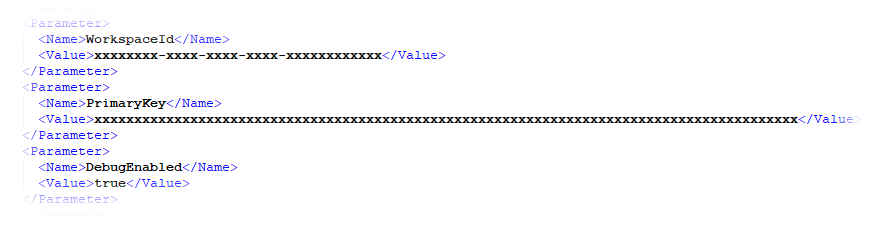

The parameters: These depend entirely on the script in use, so they are whatever your script requires. In my example, on one of the rules, I used the following:

- WorkspaceID

- PrimaryKey

- DebugEnabled

And I passed them through this way (you'll need to replace the values with your own WorkspaceID and PrimaryKey):
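The exact rule configuration is in the sample MP. Purely as an illustration of why parameter order matters on the script side, here is a small Python sketch (not the PowerShell script from this post) that accepts the same three parameters positionally. The `parse_args` helper and the `true`/`false` convention for DebugEnabled are assumptions made for this example.

```python
import argparse
import logging


def parse_args(argv=None):
    """Parse WorkspaceID, PrimaryKey and DebugEnabled, in that order."""
    parser = argparse.ArgumentParser(description="Upload custom data to Log Analytics")
    # Positional arguments: the caller must pass them in exactly this order.
    parser.add_argument("workspace_id", help="Log Analytics workspace ID")
    parser.add_argument("primary_key", help="Workspace primary key (base64)")
    parser.add_argument("debug_enabled", choices=["true", "false"],
                        help="Assumed convention: 'true' turns on verbose logging")
    args = parser.parse_args(argv)
    # Convert the string flag into a real boolean for the rest of the script.
    args.debug_enabled = args.debug_enabled == "true"
    return args


def configure_logging(debug_enabled: bool) -> int:
    """Set the root logger level from the debug flag and return the level chosen."""
    level = logging.DEBUG if debug_enabled else logging.WARNING
    logging.getLogger().setLevel(level)
    return level
```

Calling `parse_args(["<WorkspaceID>", "<PrimaryKey>", "false"])` returns an object whose `debug_enabled` attribute is `False`. Swapping the first two values would go unnoticed until authentication fails, which is why the order has to match what the script expects.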

With all that said, it should now be easier to create rules that upload data to Azure Log Analytics. In any case, I am attaching my sample MP to this post; it includes both RegistryKey-based and OU-based rules. As I normally do, the rules are disabled by default.

Lessons Learned:

- Pay close attention to the pricing tier you subscribed to, because data retention differs by tier. For instance, on the free tier the data is kept for only 7 days; configuring the script to run every 2 weeks will therefore leave you with no data during the week before the next collection runs. More info on pricing and licensing can be found at https://www.microsoft.com/en-us/cloud-platform/operations-management-suite and at https://azure.microsoft.com/en-us/pricing/ .

- Enable the rule only where needed. If you need data from more than one computer, the recommendation is to create a group and to configure the override for that group.

- Disable debug logging once you have made sure that the rules work as expected.
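The retention lesson above comes down to simple arithmetic. The Python sketch below assumes a simplified model in which a record disappears exactly `retention_days` after it was ingested. The function names are hypothetical.

```python
SECONDS_PER_DAY = 86400


def uncovered_days(retention_days: int, interval_days: int) -> int:
    """Days per cycle with no data, assuming records expire after retention_days."""
    return max(0, interval_days - retention_days)


def interval_seconds(interval_days: int) -> int:
    """Convert a collection interval in days to the seconds value a timed rule uses."""
    return interval_days * SECONDS_PER_DAY


# Free tier example from the text: 7 days of retention, collection every 14 days.
gap = uncovered_days(retention_days=7, interval_days=14)      # 7 days without data
daily_gap = uncovered_days(retention_days=7, interval_days=1)  # 0, no gap
daily_interval = interval_seconds(1)                           # 86400
```

Under this model, any interval shorter than or equal to the retention period leaves no gap, which is why a daily run is a comfortable choice.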

Thanks,

Bruno.