Content-Length and Transfer-Encoding Validation in the IE10 Download Manager

Back in March of 2011, I mentioned that we had encountered some sites and servers that were not sending proper Content-Length headers for their HTTP responses. As a result, we disabled our attempt to verify Content-Length for IE9.

Unfortunately, by April, we’d found that this accommodation had led to some confusing error experiences. Incomplete executable files were not recognized by SmartScreen’s Application Reputation feature, and other signed filetypes would show “xxxx was reported as unsafe” because WinVerifyTrust would report that the incomplete file’s signature was corrupt. This problem was very commonly reported for large files (e.g., 50 MB installers) by users in locations with spotty network access, where connections are often interrupted.

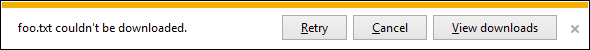

With IE10, we’ve re-enabled the Content-Length / Transfer-Encoding checks in IE’s Download Manager. If the Download Manager encounters a transfer that does not deliver the number of bytes specified by the Content-Length header, or that fails to end with the proper zero-sized chunk (when using Transfer-Encoding: chunked), the following message will be shown:

If the user clicks Retry, IE will attempt to resume (or restart) the download. In many cases of network interruption, this feature helps ensure that the user is able to download the complete file. As a compatibility accommodation, if the retried transfer again does not provide the expected number of bytes, Internet Explorer will permit the download to be treated as “finished” anyway, so that users are not blocked from interacting with buggy servers.
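The two checks and the single-retry accommodation described above can be modeled roughly as follows. This is a minimal C# sketch (top-level statements, C# 9 or later), not Internet Explorer's actual implementation: the helper names `IsTransferComplete` and `Evaluate`, their parameters, and the outcome strings are hypothetical, and the sketch assumes the caller already knows how many body bytes arrived and whether the zero-sized chunk was seen. It follows the HTTP/1.1 rule that chunked Transfer-Encoding takes precedence over any Content-Length header.

```csharp
using System;

// Hypothetical helper (not IE's code): did a finished transfer deliver the whole body?
//   contentLength - value of the Content-Length header, or null if absent
//   isChunked     - true if the response used Transfer-Encoding: chunked
//   bytesReceived - number of body bytes actually received
//   sawFinalChunk - true if the zero-sized terminating chunk arrived
static bool IsTransferComplete(long? contentLength, bool isChunked,
                               long bytesReceived, bool sawFinalChunk)
{
    // Chunked framing wins over Content-Length (RFC 2616, section 4.4).
    if (isChunked)
        return sawFinalChunk;

    // With a declared length, the byte count must match exactly.
    if (contentLength.HasValue)
        return bytesReceived == contentLength.Value;

    // No length information: the body is delimited only by the server
    // closing the connection, so there is nothing to validate against.
    return true;
}

// Hypothetical model of the retry accommodation: the first incomplete
// transfer prompts the user to retry; if the retried transfer is also
// incomplete, the download is treated as finished anyway.
static string Evaluate(bool transferComplete, bool isRetry)
{
    if (transferComplete) return "Finished";
    return isRetry ? "FinishedDespiteMismatch" : "PromptRetry";
}

// Example: a 50 MB installer whose connection dropped after 30 MB.
bool complete = IsTransferComplete(50_000_000, false, 30_000_000, false);
Console.WriteLine(Evaluate(complete, isRetry: false)); // PromptRetry
```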

For instance, one buggy pattern we've seen is a server that delivers the HTTP response body as a single chunk and then calls HttpResponse.Close() instead of the proper HttpApplication.CompleteRequest().

```csharp
// Add Excel as content type and attachment.
// (binTarget, the file name, and mStream, a MemoryStream, are defined elsewhere on the page.)
Response.ContentType = "application/vnd.ms-excel";
Response.AddHeader("Content-Disposition", "attachment; filename=" + binTarget);
mStream.Position = 0;
mStream.WriteTo(Response.OutputStream);
Response.Flush();

// BAD PATTERN: DO NOT USE.
// See https://blogs.msdn.com/b/aspnetue/archive/2010/05/25/response-end-response-close-and-how-customer-feedback-helps-us-improve-msdn-documentation.aspx
// Close() aborts the connection without sending the terminating zero-sized chunk.
Response.Close();
```

Calling Close() like this omits the final zero-sized chunk, so without the compatibility accommodation the server's output would fail in the Download Manager.
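To see why a missing final chunk is detectable on the client, here is a rough C# sketch (top-level statements, C# 9 or later) that walks a raw chunked body and reports whether it ends with the zero-sized chunk. `HasTerminatingChunk` and `IndexOfCrlf` are hypothetical helpers written for illustration; the sketch assumes the whole body is already in memory and skips chunk extensions and trailer fields without validating them.

```csharp
using System;
using System.Globalization;
using System.Text;

// Hypothetical helper: true only if a raw chunked body ends with the
// zero-sized last chunk, optional trailer fields, and a final CRLF.
static bool HasTerminatingChunk(byte[] body)
{
    int pos = 0;
    while (true)
    {
        // Each chunk begins with a hexadecimal size line ending in CRLF.
        int lineEnd = IndexOfCrlf(body, pos);
        if (lineEnd < 0) return false; // body ends inside a size line

        string sizeLine = Encoding.ASCII.GetString(body, pos, lineEnd - pos);
        int semi = sizeLine.IndexOf(';'); // drop any chunk extensions
        if (semi >= 0) sizeLine = sizeLine.Substring(0, semi);
        if (!long.TryParse(sizeLine.Trim(), NumberStyles.AllowHexSpecifier,
                           CultureInfo.InvariantCulture, out long size) || size < 0)
            return false; // malformed size line
        pos = lineEnd + 2;

        if (size == 0)
        {
            // Last chunk: skip trailer fields until the empty line that ends the message.
            while (true)
            {
                int end = IndexOfCrlf(body, pos);
                if (end < 0) return false;   // final CRLF never arrived
                if (end == pos) return true; // empty line: message is complete
                pos = end + 2;
            }
        }

        // The chunk data must be present in full and followed by CRLF.
        if (size > body.Length - pos - 2) return false; // truncated mid-chunk
        int dataEnd = pos + (int)size;
        if (body[dataEnd] != (byte)'\r' || body[dataEnd + 1] != (byte)'\n') return false;
        pos = dataEnd + 2;
    }
}

// Returns the index of the next CR LF pair at or after start, or -1.
static int IndexOfCrlf(byte[] data, int start)
{
    for (int i = start; i + 1 < data.Length; i++)
        if (data[i] == (byte)'\r' && data[i + 1] == (byte)'\n') return i;
    return -1;
}

// A properly terminated body vs. one cut off after the data chunk,
// as happens when the server aborts the connection early.
byte[] complete = Encoding.ASCII.GetBytes("5\r\nhello\r\n0\r\n\r\n");
byte[] truncated = Encoding.ASCII.GetBytes("5\r\nhello\r\n");
Console.WriteLine(HasTerminatingChunk(complete));  // True
Console.WriteLine(HasTerminatingChunk(truncated)); // False
```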

You can test how browsers handle incorrect transfer sizes using these two Meddler scripts:

-Eric

Comments

Anonymous

July 16, 2012

Thanks for posting this, Eric, but it kind of threw me for a loop and I'm left with a couple of questions. First, how does using Response.End() fit in with this? From the MSDN blog you linked, it sounds like it's not quite as bad (it doesn't just terminate the connection), but it does abort the thread and bypass the rest of the pipeline. Will that still result in wrong data being sent? I ask because in a couple of places on our website we allow downloading of files from an .aspx page. These essentially use the pattern you describe (though with TransmitFile instead of WriteTo, and Response.End instead of Close). Because of this, I started looking around for a better example of how this should be written. What I'm finding, however, is that pretty much all MS (and other) documentation follows the "wrong" pattern. For example:

support.microsoft.com/.../306654

There are also several older MSDN blogs that mention this:

blogs.msdn.com/.../excel-found-unreadable-content-in-workbook-do-you-want-to-recover-the-contents-of-this-workbook.aspx

Looking for more information about the "right" way to do this, I came across this Stack Overflow question:

stackoverflow.com/.../1087777

Unfortunately, it's still pretty vague about the best way to go about it. I realize that you can't do much about all the old stuff online, but it'd be nice to see the "right" way to send files to clients in ASP.NET. From what I can tell, it involves calling CompleteRequest() AND overriding a couple of pipeline methods to prevent them from rendering markup to the client:

- Write file contents code here

- HttpContext.Current.ApplicationInstance.CompleteRequest();

- Override RaisePostBackEvent and only call the base method if not sending a file

- Override Render and only call the base method if not sending a file

Does that sound correct? Sorry if you're the wrong person to ask -- I won't shoot the messenger, but I will inundate him with questions :)

Anonymous

July 16, 2012

@Nick: Response.Close abnormally terminates the connection and fails to send the closing chunk; this "bad" example was originally provided by the Excel Services team. I'm not sure whether Response.End behaves the same way, but it should be pretty simple to test: just hit the page in question with Fiddler running and see what's in the response. In terms of the best pattern to follow, you'd probably want to talk to some ASP.NET experts.

Anonymous

August 20, 2012

One approach I've used in the past is simply to override Page.Render so that it does nothing. So far I haven't observed any obvious problems.

Anonymous

August 08, 2013

We had a serious issue affecting over 4,000 customer visits: customers were not able to use our site and buy our $2,000 product online because of this problem in IE10. The issue is as follows. Our site referenced two non-existent JavaScript files. The Tomcat server sent a 404 to the IE10 browser but did not include a Content-Length. The net effect was that subsequent scripts included on the same page were not loaded correctly by IE10. We could see through HttpWatch that IE10 kept the TCP sockets for these 404 responses open for 184 seconds! The 404s being returned by the application are not strictly kosher, as they contain no Content-Length or Transfer-Encoding headers that would let the browser determine when the content finishes, so we are in an "indeterminate" condition, at the whim of the UA as to how it deals with it. We had to fix this by introducing an F5 rule (in our load balancers) that drops the 404 content and adds Content-Length: 0. We will also take steps to ensure that we don't have any missing content, but this is hard in these days of analytics. This only happens in IE10 and only on Windows 8.

EricLaw: Browsers tend to assume Connection: Close semantics if servers fail to specify Content-Length properly. Such behavior is unlikely to be new, nor is it likely to be unique to IE. I'd love to talk about this further; please email me. Thanks!

Anonymous

September 12, 2013

The comment has been removed

Anonymous

February 12, 2015

Firefox made a change here: daniel.haxx.se/.../tightening-firefoxs-http-framing-again

Anonymous

February 17, 2015

I'm going to guess this would also affect Excel web query CSV imports where the content length isn't supplied and the client computer's IE version is 10? Just wondering, because I have a client with this problem and we're unable to replicate it because we're all using IE 11 with otherwise identical setups.