DBA 101: Collecting and Interpreting Failover Cluster Logs

When an unexplained outage occurs on a standalone server and you need to determine root cause, you usually start by collecting and analyzing the following “artifacts”:

- Event Log (Application, System)

- SQL Error Logs

- Perfmon stats covering the timeframe of the outage (assuming they were collected, either via a counter log or an external tool such as Operations Manager)

If the outage was on a Failover Cluster, you’ll also want to grab the cluster log. How and where you retrieve the cluster log depends on the operating system:

- For Windows Server 2003, you can find the cluster log under %systemroot%\cluster (typically C:\Windows\Cluster).

- For Windows Server 2008, the overall method of event collection is via Event Tracing for Windows (ETW). The Windows Server 2003 “Cluster.log” no longer exists in Windows Server 2008. Instead, cluster events are now logged to an Event Trace Session File (.etl format).

There are a few ways to capture Failover Cluster activity in Windows Server 2008 – but for the scope of this blog post, I’ll focus on how to generate a cluster log similar to what you may have seen in Windows Server 2003. The cluster log(s) can be generated by the CLUSTER.EXE command. For example:

CLUSTER.EXE YourClusterName LOG /GEN /COPY:"C:\Temp\cluster.log"

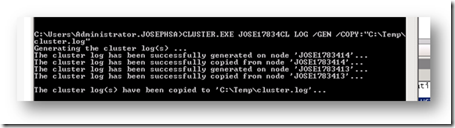

The figure below shows an example of executing this command with administrator rights on one node of a two-node Windows Server 2008 Failover Cluster:

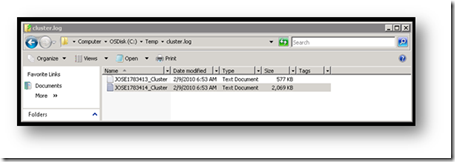

Looking in the C:\Temp directory, I see a new cluster.log folder containing two generated cluster log files (one file for each node).

For a more detailed description of cluster log generation in Windows Server 2008, along with some logging command option details, check out “How to create the cluster.log in Windows Server 2008 Failover Clustering” by Steven Ekren, Senior Program Manager of Clustering & High Availability.
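If you collect cluster logs regularly, the command above can be wrapped in a small script. The following is a minimal Python sketch, not an official tool: the function names are hypothetical, it uses only the LOG, /GEN, and /COPY options shown earlier, and it assumes CLUSTER.EXE is on the PATH and signals failure with a non-zero exit code. Destination paths containing spaces are not handled here.

```python
import subprocess


def build_cluster_log_command(cluster_name, dest_dir):
    """Return the argument list for the CLUSTER.EXE call shown in this post."""
    return ["CLUSTER.EXE", cluster_name, "LOG", "/GEN", f"/COPY:{dest_dir}"]


def generate_cluster_log(cluster_name, dest_dir):
    """Run the command. Needs administrator rights on a cluster node."""
    cmd = build_cluster_log_command(cluster_name, dest_dir)
    # check=True raises CalledProcessError on a non-zero exit code
    # (assumed here to indicate failure).
    return subprocess.run(cmd, capture_output=True, text=True, check=True)


if __name__ == "__main__":
    # Only prints the command line; call generate_cluster_log() on a real node.
    print(" ".join(build_cluster_log_command("YourClusterName", r"C:\Temp\cluster.log")))
```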

When you open a cluster log for the first time, it may strike you as both cryptic and verbose. As with reading a SQL Error Log for the first time, your comfort level and ability will grow with experience and practice. Here are a few tips to help you get the most out of your cluster log analysis:

- As with Windows event logs, you’ll want to narrow down the time period in which the Failover Cluster issue or outage is thought to have occurred.

- Timestamps are logged in GMT, not local time. Keep this in mind when narrowing down the time range of your Failover Cluster outage.

- Beware of downstream error messages (later error messages triggered by an earlier event). Try to find the first occurrence of an error or warning that preceded the event. For example, if a disk resource became unavailable, you might see an initial error regarding the disk resource, followed by several other errors from cluster resources that depend on the offline disk resource. Your task is to work your way back to the original event and not get thrown off track by downstream events (focus on the cause, not the downstream effects).

- The default cluster logging level is “3” on a scale of 1 through 5 (5 being the most verbose and highest in overhead). Level 3 logs errors, warnings, and informational details. You can find the logging level in a Windows Server 2008 Failover Cluster log by searching for the text “cluster service logging level”. For example:

00000ccc.0000ca4::2009/10/17-19:20:41.265 INFO [CS] cluster service logging level is 3

- Within the cluster log, each logged line shows its entry category after the GMT timestamp: INFO, WARN, or ERR. As with a Windows application or system event log, focus your attention on WARN and ERR entries first.

- If your issue has resulted in several node reboots, you may have lost the data needed to determine root cause. A new ETL file is generated each time a node is rebooted, and the cluster log history is kept (by default) for up to five reboots.
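Several of these tips (narrowing the time range, allowing for GMT, focusing on WARN and ERR, and finding the first occurrence) can be combined in a short script. The Python sketch below is one possible approach, not a supported tool. The function names, the 14:15 to 14:25 local outage window, and the UTC-5 offset are assumptions made up for illustration; the two log lines are the ones quoted in this post.

```python
import re
from datetime import datetime, timedelta

# Matches the GMT timestamp and the entry type, e.g. "::2009/10/17-19:20:47.093 ERR "
ENTRY_RE = re.compile(
    r"::(\d{4}/\d{2}/\d{2}-\d{2}:\d{2}:\d{2}\.\d{3})\s+(INFO|WARN|ERR)\s"
)


def local_to_gmt(local_time, utc_offset_hours):
    """Convert a naive local time to naive GMT using a fixed UTC offset."""
    return local_time - timedelta(hours=utc_offset_hours)


def problems_in_window(lines, start_gmt, end_gmt):
    """Return (timestamp, line) pairs for WARN/ERR entries in the window, oldest first."""
    hits = []
    for line in lines:
        match = ENTRY_RE.search(line)
        if not match or match.group(2) == "INFO":
            continue
        ts = datetime.strptime(match.group(1), "%Y/%m/%d-%H:%M:%S.%f")
        if start_gmt <= ts <= end_gmt:
            hits.append((ts, line.rstrip("\n")))
    hits.sort(key=lambda hit: hit[0])
    return hits


sample_lines = [
    "00000ccc.0000ca4::2009/10/17-19:20:41.265 INFO [CS] cluster service logging level is 3",
    "00000df4.00000c04::2009/10/17-19:20:47.093 ERR [RHS] RhsCall::Perform_NativeEH: "
    "ERROR_MOD_NOT_FOUND(126)' because of 'Unable to load resource DLL mqclus.dll.",
]

# Hypothetical outage window, reported in local time at UTC-5.
start = local_to_gmt(datetime(2009, 10, 17, 14, 15), -5)
end = local_to_gmt(datetime(2009, 10, 17, 14, 25), -5)

hits = problems_in_window(sample_lines, start, end)
if hits:
    # The earliest WARN/ERR in the window is the best starting point.
    print("First problem entry:", hits[0][1])
```

A fixed offset keeps the sketch simple; for a real investigation you would want to account for daylight saving time on the date of the outage.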

Here is a full description of what you can expect to see in a single log entry. The format is the same across entries, so I’ll deconstruct a single log entry below:

00000df4.00000c04::2009/10/17-19:20:47.093 ERR [RHS] RhsCall::Perform_NativeEH: ERROR_MOD_NOT_FOUND(126)' because of 'Unable to load resource DLL mqclus.dll.

- 00000df4: Process ID (in hex) of the process that logged the entry.

- 00000c04: Thread ID (in hex) of the thread that logged the entry.

- 2009/10/17-19:20:47.093: Date and timestamp in GMT.

- ERR: Entry type, which is one of informational (INFO), warning (WARN), or error (ERR).

- [RHS]: Component or Resource DLL identifier. In this example, RHS represents the Resource Hosting Subsystem, which hosts all resources in the Failover Cluster (via the rhs.exe executable). Other common components you’ll see in the log include the [NM] Network Manager, [RES] Resource DLL logging, [IM] Interface Manager, [GUM] Global Update Manager and more. From a practical standpoint, interpreting these various codes assumes a deeper understanding of the underlying Windows Failover Clustering architecture. Don’t worry too much if you do not have this background. The error message found after this identifier will likely be more helpful to you.

- RhsCall::Perform_NativeEH: ERROR_MOD_NOT_FOUND(126)' because of 'Unable to load resource DLL mqclus.dll.: Event description / error message. This can vary significantly, and it is your top “pointer” to what was happening at the specific moment of your outage or event.
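The field breakdown above maps naturally onto a regular expression. Here is a minimal Python sketch that splits one entry into those fields and converts the hex process and thread IDs to decimal, which can make them easier to match against other tools. The pattern is inferred only from the two sample entries in this post, so treat it as an assumption rather than a complete description of the format; `parse_entry` and the dictionary keys are hypothetical names.

```python
import re
from datetime import datetime

# pid.tid::timestamp LEVEL [COMPONENT] message
LINE_RE = re.compile(
    r"^(?P<pid>[0-9a-fA-F]+)\.(?P<tid>[0-9a-fA-F]+)::"
    r"(?P<ts>\d{4}/\d{2}/\d{2}-\d{2}:\d{2}:\d{2}\.\d{3})\s+"
    r"(?P<level>INFO|WARN|ERR)\s+"
    r"(?:\[(?P<component>[^\]]+)\]\s*)?"
    r"(?P<message>.*)$"
)


def parse_entry(line):
    """Split one cluster log line into its fields, or return None if it does not match."""
    match = LINE_RE.match(line.strip())
    if match is None:
        return None
    return {
        "pid": int(match.group("pid"), 16),  # hex process ID as decimal
        "tid": int(match.group("tid"), 16),  # hex thread ID as decimal
        "timestamp_gmt": datetime.strptime(match.group("ts"), "%Y/%m/%d-%H:%M:%S.%f"),
        "level": match.group("level"),
        "component": match.group("component"),
        "message": match.group("message"),
    }


entry = parse_entry(
    "00000df4.00000c04::2009/10/17-19:20:47.093 ERR [RHS] RhsCall::Perform_NativeEH: "
    "ERROR_MOD_NOT_FOUND(126)' because of 'Unable to load resource DLL mqclus.dll."
)
print(entry["pid"], entry["tid"], entry["level"], entry["component"])
# prints: 3572 3076 ERR RHS
```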

I’ll end this blog post with a summarized list of cluster log evaluation tactics:

1. Focus on the time range of the outage or issue.

2. Windows Application and System event logs can add useful information in conjunction with the cluster log.

3. Focus on ERR and WARN entry types.

4. Sometimes the error messages are intuitively written. You may not find the root cause directly, but the log can point you in the right direction. When the messages are not intuitive, see the next step.

5. Pattern match on captured error messages using Microsoft KB articles or other preferred forums. If you have purchased Microsoft support, the engineer will almost certainly want to see the cluster log you have collected.
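For step 5, it helps to boil a verbose log down to its distinct error messages before searching. The Python sketch below is one possible way to do that, under the same format assumptions as the sample entries in this post. `distinct_problems` is a hypothetical helper, and the ERR line is deliberately repeated in the sample input only to stand in for an error that recurs.

```python
import re

# Captures timestamp, WARN/ERR level, optional [COMPONENT], and the message text.
PROBLEM_RE = re.compile(
    r"::(?P<ts>\d{4}/\d{2}/\d{2}-\d{2}:\d{2}:\d{2}\.\d{3})\s+"
    r"(?P<level>WARN|ERR)\s+"
    r"(?:\[(?P<component>[^\]]+)\]\s*)?"
    r"(?P<message>.*)$"
)


def distinct_problems(lines):
    """Collapse WARN/ERR entries into distinct messages, in first-seen order."""
    seen = {}
    for line in lines:
        match = PROBLEM_RE.search(line.strip())
        if not match:
            continue  # INFO entries and non-matching lines are skipped
        key = (match.group("level"), match.group("component"), match.group("message"))
        if key not in seen:
            seen[key] = {
                "level": key[0],
                "component": key[1],
                "message": key[2],
                "first_seen_gmt": match.group("ts"),
                "count": 0,
            }
        seen[key]["count"] += 1
    return list(seen.values())


err_line = (
    "00000df4.00000c04::2009/10/17-19:20:47.093 ERR [RHS] RhsCall::Perform_NativeEH: "
    "ERROR_MOD_NOT_FOUND(126)' because of 'Unable to load resource DLL mqclus.dll."
)
info_line = "00000ccc.0000ca4::2009/10/17-19:20:41.265 INFO [CS] cluster service logging level is 3"

for problem in distinct_problems([info_line, err_line, err_line]):
    print(problem["count"], problem["level"], problem["component"], problem["message"])
```

Each distinct message text is then a ready-made search string for KB articles or forums.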

That’s all for today. Happy cluster log surfing!
