Windows Azure Host Updates: Why, When, and How

Windows Azure’s compute platform, which includes Web Roles, Worker Roles, and Virtual Machines, is based on machine virtualization. It’s the deep access to the underlying operating system that makes Windows Azure’s Platform-as-a-Service (PaaS) uniquely compatible with many existing software components, runtimes and languages. And of course, without that deep access, including the ability to bring your own operating system images, Windows Azure’s Virtual Machines couldn’t be classified as Infrastructure-as-a-Service (IaaS).

The Host OS and Host Agent

Machine virtualization of course means that your code - whether it’s deployed in a PaaS Worker Role or an IaaS Virtual Machine - executes in a Windows Server Hyper-V virtual machine. Every Windows Azure server (also called a Physical Node or Host) hosts one or more virtual machines, called “instances”, scheduling them on physical CPU cores, assigning them dedicated RAM, and granting and controlling access to local disk and network I/O.

The diagram below shows a simplified view of a server’s software architecture. The host partition (also called the root partition) runs the Server Core profile of Windows Server as the host OS. The only difference between the diagram and a standard Hyper-V architecture diagram is the presence of the Windows Azure Fabric Controller (FC) host agent (HA) in the host partition and the Guest Agents (GA) in the guest partitions. The FC is the brain of the Windows Azure compute platform and the HA is its proxy, integrating servers into the platform so that the FC can deploy, monitor and manage the virtual machines that define Windows Azure Cloud Services. Only PaaS roles have GAs, which are the FC’s proxy for providing runtime support for and monitoring the health of the roles.

Reasons for Host Updates

Ensuring that Windows Azure provides a reliable, efficient and secure platform for applications requires patching the host OS and HA with security, reliability and performance updates. As you would guess based on how often your own installations of Windows get rebooted by Windows Update, we deploy updates to the host OS approximately once per month. The HA consists of multiple subcomponents, such as the Network Agent (NA) that manages virtual machine VLANs and the Virtual Machine virtual disk driver that connects Virtual Machine disks to the blobs containing their data in Windows Azure Storage. We therefore update the HA and its subcomponents at different intervals, depending on when a fix or new functionality is ready.

The steps we can take to deploy an update depend on the type of update. For example, almost all HA-related updates apply without rebooting the server. Windows OS updates, though, almost always have at least one patch, and usually several, that necessitate a reboot. We therefore have the FC “stage” a new version of the OS, which we deploy as a VHD, on each server and then the FC instructs the HAs to reboot their servers into the new image.

PaaS Update Orchestration

A key attribute of Windows Azure is its PaaS scale-out compute model. When you use one of the stateless virtual machine types in your Cloud Service, whether Web or Worker, you can easily scale the role out and back down just by updating the instance count of the role in your Cloud Service’s configuration. The FC does all the work automatically to create new virtual machines when you scale out and to shut down virtual machines and remove them when you scale down.

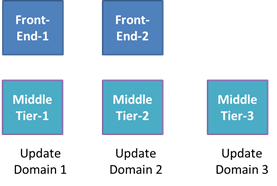

What makes Windows Azure’s scale-out model unique, though, is the fact that it makes high-availability a core part of the model. The FC defines a concept called Update Domains (UDs) that it uses to ensure a role is available throughout planned updates that cause instances to restart, whether they are updates to the role applied by the owner of the Cloud Service, like a role code update, or updates to the host that involve a server reboot, like a host OS update. The FC’s guarantee is that no planned update will cause instances from different UDs to be offline at the same time. A role has five UDs by default, though a Cloud Service can request up to 20 UDs in its service definition file. The figure below shows how the FC spreads the instances of a Cloud Service’s two roles across three UDs.
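To make the spreading concrete, here is a minimal Python sketch. It assumes a simple round-robin assignment of instances to UDs, which is an illustration and not necessarily the FC's actual allocation algorithm. The function names and the `role_IN_n` instance naming are hypothetical; only the default of five UDs and the maximum of 20 come from the text above.

```python
from collections import defaultdict

DEFAULT_UD_COUNT = 5   # default number of UDs per role, as stated above
MAX_UD_COUNT = 20      # maximum a Cloud Service can request, as stated above


def assign_update_domains(instance_count, ud_count=DEFAULT_UD_COUNT):
    """Return a list whose entry i is the UD index of instance i.

    Round-robin is an assumption made for illustration only.
    """
    if not 1 <= ud_count <= MAX_UD_COUNT:
        raise ValueError("ud_count must be between 1 and 20")
    return [i % ud_count for i in range(instance_count)]


def instances_by_ud(roles, ud_count=DEFAULT_UD_COUNT):
    """Group hypothetical instance names by UD for a {role: instance_count} mapping."""
    layout = defaultdict(list)
    for role, count in roles.items():
        for i, ud in enumerate(assign_update_domains(count, ud_count)):
            layout[ud].append(f"{role}_IN_{i}")
    return dict(layout)


if __name__ == "__main__":
    # Two roles spread across three UDs.
    layout = instances_by_ud({"Web": 4, "Worker": 3}, ud_count=3)
    for ud, members in sorted(layout.items()):
        print(f"UD {ud}: {members}")
```

With at least two instances per role, every role in this sketch ends up in at least two UDs, which is what lets a planned update proceed without taking the whole role offline.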

Role instances can call runtime APIs to determine their UD and the portal also shows the mapping of role instances to UDs. Here’s a cloud service with two roles having two instances each, so each UD has one instance from each role:

The behavior of the FC with respect to UDs differs for Cloud Service updates and host updates. When the update is one applied by a Cloud Service, the FC updates all the instances of each UD in turn. It moves to a subsequent UD only when all the instances of the previous UD have restarted and reported themselves healthy to the GA, or when the Cloud Service owner asks the FC via a service management API to move to the next UD.
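The UD-by-UD walk for a Cloud Service update can be sketched as the loop below. This is a simplified model, not the FC's implementation: `update_instance`, `is_healthy` and `owner_forces_advance` are hypothetical callbacks standing in for the real update step, the health report, and the service management API call mentioned above.

```python
def rolling_update(layout, update_instance, is_healthy,
                   owner_forces_advance=lambda ud: False):
    """Walk UDs in order, updating every instance of one UD before the next.

    layout: {ud_index: [instance names]}
    Returns the list of UDs that were updated. The walk stops after a UD
    whose instances are not all healthy, unless the owner forces an advance.
    """
    walked = []
    for ud in sorted(layout):
        for instance in layout[ud]:
            update_instance(instance)
        walked.append(ud)
        all_healthy = all(is_healthy(instance) for instance in layout[ud])
        if not (all_healthy or owner_forces_advance(ud)):
            break  # a real orchestrator would wait here rather than give up
    return walked


if __name__ == "__main__":
    layout = {0: ["Web_IN_0"], 1: ["Web_IN_1"], 2: ["Web_IN_2"]}
    print(rolling_update(layout, lambda name: None, lambda name: True))
```

The sketch returns instead of waiting when a UD is unhealthy, purely to keep the example short; the point is that instances in later UDs are never touched until the current UD is done.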

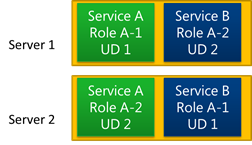

During host updates, by contrast, the FC doesn’t necessarily proceed one UD at a time: the order and number of instances of a role that get rebooted concurrently can vary. That’s because the placement of instances on servers can prevent the FC from rebooting the servers on which all instances of a UD are hosted at the same time, or even in UD-order. Consider the allocation of instances to servers depicted in the diagram below. Instance 1 of Service A’s role is on server 1 and instance 2 is on server 2, whereas Service B’s instances are placed oppositely. No matter what order the FC reboots the servers, one service will have its instances restarted in an order that’s the reverse of their UDs. The allocation shown is relatively rare since the FC allocation algorithm optimizes by attempting to place instances from the same UD - regardless of what service they belong to - on the same server, but it’s a valid allocation because the FC can reboot the servers without violating the promise that it not cause instances of different UDs of the same role (of a single service) to be offline at the same time.
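The two-server allocation described above can be modeled in a few lines of Python. The sketch assumes servers are rebooted one at a time and numbers UDs from 0; the data layout and function names are hypothetical and exist only to check the two claims in the paragraph: each server is safe to reboot, yet some service always sees its UDs restart in reverse order.

```python
from itertools import permutations

# (service, role, update_domain) for each instance on each server.
# Mirrors the allocation described above: Service A and Service B are
# placed oppositely across the two servers.
PLACEMENT = {
    "server1": [("A", "role", 0), ("B", "role", 1)],
    "server2": [("A", "role", 1), ("B", "role", 0)],
}


def reboot_is_safe(instances):
    """True if rebooting a server takes down at most one UD per (service, role)."""
    uds_per_role = {}
    for service, role, ud in instances:
        uds_per_role.setdefault((service, role), set()).add(ud)
    return all(len(uds) == 1 for uds in uds_per_role.values())


def ud_restart_order(placement, server_order):
    """Return {service: [UDs in the order they restart]} for a server reboot order."""
    order = {}
    for server in server_order:
        for service, _role, ud in placement[server]:
            order.setdefault(service, []).append(ud)
    return order


if __name__ == "__main__":
    # Every server can be rebooted without violating the UD promise...
    assert all(reboot_is_safe(instances) for instances in PLACEMENT.values())
    # ...but each reboot order restarts one service's UDs in reverse.
    for server_order in permutations(PLACEMENT):
        print(server_order, ud_restart_order(PLACEMENT, server_order))
```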

Another difference between host updates and Cloud Service updates is that, when the update is to the host, the FC must ensure that one instance doesn’t indefinitely stall the forward progress of server updates across the datacenter. The FC therefore allots instances at most five minutes to shut down before proceeding with a reboot of the server into a new host OS and at most fifteen minutes for a role instance to report that it’s healthy from when it restarts. It takes a few minutes to reboot the host, then restart VMs, GAs and finally the role instance code, so an instance is typically offline anywhere between fifteen and thirty minutes depending on how long it and any other instances sharing the server take to shut down, as well as how long it takes to restart. More details on the expected state changes for Web and Worker roles during a host OS update can be found here. Note that for PaaS services the FC manages the OS servicing for guests as well, so a host OS update is typically followed by a corresponding guest OS update (for PaaS services that have opted into updates), which is orchestrated by UD like other cloud service updates.
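As a rough illustration of how those two limits bound the time the FC spends on one server, consider the sketch below. The five- and fifteen-minute limits come from the text above; the host reboot duration is a caller-supplied assumption, since the post only says it takes "a few minutes", and the function name and model are hypothetical simplifications.

```python
SHUTDOWN_LIMIT_MIN = 5    # max minutes instances are allotted to shut down
HEALTHY_LIMIT_MIN = 15    # max minutes for an instance to report healthy


def fc_wait_minutes(shutdown_times, host_reboot, time_to_healthy):
    """Estimate how long the FC waits on one server during a host OS update.

    shutdown_times: minutes each instance on the server needs to shut down
    host_reboot: assumed minutes to reboot the host and restart VMs
    time_to_healthy: minutes the instance needs to report healthy after restart
    Returns (minutes_waited, reported_healthy_in_time).
    """
    # The server reboots once the slowest instance has stopped, or at the limit.
    shutdown_phase = min(max(shutdown_times), SHUTDOWN_LIMIT_MIN)
    healthy_in_time = time_to_healthy <= HEALTHY_LIMIT_MIN
    # The FC stops waiting at the limit even if the instance is not yet healthy.
    startup_phase = min(time_to_healthy, HEALTHY_LIMIT_MIN)
    return shutdown_phase + host_reboot + startup_phase, healthy_in_time


if __name__ == "__main__":
    # One slow neighbor (7 min shutdown) is cut off at the 5 minute limit.
    print(fc_wait_minutes(shutdown_times=[1, 7], host_reboot=4, time_to_healthy=10))
```

Note that the sketch bounds the FC's wait, not the instance's downtime: an instance that misses the fifteen-minute limit may stay offline longer than the value returned.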

IaaS and Host Updates

The preceding discussion has been in the context of PaaS roles, which automatically get the benefits of UDs as they scale out. Virtual Machines, on the other hand, are essentially single-instance roles that have no scale-out capability. An important goal of the IaaS feature release was to enable Virtual Machines to also achieve high availability in the face of host updates and hardware failures, and the Availability Sets feature does just that. You can add Virtual Machines to Availability Sets using PowerShell commands or the Windows Azure management portal. Here’s an example cloud service with virtual machines assigned to an availability set:

Just like roles, Availability Sets have five UDs by default and support up to twenty. The FC spreads instances assigned to an Availability Set across UDs, as shown in the figure below. This allows customers to deploy Virtual Machines designed for high availability, for example two Virtual Machines configured for SQL Server mirroring, to an Availability Set, which ensures that a host update will cause a reboot of only one half of the mirror at a time as described here (I don’t discuss it here, but the FC also uses a feature called Fault Domains to automatically spread instances of roles and Availability Sets across servers so that any single hardware failure in the datacenter will affect at most half the instances).

More Information

You can find more information about Update Domains, Fault Domains and Availability Sets in my Windows Azure conference sessions, recordings of which you can find on my Mark’s Webcasts page here. Windows Azure MSDN documentation describes host OS updates here and the service definition schema for Update Domains here.

Comments

Anonymous

January 01, 2003

@Ryan Thanks! We're definitely working on taking advantage of Server 2012 in Azure.

Anonymous

January 01, 2003

Very nice post, thanks! This blog is like the zero-page thread to your life (low priority, get it? waah waaah) so it's always exciting to see something new pop up in my feed reader. Has Server 2012 and the myriad improvements to the next version of Hyper-V been affecting your work on Azure? Are you making plans for it, or have you already been using it in Azure for a long time?

Anonymous

November 26, 2013

Good info, but one question: you write that the FC allows the guests on a host 5 minutes to shut down before rebooting the host, and 15 minutes for the guests to report a status of healthy. Does this apply to IaaS instances as well? From time to time when logging into VMs in Azure I get the "unexpected shutdown" notification. Is this because the FC has rebooted the host before all the guests had time to shut down? Morgan