How We Approach Agile Design

For approximately the past year and a half, I have been working on building the next generation of testing tools. Our standalone UI, Microsoft Test and Lab Manager, was built to allow testers to plan their testing and review their results. We have built the UI in an Agile manner and followed Agile processes. In this post, I'll go over some of the practices that we have been following to plan and design in an Agile manner. In the next post, I'll walk through an example by looking at how the UI for Camano has evolved over the past year and a half.

Breaking down the Planning

The Camano team follows an Agile Development process with Sprints of 5 weeks. I'm sure most of you are familiar with Agile. One of its premises is that requirements and priorities are constantly changing, and that software is best developed by breaking work into bite-sized chunks that can be coded, tested and completed in a single Sprint. The idea is to incrementally add the most pressing features to the product in a way that maintains the overall quality of the product.

To accommodate planning in this manner, our team maintains a priority-sorted product backlog in a Team Foundation Server (TFS) database. Each backlog item has a corresponding work item that contains a field (RankInt) for its priority relative to the other items on the backlog. We use the integration that TFS has with Excel to view the list of all the backlog work items in Excel, so that we can move the work items around in the list and change their relative rankings. We are constantly adding more items to the backlog and shifting around their relative priorities.

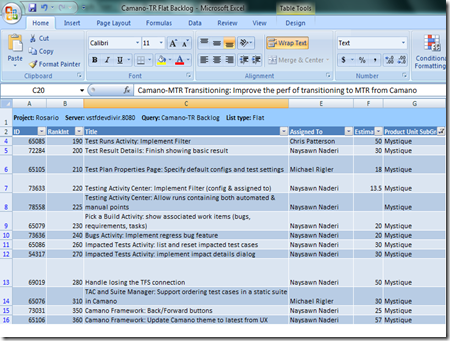

This is a screenshot of how the Camano backlog looked at one point in time. Each row in Excel corresponds to a TFS work item.
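
To make the ranking idea concrete, here is a minimal sketch of what moving a row in the backlog list amounts to. It does not use the TFS or Excel APIs. The `BacklogItem` class, the `move_item` and `top_items` helpers, the work item IDs and the titles are all hypothetical, and it assumes that a lower rank value means a higher priority.

```python
from dataclasses import dataclass


@dataclass
class BacklogItem:
    work_item_id: int  # hypothetical work item ID
    title: str         # hypothetical title
    rank_int: int      # stands in for the RankInt field; lower = higher priority (assumption)


def move_item(backlog, work_item_id, new_position):
    """Move one item to new_position (0-based) and renumber all ranks from 1."""
    ordered = sorted(backlog, key=lambda item: item.rank_int)
    index = next(i for i, item in enumerate(ordered)
                 if item.work_item_id == work_item_id)
    moved = ordered.pop(index)
    ordered.insert(new_position, moved)
    # Rewrite the ranks so they match the new list order.
    for rank, item in enumerate(ordered, start=1):
        item.rank_int = rank
    return ordered


def top_items(backlog, count):
    """Return the `count` highest-priority items, e.g. candidates for the next Sprint."""
    return sorted(backlog, key=lambda item: item.rank_int)[:count]


backlog = [
    BacklogItem(101, "Plan test suites", 1),
    BacklogItem(102, "Run manual tests", 2),
    BacklogItem(103, "Review test results", 3),
]

# Promote item 103 to the top of the backlog.
reordered = move_item(backlog, 103, 0)
for item in reordered:
    print(item.rank_int, item.work_item_id, item.title)
```

Renumbering every item after a move keeps the ranks dense and unambiguous, at the cost of touching many items per change. A real backlog might instead leave gaps between rank values so that a single move updates only one work item.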

About 4 weeks before a Sprint begins, we flag the items at the top of the backlog to be planned and developed in the next Sprint. We Program Managers make it our goal to fully spec out the features for the Sprint so that developers have all open questions answered by the time they need to plan the implementation. We don't always manage to identify which features to develop in time, or to close open issues by design week, but we do our best to achieve the goal.

Rapid Design to Accommodate Agile Development

PMs and designers typically have about 5 weeks to grind out a design for a feature to be implemented in Camano. Although this may sound like a long time, going from nothing to something that all the important stakeholders agree upon can be a challenging task.

Our team has attempted to create rapid designs by having a Program Manager spearhead each design and present it to higher ups at regular intervals. When there are 4 weeks to develop a design, it typically looks like this:

Prior to Week 1: Management and PMs decide on the components to add to the product in the next Sprint.

Week 1: PM chats with a few passionate people about a new and cool feature, develops a low-fidelity wireframe of the experience & discusses the experience with higher ups at the end of the week.

Week 2: PM iterates on feedback from the discussion & works with a designer to create high-fidelity mockups of the feature, then presents them again to the higher ups for feedback.

Week 3: PM iterates on feedback and does another review with higher ups and engineering leads to make sure that they are content with the final solution. The PM also typically runs the design by other affected teams to be sure that dependencies are flagged properly.

Week 4: PM presents the design to the engineering team. The engineering team gives feedback, which is noted so that design trade-offs can be made.

Week 5: PM updates the design based on feedback from the engineering team.

End of Week 5: The Sprint begins and the designs begin to be implemented by the engineering team. The PM team starts to design the features for the next Sprint.

Feedback along the way

Since we are developing in an Agile manner, we are able to demonstrate functionality to customers along the way and get feedback. Although our product is being released as a part of Visual Studio 2010, we have shown the completed portions to customers in pre-betas, called Community Technology Previews (CTPs), at regular milestones.

In addition to getting input via customer use of CTPs, we use a variety of other mechanisms to get feedback from customers as the product is developed.

- Hands-on Labs: With each CTP release, we have adopted the practice of getting both internal and external testers to run through a scripted scenario. Although it is painful to set up machines for all parties to run through, it is a good way to hear feedback from first-time users of the product.

- Focus Groups: We have 2 groups of customers, one made up of local software testers, one of remote testers. We routinely discuss priorities and features before they are coded and ask them to find gaps in our story.

- Usability Studies: We routinely run usability studies where we bring in local testers and observe their efforts to perform various tasks on the developed product. I've found it quite eye-opening to see users struggle to perform tasks with a product that I've designed. It is also quite motivating to fix an issue when the whole engineering team observes a user yelling at the screen!

- Customer Chats: It's nice to work on a team where people care about the product that you are developing. The Camano team has been fortunate to have many customers eager to provide feedback via the Microsoft TAP program. Through this program, most members of the team are assigned one contact at an interested company to speak with on a regular basis about the product in development. Through these informal chats, we strive to keep everyone on the development team focused on solving real-world problems.

Responding to Feedback

As we get feedback on implemented code, we do our best to respond by making design changes and tweaks in subsequent Sprints. This can range from something as simple as adding another button or changing a color to overhauling an entire design and dropping functionality to go in another direction.

While it is challenging to plan in an iterative manner, I find it worthwhile because it lets us show customers a design, find out that it needs more work, and make appropriate changes before we ship. In the next post, I'll go over how our designs have changed by looking at how the UI of Camano has evolved as we have gotten feedback.