Using Windows Azure Drive Part 1: migrate your data to the Cloud

This month, we announced the Beta Release of Windows Azure Drive (a.k.a. XDrive), a technology that allows you to mount a VHD (Virtual Hard Drive) into a Windows Azure instance and access it as if it were a locally attached NTFS drive.

There are many applications for this technology, and in this post I would like to illustrate one of them: migrating an existing on-premises application that uses regular Windows file access APIs into the cloud, with no impact on the existing I/O code, thanks to Windows Azure Drive. I will cover this in two parts:

- How to create a VHD locally, and upload it into the cloud so that it can be used as an XDrive; this part is important because the XDrive must be created as a Page Blob, a special kind of Windows Azure Blob

- How to modify your existing code to use the XDrive; we will see that once the XDrive is set up, the rest of your code will think it is dealing with a regular NTFS drive

This is the first part. Its most interesting piece was actually contributed by my colleague Stéphane Crozatier from the ISV DPE Team here in France, who wrote the Page Blob Upload Tool that is the key to making your data available as an XDrive in the cloud. Thanks Stéphane!

Introduction

First, let’s have a look at my application. It is a very simple Windows application that allows you to browse a directory of PDF files using thumbnails. The idea is to port this application to the cloud, so that you can browse the PDF files, hosted in Windows Azure, from a Web browser.

Beyond its ugliness, this application has two characteristics that matter in our case:

- It has some existing file & directory I/O code, just regular .NET System.IO stuff, that is used to navigate through directories, find PDF files, and drive thumbnail generation.

- It uses a third-party Win32 DLL and P/Invoke to generate the thumbnails (the well-known Ghostscript Open Source library, which gives you a PostScript/PDF interpreter and viewer); I am using GhostscriptSharp, Matthew Ephraim’s P/Invoke wrapper for Ghostscript, to access the DLL from my C# application.

Of course, we could port our System.IO stuff to use Windows Azure Blobs instead; in my case, it would be quite easy, because the code is very simple! But this may not be the case for your existing applications. Furthermore, the case of the Ghostscript DLL is more complicated: I really don’t want to port this library, written in C, to Windows Azure Storage! It will be much simpler for me to just simulate a disk drive using XDrive, so I can keep my DLL as it is. Besides, if you have existing third-party closed-source libraries, you may not have a choice.

How to migrate your data into an XDrive

I could start with an empty XDrive, creating it directly from my Azure application, and there are some examples of this in the Windows Azure Drive White Paper we have published. But my problem is different: my existing data (my directories of PDF files) and my application are linked: they work together, and I want to migrate them both into the cloud.

So, I am going to create a local VHD, where I will create the directory structure I need and copy my data, and I will upload this VHD to Windows Azure as a Page Blob, so it can later be mounted as an XDrive.

Creating the VHD

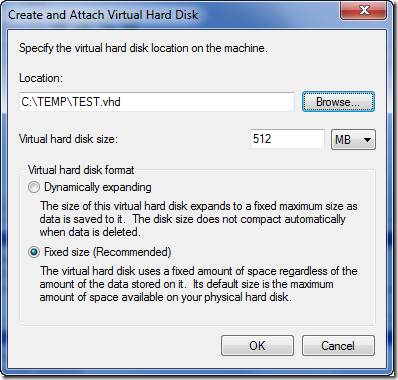

Creating a new VHD and mounting it is easy in Windows 7. If you want to use the VHD as an XDrive, you need to pay attention to the following:

- The VHD should be of fixed size

- It must not be smaller than 16 MB

- It must not be bigger than 1 TB

- Do not use exotic sizes, just use a multiple of 1 MB
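The size rules above are easy to check before you spend time creating and filling a VHD. Here is a minimal sketch of such a check; the class and method names are hypothetical, and it assumes you pass in the virtual disk size you intend to type into the "Create VHD" dialog (not the length of the resulting .vhd file on disk).

```csharp
using System;

// Hypothetical helper (not part of any SDK): checks a planned VHD size
// against the limits listed above for use as an XDrive.
public static class DriveSizeCheck
{
    private const long OneMB = 1024L * 1024L;
    private const long MinSize = 16L * OneMB;            // 16 MB lower bound
    private const long MaxSize = 1024L * 1024L * OneMB;  // 1 TB upper bound

    // Returns true when the size is acceptable; otherwise sets a short reason.
    public static bool IsValid(long diskSizeBytes, out string reason)
    {
        reason = "";
        if (diskSizeBytes < MinSize) { reason = "smaller than 16 MB"; return false; }
        if (diskSizeBytes > MaxSize) { reason = "bigger than 1 TB"; return false; }
        if (diskSizeBytes % OneMB != 0) { reason = "not a multiple of 1 MB"; return false; }
        return true;
    }
}
```

For example, calling `DriveSizeCheck.IsValid(512L * 1024 * 1024, out reason)` for a 512 MB disk passes all three checks.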

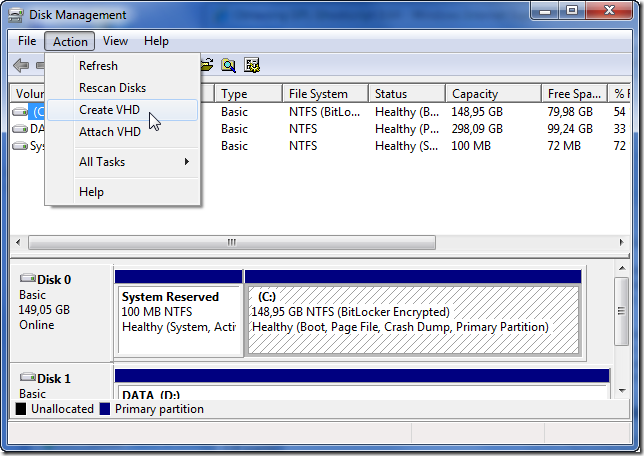

Here are the steps using the Windows 7 Disk Management Tool.

Just type “format” in the start menu and select “Create and format hard disk partitions”:

Select “Create VHD” from the “Action” menu:

Specify the path, size and format:

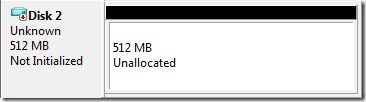

Click OK, and the VHD will be created and attached automatically:

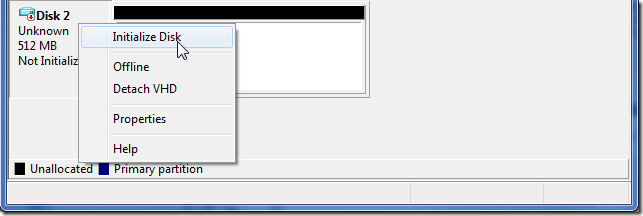

Now you need to initialize it, like any other disk; right-click on the disk and select “Initialize Disk”:

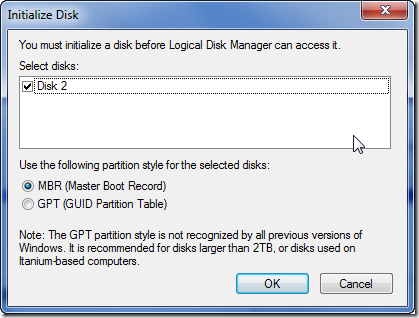

Select “MBR” (the default) and click OK:

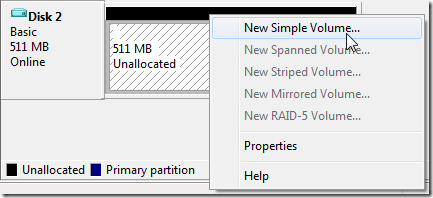

Now, right-click on the partition and select “New Simple Volume…”:

Just follow the defaults in the wizard that follows. You will assign a drive letter to the new drive, then format it as NTFS. Give it a volume name if you want and make sure to select “Perform a quick format”.

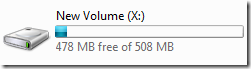

You now have a brand new Virtual Hard Drive, attached locally like a regular hard drive:

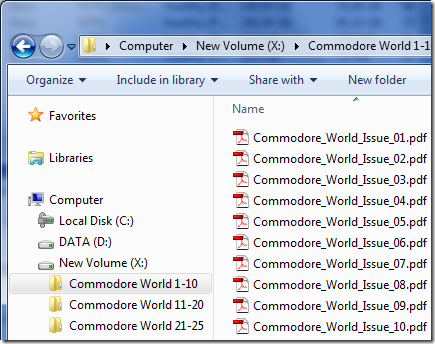

I will now copy my data into this virtual hard drive; I will create three directories for the various PDF files I want to browse, and copy a number of PDF files into each directory:

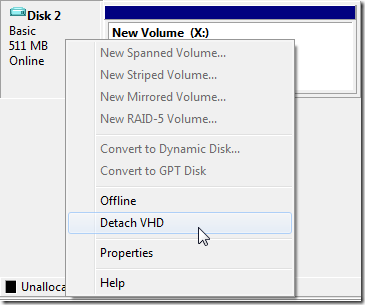

Once I am done, I can detach the VHD from the Disk Management tool, and my VHD is ready to be uploaded.

Uploading the VHD

Now, the really fun part begins! All we need to do is upload the VHD to Windows Azure Storage as a Blob, right? So why not use any of the nice graphical tools at our disposal, like the Azure Storage Explorer from CodePlex, Cerebrata Cloud Storage Studio, or CloudBerry Explorer for Azure Blob Storage? Easy: the VHD must be uploaded as a Page Blob, and none of the graphical tools can handle Page Blobs at this time! So, we need to build our own upload tool.

Here I must thank my colleague Stéphane Crozatier from the ISV DPE Team here in France, who did all the hard work of figuring out how to do this. All the code is his!

Basically, the first thing we need to do is to create a Page Blob using the StorageClient API from the Windows Azure SDK:

```csharp
CloudBlobClient blobStorage = account.CreateCloudBlobClient();
CloudPageBlob blob = blobStorage.GetPageBlobReference(blobUri);
blob.Properties.ContentType = "binary/octet-stream";
blob.Create(blobSize);
```

Then, we need to upload the whole file page by page, using the Put Page operation, which is surfaced as the CloudPageBlob.WritePages method in the Storage Client API. Because WritePages requires a Stream as input, we will use a MemoryStream to hold the pages of data we read from the file.

```csharp
int pageSize = 1024 * 1024;                    // upload in 1 MB chunks
int numPages = (int)(file.Length / pageSize);  // number of full chunks in the file
byte[] page = new byte[pageSize];

using (MemoryStream stmPage = new MemoryStream(page))
{
    // process all pages until last
    for (int i = 0; i < numPages; i++)
    {
        // write page to storage
        file.Read(page, 0, pageSize);
        stmPage.Seek(0, SeekOrigin.Begin);
        // compute the offset as a long so it does not overflow past 2 GB
        blob.WritePages(stmPage, (long)i * pageSize);
    }

    // process last page
    long written = (long)numPages * pageSize;
    if (blobSize > written)
    {
        int remain = (int)(blobSize - written);
        page = new byte[remain]; // allocate a clean buffer, padding with zero
        using (MemoryStream stmLast = new MemoryStream(page))
        {
            // write page to storage
            file.Read(page, 0, remain);
            stmLast.Seek(0, SeekOrigin.Begin);
            blob.WritePages(stmLast, written);
        }
    }
}
```
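The offset arithmetic in the loop above is the delicate part, because a VHD can easily be larger than 2 GB and such offsets do not fit in a 32-bit int. The sketch below isolates that arithmetic so it can be tested without touching storage. The class and method names are hypothetical, and it assumes that blobSize is the file length rounded up to a multiple of 512 bytes, since page blob sizes must be 512-byte aligned (the snippet above does not show how blobSize is computed).

```csharp
using System;
using System.Collections.Generic;

// Hypothetical helper: pure arithmetic only, no storage calls.
public static class PageBlobMath
{
    public const int PageAlignment = 512; // page blob sizes and writes are 512-byte aligned

    // Rounds a length up to the next multiple of 512 bytes.
    public static long RoundUpToPage(long length)
    {
        return (length + PageAlignment - 1) / PageAlignment * PageAlignment;
    }

    // Yields (offset, length) pairs covering [0, blobSize) in chunks of at most
    // chunkSize bytes. Offsets are long, so blobs larger than 2 GB are handled.
    public static IEnumerable<KeyValuePair<long, int>> Chunks(long blobSize, int chunkSize)
    {
        for (long offset = 0; offset < blobSize; offset += chunkSize)
        {
            int length = (int)Math.Min(chunkSize, blobSize - offset);
            yield return new KeyValuePair<long, int>(offset, length);
        }
    }
}
```

Each pair's Key is the offset you would pass to WritePages, and its Value is the number of bytes to place in the stream for that call.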

Here is the complete version of Stéphane’s Page Blob Upload tool:

It is a Visual Studio 2010 solution, ready to use. Here is how to use it:

In the app.config file (or in UploadPageBlob.exe.config), enter your Windows Azure Storage Account credentials:

```xml
<add key="StorageAccount" value="DefaultEndpointsProtocol=http;AccountName=...;AccountKey=..." />
```

These credentials can be found in the administration page for your storage account, in the “Cloud Storage” section. AccountName is the first part of your storage URL (for example, if your endpoint is foo.blob.core.windows.net, then your AccountName is foo). AccountKey is the associated Primary Access Key.
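To make the format of that setting concrete, here is a small sketch that splits such a value into its parts and rebuilds the blob endpoint from AccountName. It is for illustration only: the class and method names are hypothetical, and in a real tool you would more likely hand the whole string to the SDK's CloudStorageAccount.Parse than parse it yourself. Note that it splits on the first "=" only, because a Base64 access key can itself end with "=" padding.

```csharp
using System;
using System.Collections.Generic;

// Hypothetical helper, for illustration of the setting's format only.
public static class ConnectionStringSketch
{
    // Splits "Key=Value;Key=Value" on ';' and then on the FIRST '=' of each part.
    public static Dictionary<string, string> Parse(string connectionString)
    {
        var settings = new Dictionary<string, string>(StringComparer.OrdinalIgnoreCase);
        string[] parts = connectionString.Split(new[] { ';' }, StringSplitOptions.RemoveEmptyEntries);
        foreach (string part in parts)
        {
            int eq = part.IndexOf('=');
            if (eq <= 0) throw new FormatException("Expected Key=Value, got: " + part);
            settings[part.Substring(0, eq).Trim()] = part.Substring(eq + 1).Trim();
        }
        return settings;
    }

    // Builds the blob endpoint, e.g. http://foo.blob.core.windows.net for AccountName=foo.
    public static string BlobEndpoint(Dictionary<string, string> settings)
    {
        return settings["DefaultEndpointsProtocol"] + "://"
             + settings["AccountName"] + ".blob.core.windows.net";
    }
}
```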

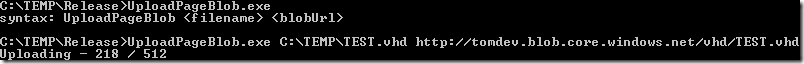

Build the solution, open a command window and go to the output directory; the syntax for the tool is:

```
UploadPageBlob <filename> <blobUrl>
```

The tool will report nicely on the upload status. Here you can see the upload of the 512 MB VHD I created above:

Next Part: Modifying your code to use the XDrive

To be continued…

Comments

Anonymous

March 01, 2010

Thanks so much for mentioning CloudBerry Explorer in your blog!

Anonymous

March 04, 2010

Great post! When are you going to write part 2 - "Modifying your code to use the XDrive"? That's exactly what I'd like to do next.

Anonymous

September 07, 2011

UploadPageBlob has an integer overflow bug that causes it to fail after 2GB (a least when compiled to 32-bit). Fix: change blob.WritePages(stmPage, i * pageSize); Into: blob.WritePages(stmPage, ((long)i) * pageSize);

Anonymous

July 27, 2014

who can be bothered with all this - just to share a disk. what a retrograde nightmare