Architecture Considerations around Fabric Computing

The fabric concept has been around since 1998, and computing fabrics built from commodity hardware and software are now starting to come to fruition. A fabric system provides a set of interconnected nodes and links (structures) and operations (behaviors) that function as a unified computing system or subsystem. To better understand the nature of the fabric, we can turn to an interesting discipline in mathematics called graph theory. Graph theory is the study of structures used to model relationships between vertices, or nodes. A graph (fabric) is a collection of vertices (nodes) and edges (links).

To study the behavior of the fabric, it is important to understand the concepts of node centrality and entropy. (On a side note, graph theory is now being applied to social and organizational networks.)

There are four measures of centrality to consider:

- Degree: The number of links a node has. I think of this as the tightness of the weave. The tighter the weave, the more fluidly nodes can communicate without other nodes intervening. On the other hand, a tighter weave can bring more risk, as bad behavior exhibited by one node may spread to other nodes more quickly.

- Betweenness: The measure of how often a node intervenes between other nodes, that is, how many of the shortest paths between other pairs of nodes pass through it. The more pairs of nodes that must hop through a node to interact, the higher its betweenness.

- Closeness: The measure of a node's distance (in links) to all other nodes, taken as the sum of its shortest-path lengths. This can be regarded as how long it would take to disseminate information from one node to all other "reachable" nodes in the fabric. (The standard closeness centrality score is the reciprocal of this sum, so a node that is near everything scores high.)

- Eigenvector: The measure of a node's importance within the fabric, where a node scores highly when it is linked to other high-scoring nodes. In an ideal world, all nodes are considered equal, but some nodes are more critical than others. Such a node can be the "weakest link" within the fabric: if it fails, the fabric can become compromised.
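The four measures can be made concrete with a small, dependency-free Python sketch. The five-node fabric below (a hub `h` joining two tightly woven pairs) and all function names are hypothetical. It computes degree as a raw link count, closeness in its usual normalized form (reachable nodes minus one, divided by the total hop distance), betweenness with Brandes' algorithm for unweighted graphs, and eigenvector centrality by power iteration; graph libraries such as NetworkX offer hardened versions of the same measures.

```python
import math
from collections import deque

# Hypothetical five-node fabric: hub "h" joins two tightly woven pairs.
FABRIC = {
    "h": ["a1", "a2", "b1", "b2"],
    "a1": ["h", "a2"],
    "a2": ["h", "a1"],
    "b1": ["h", "b2"],
    "b2": ["h", "b1"],
}


def degree(graph, node):
    """Degree: number of links attached to the node."""
    return len(graph[node])


def hop_distances(graph, source):
    """Breadth-first search: hop count from source to every reachable node."""
    dist = {source: 0}
    queue = deque([source])
    while queue:
        v = queue.popleft()
        for w in graph[v]:
            if w not in dist:
                dist[w] = dist[v] + 1
                queue.append(w)
    return dist


def closeness(graph, node):
    """Closeness: (reachable nodes - 1) / total hop distance to them."""
    dist = hop_distances(graph, node)
    total = sum(dist.values())
    return (len(dist) - 1) / total if total else 0.0


def betweenness(graph):
    """Betweenness via Brandes' algorithm (unweighted, undirected graph)."""
    scores = dict.fromkeys(graph, 0.0)
    for s in graph:
        order, preds = [], {v: [] for v in graph}
        sigma = dict.fromkeys(graph, 0)  # number of shortest paths from s
        sigma[s] = 1
        dist = {s: 0}
        queue = deque([s])
        while queue:
            v = queue.popleft()
            order.append(v)
            for w in graph[v]:
                if w not in dist:
                    dist[w] = dist[v] + 1
                    queue.append(w)
                if dist[w] == dist[v] + 1:
                    sigma[w] += sigma[v]
                    preds[w].append(v)
        delta = dict.fromkeys(graph, 0.0)
        for w in reversed(order):  # farthest nodes first
            for v in preds[w]:
                delta[v] += sigma[v] / sigma[w] * (1 + delta[w])
            if w != s:
                scores[w] += delta[w]
    # Each unordered pair was counted once from each end.
    return {v: b / 2 for v, b in scores.items()}


def eigenvector(graph, iterations=100):
    """Eigenvector centrality by power iteration on (A + I)."""
    x = dict.fromkeys(graph, 1.0)
    for _ in range(iterations):
        # Adding the node's own score (identity term) avoids oscillation on bipartite graphs.
        nxt = {v: x[v] + sum(x[w] for w in graph[v]) for v in graph}
        norm = math.sqrt(sum(val * val for val in nxt.values()))
        x = {v: val / norm for v, val in nxt.items()}
    return x


if __name__ == "__main__":
    ev = eigenvector(FABRIC)
    bc = betweenness(FABRIC)
    for node in FABRIC:
        print(node, degree(FABRIC, node), round(closeness(FABRIC, node), 3),
              bc[node], round(ev[node], 3))
```

In this fabric the hub scores highest on every measure: it has the most links, sits on every shortest path between the two pairs, and is one hop from everything, which is exactly why its failure would compromise the fabric.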

Entropy is the measure of disorder in the fabric. A fabric must be constructed to handle a requisite variety of loads. Consider a fabric of identical nodes, each with a certain state associated with it. The links within the fabric indicate possible causal influences the nodes have on one another. The dynamic behavior of the fabric system is determined by the specific functions assigned to the nodes and by their characteristics. Over time, the fabric system's nodes and links will change state. The fabric system must have enough knowledge of its nodes, links, and states to find the balance of acceptable entropy.
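The post does not give a formula for entropy, so the sketch below assumes one common reading: the Shannon entropy, in bits, of the distribution of node states. The `majority_step` update rule, the state names, and the four-node ring are all hypothetical stand-ins for the "specific functions assigned to the nodes" and the causal links between them.

```python
import math
from collections import Counter


def state_entropy(states):
    """Shannon entropy, in bits, of the distribution of node states across the fabric."""
    counts = Counter(states.values())
    total = len(states)
    return sum((c / total) * math.log2(total / c) for c in counts.values())


def majority_step(states, links):
    """One synchronous update: each node adopts the majority state of itself and its neighbours.

    Hypothetical rule standing in for the functions assigned to the nodes;
    a node keeps its current state whenever that state is tied for the majority.
    """
    new_states = {}
    for node, state in states.items():
        votes = Counter([state] + [states[n] for n in links[node]])
        top = max(votes.values())
        winners = [s for s, c in votes.items() if c == top]
        new_states[node] = state if state in winners else winners[0]
    return new_states


# Hypothetical four-node ring: a - b - c - d - a
RING = {"a": ["b", "d"], "b": ["a", "c"], "c": ["b", "d"], "d": ["c", "a"]}
before = {"a": "ok", "b": "failed", "c": "ok", "d": "ok"}
after = majority_step(before, RING)
print(round(state_entropy(before), 3), state_entropy(after))  # 0.811 0.0
```

Here a single failed node in a ring of healthy neighbours is pulled back into line after one step, so the measured disorder drops from about 0.811 bits to zero.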

In Designing Freedom (1973), Stafford Beer writes about the law of requisite variety: "Variety is the number of possible states within the system. In order to regulate a system, we have to absorb its variety. If we fail in this, the system becomes unstable."
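Beer's statement builds on W. Ross Ashby's law of requisite variety. One way to see it at work is a brute-force check: when each regulator response maps different disturbances to different outcomes, a regulator with R responses facing D disturbances cannot hold the outcomes to fewer than ceil(D / R). The modular outcome table and the function names below are assumptions chosen for illustration.

```python
import math
from itertools import product


def outcome(disturbance, response, size):
    # Hypothetical outcome table: the regulator's response shifts the disturbance.
    return (disturbance + response) % size


def best_regulation(n_disturbances, n_responses):
    """Fewest distinct outcomes any regulator policy can achieve, found by brute force."""
    best = n_disturbances
    # A policy assigns one response to each possible disturbance.
    for policy in product(range(n_responses), repeat=n_disturbances):
        outcomes = {outcome(d, policy[d], n_disturbances) for d in range(n_disturbances)}
        best = min(best, len(outcomes))
    return best


for responses in (1, 2, 3, 6):
    # Columns: regulator variety, best achievable outcome variety, ceil(D / R).
    print(responses, best_regulation(6, responses), math.ceil(6 / responses))
```

For six disturbances, the brute-force minimum matches ceil(6 / R) for each regulator size tried: only a regulator with as much variety as the disturbances (six responses) can hold the system to a single outcome.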

I have blogged previously about Complex Adaptive Systems (CAS). A well-functioning complex adaptive system is at peak fitness on the edge of chaos, that is, at a level of acceptable entropy. One can in turn assert that the performance of the fabric is directly related to the balance between rigidity and flexibility. This balance is found in the making of samurai swords from the Japanese steel tamahagane. For the sword to be fit and useful, it has to perform under stress: as the state of the steel changes, it must keep the right balance of hardness and ductility. If the sword is too hard, it will shatter under stress. If it is too soft, it will bend or collapse. Therefore the sword, or in this case the fabric, has to have a certain level of entropy in order to be useful.

What makes fabric computing different from grid computing is that the fabric tolerates a level of entropy: the state of the fabric system is dynamic. The rules that govern a CAS are simple. Elements can self-organize, yet are able to generate a large variety of outcomes, and they grow organically through emergence. The fabric allows the consumer to generate or evolve a large number of solutions from the basic building blocks it provides. If elements of the fabric are disrupted, the fabric should maintain continuity of service; this provides resiliency. In addition, if the tensile strength of the fabric is stressed by a particular work unit in a particular area of the fabric, the fabric must be able to scale, distributing the load and adapting to provide elasticity and flexibility.
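Resiliency and elasticity, as described above, can be sketched with a toy scheduler. The `Fabric` class, its least-loaded placement policy, and the node and work-unit names are hypothetical; a real fabric controller would also have to detect failures and move work without losing it mid-flight.

```python
class Fabric:
    """Toy fabric: work units go to the least-loaded live node (hypothetical policy)."""

    def __init__(self, nodes):
        self.assignments = {node: [] for node in nodes}

    def _least_loaded(self):
        if not self.assignments:
            raise RuntimeError("no live nodes left in the fabric")
        return min(self.assignments, key=lambda n: (len(self.assignments[n]), n))

    def _most_loaded(self):
        return max(self.assignments, key=lambda n: (len(self.assignments[n]), n))

    def submit(self, unit):
        node = self._least_loaded()
        self.assignments[node].append(unit)
        return node

    def fail(self, node):
        """Resiliency: re-home a failed node's work so service continues."""
        for unit in self.assignments.pop(node):
            self.submit(unit)

    def add_node(self, node):
        """Elasticity: new capacity joins and takes work from the busiest nodes."""
        self.assignments[node] = []
        while True:
            busiest, idlest = self._most_loaded(), self._least_loaded()
            if len(self.assignments[busiest]) - len(self.assignments[idlest]) <= 1:
                break
            self.assignments[idlest].append(self.assignments[busiest].pop())

    def loads(self):
        return {node: len(units) for node, units in self.assignments.items()}


fabric = Fabric(["n1", "n2", "n3"])
for i in range(6):
    fabric.submit(f"unit-{i}")
fabric.fail("n2")        # n1 and n3 now carry three units each
fabric.add_node("n4")
print(fabric.loads())    # {'n1': 2, 'n3': 2, 'n4': 2}
```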

A well-performing fabric should provide the consumer an environment in which to build a variety of solutions while minimizing the provider's pain in managing the fabric. The fabric system strives to provide the ultimate balance between consumer freedom and provider discipline.

So what does this mean with respect to the architecture of systems? These computing fabrics are used in the composition of a cloud. Layering these fabrics effectively gives the architect a series of "platforms" with which to build a "service," a service being defined as an aggregation of system elements that deliver value to the service consumer.

There are three classic layers in the architecture of IT systems: technology, applications, and business. This natural extension of service orientation allows for the separation of concerns (SoC) (Dijkstra, 1974). Common concerns can be aggregated so that a group of like-minded stakeholders can address them. Using the notation of IEEE 1471/ISO 42010, we can describe the system from a particular viewpoint, partitioning the system so that each layer can be designed in isolation. This focuses attention on the aspects of the system that are relevant to a particular constituency. Making each layer autonomous from the others gives a higher degree of freedom to the consumer of that layer.

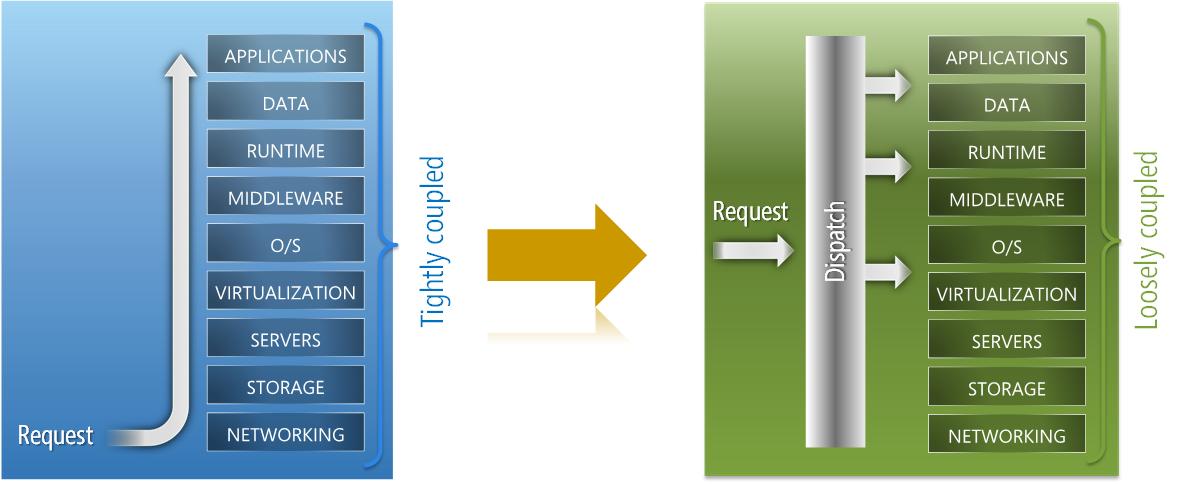

In the Traditional Architecture paradigm, the layers of the stack (system) are tightly coupled. Each layer of the stack is statically bound to the layer below at the time of deployment. For each service that needs to be deployed, the entire stack (an entire system) must be provisioned. This makes it difficult to balance isolation, utilization, and efficiency. There is no freedom, only a rigid structure essentially designed to accommodate a finite set of behaviors.

In the paradigm of Contemporary Architecture, layers are loosely coupled. Each layer of the stack is dynamically bound to the layer below. To provision a new service, one can deploy elements in a piecemeal fashion, mixing and matching them to provide the appropriate balance of isolation, utilization, and resiliency. This separation of the logical and physical aspects of the model allows each layer to be managed and updated independently. The horizontal partitioning of the stack's structure allows for a larger requisite variety of behaviors.
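The difference between static and dynamic binding can be sketched in a few lines. The hypothetical `provision_traditional` builds a dedicated stack per service, fixed at deployment, while each hypothetical contemporary `Layer` draws a member from a shared pool at request time and asks the layer below to do the same. The round-robin selection and all names are assumptions for illustration.

```python
def provision_traditional(service):
    """Traditional: a dedicated stack per service, statically bound at deployment."""
    server = f"{service}-server"
    runtime = f"{service}-runtime-on-{server}"
    return {"business": service, "application": runtime, "technology": server}


class Layer:
    """Contemporary: a horizontally partitioned layer backed by a shared pool."""

    def __init__(self, members, below=None):
        self.members = list(members)
        self.below = below
        self._next = 0

    def bind(self):
        # Dynamic binding: pick a member of this layer at request time,
        # then ask the layer below for one of its own members.
        member = self.members[self._next % len(self.members)]
        self._next += 1
        return (member,) + (self.below.bind() if self.below else ())


technology = Layer(["srv1", "srv2"])
application = Layer(["rt1"], below=technology)
print(application.bind())      # ('rt1', 'srv1')
print(application.bind())      # ('rt1', 'srv2')
technology.members = ["srv9"]  # update the technology layer on its own
print(application.bind())      # ('rt1', 'srv9')
```

Because the application layer only knows that a technology layer exists below it, the technology pool can be replaced without touching the layers above, which is the independent management the contemporary paradigm aims for.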

If we treat each layer of the stack as a subsystem, or a system in itself, we discover the service sourcing model for contemporary applications: the cloud. A cloud provider actually delivers a fabric of commoditized computing resources. Infrastructure as a Service (IaaS) can be thought of as the Infrastructure (core) Technology Subsystem. Platform as a Service (PaaS) can be considered the Application Subsystem. Lastly, the Business Information Subsystem corresponds to Software as a Service (SaaS). Specifically, the cloud system is a set of one or more subsystems that are used to construct a consumable service. This consumable service has to provide a certain set of functionality that is assumed to be always available, regardless of load, utilization, or failure. Each of these subsystems is essentially a fabric in its own right. The fabric gives the consumer the illusion of freedom.

A well-performing, "fully finished" cloud service is one that uses all of the fabrics together in unison. A contemporary architecture therefore has to ensure that each layer can operate independently of the others, so interactions between structures and behaviors that cross these fabrics have to be minimized wherever possible. Some cloud services can be constructed with or without a fabric, but one can argue that if you do not use a fabric for at least one of the layers, your service will behave "less cloud-like."
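The layer-to-service-model mapping, and the argument that a service becomes more cloud-like as more of its layers draw on a fabric, can be captured in a small data model. The `LayerSourcing` type and the `cloud_likeness` score (the fraction of fabric-backed layers) are hypothetical illustrations of the argument, not an established metric.

```python
from dataclasses import dataclass

# Mapping from the text: architecture layer -> (subsystem, cloud service model).
SUBSYSTEMS = {
    "technology": ("Infrastructure (core) Technology Subsystem", "IaaS"),
    "application": ("Application Subsystem", "PaaS"),
    "business": ("Business Information Subsystem", "SaaS"),
}


@dataclass
class LayerSourcing:
    layer: str            # one of the SUBSYSTEMS keys
    fabric_backed: bool   # hypothetical flag: drawn from a shared fabric vs. dedicated


def cloud_likeness(service):
    """Hypothetical score: share of the service's layers that are sourced from a fabric.

    1.0 corresponds to a "fully finished" service using every fabric in unison;
    0.0 means no layer uses a fabric, so the service behaves least cloud-like.
    """
    for choice in service:
        if choice.layer not in SUBSYSTEMS:
            raise ValueError(f"unknown layer: {choice.layer}")
    return sum(c.fabric_backed for c in service) / len(service)


def describe(service):
    return [f"{c.layer}: {SUBSYSTEMS[c.layer][0]} ({SUBSYSTEMS[c.layer][1]}), "
            f"{'fabric' if c.fabric_backed else 'dedicated'}" for c in service]


service = [LayerSourcing("technology", True),
           LayerSourcing("application", False),
           LayerSourcing("business", False)]
print(describe(service))
print(cloud_likeness(service))
```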

The more entropy a fabric can absorb, the more resilient and flexible the cloud will be in the face of both negative state change (load failure) and positive state change (load change). With respect to degree, one may wish to distribute nodes that interact heavily with each other so that they have a low degree and low betweenness. To minimize the risk of failure, nodes may need to be distributed across important, high-ranking nodes (those with high eigenvector centrality). If an authoritative node needs to distribute information to all other nodes whenever it changes, then low closeness (a short total distance to every other node) is desired, as information will be disseminated faster.
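The closeness argument can be illustrated by choosing where to place an authoritative node. This sketch assumes updates spread by flooding, one hop per round, and prefers the node that reaches every other node in the fewest rounds, breaking ties by the smallest total distance. The chain topology and the function names are hypothetical.

```python
from collections import deque


def hop_counts(graph, source):
    """Breadth-first search: hops from source to every reachable node."""
    hops = {source: 0}
    queue = deque([source])
    while queue:
        v = queue.popleft()
        for w in graph[v]:
            if w not in hops:
                hops[w] = hops[v] + 1
                queue.append(w)
    return hops


def flooding_rounds(graph, source):
    """Rounds of neighbour-to-neighbour forwarding until every reachable node has the update."""
    return max(hop_counts(graph, source).values())


def pick_authoritative(graph):
    # Fewest flooding rounds first, then smallest total distance,
    # then node name for a deterministic tie-break.
    return min(graph, key=lambda n: (flooding_rounds(graph, n),
                                     sum(hop_counts(graph, n).values()), n))


# Hypothetical chain of five nodes: a - b - c - d - e
CHAIN = {"a": ["b"], "b": ["a", "c"], "c": ["b", "d"], "d": ["c", "e"], "e": ["d"]}
print(pick_authoritative(CHAIN))    # c
print(flooding_rounds(CHAIN, "a"))  # 4
```

Placing the authoritative node at the centre of the chain halves the rounds needed compared with placing it at an end.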

For example, let us consider the modern data center. It consists of homogeneous devices (server, network, and storage nodes) that are highly interconnected. Standardizing the devices simplifies the structure of the data center. As we examine the behaviors of this data center, we should constrain the rules for how interactions are performed against those structures; in other words, we do not want too many ways of doing things. There should be a simple, well-defined set of behaviors that govern how the structures interact with one another within the system. In most data centers, a single source of power is usually the node with the highest eigenvector centrality, so fault domains are often delineated at the rack, where the power is delivered. Servers in a single resource pool may be considered to have low degree and low closeness (short distances to one another), but when one adds more data centers or resource pools, betweenness and closeness values may be higher.
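Treating the rack as the fault domain suggests an anti-affinity rule: spread a service's replicas across racks so that losing one rack's power takes out as few replicas as possible. The round-robin policy, rack names, and server names below are assumptions for illustration.

```python
from collections import Counter


def spread_across_racks(replicas, servers_by_rack):
    """Round-robin replicas over racks (fault domains), cycling servers inside each rack."""
    racks = sorted(servers_by_rack)
    used = Counter()
    placement = {}
    for i, replica in enumerate(replicas):
        rack = racks[i % len(racks)]
        servers = servers_by_rack[rack]
        placement[replica] = (rack, servers[used[rack] % len(servers)])
        used[rack] += 1
    return placement


def worst_rack_loss(placement):
    """Replicas lost if the single most-loaded rack loses power."""
    return max(Counter(rack for rack, _ in placement.values()).values())


racks = {"rack-a": ["a1", "a2"], "rack-b": ["b1", "b2"], "rack-c": ["c1"]}
placement = spread_across_racks([f"r{i}" for i in range(5)], racks)
print(placement["r3"])             # ('rack-a', 'a2')
print(worst_rack_loss(placement))  # 2
```

Packing all five replicas into one rack would lose every replica on a single power failure; spreading them caps the loss at two.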

So, in summary, what does this mean? It means that we as architects can focus less on the structure of the fabric and more on the behavior of the nodes, their interactions, and how the fabric responds to new, retired, failed, and decayed nodes.

More on this to come...