So you want to automate your test cases?

Many CIOs and Test Managers are interested in increasing the level of automation in testing efforts. What is automation good for? What is the best way to go about this? How much automation is enough? And who should do it, and when?

I've heard test managers say, on more than one occasion, that 100% of all test cases should be automated. Really??? The first thing to assess is what your goals for automation are. Automation is about running the same tests over and over again in an efficient way, which can achieve two purposes:

- Regression testing. This is about finding bugs in functionality that at one time was working.

- Matrix or configuration testing. This is about running the same test against the same build, but in different configurations.

Regression Testing

Regression testing comes in several flavors, depending on the phase of the development cycle you are in. The first is build verification tests (BVTs), or smoke tests, which determine whether the product is "off the floor", that is, has basic functionality working. For example, if you are doing regular builds as handoffs from dev to test, you want to make sure the product is "self-test", or basically working, so the testers can use it. If, for example, login fails with no workaround, the test team will be blocked, so there's no point promoting the build to test. BVTs are a small number of tests that validate the basic functionality of the software.

Another flavor of regression testing is at project shutdown time. Here you are stabilizing new features in preparation for deploying the software to production or shipping it to customers. In the process of building new features, did the dev team break any existing features? Automation can help find these types of failures.
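As a rough illustration of a BVT gate, here is a minimal Python sketch. The check names and functions (`can_log_in`, `can_open_project`) are hypothetical stand-ins; real checks would exercise the actual product, and this is not how any particular build system implements it.

```python
def run_bvt(checks):
    """Run each smoke check and return the names of the checks that failed."""
    failed = []
    for name, check in checks:
        try:
            passed = bool(check())
        except Exception:
            passed = False  # a crash in a smoke check counts as a failure
        if not passed:
            failed.append(name)
    return failed


def should_promote(checks):
    """Promote the build to the test team only if every smoke check passed."""
    return not run_bvt(checks)


# Hypothetical checks; real ones would drive the product itself.
def can_log_in():
    return True


def can_open_project():
    raise RuntimeError("project service did not start")


if __name__ == "__main__":
    checks = [("login", can_log_in), ("open project", can_open_project)]
    print(run_bvt(checks))         # ['open project']
    print(should_promote(checks))  # False
```

The point of keeping the list of checks small is that the gate stays fast and cheap to maintain; anything beyond basic functionality belongs in the wider regression suite.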

A third important scenario is software maintenance. Say you have a hot bug fix you want to roll into production. How can you lower the risk of the bug fix breaking other features? Or if you are taking a security patch for the OS, how can you be sure your critical applications will continue to work? Just like the project shutdown scenario, automation can help here too.

So if you are doing automation for regression testing, a key question is how much testing needs to be done before you are ready to ship? Look at automating those parts. I always recommend crawl, walk, run. Before investing a ton of effort into a given strategy, be sure you understand the downstream costs. I recommend starting with a few key scenarios and running them through a lifecycle to get a feel for costs.

Config Testing

Another use of automation is when you have to run the same set of tests against many different configurations. This is most often the case for ISVs shipping software that may run in a variety of environments. For example, web and load tests are available in both VSTT and VSTS, both of which are available in 8 languages and on XP, Vista, Windows 2003, and Windows 2008. And of course the OS comes in a variety of languages too. Then there are other configs, like running as Admin vs. normal user, SQL 2005 vs. 2008, etc. You can see the combinatorics are crazy here: each dimension is a multiplier. So we are smart about making sure we cover each option in at least one test run, but you can see we have to run the same set of tests many, many times on different configs.
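To get a feel for how fast the matrix grows, here is a minimal Python sketch. The dimension values come from the examples above, except the language names, which are placeholders for the 8 shipped languages, and the OS language dimension is left out for brevity. The "each value at least once" selection is just one simple way to cover every option, not necessarily how our team picks its runs.

```python
from itertools import product

# Config dimensions from the prose. The language names are placeholders
# standing in for the 8 shipped languages.
DIMENSIONS = {
    "product": ["VSTT", "VSTS"],
    "language": [f"lang{i}" for i in range(1, 9)],
    "os": ["XP", "Vista", "Windows 2003", "Windows 2008"],
    "user": ["Admin", "normal user"],
    "sql": ["SQL 2005", "SQL 2008"],
}


def full_matrix(dimensions):
    """Every combination of every value: each dimension is a multiplier."""
    names = list(dimensions)
    return [dict(zip(names, combo)) for combo in product(*dimensions.values())]


def each_value_once(dimensions):
    """A small set of runs in which every value appears at least once.

    Run i uses the i-th value of each dimension, wrapping around for
    dimensions that have fewer values than the largest one.
    """
    runs = max(len(values) for values in dimensions.values())
    return [
        {name: values[i % len(values)] for name, values in dimensions.items()}
        for i in range(runs)
    ]


if __name__ == "__main__":
    print(len(full_matrix(DIMENSIONS)))      # 2 * 8 * 4 * 2 * 2 = 256
    print(len(each_value_once(DIMENSIONS)))  # 8, the size of the largest dimension
```

Covering each value once does not cover every pair of values, so interaction bugs between two dimensions can still slip through; it is a trade-off between run count and coverage.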

If you have a browser app, another example may be testing it with different browsers (although here you're likely interested in page layout type bugs, which are difficult to detect in automation).

Automation is no replacement for manual testing

Fundamentally automation is about running tests that used to work, or worked on one config, and then no longer work when re-run (except in the case of TDD of course, that's another topic :)).

You will definitely get the highest bug yields from manual testing, and manual testing allows testers to be creative in finding bugs. Automation is not creative at all; it typically just does the same thing over and over.

A typical cycle for testing is to make sure all features are tested manually first, then select which test cases to automate.

The Hidden Cost of Automation: Maintenance

In order to reliably detect regressions, your tests must be reliable and working. As the application changes, you will need to fix your tests to match the application. If you don't do this, your automation will turn into a smoldering pile that is really good for nothing. Take this example: let's say you've automated 100 tests for a given release. Then you do a second release, and as you reach feature complete and enter the stabilization phase, you re-run your tests and find that 75 of them are now failing. As you investigate, you find that the UI changed, which caused your scripts to break. So now you are left with fixing up your automation, which for UI tests is really no easy task; it often takes longer to fix an automated test case than it did to develop it in the first place.

I have seen, on more than one occasion, the effort to maintain a set of tests outweigh the effort to run the test cases manually. I've also seen test teams so wrapped up in building and maintaining automation that actual manual testing falls by the wayside, and as a result bugs make it out to customers or are found late in the cycle.

I can't say this enough: the downstream cost of automating a test is huge, particularly if automating at the UI layer. UI tests have the advantage that they are easy to create, get started on, and understand, but the disadvantage that they are significantly less reliable than unit tests (I prefer the term API tests) and more prone to break due to changes in the application. API tests have the huge advantage of failing with compile-time errors rather than run-time errors, and are easier to maintain.

Also, whereas unit tests are written by developers as they complete new features, you are likely best off holding off on automating at the UI layer until later in the cycle, once the changes in the UI settle out.
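One way to reason about this trade-off is a back-of-the-envelope cost comparison. The Python sketch below is only an illustration: the function names and all of the hour figures are made-up assumptions, and a real estimate should use numbers measured from running a few scenarios through a full lifecycle.

```python
def automated_hours(develop, fix_per_release, releases, runs, hours_per_run=0.0):
    """Total cost of automating one test: build it once, fix it every
    release the application changes under it, plus any per-run cost."""
    return develop + fix_per_release * releases + hours_per_run * runs


def manual_hours(hours_per_run, runs):
    """Total cost of running the same test by hand every time."""
    return hours_per_run * runs


def automation_pays_off(develop, fix_per_release, releases, runs, manual_per_run):
    return automated_hours(develop, fix_per_release, releases, runs) < manual_hours(
        manual_per_run, runs
    )


if __name__ == "__main__":
    # Assumed figures: 4 h to automate, 5 h of fix-up per release,
    # 2 releases, 0.5 h to run the test manually.
    print(automation_pays_off(4, 5, 2, runs=10, manual_per_run=0.5))   # False: 14 h vs 5 h
    print(automation_pays_off(4, 5, 2, runs=100, manual_per_run=0.5))  # True: 14 h vs 50 h
```

With these assumed numbers, a test that only runs a handful of times never earns back its maintenance cost, while one that runs on every build does.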

I love James Bach's classic paper Test Automation Snake Oil. It's almost 10 years old, but still applicable today.

Best Practices for Automation

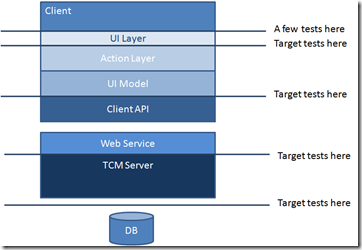

Keep the UI layer as thin as possible, and do as little automation at the UI layer as possible. Architect your application to allow testing at different layers via APIs, rather than driving all automation through the UI. This is referred to as proper architectural layering, where you have defined clear architectural layers in the software. Tests below the UI layer are developed as unit tests, so they have the advantages of lower cost of maintenance and less fragility.
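To make the layering idea concrete, here is a minimal Python sketch. `TestCaseService` is a hypothetical API layer invented for illustration (it is not the product's real API): the business rules live in it, a UI would only call into it, and the tests exercise it directly without driving any UI.

```python
class TestCaseService:
    """Hypothetical API layer: business rules live here, not in the UI."""

    def __init__(self):
        self._cases = {}

    def add(self, title, automated=False):
        """Store a test case and return its id; reject blank titles."""
        if not title.strip():
            raise ValueError("title must not be empty")
        case_id = len(self._cases) + 1
        self._cases[case_id] = {"title": title.strip(), "automated": automated}
        return case_id

    def automated_titles(self):
        """Titles of the cases that have been tagged for automation."""
        return [c["title"] for c in self._cases.values() if c["automated"]]


# API-level tests: they call the service directly, so a UI redesign
# cannot break them.
def test_add_rejects_blank_title():
    service = TestCaseService()
    try:
        service.add("   ")
    except ValueError:
        return
    raise AssertionError("blank title was accepted")


def test_automated_titles_only_lists_tagged_cases():
    service = TestCaseService()
    service.add("Login works", automated=True)
    service.add("Page layout looks right")
    assert service.automated_titles() == ["Login works"]
```

A thin UI on top of such a layer then needs only a few end-to-end tests to confirm that the wiring works.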

Here's the architecture of the test case management product we are developing, which enables this. We're currently focusing our test case development at the client API layer and action API layer, with a few UI tests for end-to-end testing.

Ensure developers and testers work together.

- Developers should develop unit tests for the layers they are developing as they go. Testers should leverage developer unit tests for config testing. For example, if your application supports different server configurations, and you have dev unit tests that test the server, testers should run these tests against the different supported configs.

- Avoid duplication of tests. If developers have a rich set of business object unit tests to test the business logic, these tests likely do not all need to be repeated at the UI layer. For the products we are developing, our test team develops the test plan and test cases, we tag which ones we want to automate, and both developers and testers work off this list.

Getting started with VS 2008

You can start now with automation using VS 2008 unit tests to target the layers in your software, and web tests to test your server at the HTTP layer. Web tests largely fall into the same boat as UI automation tests for maintenance and development cost. They were primarily developed for load testing, but can also be used for functional testing of the server. Since they drive the application at the HTTP layer rather than the UI layer, they are harder to work with than UI tests.

You can also automate your builds using TeamBuild, and configure BVT unit tests to run as part of the build to ensure the build quality. Test results are published to TFS, so the entire team can see the results of the tests and quality of the build.

There are several different test partners offering UI automation solutions for VS, including Automated QA and ArtOfTest.

We are also working on UI automation technology for our next release, as well as TFS server-based test case management and a manual test runner.

Ed.

Comments

Anonymous

November 06, 2009

One of the techniques for reducing the maintenance cost of automated test cases is to build on a framework, such as a keyword-driven framework. The question is, do you think it would be possible to implement one in VSTS 2010? Has MSFT considered this?