Azure SQLDB and SQL Server VM - How to make an equal cost comparison

Azure SQLDB is one of my favorite technologies and I often have to talk and work with my partners around features, performances, scaling, high-availability and disaster recovery. A key part of the deal is related to the cost, sooner or later in the preliminary discussion, I always expect comments like these:

- “Azure SQLDB is nice, we like the concept of Database-as-a-Service, but it’s too costly and not competitive with a SQL Server VM”

- “Azure SQLDB Standard/Premium tiers are amazing but too expensive compared to Web/Business tiers”

If you want to be able to articulate a detailed and exhaustive answer and then enter this kind of discussion, I would recommend you to continue reading this post. The most common mistake I have seen in my personal experience, and talking with colleagues in Microsoft, is that many customers do not fully consider what is the value of what they effectively get. In order to establish a common context for Azure SQLDB, I’m going to talk about the new Basic/standard/Premium service tiers, the “old” Web/Business editions,

and V12 more recent version as mentioned in the articles below:

SQL Database Pricing

http://azure.microsoft.com/en-us/pricing/details/sql-database

What I want to share here with you is a set of considerations, based on my personal experience, that I hope will permit you to correctly evaluate the cost, and even more important the value, that each solution brings to you and then finally make a realistic comparison. This is not intended to be a functional comparison between Azure SQLDB and SQL Server in Azure VM, there is already a great article and related content you can review using the link below:

Understanding Azure SQL Database and SQL Server in Azure VMs

Performance predictability

Here the context for the comparison is between the “old” Web/Business tiers and the newest Basic/Standard/Premium tiers we recently introduced in Azure SQLDB. Sometimes, customers and partners that used in the past Web and Business editions/tiers, consider new Basic/Standard/Premium more expensive while giving them the same performances, but there is a very important aspect to consider. While in Web/Business tiers there is no performance target estimation (SLO), in the new Basic/Standard/Premium tiers you have the choice to adopt a service plan that will provide you much more consistent performances in terms of a synthetic measure called DTU (Database Throughput Unit). As you can see in the table below, you can buy an Azure SQLDB service plan that will fit your resource/transaction requirements in a more predictable way:

Azure SQL Database Service Tiers and Performance Levels

https://msdn.microsoft.com/en-us/library/azure/dn741336.aspx

It is worth noting that you can dynamically adjust up and down your service tier and performance level, based on your application temporal requirements, thus optimizing the cost of your solution. Almost any customer give very high value to the possibility to have predictable performance levels, since Web/Business tiers do not give you any estimation on that, you need to include this in your cost evaluation plan. Please note that Web/Business tiers are deprecated and will be retired next September 2015, for more details you can read at the link below:

Web and Business Edition Sunset FAQ

https://msdn.microsoft.com/en-us/library/azure/dn741330.aspx

Regarding SQL Server in a VM scenario, if you want guaranteed/reserved resources to ensure your database/application performances will be predictable, you are already in a good situation: in Azure, when you buy a VM you have reserved cores and memory, no overcommit here, but you need to consider the storage part. If you want guaranteed/reserved resources (at a certain degree) for Azure Blob storage hosting your VM disks, you will need to purchase the Premium version (currently in preview), then an additional (even not big) cost you will need to consider.

Comparing cost based on Database size

A pivotal change in the new Basic/Standard/Premium editions of Azure SQLDB is that Microsoft moved away from pure billing based on database size to new criteria based on features and performance levels: in order to facilitate your understanding, I reported below the main characteristics of the new tiers:

With the legacy Web/Business editions, cost was only based on database size, then if you want to compare the cost of 50GB database, for example, with Basic/Standard/Premium, the former is less expensive than the latter. However, be careful, you are not comparing apples to apples! As a former DBA for long time, I give extreme value to high-availability, disaster-recovery, business continuity, security and compliance, and many other features that are not available in the Web/Business editions. If you want to

make an equal comparison, you need to give a value to all these additional features and then include in your cost plan evaluation.

SQL Database Web and Business pricing (to be retired)

http://azure.microsoft.com/en-us/pricing/details/sql-database/web-business

Then, if you want to maximize your investment in, for example, Premium Edition and reduce per GB cost, then you need to maximize the density of your data and utilize almost all the available space up to the tier maximum database size of 500GB. Additionally, you want/need to leverage all the great features that Premium can give you, if you are only using it as storage space, cost comparison with Web/Business is odd. Working with partners, you may find a scenario where cost of the new tiers may be problematic: imagine that you have to manage hundreds/thousands of tenants/users and your application requires one 50 GB (for example) database per single tenant. What would be the cost on using hundreds/thousands of Standard tier databases without the possibility to fill in more data and maximize the density? The good news is that Azure SQLDB team is planning to solve this situation in the next future and make cost affordable for big database “fleets”, then stay tuned! Regarding SQL Server in a VM, almost all the advanced features I just mentioned above are available, but remember that since you are in the IaaS world, you need to setup, configure, manage and monitor all of them.

Upgrade availability

Microsoft commitment to the cloud is reflected in many aspects, but what is interesting here is the development strategy of new SQL Server and Azure SQLDB versions (and related features): code development first happen for the cloud and then reverse engineered to be included in the box product. This means that you will have access to new SQL features *first* in Azure SQLDB, then if you want to install SQL Server in a VM, or on-premise, you will need to wait for the next version. Additionally, in Azure SQLDB you don’t have to install a new version, neither you have to plan for upgrade downtime: in Azure SQLDB it will happen magically, the Product Group will release the feature and on Day-1 you can use. To give you a practical example, consider a new recent feature that has been introduced (actually in preview) in Azure SQLDB V12, that is “Row Level Security” (RLS):

Row-Level Security (Azure SQL Database)

https://msdn.microsoft.com/library/dn765131.aspx

This feature, that will be released soon, it’s the first example of a new technology that will be available in Azure SQLDB *first*, then for SQL Server box product but only in the next version that will be released in the next future. But wait, there is more than that: did you hear about a new Azure SQLDB V12 feature called “Dynamic Data Masking”? You can read the technical details using the link below, what is important here for this discussion context is that it will be only available in Azure SQLDB, not in SQL Server box product. Again, this is something that you should include in your cost evaluation and quote for a monetary value when comparing Azure SQLDB database-as-a-service and SQL Server in a VM.

Limit the exposure of sensitive data in Azure SQL Database using Dynamic Data Masking

High Availability

Here the context for the comparison is between SQL Server in a VM and Azure SQLDB in general. This is my favorite discussion point when comparing cost since the common mistake is to compare a single SQL VM with a single Azure SQLDB database. When you create a database in Azure SQLDB, what you get by default is an highly-available configuration composed by three local synchronous replicas (zero data loss): using the new service tiers the availability SLA is 99,99% with no user intervention required for

configuration.

If you want to achieve a similar availability level (99,95% Azure 2 VMs SLA) with SQL Server VMs, you need to use two SQL Server VMs deployed in the same Cloud Service, in the same Availability Set and you need to manually configure AlwaysOn Availability Group (AG) with synchronous replication. It is all here? No, you need more VMs since AG requires Active Directory, then at least two as Domain Controllers, to ensure high-availability for this role as well, and you need also another one small VM as the Cluster witness. Now you can see that you need several VMs, typically at least five, to provide equal high-availability SLA and make an equal meaningful comparison. If you want to have an idea of the complexity, you can give a look to my blog post below, please only consider the left side of the picture since here I only considered local high-availability (same Azure datacenter).

SQL Server 2014 High-Availability and Multi-Datacenter Disaster Recovery with Multiple Azure ILBs

In addition to that, you need to consider the cost in managing an AG configuration: since it’s IaaS, you have to take care of monitoring, setting alerts, configure backups and monitor performances: all these aspects are simply in charge of Azure SQLDB service, then you don’t need to worry about.

Disaster Recovery

This is probably one of the biggest point to consider when talking about costs and comparison between SQL Server in a VM or using Azure SQLDB. Let’s first talk about local disaster recovery, that is what happens in a single datacenter when you lose for some reason one of your SQL replicas. Using SQL Server in a VM requires first of all that you will be able to detect this event, then you will need to eventually reinstall SQL Server in the VM, reestablish AG replication and test everything is ok. Remember that Azure will

monitor and eventually trigger self-recovery at the VM container level, what is inside the VM (in this case SQL Server) is in your responsibility. In Azure SQLDB you simply don’t care about this, the service infrastructure will monitor health of the 3 replicas and will eventually (and automatically) recover the faulty component without administrators/DBAs intervention. I hope you will agree that here there is an evident cost difference between the two solutions. Now let’s consider disaster recovery in a multi-datacenter scenario. In the previous diagram, I hope I gave you the idea of the cost and complexity to implement a geo-DR solution using AlwaysOn Availability Group (consider the right side of the picture). The number of additional VMs, e-gress network traffic and/or ExpressRoute connectivity solution, additional storage, management and monitoring are all elements that must be carefully considered. In Azure SQLDB, you can easily create in minutes a multi datacenter geo-DR configuration using an amazing user experience, up to 4 readable remote replicas across all Azure regions in the world with RPO = 5 seconds (Premium tier)!

Azure SQL Database Business Continuity

https://msdn.microsoft.com/en-us/library/azure/hh852669.aspx

Be careful, you are going to pay for the additional instances/databases, but you can easily do you maths and compare the costs on implementing a similar mechanism with Azure VMs. If you want to compare with Web/Business editions, you need to remember that essentially you had only two options here: Azure SQLDB "Database Copy" and the ”Import and Export" service to dump out a consistent copy of your database on Azure Blob storage. Compared to these, new Azure SQLDB Standard and Active geo-replication mechanisms, available in the new service tiers, are much more powerful and versatile and, most important, they all comes with precise RPO and RTO as you can see below:

Finally, let’s talk about database backups. Since the initial Web/Business tiers, Azure SQLDB always took care of your data and performed backups to save your data, but never exposed self-service restore: if you wanted to restore one of your databases, you had to open a case to Microsoft Support and ask for restore. In Azure SQLDB new service tiers, you finally have the possibility to trigger database restore yourself. Additionally, the cost to store all your backup sets, based on retention policy associated to each service tier, is included in the base cost, you are not going to pay more for storage. Keep also in mind that backup sets are geo-replicated to protect from datacenter loss, and you can restore in any geo location you want. One nice additional benefit here is the possibility to recover an accidentally deleted database that will be retained based on service tier retention period. You can easily guess that if you drop a database in SQL Server, that data is gone with no possibility to recovery, except by restore of a previous backup. Regarding SQL Server in Azure VMs, Microsoft has introduced (SQL Server 2014) the possibility to have automatically managed database backups to Azure Blob storage, but in this case the price to store your backups is *not* included and must be considered as additional cost for the purpose of comparison.

Azure SQL Database Point in Time Restore

http://azure.microsoft.com/blog/2014/10/01/azure-sql-database-point-in-time-restore

Compliance

Did you consider the cost of being compliant for your SQL Server in a VM or Azure SQLDB solution? Being compliant with security and privacy standards is becoming more stringent and important for customers and partners, especially when operating solutions in the Cloud. SQL Server box product, along with Windows Server OS when installed in an Azure VM, provides many features and capabilities able to achieve your needs in this area, but there is a key aspect to consider. Many of these standards require specific

configurations, setup hardening, audit logic, certified and documented management processes related to the way you manage and operate your environment. As you can easily understand, this represent an additional cost that may greatly varies based on the complexity of the specific compliance level and privacy standard you want to enforce. Since you are in the IaaS world, ensuring that your solution is “compliant” is essentially in your hands, it is your responsibility to use SQL and Windows in a way that adheres to what is dictated by such standards. When you use Azure SQLDB, an important part of this effort is in charge of Microsoft: Azure SQLDB already obtained many certifications last year, as you can see in the table below, and more will come in the near future. In some cases, being fully compliant still require you, as Azure SQLDB users, to enable and use some features, but the effort is greatly reduced compare to the IaaS usage. Acquiring a certification is only part of the work, in order to retain this achievement periodical checks and renewal are required: Microsoft will take care of this, along with the way we operate Azure SQLDB as a service. You can find latest information on compliance, security and privacy standards using the link below:

Microsoft Azure Trust Center

http://azure.microsoft.com/en-us/support/trust-center

Scale Out

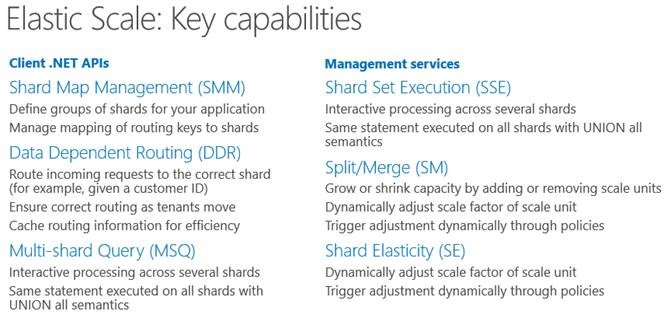

Scaling vertically up and down is pretty easy with SQL Server box product with new bigger VM SKUs release, and now it’s easy as well in Azure SQLDB with new service tiers. But what happen if you need to scale out to avoid resource limitations of a single SQL Server VM or Azure SQLDB instance/database? Using VMs this would require a pretty complex and ad-hoc application re-architecting generally based on data-dependent routing (DDR) strategy. Generally the cost of designing, implementing and managing this

approach is pretty high. In the Azure SQLDB world something new has been recently announced under the name of “Elastic Scale":

Introducing Elastic Scale preview for Azure SQL Database

http://azure.microsoft.com/blog/2014/10/02/introducing-elastic-scale-preview-for-azure-sql-database

“Elastic Scale” is a framework of components including .NET client libraries and Azure cloud service packages providing the ability to easily develop, scale, and manage the stateful data tiers of your SQL Server applications. This comes to you free of charge and ready to be used, you don’t pay for this technology, expect for the usage of additional Azure SQLDB databases, that you would need anyway in any multi-shard solution, and few Azure compute resources. If your application requires a multi-sharding solution for scaling out, this is really a huge value and cost saving factor that I recommend you to consider.

SQL Server Licensing

For Azure SQLDB, obviously, there is no licensing cost, everything is included in the tier you buy. When you deploy SQL Server in a VM, there are two ways you can pay: using one of the SQL Server images included in the Azure VM template Gallery (billing per minute), or installing your own already licensed image (BYOL using the license mobility benefits under Software Assurance). In the former case, the cost of the VM also includes SQL Server licensing, then quoted implicitly. But in the latter case, if you bring into

the VM your own SQL Server license, you need to consider this cost and add to the overall cost of your IaaS solution. If you want to deploy a highly available solution with Azure VMs, you will have to have a license also for the passive VM/SQL instance. For more information on SQL Server licensing in this context you can check the FAQ below:

Virtual Machines Licensing FAQ

http://azure.microsoft.com/en-us/pricing/licensing-faq

From the link above, it is important to note that SQL Server licensing is per core. There is also a minimum of four cores to be licensed, if you are using license mobility benefit for installations inside an Azure VM.

Cold/Offline Database & Downsizing

I have encountered a quite unusual scenario, only one time to be honest, I want to mention for your awareness anyway. What happens if you have a SQL database that need to be online only periodically and only for a small amount of time? Imagine a situation where you have an archival database, 400GB constant size that need to receive data once a week, and then only used for reporting only at the end of each month. If you use SQL Server inside a VM, you can easily start it weekly for the time of data loading and reporting processing, then you can shut down (with de-allocation) for the remaining time, or reduce the number of cores used and keep the VM running. This is a nice example of Cloud elasticity. In Azure SQLDB you cannot shut down a database and stop paying, what you can do to reduce cost is try to lower the service level. Unfortunately, if you follow my numerical example here, for a 400GB sized database you need Premium tier, then your downgrading options are limited inside the Premium tier family (P1, P2, P3). In theory, there is an option in Azure SQLDB to “shutdown” a database and stop paying for it: drop it and restore when you need!

To be honest with you, as a former DBA I’m a bit worried to drop/delete a database, even if Azure SQLDB guarantees that you will be able to recover up to a certain amount of days, I will leave further considerations to you. Be aware that the retention period is determined by the service tier of the database while it existed or the number of days where the database exists, whichever is less.

That’s all I wanted to share with you, hope you have found this content useful. If you want to see and discuss additional points regarding cost comparison between SQL Server in a VM, Azure SQL DB Web/Business and Basic/Standard/Premium, please leave a comment here and I will try to answer you and eventually incorporate new content in this blog post. You can follow me on Twitter ( @igorpag). Regards.

Comments

Anonymous

March 23, 2015

The comment has been removedAnonymous

March 23, 2015

The comment has been removedAnonymous

March 23, 2015

Sorry, my answer was not complete. Retention for more than 35 days can be achieved creating a DB COPY and then use Azure SQLDB export service to trigger an offline backup in Azure blob storage. regards.Anonymous

March 23, 2015

Igor - thanks for the 2 answers , it seems silly / redundant to have to do a separate SQLDB export and then Export , when there is already a backup sitting somewhere on disk . Not really manageable if you have a large amount of databases and I assume doing the DB Copy will incur extra cost ?Anonymous

March 24, 2015

Andy, the DB COPY is necessary only to have a transactionally consistent export of the database. You can in theory run the export without it, but may be it's now what you want. Yes, using this method you will be charged for the extra database created. We have plans to improve in the near future. Regards.Anonymous

June 26, 2017

The comment has been removed